1 | Mathematics and Physics Have the Same Foundations

One of the many surprising (and to me, unexpected) implications of our Physics Project is its suggestion of a very deep correspondence between the foundations of physics and mathematics. We might have imagined that physics would have certain laws, and mathematics would have certain theories, and that while they might be historically related, there wouldn’t be any fundamental formal correspondence between them.

But what our Physics Project suggests is that underneath everything we physically experience there is a single very general abstract structure—that we call the ruliad—and that our physical laws arise in an inexorable way from the particular samples we take of this structure. We can think of the ruliad as the entangled limit of all possible computations—or in effect a representation of all possible formal processes. And this then leads us to the idea that perhaps the ruliad might underlie not only physics but also mathematics—and that everything in mathematics, like everything in physics, might just be the result of sampling the ruliad.

Of course, mathematics as it’s normally practiced doesn’t look the same as physics. But the idea is that they can both be seen as views of the same underlying structure. What makes them different is that physical and mathematical observers sample this structure in somewhat different ways. But since in the end both kinds of observers are associated with human experience they inevitably have certain core characteristics in common. And the result is that there should be “fundamental laws of mathematics” that in some sense mirror the perceived laws of physics that we derive from our physical observation of the ruliad.

So what might those fundamental laws of mathematics be like? And how might they inform our conception of the foundations of mathematics, and our view of what mathematics really is?

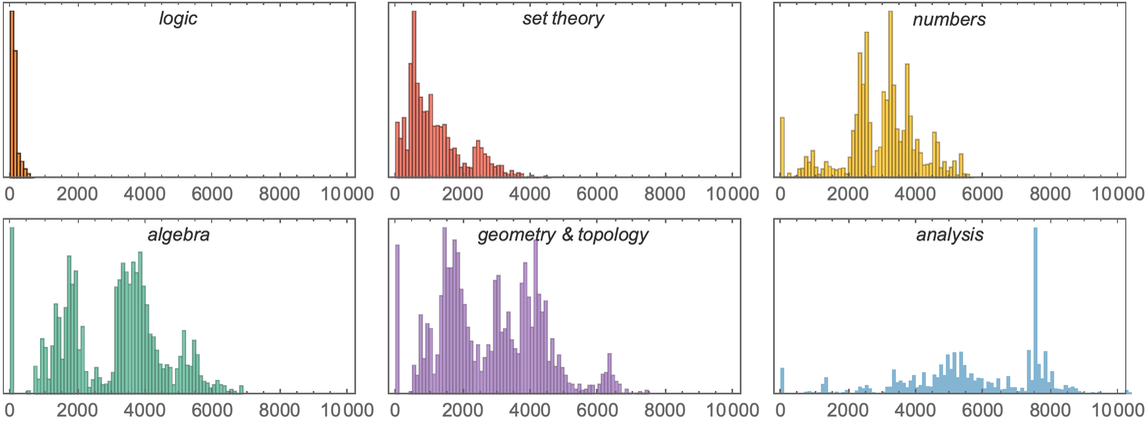

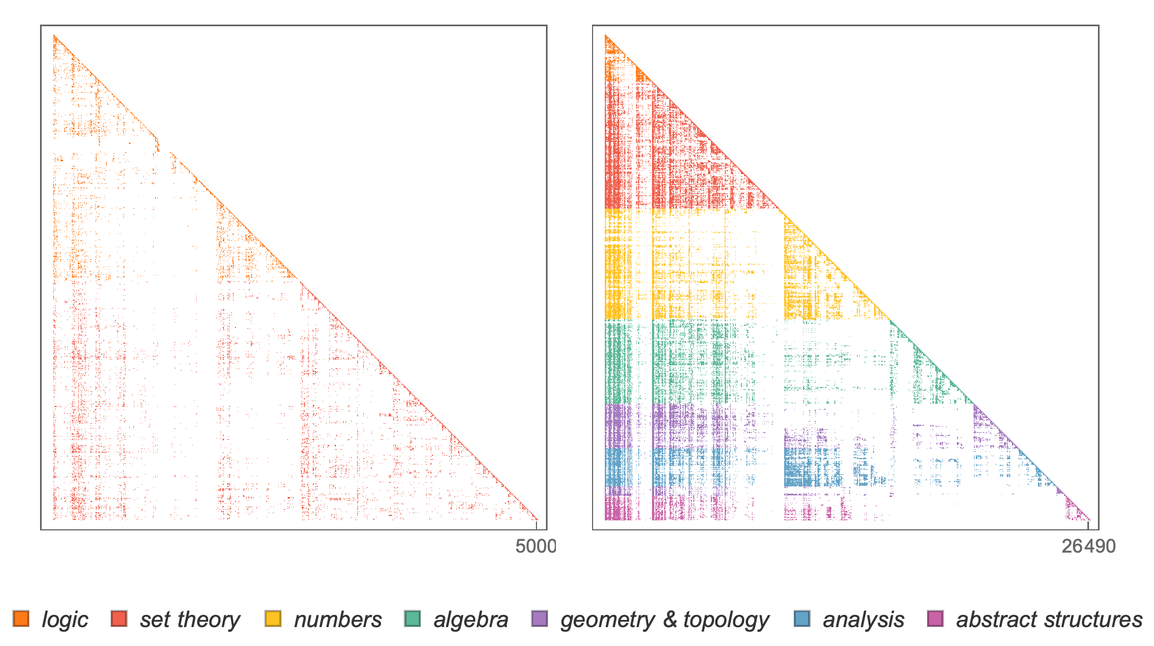

The most obvious manifestation of the mathematics that we humans have developed over the course of many centuries is the few million mathematical theorems that have been published in the literature of mathematics. But what can be said in generality about this thing we call mathematics? Is there some notion of what mathematics is like “in bulk”? And what might we be able to say, for example, about the structure of mathematics in the limit of infinite future development?

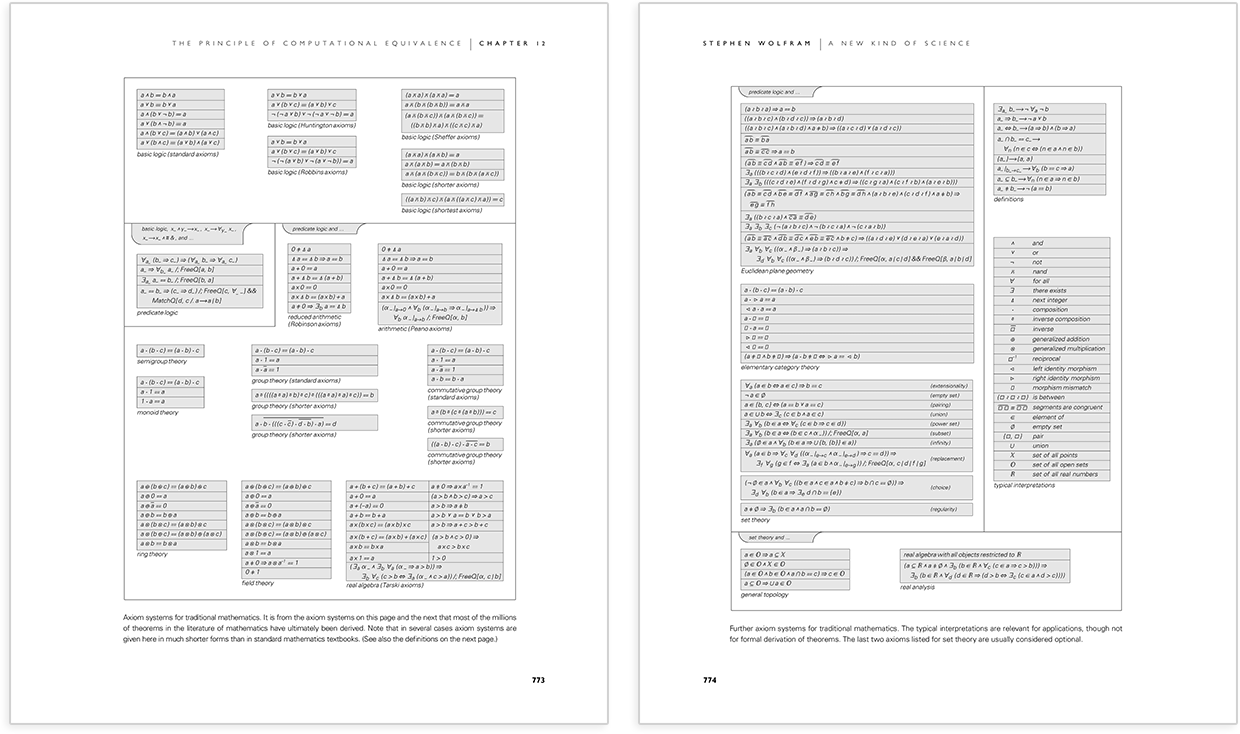

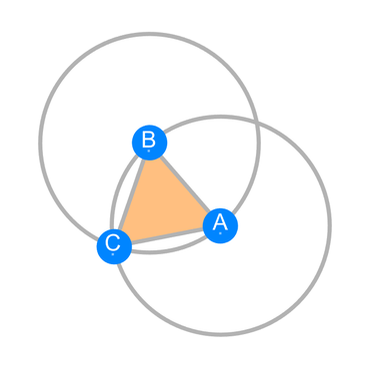

When we do physics, the traditional approach has been to start from our basic sensory experience of the physical world, and of concepts like space, time and motion—and then to try to formalize our descriptions of these things, and build on these formalizations. And in its early development—for example by Euclid—mathematics took the same basic approach. But beginning a little more than a century ago there emerged the idea that one could build mathematics purely from formal axioms, without necessarily any reference to what is accessible to sensory experience.

And in a way our Physics Project begins from a similar place. Because at the outset it just considers purely abstract structures and abstract rules—typically described in terms of hypergraph rewriting—and then tries to deduce their consequences. Many of these consequences are incredibly complicated, and full of computational irreducibility. But the remarkable discovery is that when sampled by observers with certain general characteristics that make them like us, the behavior that emerges must generically have regularities that we can recognize, and in fact must follow exactly known core laws of physics.

And already this begins to suggest a new perspective to apply to the foundations of mathematics. But there’s another piece, and that’s the idea of the ruliad. We might have supposed that our universe is based on some particular chosen underlying rule, like an axiom system we might choose in mathematics. But the concept of the ruliad is in effect to represent the entangled result of “running all possible rules”. And the key point is then that it turns out that an “observer like us” sampling the ruliad must perceive behavior that corresponds to known laws of physics. In other words, without “making any choice” it’s inevitable—given what we’re like as observers—that our “experience of the ruliad” will show fundamental laws of physics.

But now we can make a bridge to mathematics. Because in embodying all possible computational processes the ruliad also necessarily embodies the consequences of all possible axiom systems. As humans doing physics we’re effectively taking a certain sampling of the ruliad. And we realize that as humans doing mathematics we’re also doing essentially the same kind of thing.

But will we see “general laws of mathematics” in the same kind of way that we see “general laws of physics”? It depends on what we’re like as “mathematical observers”. In physics, there turn out to be general laws, and concepts like space and motion, that we humans can assimilate. In the abstract, nothing similar need be true in mathematics. But it seems that what mathematicians typically call mathematics is something for which it is true, and where (usually in the end leveraging our experience of physics) it’s possible to successfully carve out a sampling of the ruliad that’s again one we humans can assimilate.

When we think about physics we have the idea that there’s an actual physical reality that exists—and that we experience physics within this. But in the formal axiomatic view of mathematics, things are different. There’s no obvious “underlying reality” there; instead there’s just a certain choice we make of axiom system. But now, with the concept of the ruliad, the story is different. Because now we have the idea that “deep underneath” both physics and mathematics there’s the same thing: the ruliad. And that means that insofar as physics is “grounded in reality”, so also must mathematics be.

When most working mathematicians do mathematics it seems to be typical for them to reason as if the constructs they’re dealing with (whether they be numbers or sets or whatever) are “real things”. But usually there’s a concept that in principle one could “drill down” and formalize everything in terms of some axiom system. And indeed if one wants to get a global view of mathematics and its structure as it is today, it seems as if the best approach is to work from the formalization that’s been done with axiom systems.

In starting from the ruliad and the ideas of our Physics Project we’re in effect positing a certain “theory of mathematics”. And to validate this theory we need to study the “phenomena of mathematics”. And, yes, we could do this in effect by directly “reading the whole literature of mathematics”. But it’s more efficient to start from what’s in a sense the “current prevailing underlying theory of mathematics” and to begin by building on the methods of formalized mathematics and axiom systems.

Over the past century a certain amount of metamathematics has been done by looking at the general properties of these methods. But most often when the methods are systematically used today, it’s to set up some particular mathematical derivation, normally with the aid of a computer. But here what we want to do is think about what happens if the methods are used “in bulk”. Underneath there may be all sorts of specific detailed formal derivations being done. But somehow what emerges from this is something higher level, something “more human”—and ultimately something that corresponds to our experience of pure mathematics.

How might this work? We can get an idea from an analogy in physics. Imagine we have a gas. Underneath, it consists of zillions of molecules bouncing around in detailed and complicated patterns. But most of our “human” experience of the gas is at a much more coarse-grained level—where we perceive not the detailed motions of individual molecules, but instead continuum fluid mechanics.

And so it is, I think, with mathematics. All those detailed formal derivations—for example of the kind automated theorem proving might do—are like molecular dynamics. But most of our “human experience of mathematics”—where we talk about concepts like integers or morphisms—is like fluid dynamics. The molecular dynamics is what builds up the fluid, but for most questions of “human interest” it’s possible to “reason at the fluid dynamics level”, without dropping down to molecular dynamics.

It’s certainly not obvious that this would be possible. It could be that one might start off describing things at a “fluid dynamics” level—say in the case of an actual fluid talking about the motion of vortices—but that everything would quickly get “shredded”, and that there’d soon be nothing like a vortex to be seen, only elaborate patterns of detailed microscopic molecular motions. And similarly in mathematics one might imagine that one would be able to prove theorems in terms of things like real numbers but actually find that everything gets “shredded” to the point where one has to start talking about elaborate issues of mathematical logic and different possible axiomatic foundations.

But in physics we effectively have the Second Law of thermodynamics—which we now understand in terms of computational irreducibility—that tells us that there’s a robust sense in which the microscopic details are systematically “washed out” so that things like fluid dynamics “work”. Just sometimes—like in studying Brownian motion, or hypersonic flow—the molecular dynamics level still “shines through”. But for most “human purposes” we can describe fluids just using ordinary fluid dynamics.

So what’s the analog of this in mathematics? Presumably it’s that there’s some kind of “general law of mathematics” that explains why one can so often do mathematics “purely in the large”. Just like in fluid mechanics there can be “corner-case” questions that probe down to the “molecular scale”—and indeed that’s where we can expect to see things like undecidability, as a rough analog of situations where we end up tracing the potentially infinite paths of single molecules rather than just looking at “overall fluid effects”. But somehow in most cases there’s some much stronger phenomenon at work—that effectively aggregates low-level details to allow the kind of “bulk description” that ends up being the essence of what we normally in practice call mathematics.

But is such a phenomenon something formally inevitable, or does it somehow depend on us humans “being in the loop”? In the case of the Second Law it’s crucial that we only get to track coarse-grained features of a gas—as we humans with our current technology typically do. Because if instead we watched and decoded what every individual molecule does, we wouldn’t end up identifying anything like the usual bulk “Second-Law” behavior. In other words, the emergence of the Second Law is in effect a direct consequence of the fact that it’s us humans—with our limitations on measurement and computation—who are observing the gas.

So is something similar happening with mathematics? At the underlying “molecular level” there’s a lot going on. But the way we humans think about things, we’re effectively taking just particular kinds of samples. And those samples turn out to give us “general laws of mathematics” that give us our usual experience of “human-level mathematics”.

To ultimately ground this we have to go down to the fully abstract level of the ruliad, but we’ll already see many core effects by looking at mathematics essentially just at a traditional “axiomatic level”, albeit “in bulk”.

The full story—and the full correspondence between physics and mathematics—requires in a sense “going below” the level at which we have recognizable formal axiomatic mathematical structures; it requires going to a level at which we’re just talking about making everything out of completely abstract elements, which in physics we might interpret as “atoms of space” and in mathematics as some kind of “symbolic raw material” below variables and operators and everything else familiar in traditional axiomatic mathematics.

The deep correspondence we’re describing between physics and mathematics might make one wonder to what extent the methods we use in physics can be applied to mathematics, and vice versa. In axiomatic mathematics the emphasis tends to be on looking at particular theorems and seeing how they can be knitted together with proofs. And one could certainly imagine an analogous “axiomatic physics” in which one does particular experiments, then sees how they can “deductively” be knitted together. But our impression that there’s an “actual reality” to physics makes us seek broader laws. And the correspondence between physics and mathematics implied by the ruliad now suggests that we should be doing this in mathematics as well.

What will we find? Some of it in essence just confirms impressions that working pure mathematicians already have. But it provides a definite framework for understanding these impressions and for seeing what their limits may be. It also lets us address questions like why undecidability is so comparatively rare in practical pure mathematics, and why it is so common to discover remarkable correspondences between apparently quite different areas of mathematics. And beyond that, it suggests a host of new questions and approaches both to mathematics and metamathematics—that help frame the foundations of the remarkable intellectual edifice that we call mathematics.

2 | The Underlying Structure of Mathematics and Physics

If we “drill down” to what we’ve called above the “molecular level” of mathematics, what will we find there? There are many technical details (some of which we’ll discuss later) about the historical conventions of mathematics and its presentation. But in broad outline we can think of there as being a kind of “gas” of “mathematical statements”—like 1 + 1 = 2 or x + y = y + x—represented in some specified symbolic language. (And, yes, Wolfram Language provides a well-developed example of what that language can be like.)

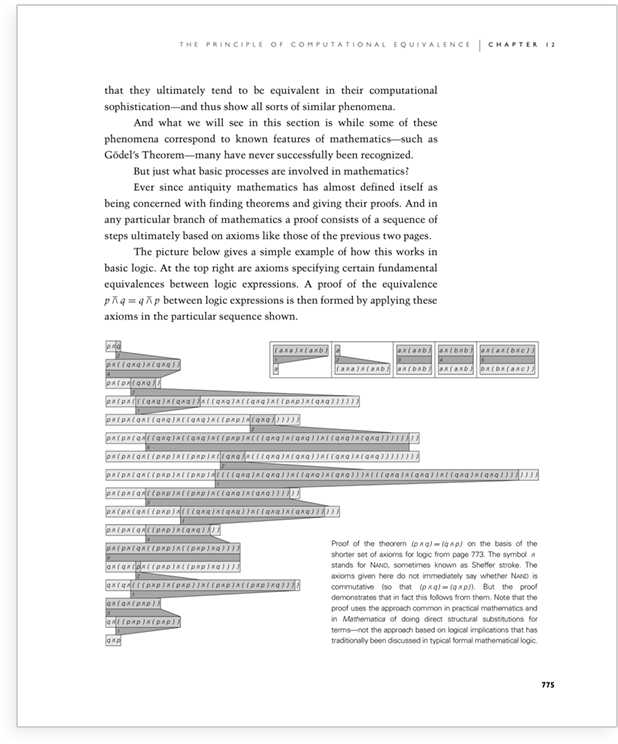

But how does the “gas of statements” behave? The essential point is that new statements are derived from existing ones by “interactions” that implement laws of inference (like that q can be derived from the statement p and the statement “p implies q”). And if we trace the paths by which one statement can be derived from others, these correspond to proofs. And the whole graph of all these derivations is then a representation of the possible historical development of mathematics—with slices through this graph corresponding to the sets of statements reached at a given stage.
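As a rough illustration of statements "interacting" through a law of inference, here is a minimal Python sketch of repeated modus ponens. The function names (`derive`, `proof_of`) and the encoding of "p implies q" as a pair `(p, q)` are assumptions made for this example, not anything defined in the text.

```python
def derive(statements, implications, max_rounds=10):
    """Repeatedly apply modus ponens: from p and (p implies q), derive q.

    `implications` is a list of (p, q) pairs, each read as "p implies q".
    Returns the set of known statements and, for each derived statement,
    the premise it was derived from (enough to reconstruct a proof path).
    """
    known = set(statements)
    provenance = {}  # derived statement -> premise p that produced it
    for _ in range(max_rounds):
        new = {}
        for p, q in implications:
            if p in known and q not in known and q not in new:
                new[q] = p
        if not new:
            break  # nothing further can be derived
        known.update(new)
        provenance.update(new)
    return known, provenance


def proof_of(statement, provenance):
    """Trace back from a derived statement to the statement it started from."""
    chain = [statement]
    while chain[-1] in provenance:
        chain.append(provenance[chain[-1]])
    return chain[::-1]


known, provenance = derive({"A"}, [("A", "B"), ("B", "C"), ("D", "E")])
print(sorted(known))
print(proof_of("C", provenance))
```

Each round of the loop plays the role of one "slice" through the graph of derivations, and the chain returned by `proof_of` is one path in that graph.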

By talking about things like a “gas of statements” we’re making this sound a bit like physics. But while in physics a gas consists of actual, physical molecules, in mathematics our statements are just abstract things. But this is where the discoveries of our Physics Project start to be important. Because in our project we’re “drilling down” beneath for example the usual notions of space and time to an “ultimate machine code” for the physical universe. And we can think of that ultimate machine code as operating on things that are in effect just abstract constructs—very much like in mathematics.

In particular, we imagine that space and everything in it is made up of a giant network (hypergraph) of “atoms of space”—with each “atom of space” just being an abstract element that has certain relations with other elements. The evolution of the universe in time then corresponds to the application of computational rules that (much like laws of inference) take abstract relations and yield new relations—thereby progressively updating the network that represents space and everything in it.
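To make the idea of rules that "take abstract relations and yield new relations" concrete, here is a small Python sketch of hypergraph-style rewriting. The particular rule (every relation `{x, y}` becomes `{x, y}, {y, z}` with `z` a fresh element) and the choice to update every relation in each step are assumptions picked purely for illustration; they are not claimed to be the rule or updating scheme of the Physics Project.

```python
from itertools import count


def step(edges, fresh):
    """Apply the toy rule {x, y} -> {x, y}, {y, z} to every relation once.

    `fresh` is an iterator that supplies a brand-new element z for each
    application of the rule.
    """
    out = []
    for x, y in edges:
        z = next(fresh)
        out.append((x, y))
        out.append((y, z))
    return out


def evolve(initial, steps):
    """Return the list of successive states, starting from `initial`."""
    fresh = count(max(max(e) for e in initial) + 1)
    state = list(initial)
    history = [state]
    for _ in range(steps):
        state = step(state, fresh)
        history.append(state)
    return history


for state in evolve([(0, 0)], 3):
    print(len(state), state)
```

The elements here are just integers with no meaning beyond the relations they appear in, which is the sense in which an "atom of space" is purely abstract.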

But while the individual rules may be very simple, the whole detailed pattern of behavior to which they lead is normally very complicated—and typically shows computational irreducibility, so that there’s no way to systematically find its outcome except in effect by explicitly tracing each step. But despite all this underlying complexity it turns out—much like in the case of an ordinary gas—that at a coarse-grained level there are much simpler (“bulk”) laws of behavior that one can identify. And the remarkable thing is that these turn out to be exactly general relativity and quantum mechanics (which, yes, end up being the same theory when looked at in terms of an appropriate generalization of the notion of space).

But down at the lowest level, is there some specific computational rule that’s “running the universe”? I don’t think so. Instead, I think that in effect all possible rules are always being applied. And the result is the ruliad: the entangled structure associated with performing all possible computations.

But what then gives us our experience of the universe and of physics? Inevitably we are observers embedded within the ruliad, sampling only certain features of it. But what features we sample are determined by the characteristics of us as observers. And what seem to be critical to have “observers like us” are basically two characteristics. First, that we are computationally bounded. And second, that we somehow persistently maintain our coherence—in the sense that we can consistently identify what constitutes “us” even though the detailed atoms of space involved are continually changing.

But we can think of different “observers like us” as taking different specific samples, corresponding to different reference frames in rulial space, or just different positions in rulial space. These different observers may describe the universe as evolving according to different specific underlying rules. But the crucial point is that the general structure of the ruliad implies that so long as the observers are “like us”, it’s inevitable that their perception of the universe will be that it follows things like general relativity and quantum mechanics.

It’s very much like what happens with a gas of molecules: to an “observer like us” there are the same gas laws and the same laws of fluid dynamics essentially independent of the detailed structure of the individual molecules.

So what does all this mean for mathematics? The crucial and at first surprising point is that the ideas we’re describing in physics can in effect immediately be carried over to mathematics. And the key is that the ruliad represents not only all physics, but also all mathematics—and it shows that these are not just related, but in some sense fundamentally the same.

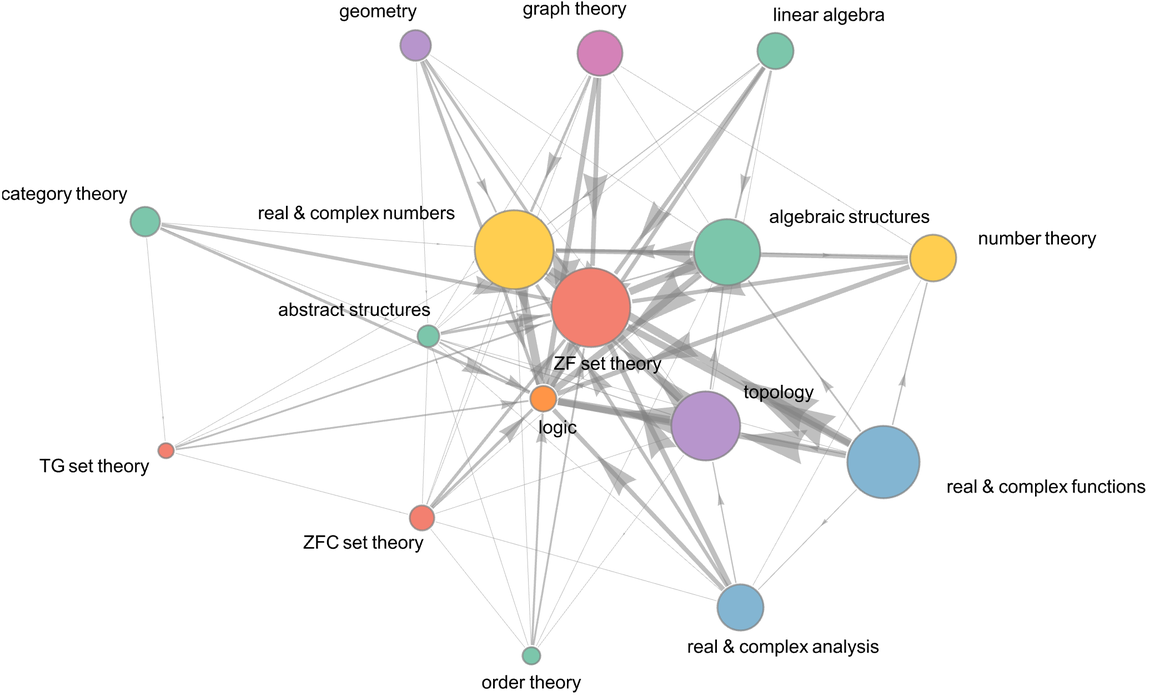

In the traditional formulation of axiomatic mathematics, one talks about deriving results from particular axiom systems—say Peano Arithmetic, or ZFC set theory, or the axioms of Euclidean geometry. But the ruliad in effect represents the entangled consequences not just of specific axiom systems but of all possible axiom systems (as well as all possible laws of inference).

But from this structure that in a sense corresponds to all possible mathematics, how do we pick out any particular mathematics that we’re interested in? The answer is that just as we are limited observers of the physical universe, so we are also limited observers of the “mathematical universe”.

But what are we like as “mathematical observers”? As I’ll argue in more detail later, we inherit our core characteristics from those we exhibit as “physical observers”. And that means that when we “do mathematics” we’re effectively sampling the ruliad in much the same way as when we “do physics”.

We can operate in different rulial reference frames, or at different locations in rulial space, and these will correspond to picking out different underlying “rules of mathematics”, or essentially using different axiom systems. But now we can make use of the correspondence with physics to say that we can also expect there to be certain “overall laws of mathematics” that are the result of general features of the ruliad as perceived by observers like us.

And indeed we can expect that in some formal sense these overall laws will have exactly the same structure as those in physics—so that in effect in mathematics we’ll have something like the notion of space that we have in physics, as well as formal analogs of things like general relativity and quantum mechanics.

What does this mean? It implies that, just as it’s possible to have coherent “higher-level descriptions” in physics that don’t just operate down at the level of atoms of space, so also this should be possible in mathematics. And this in a sense is why we can expect to consistently do what I described above as “human-level mathematics”, without usually having to drop down to the “molecular level” of specific axiomatic structures (or below).

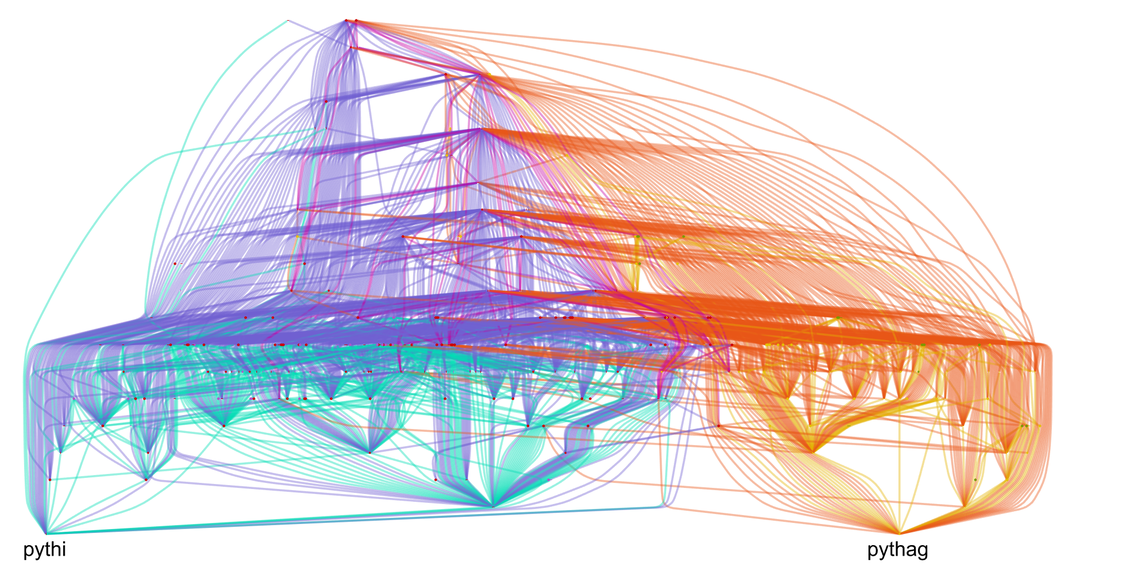

Say we’re talking about the Pythagorean theorem. Given some particular detailed axiom system for mathematics we can imagine using it to build up a precise—if potentially very long and pedantic—representation of the theorem. But let’s say we change some detail of our axioms, say associated with the way they talk about sets, or real numbers. We’ll almost certainly still be able to build up something we consider to be “the Pythagorean theorem”—even though the details of the representation will be different.

In other words, this thing that we as humans would call “the Pythagorean theorem” is not just a single point in the ruliad, but a whole cloud of points. And now the question is: what happens if we try to derive other results from the Pythagorean theorem? It might be that each particular representation of the theorem—corresponding to each point in the cloud—would lead to quite different results. But it could also be that essentially the whole cloud would coherently lead to the same results.

And the claim from the correspondence with physics is that there should be “general laws of mathematics” that apply to “observers like us” and that ensure that there’ll be coherence between all the different specific representations associated with the cloud that we identify as “the Pythagorean theorem”.

In physics it could have been that we’d always have to separately say what happens to every atom of space. But we know that there’s a coherent higher-level description of space—in which for example we can just imagine that objects can move while somehow maintaining their identity. And we can now expect that it’s the same kind of thing in mathematics: that just as there’s a coherent notion of space in physics where things can for example move without being “shredded”, so also this will happen in mathematics. And this is why it’s possible to do “higher-level mathematics” without always dropping down to the lowest level of axiomatic derivations.

It’s worth pointing out that even in physical space a concept like “pure motion” in which objects can move while maintaining their identity doesn’t always work. For example, close to a spacetime singularity, one can expect to eventually be forced to see through to the discrete structure of space—and for any “object” to inevitably be “shredded”. But most of the time it’s possible for observers like us to maintain the idea that there are coherent large-scale features whose behavior we can study using “bulk” laws of physics.

And we can expect the same kind of thing to happen with mathematics. Later on, we’ll discuss more specific correspondences between phenomena in physics and mathematics—and we’ll see the effects of things like general relativity and quantum mechanics in mathematics, or, more precisely, in metamathematics.

But for now, the key point is that we can think of mathematics as somehow being made of exactly the same stuff as physics: they’re both just features of the ruliad, as sampled by observers like us. And in what follows we’ll see the great power that arises from using this to combine the achievements and intuitions of physics and mathematics—and how this lets us think about new “general laws of mathematics”, and view the ultimate foundations of mathematics in a different light.

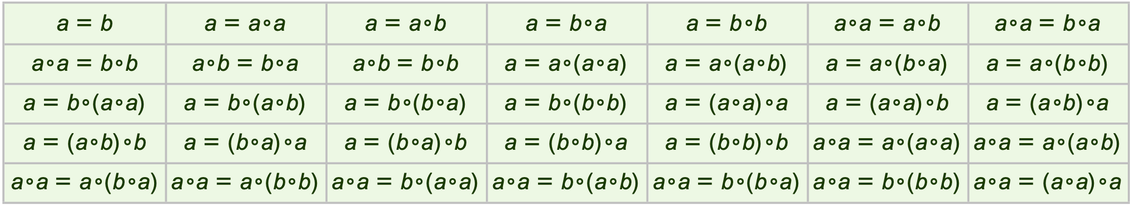

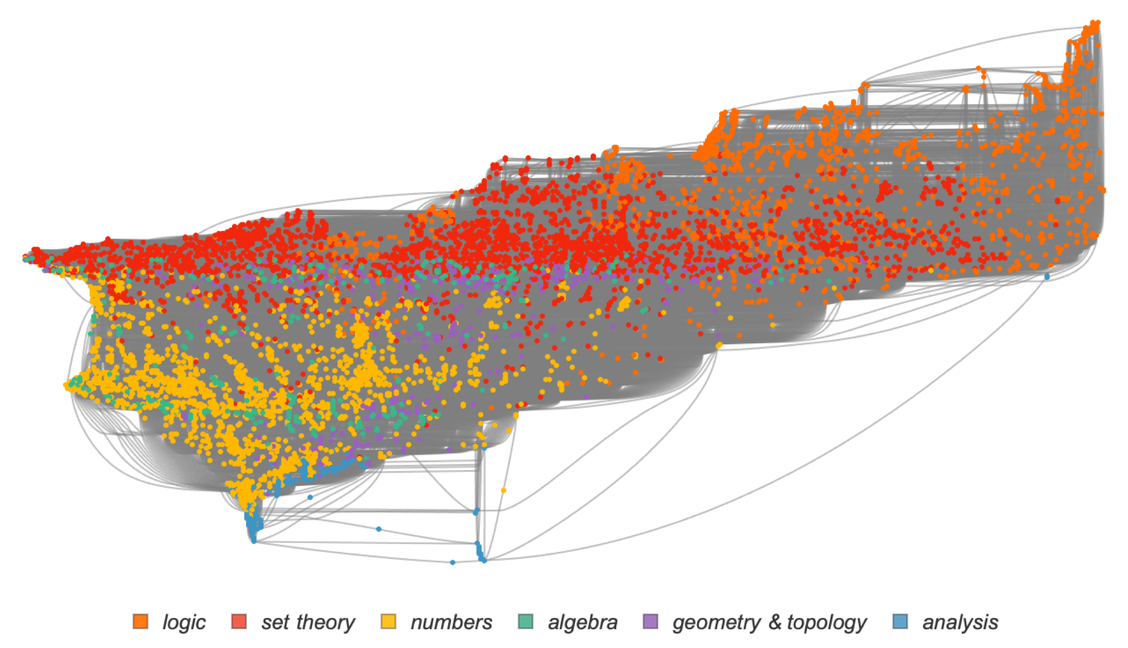

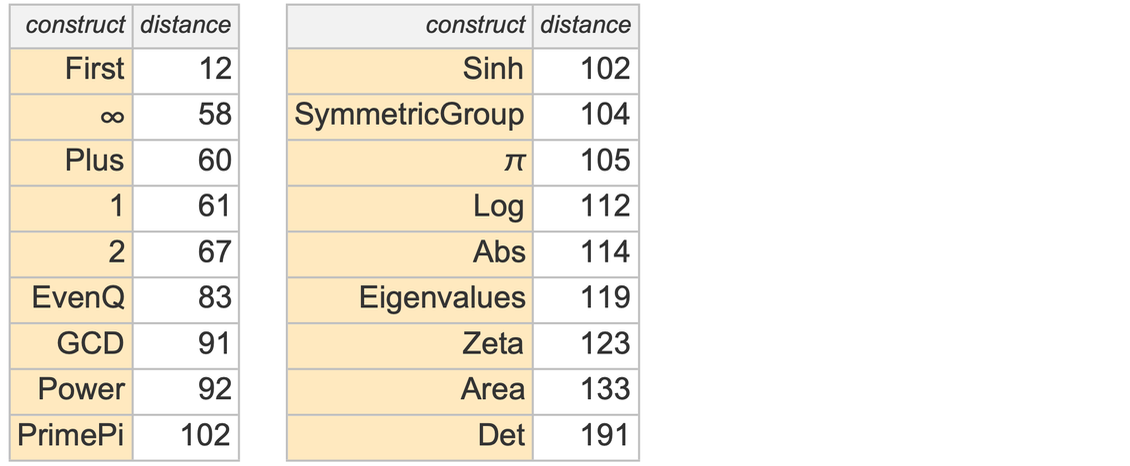

Consider all the mathematical statements that have appeared in mathematical books and papers. We can view these in some sense as the “observed phenomena” of (human) mathematics. And if we’re going to make a “general theory of mathematics” a first step is to do something like we’d typically do in natural science, and try to “drill down” to find a uniform underlying model—or at least representation—for all of them.

At the outset, it might not be clear what sort of representation could possibly capture all those different mathematical statements. But what’s emerged over the past century or so—with particular clarity in Mathematica and the Wolfram Language—is that there is in fact a rather simple and general representation that works remarkably well: a representation in which everything is a symbolic expression.

One can view a symbolic expression such as f[g[x][y, h[z]], w] as a hierarchical or tree structure, in which at every level some particular “head” (like f) is “applied to” one or more arguments. Often in practice one deals with expressions in which the heads have “known meanings”—as in Times[Plus[2, 3], 4] in Wolfram Language. And with this kind of setup symbolic expressions are reminiscent of human natural language, with the heads basically corresponding to “known words” in the language.

And presumably it’s this familiarity from human natural language that’s caused “human natural mathematics” to develop in a way that can so readily be represented by symbolic expressions.
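The tree view of symbolic expressions can be sketched in Python by modeling an expression as a nested tuple `(head, arg1, arg2, ...)`. This encoding, and the helper names `to_string` and `evaluate`, are assumptions for illustration; the sketch is not how Wolfram Language itself stores or evaluates expressions.

```python
import operator
from functools import reduce

# An expression is either an atom (a string or number) or a tuple
# (head, arg1, arg2, ...). The head may itself be an expression,
# as in g[x][y, h[z]].
expr = ("f", (("g", "x"), "y", ("h", "z")), "w")

# Heads with "known meanings" in this toy setting.
KNOWN_HEADS = {"Plus": operator.add, "Times": operator.mul}


def to_string(e):
    """Render an expression in head[arg, ...] notation."""
    if not isinstance(e, tuple):
        return str(e)
    head, *args = e
    return f"{to_string(head)}[{', '.join(to_string(a) for a in args)}]"


def evaluate(e):
    """Evaluate heads with known meanings; leave everything else symbolic."""
    if not isinstance(e, tuple):
        return e
    head, *args = e
    values = [evaluate(a) for a in args]
    if head in KNOWN_HEADS:
        return reduce(KNOWN_HEADS[head], values)
    return (head, *values)


print(to_string(expr))
print(evaluate(("Times", ("Plus", 2, 3), 4)))
```

Expressions whose heads have no known meaning simply stay as trees, which is what lets the same representation serve for arbitrary mathematical statements.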

But in typical mathematics there’s an important wrinkle. One often wants to make statements not just about particular things but about whole classes of things. And it’s common to then just declare that some of the “symbols” (like, say, x) that appear in an expression are “variables”, while others (like, say, Plus) are not. But in our effort to capture the essence of mathematics as uniformly as possible it seems much better to burn the idea of an object representing a whole class of things right into the structure of the symbolic expression.

And indeed this is a core idea in the Wolfram Language, where something like x or f is just a “symbol that stands for itself”, while x_ is a pattern (named x) that can stand for anything. (More precisely, _ on its own is what stands for “anything”, and x_—which can also be written x:_—just says that whatever _ stands for in a particular instance will be called x.)
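The distinction between a symbol that stands for itself and a pattern that can stand for anything can be illustrated with a small matcher. In this Python sketch the class `Blank` and the function `match` are hypothetical names; the behavior is only a simplified analog of Wolfram Language pattern matching, with expressions modeled as nested tuples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Blank:
    """Stands for "anything"; whatever it matches is bound to `name`."""
    name: str


def match(pattern, expr, bindings=None):
    """Return a dict of bindings if `pattern` matches `expr`, else None."""
    bindings = dict(bindings or {})
    if isinstance(pattern, Blank):
        if pattern.name in bindings and bindings[pattern.name] != expr:
            return None  # the same name must match the same thing everywhere
        bindings[pattern.name] = expr
        return bindings
    if isinstance(pattern, tuple):
        if not isinstance(expr, tuple) or len(pattern) != len(expr):
            return None
        for p, e in zip(pattern, expr):
            bindings = match(p, e, bindings)
            if bindings is None:
                return None
        return bindings
    # A plain symbol stands only for itself.
    return bindings if pattern == expr else None


print(match(("f", Blank("x"), Blank("y")), ("f", "a", ("g", "b"))))
print(match(("f", "x", Blank("y")), ("f", "a", "b")))
```

Note that a successful match with no blanks returns an empty dict, so callers should test the result with `is None` rather than by truthiness.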

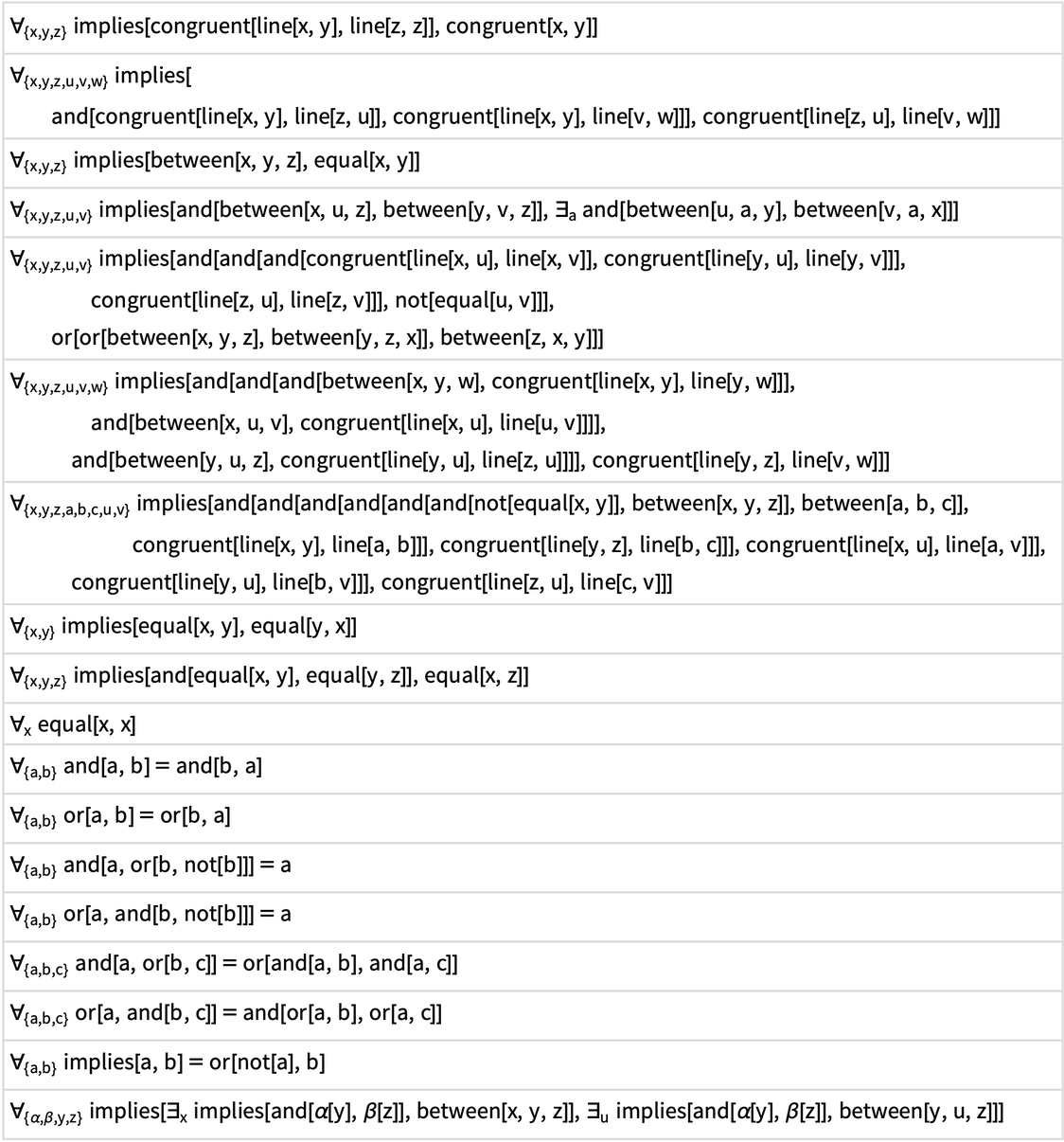

Then with this notation an example of a “mathematical statement” might be:

x_ ∘ y_ = (y_ ∘ x_) ∘ y_

In more explicit form we could write this as Equal[f[x_, y_], f[f[y_, x_], y_]], where Equal (=) has the “known meaning” of representing equality. But what can we do with this statement? At a “mathematical level” the statement asserts that x_ ∘ y_ and (y_ ∘ x_) ∘ y_ should be considered equivalent. But thinking in terms of symbolic expressions there’s now a more explicit, lower-level, “structural” interpretation: that any expression whose structure matches x_ ∘ y_ can equivalently be replaced by (y_ ∘ x_) ∘ y_ (or, in Wolfram Language notation, just (y ∘ x) ∘ y) and vice versa. We can indicate this interpretation using the notation

x_ ∘ y_ ↔ (y_ ∘ x_) ∘ y_

which can be viewed as a shorthand for the pair of Wolfram Language rules:

{x_ ∘ y_ → (y ∘ x) ∘ y, (y_ ∘ x_) ∘ y_ → x ∘ y}

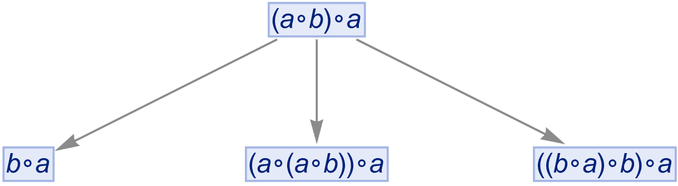

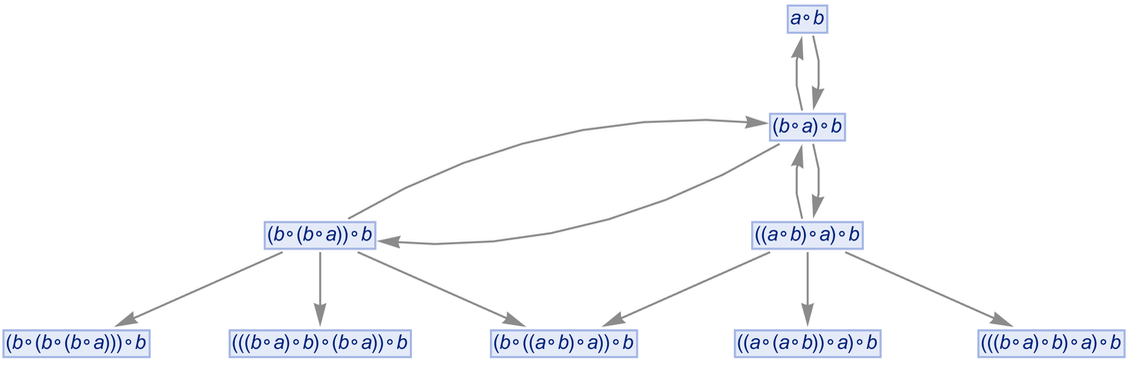

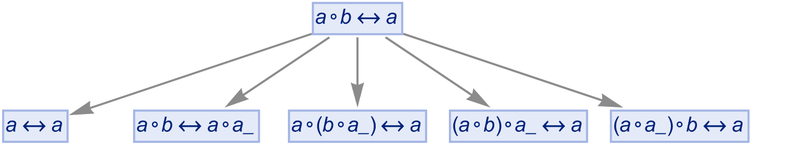

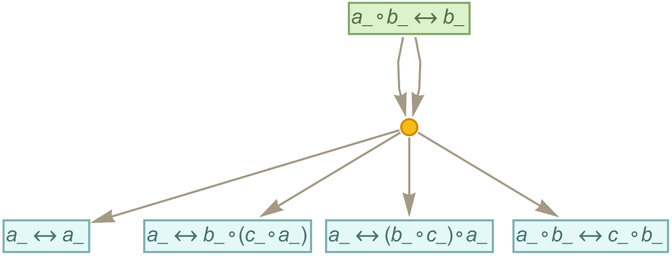

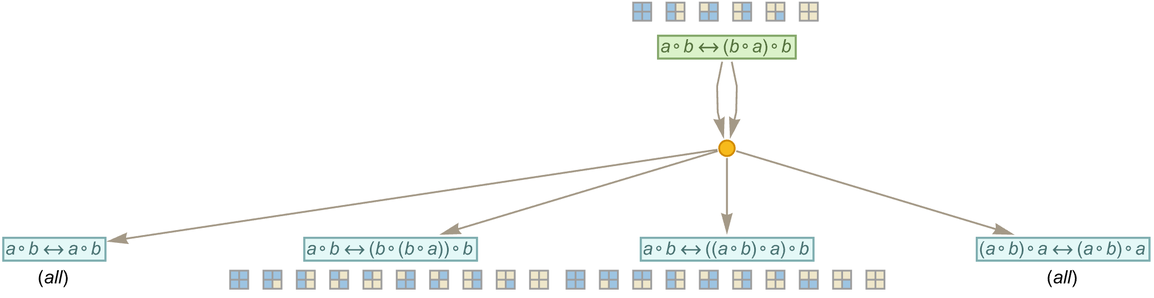

OK, so let’s say we have the expression ![]() . Now we can just apply the rules defined by our statement. Here’s what happens if we do this just once in all possible ways:

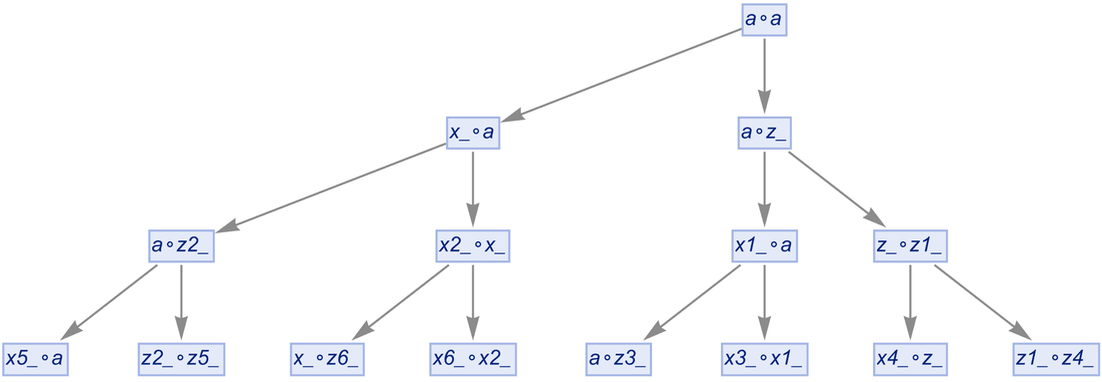

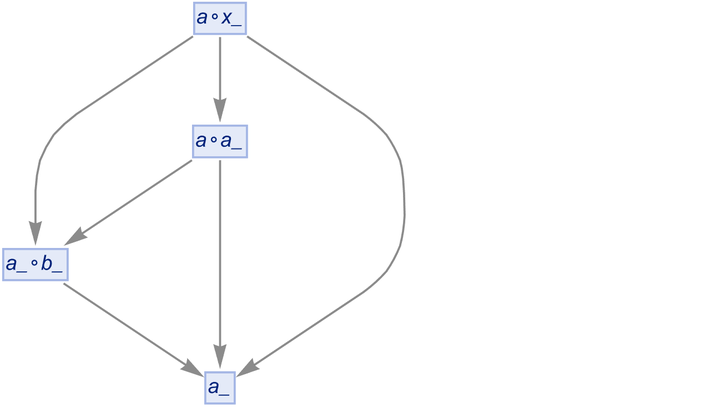

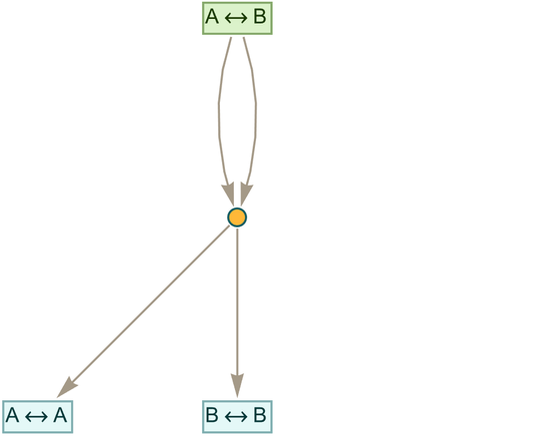

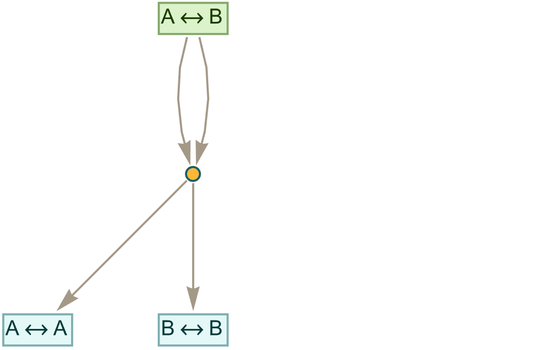

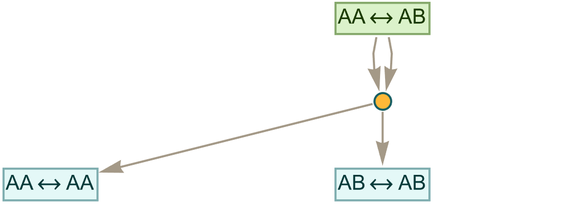

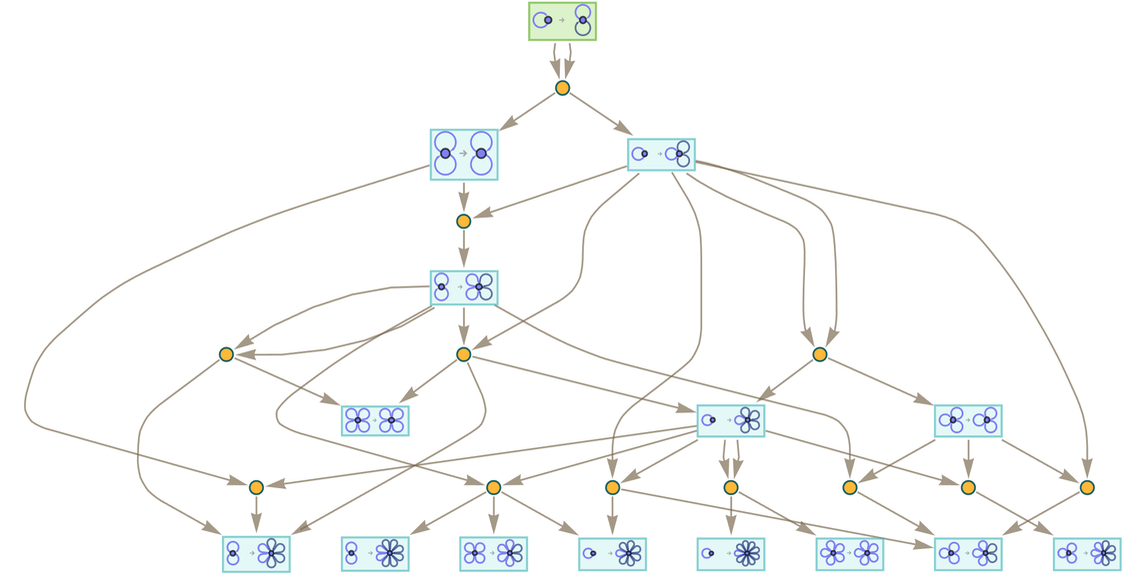

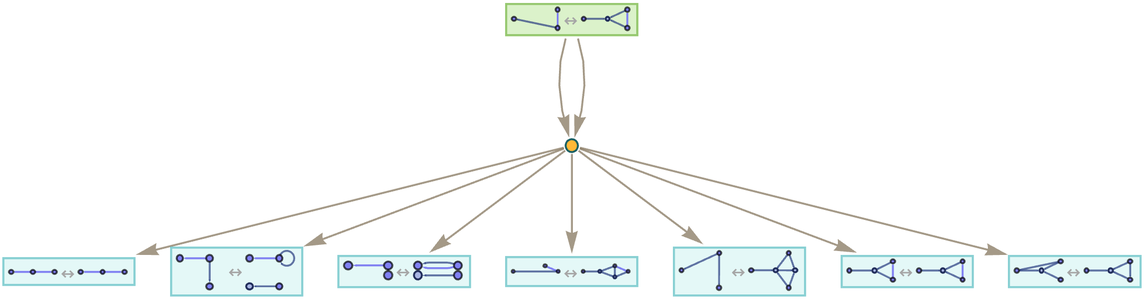

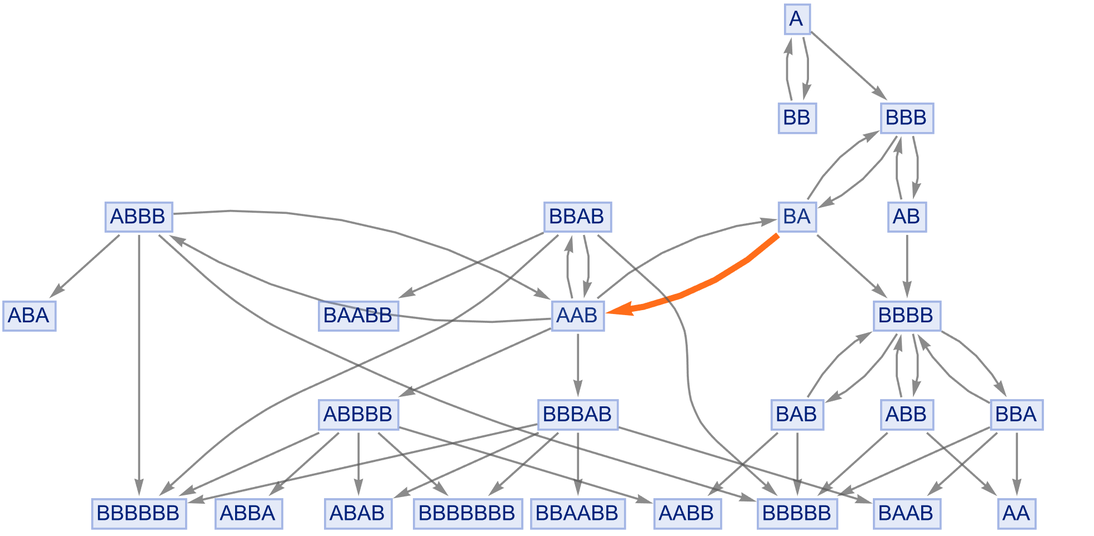

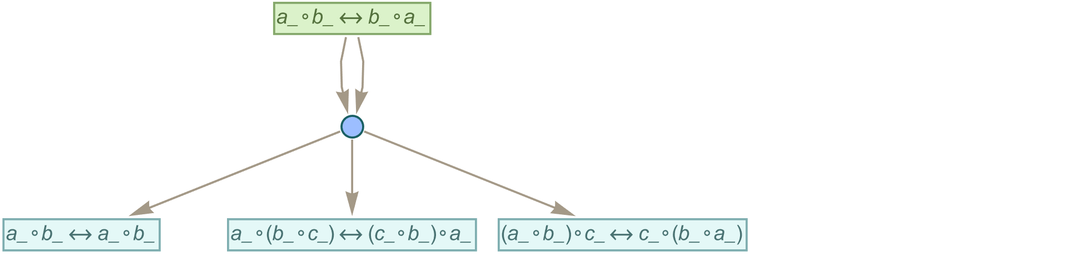

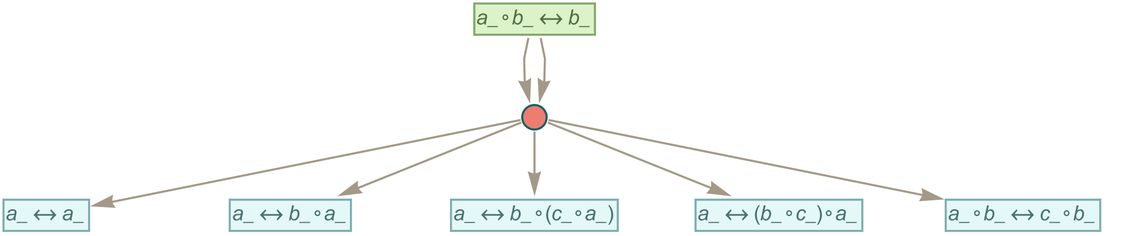

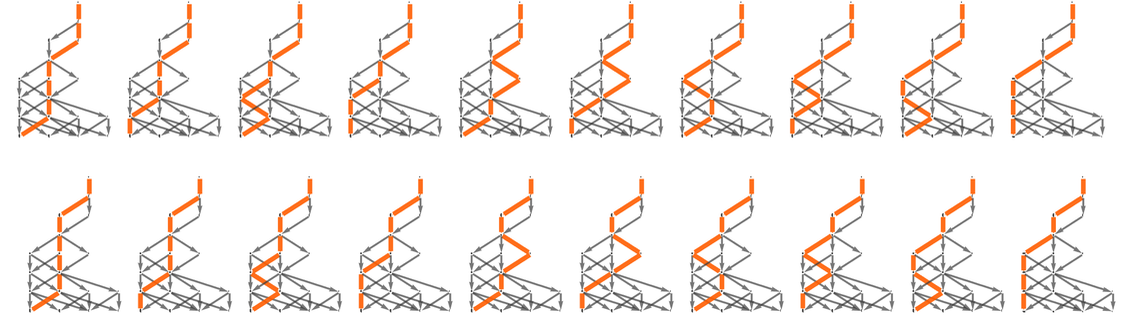

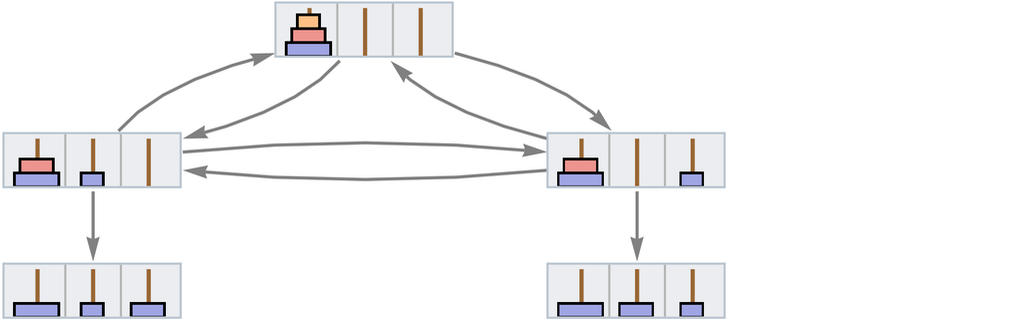

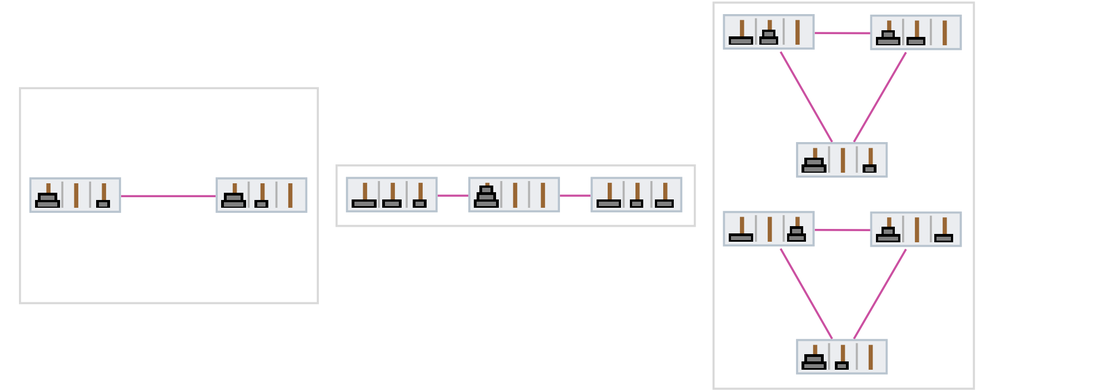

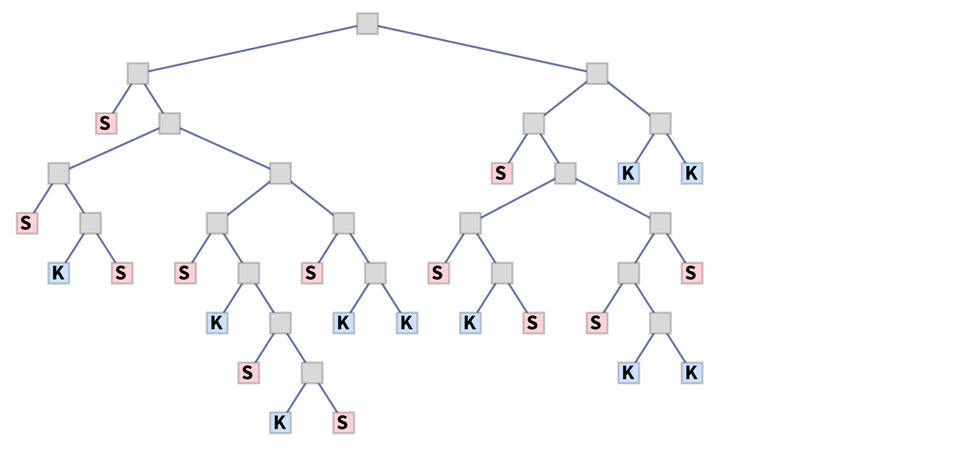
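Applying the statement "once in all possible ways" can be sketched in Python as follows. Here x ∘ y is modeled as the tuple `(x, y)` with atoms as strings, and the two directions of the rule follow the explicit form Equal[f[x_, y_], f[f[y_, x_], y_]]. The function names and the example expression (b ∘ a) ∘ b are assumptions chosen for illustration, not necessarily the expression used in the figures.

```python
# x ∘ y is modeled as the Python tuple (x, y); atoms are strings.


def rewrites_at_root(e):
    """Results of applying x∘y <-> (y∘x)∘y to the whole expression e."""
    results = []
    if isinstance(e, tuple):
        x, y = e
        results.append(((y, x), y))            # x∘y -> (y∘x)∘y
        if isinstance(x, tuple) and x[0] == y:
            results.append((x[1], y))          # (y∘x)∘y -> x∘y
    return results


def rewrites(e):
    """Results of one application of the rule at any single position in e."""
    results = rewrites_at_root(e)
    if isinstance(e, tuple):
        x, y = e
        results += [(r, y) for r in rewrites(x)]  # rewrite inside the left part
        results += [(x, r) for r in rewrites(y)]  # rewrite inside the right part
    return results


def show(e):
    """Render an expression with explicit parentheses."""
    return e if isinstance(e, str) else f"({show(e[0])}∘{show(e[1])})"


for result in rewrites((("b", "a"), "b")):
    print(show(result))
```

The forward direction always applies at every ∘ in the expression, while the backward direction applies only where the structure (y ∘ x) ∘ y is actually present.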

|

And here we see, for example, that ![]() can be transformed to

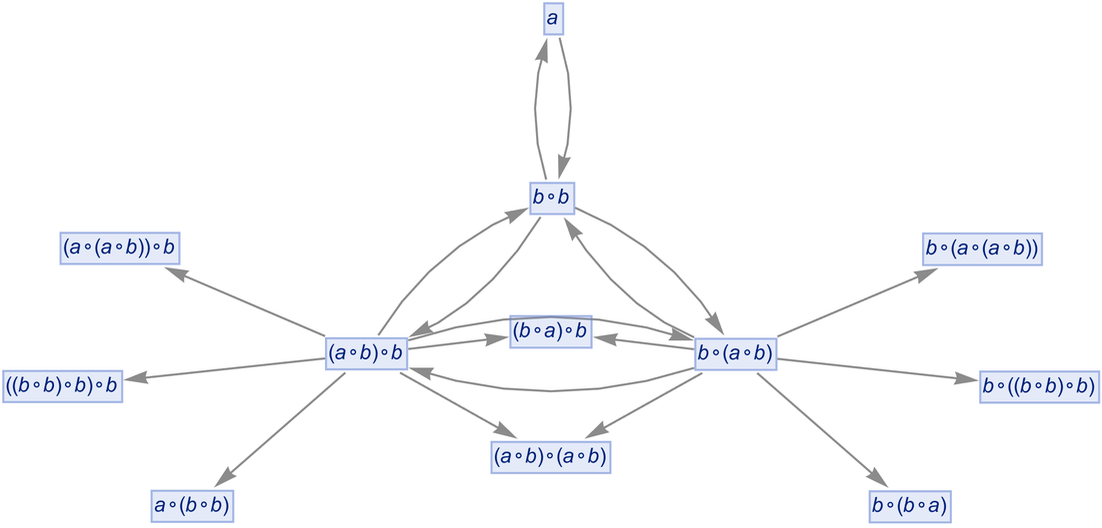

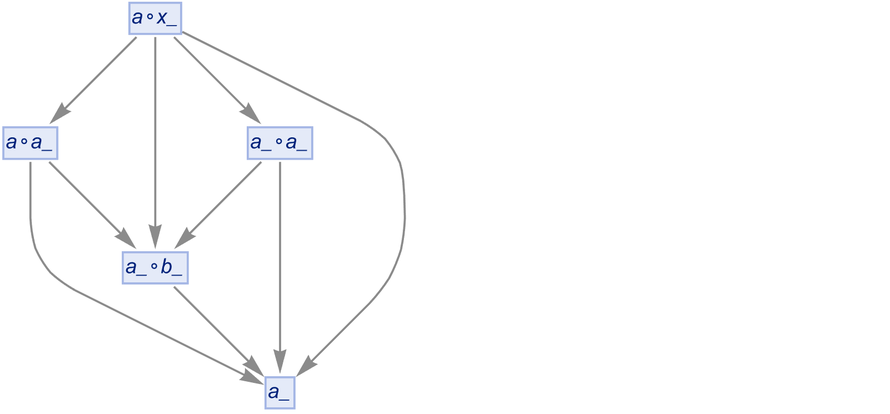

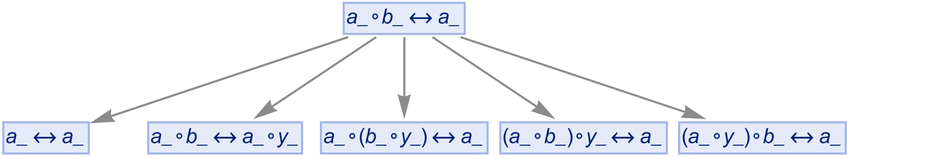

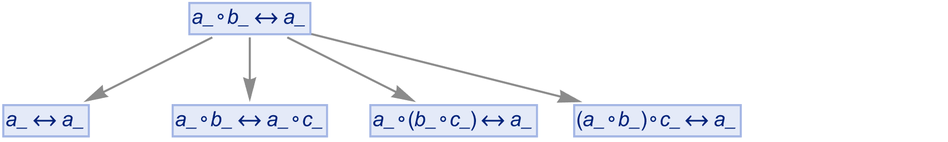

can be transformed to ![]() . Continuing this we build up a whole multiway graph. After just one more step we get:

. Continuing this we build up a whole multiway graph. After just one more step we get:

|

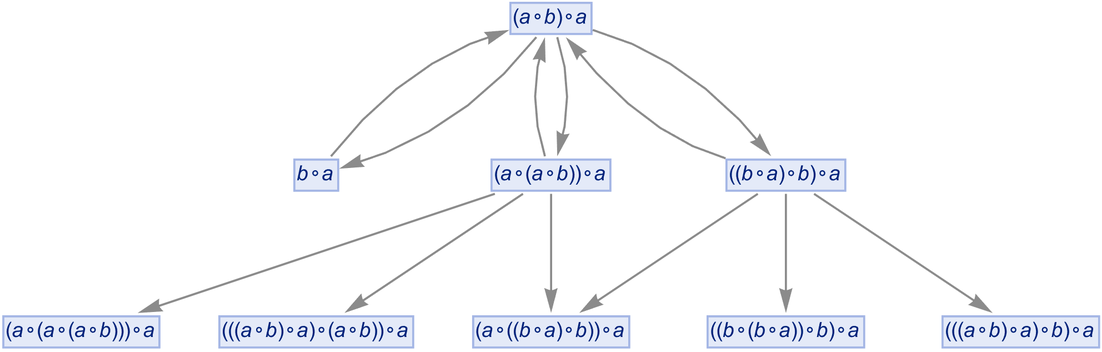

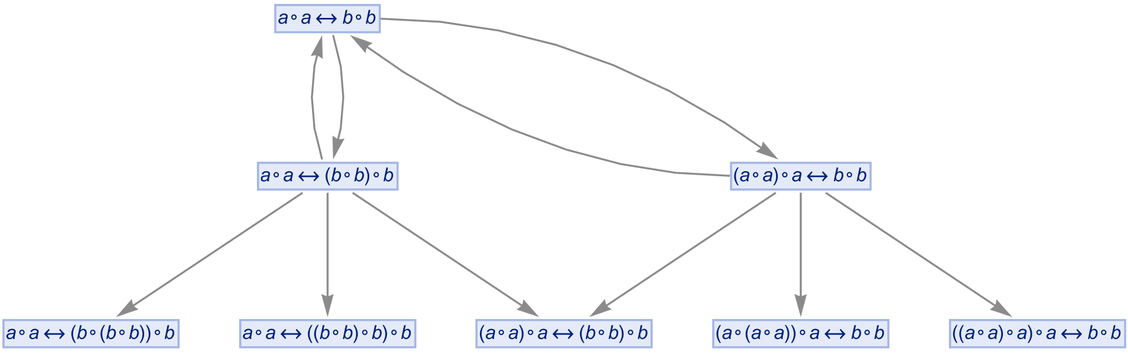

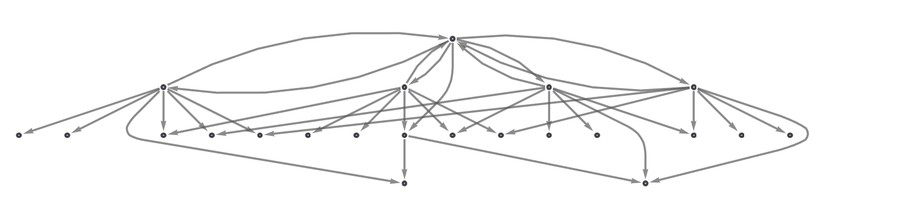

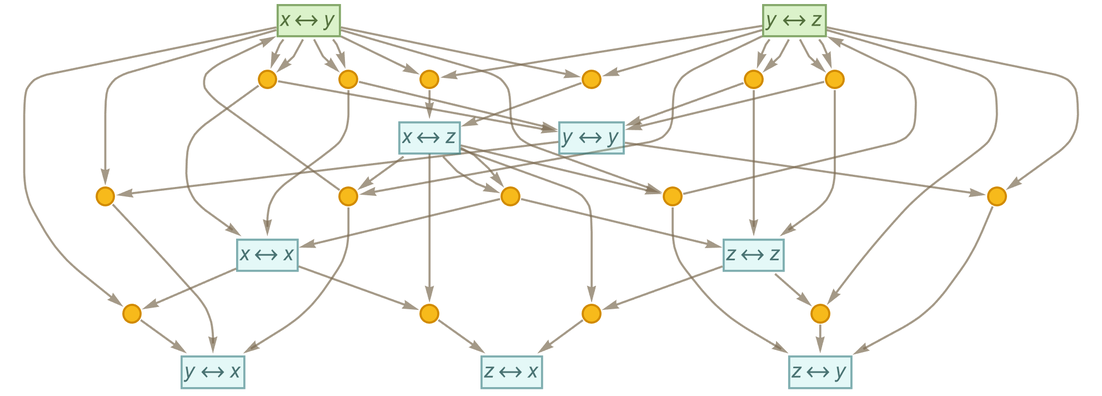

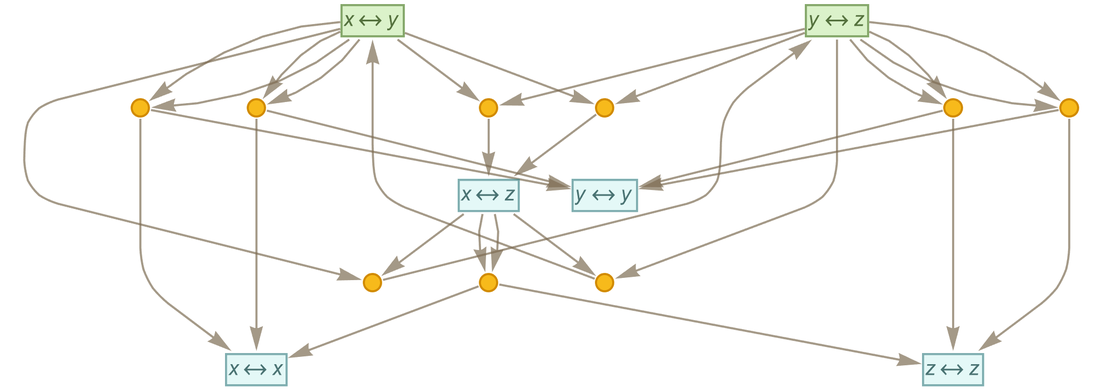

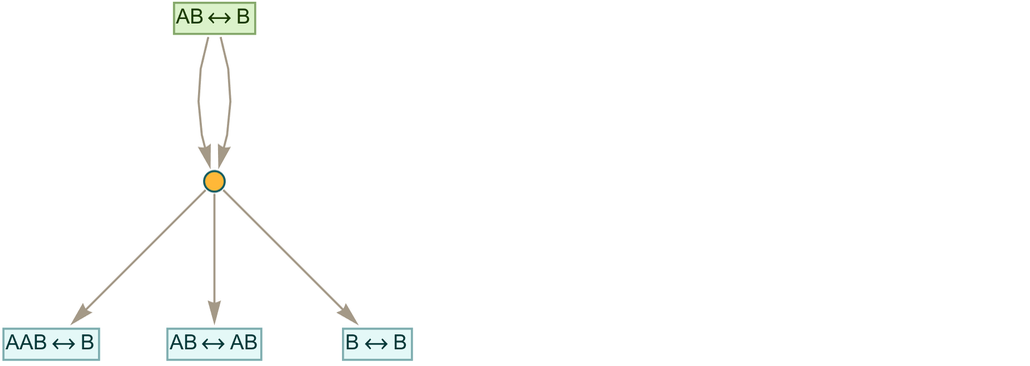

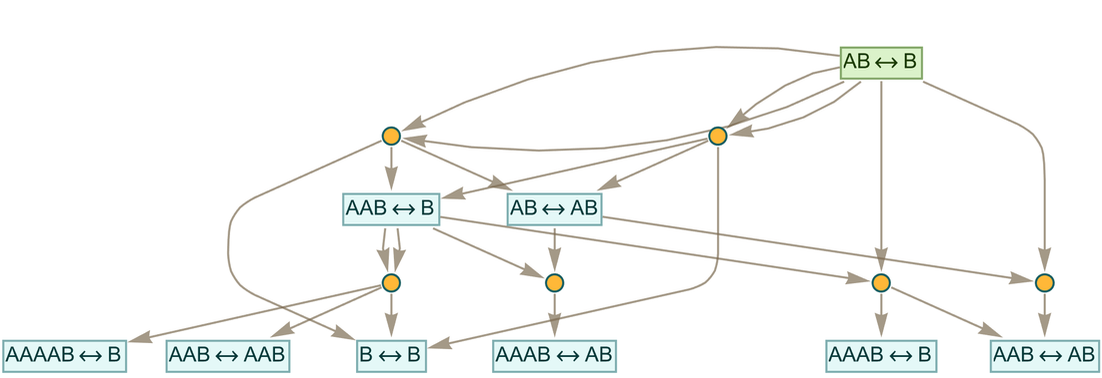

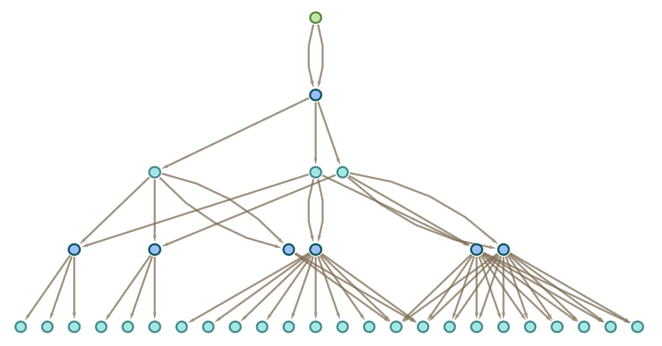

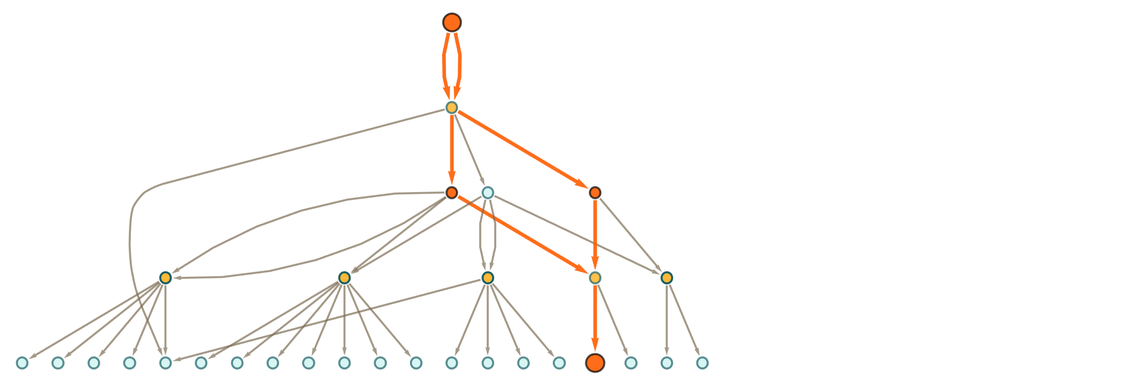

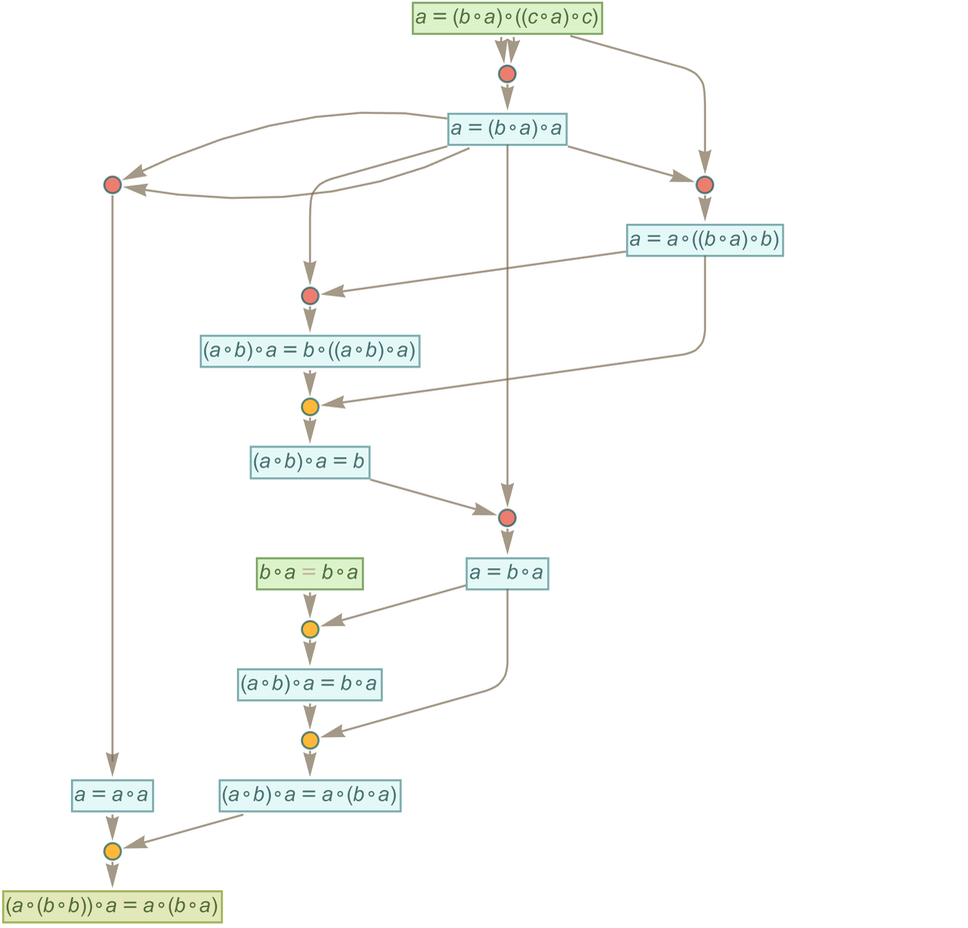

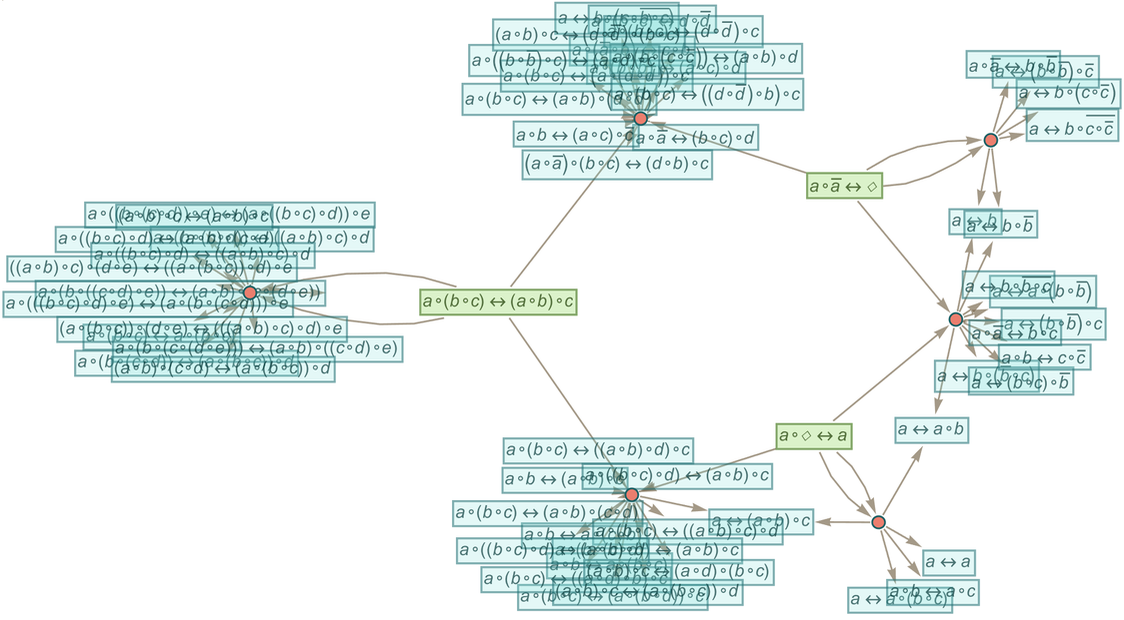

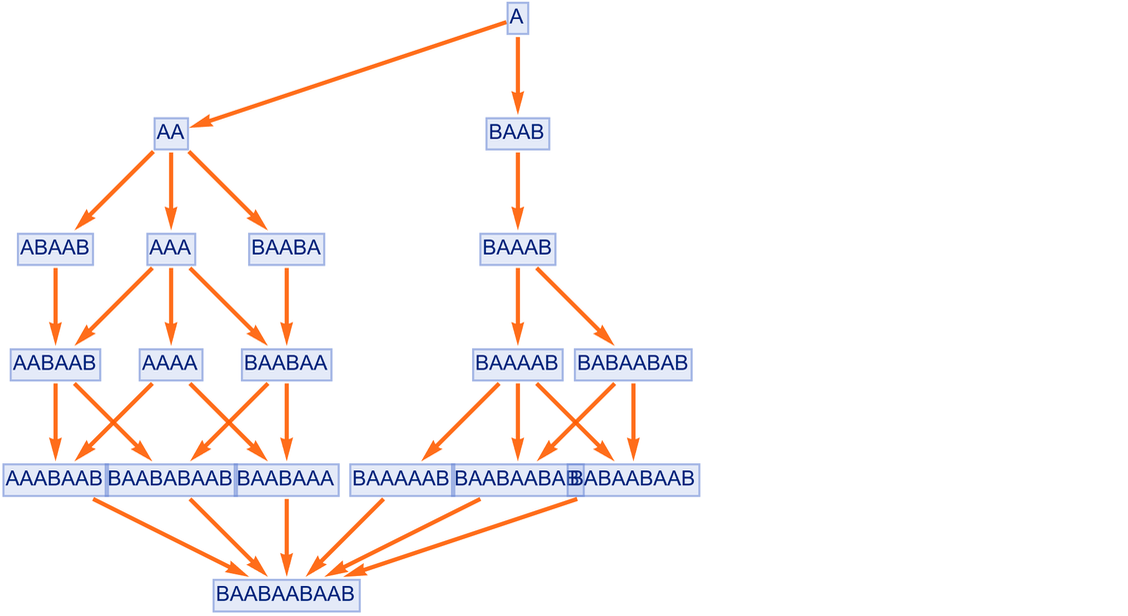

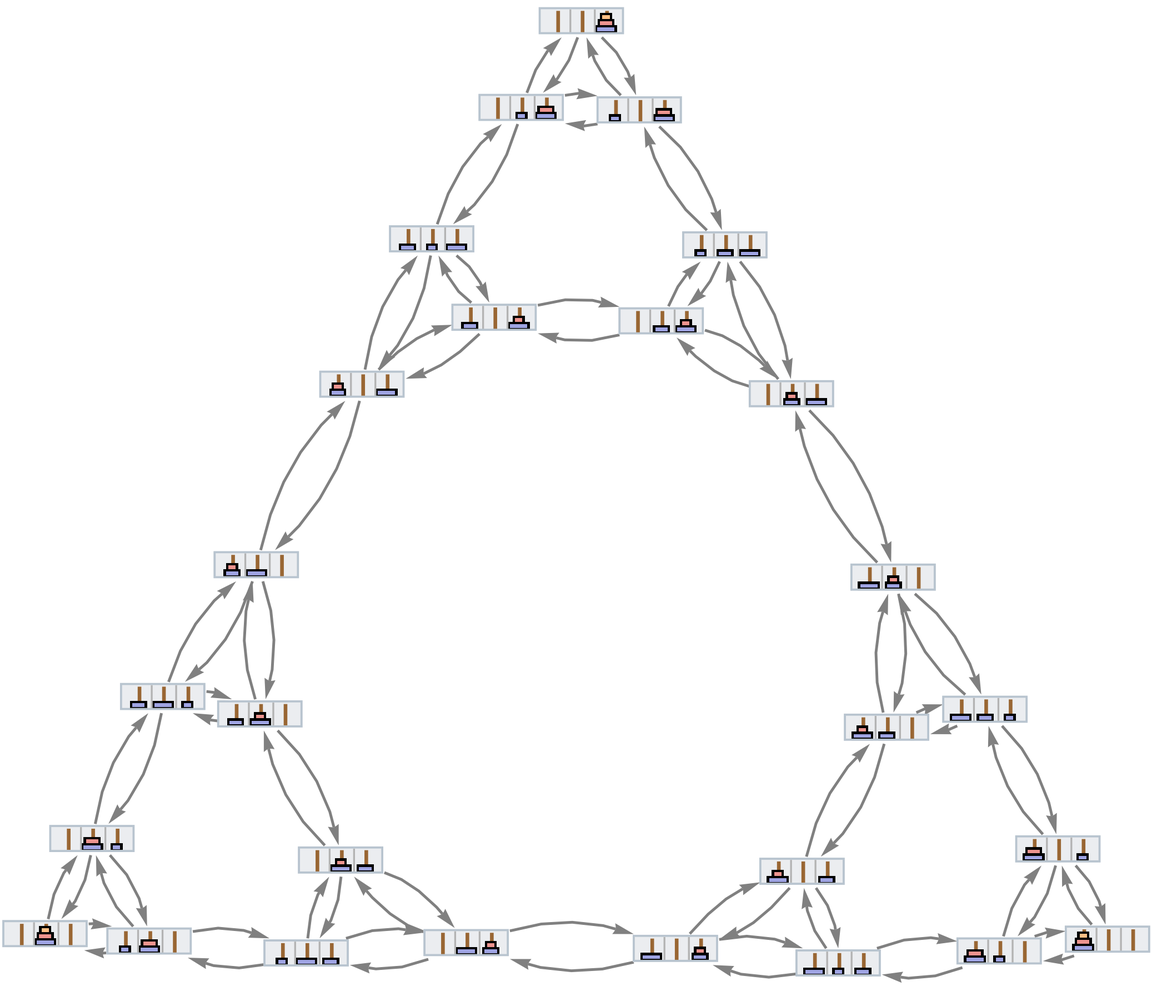

Continuing for a few more steps we then get


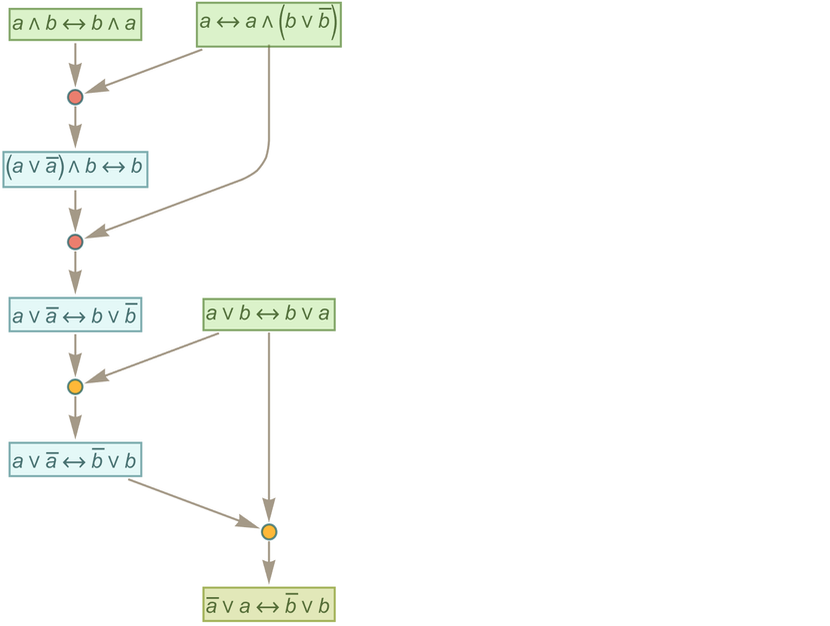

or in a different rendering:


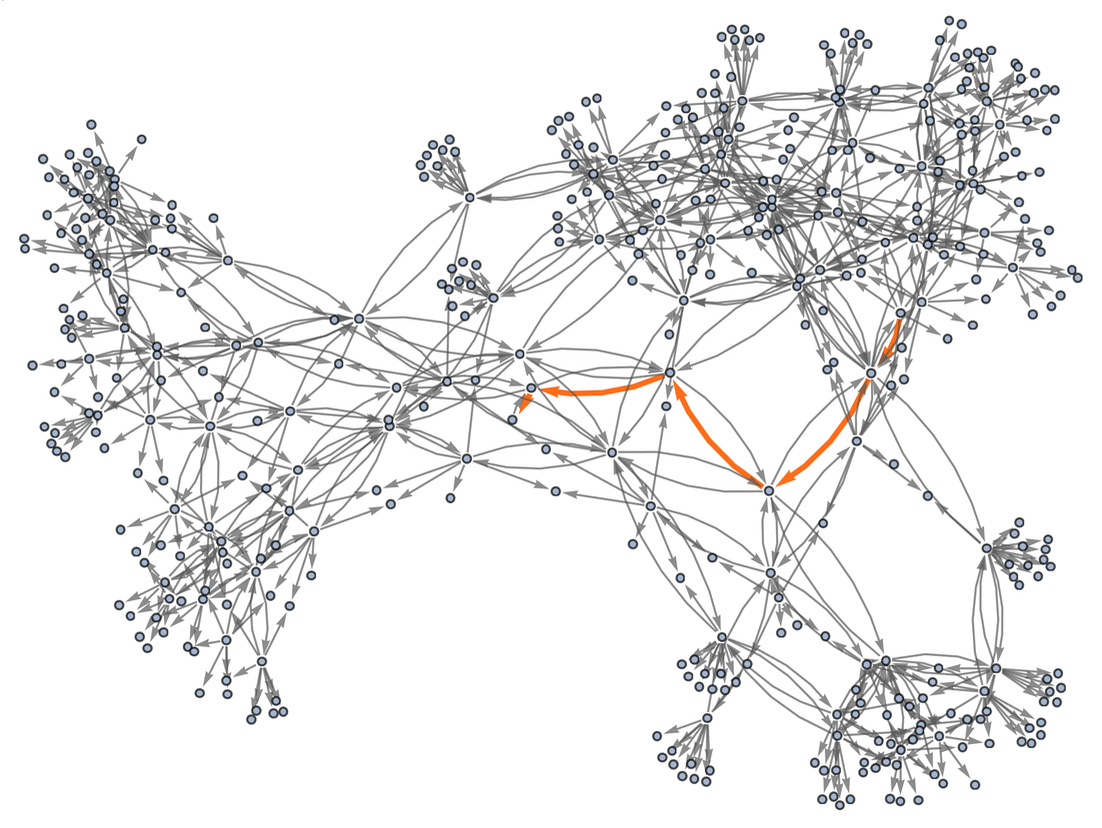

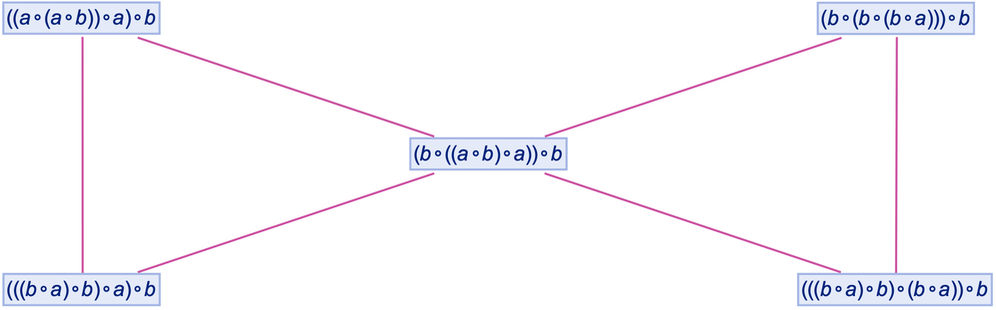
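Building up a multiway graph step by step can be sketched as a breadth-first expansion. In this Python sketch, x ∘ y is modeled as the tuple `(x, y)`, the rule is x ∘ y ↔ (y ∘ x) ∘ y as given in explicit form earlier, and the starting expression a ∘ b and the function names are assumptions for illustration.

```python
def rewrites(e):
    """All results of applying x∘y <-> (y∘x)∘y once, at any position in e."""
    if isinstance(e, str):
        return []
    x, y = e
    out = [((y, x), y)]                        # x∘y -> (y∘x)∘y at the root
    if isinstance(x, tuple) and x[0] == y:
        out.append((x[1], y))                  # (y∘x)∘y -> x∘y at the root
    out += [(r, y) for r in rewrites(x)]       # positions inside the left part
    out += [(x, r) for r in rewrites(y)]       # positions inside the right part
    return out


def multiway(start, steps):
    """Expand the multiway graph for a given number of steps.

    Returns the set of expressions reached and the set of directed
    edges (before, after) that were generated.
    """
    nodes, edges, frontier = {start}, set(), {start}
    for _ in range(steps):
        next_frontier = set()
        for e in frontier:
            for r in rewrites(e):
                edges.add((e, r))
                if r not in nodes:
                    nodes.add(r)
                    next_frontier.add(r)
        frontier = next_frontier
    return nodes, edges


for n in range(5):
    nodes, edges = multiway(("a", "b"), n)
    print(n, len(nodes), len(edges))
```

Because the rule is two-way, every edge generated this way has a reverse edge in the full graph, which is why connected expressions can be read as equivalent.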

But what does this graph mean? Essentially it gives us a map of equivalences between expressions, with any pair of expressions that are connected being equivalent. So, for example, it turns out that the expressions ![]() and ![]() are equivalent, and we can “prove this” by exhibiting a path between them in the graph:

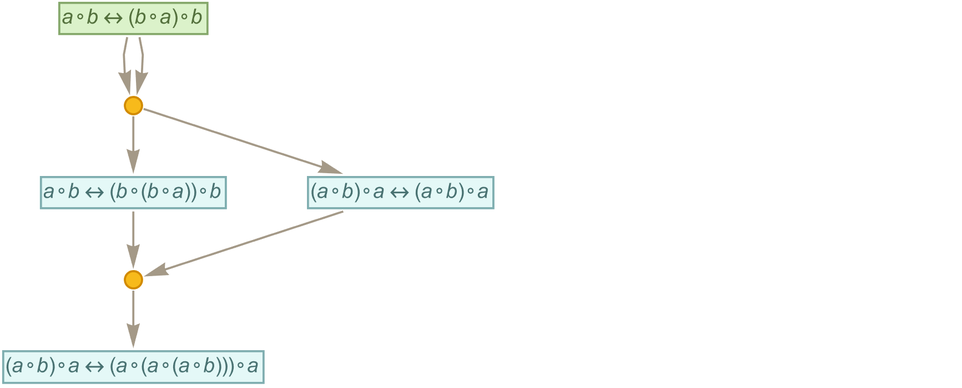

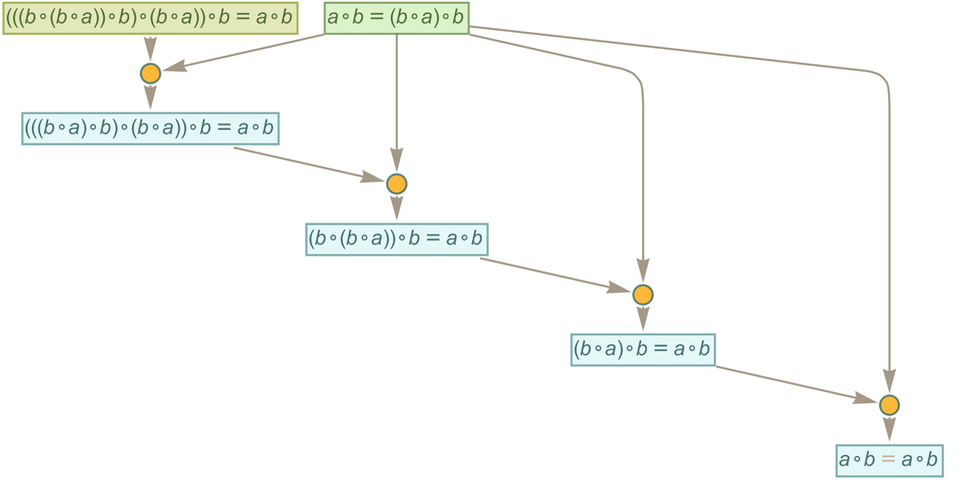

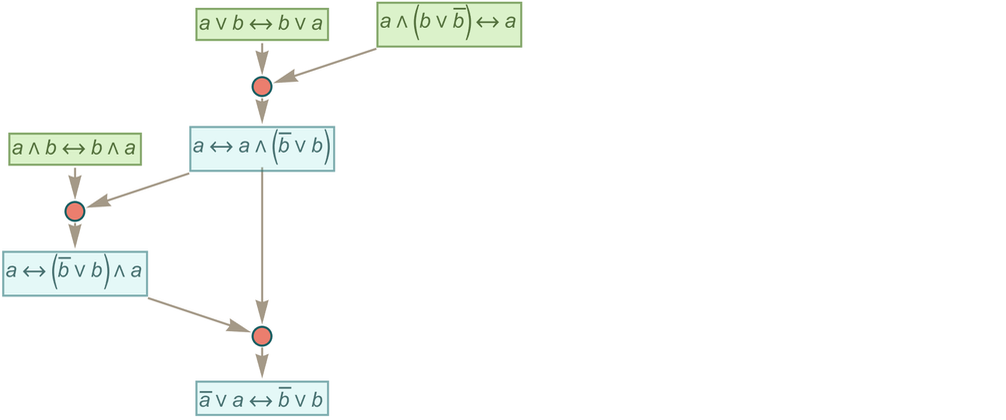

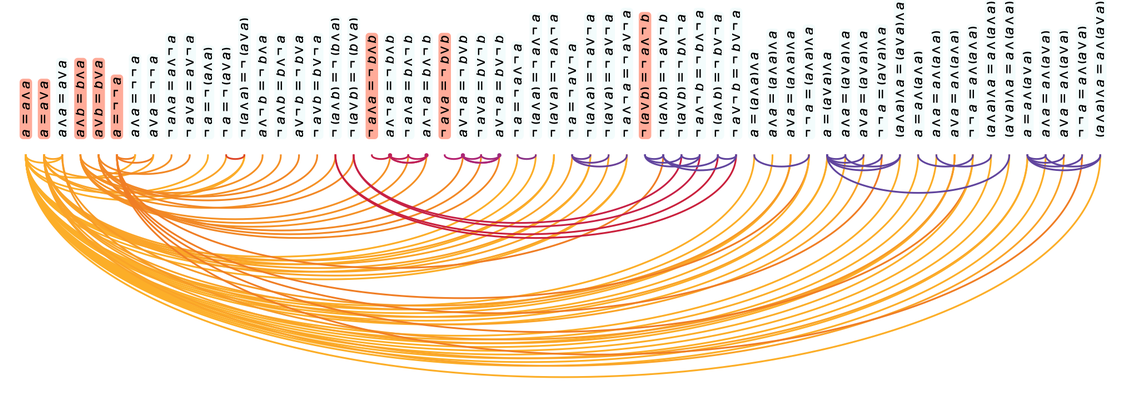


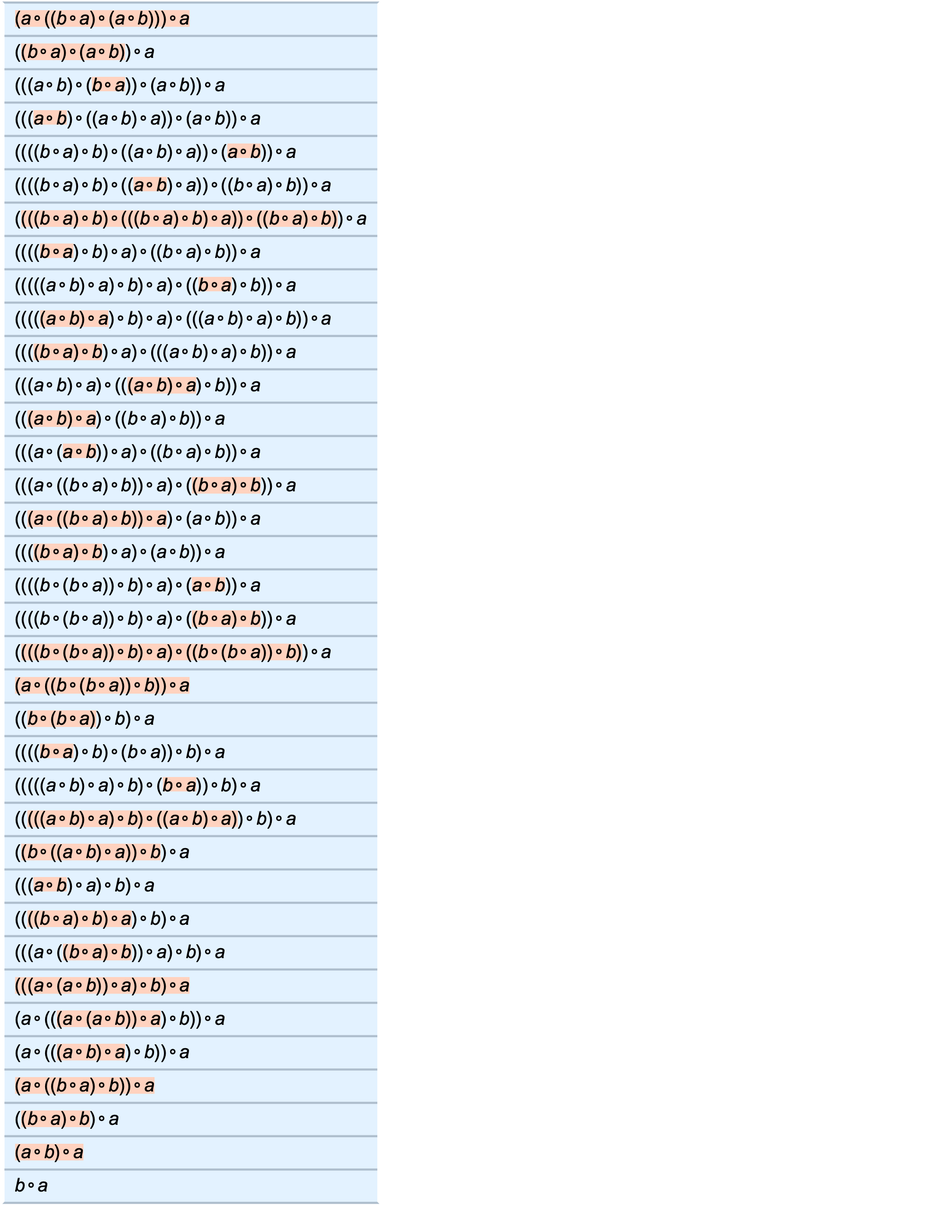

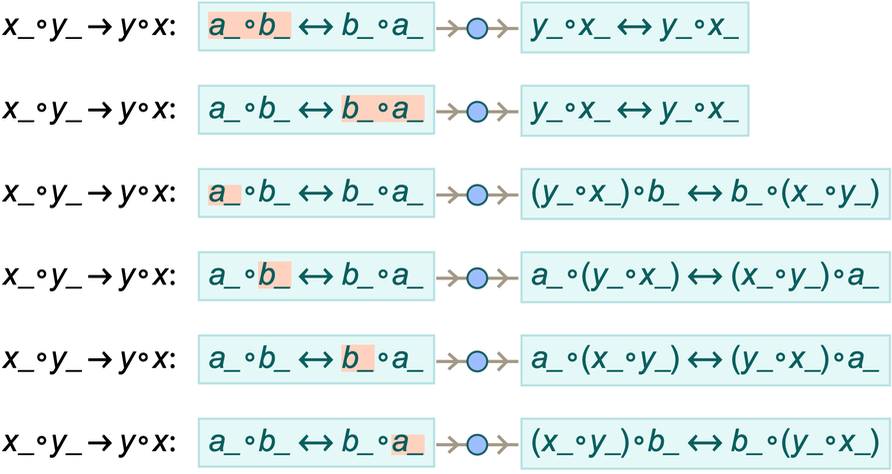
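Finding a proof as a path between two expressions can be sketched with a breadth-first search, which returns a shortest path when one exists within the step limit. As before, x ∘ y is modeled as the tuple `(x, y)`; the function name `find_proof`, the `max_steps` cutoff and the example expressions are assumptions for illustration, not the expressions from the figures.

```python
from collections import deque


def rewrites(e):
    """All results of applying x∘y <-> (y∘x)∘y once, at any position in e."""
    if isinstance(e, str):
        return []
    x, y = e
    out = [((y, x), y)]
    if isinstance(x, tuple) and x[0] == y:
        out.append((x[1], y))
    out += [(r, y) for r in rewrites(x)]
    out += [(x, r) for r in rewrites(y)]
    return out


def find_proof(start, goal, max_steps=6):
    """Breadth-first search for a shortest rewrite path from start to goal.

    Returns the list of expressions along the path, or None if no path
    of at most max_steps steps was found.
    """
    parent = {start: None}
    queue = deque([(start, 0)])
    while queue:
        e, depth = queue.popleft()
        if e == goal:
            path = []
            while e is not None:
                path.append(e)
                e = parent[e]
            return path[::-1]
        if depth < max_steps:
            for r in rewrites(e):
                if r not in parent:
                    parent[r] = e
                    queue.append((r, depth + 1))
    return None


print(find_proof(("a", "b"), ((("a", "b"), "a"), "b")))
```

A result of `None` only means that no path was found within the step limit; it does not show that the two expressions are inequivalent.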

The steps on the path can then be viewed as steps in the proof, where here at each step we’ve indicated where the transformation in the expression took place:

In mathematical terms, we can then say that starting from the “axiom” x_ ∘ y_ = (y_ ∘ x_) ∘ y_ we were able to prove a certain equivalence theorem between two expressions. We gave a particular proof. But there are others, for example the “less efficient” 35-step one

corresponding to the path:

For our later purposes it’s worth talking in a little bit more detail here about how the steps in these proofs actually proceed. Consider the expression:

We can think of this as a tree:

Our axiom can then be represented as:

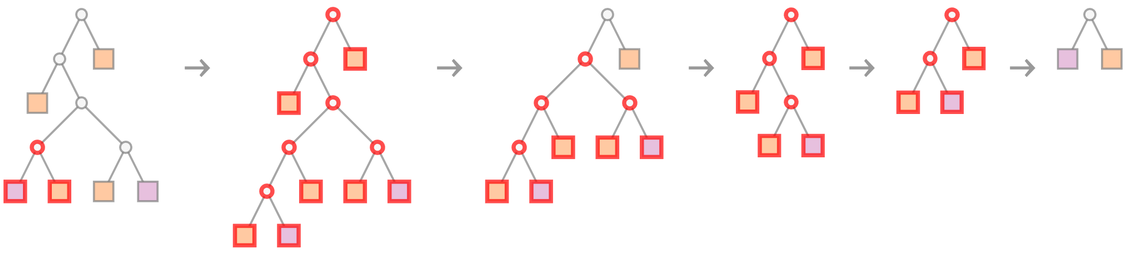

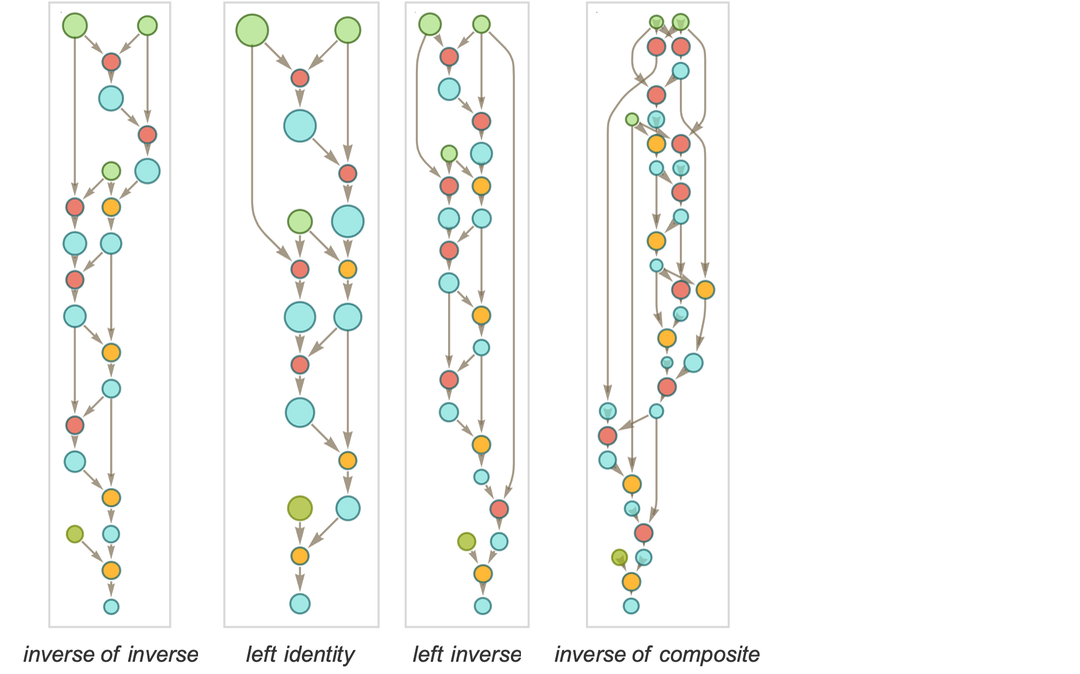

In terms of trees, our first proof becomes


where we’re indicating at each step which piece of tree gets “substituted for” using the axiom.

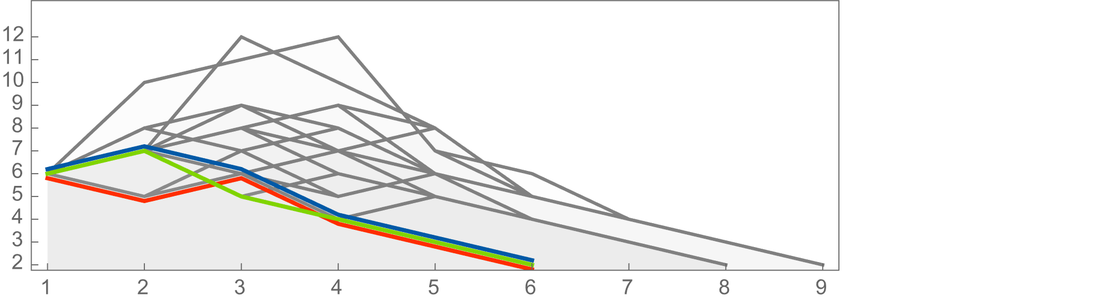

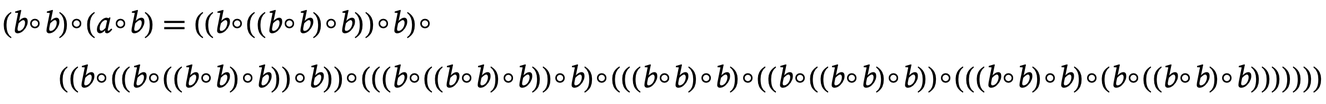

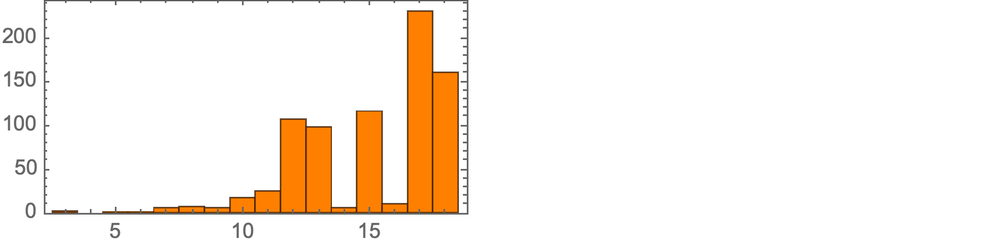

What we’ve done so far is to generate a multiway graph for a certain number of steps, and then to see if we can find a “proof path” in it for some particular statement. But what if we are given a statement, and asked whether it can be proved within the specified axiom system? In effect this asks whether, if we make a sufficiently large multiway graph, we can find a path of any length that corresponds to the statement.

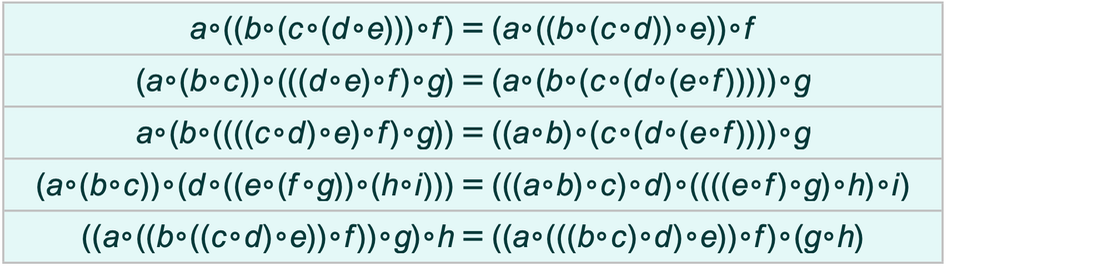

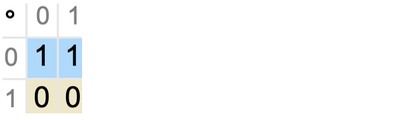

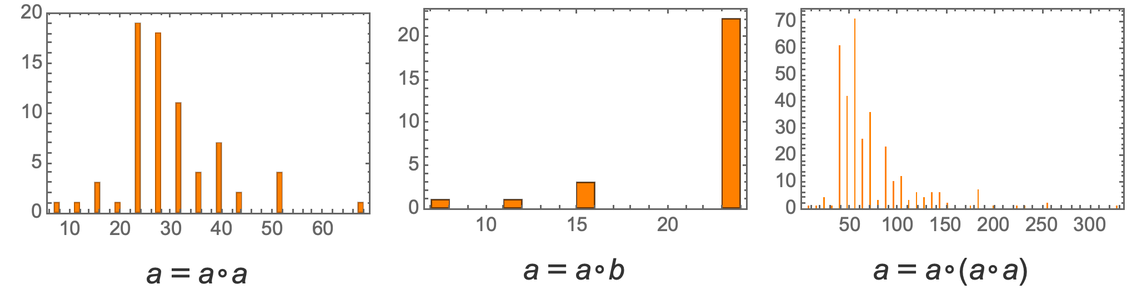

If our system were computationally reducible we could expect always to be able to find a finite answer to this question. But in general, given the Principle of Computational Equivalence and the ubiquitous presence of computational irreducibility, it’ll be common that there is no fundamentally better way to determine whether a path exists than effectively to try explicitly generating it. If we knew, for example, that the intermediate expressions generated always remained of bounded length, then this would still be a bounded problem. But in general the expressions can grow to any size, with the result that there is no general upper bound on the length of path necessary to prove even a statement about equivalence between small expressions.

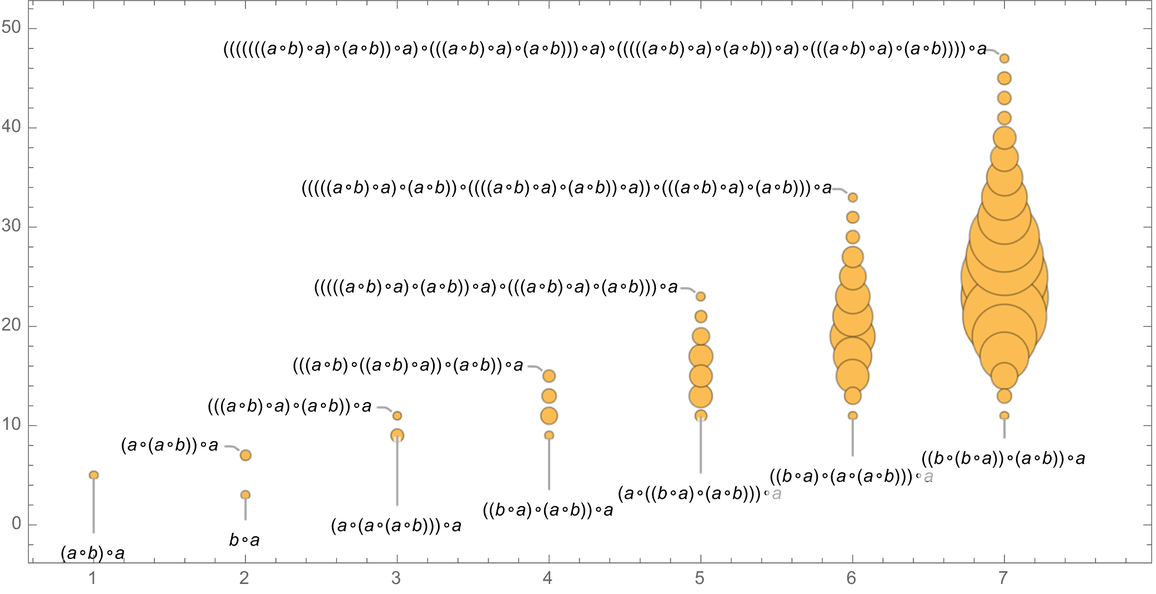

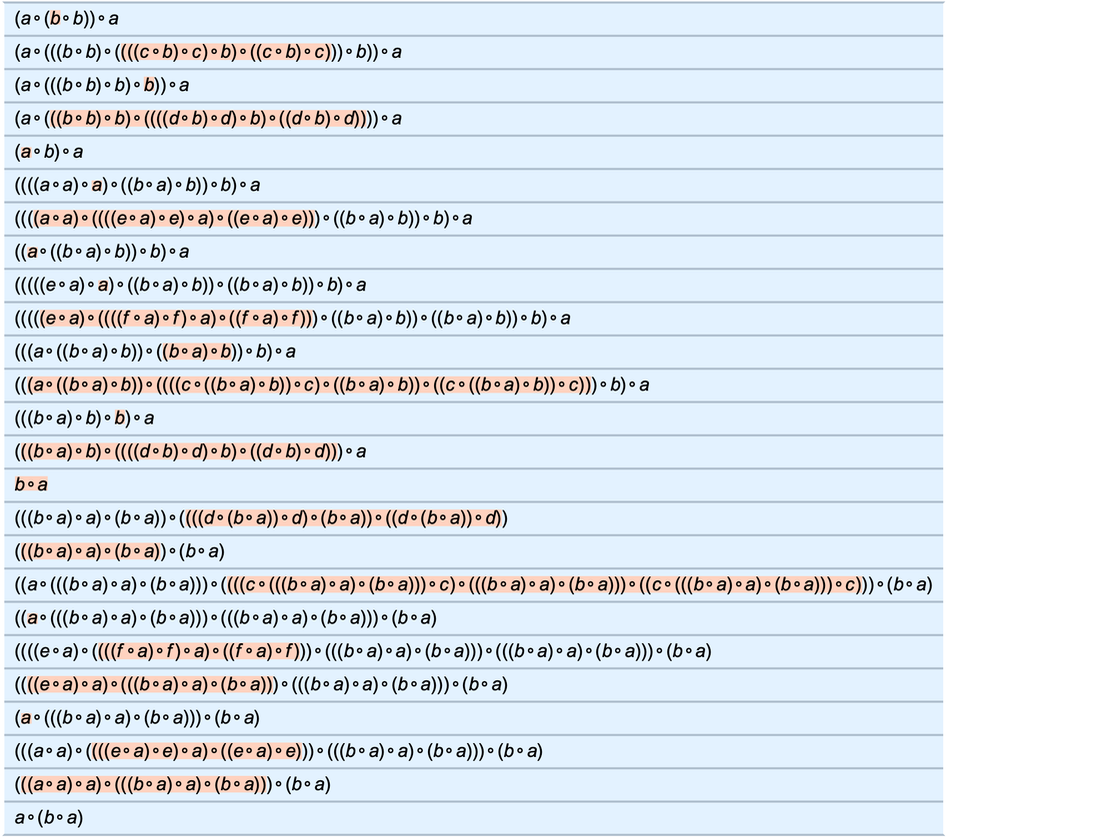

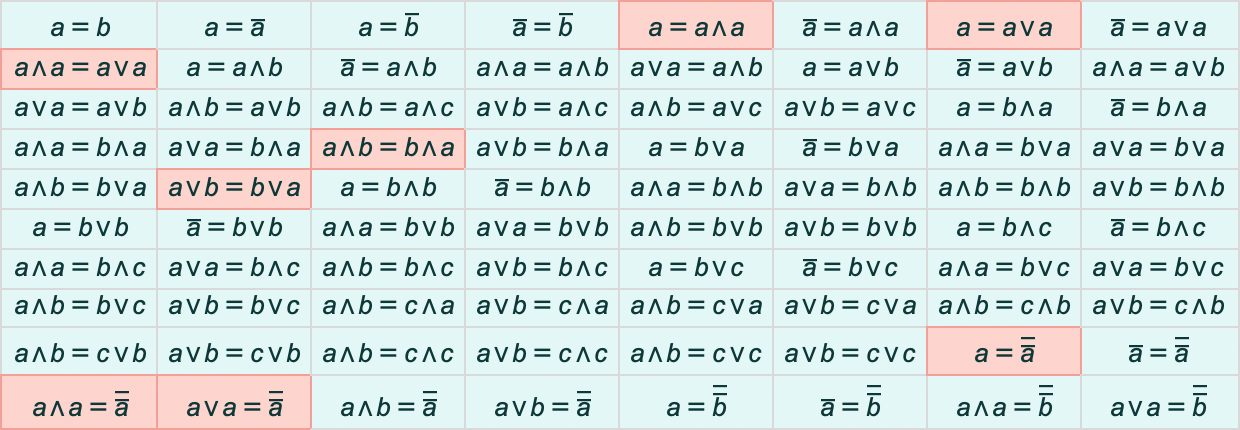

For example, for the axiom we are using here, we can look at statements of the form ![](). Then this shows how many expressions expr of what sizes have shortest proofs of ![]() with progressively greater lengths:

|

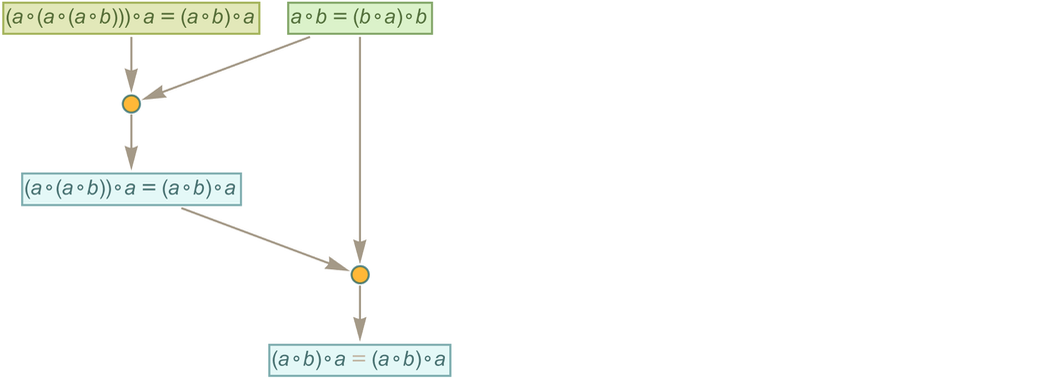

And for example if we look at the statement

|

|

its shortest proof is

|

where, as is often the case, there are intermediate expressions that are longer than the final result.

4 | Some Simple Examples with Mathematical Interpretations

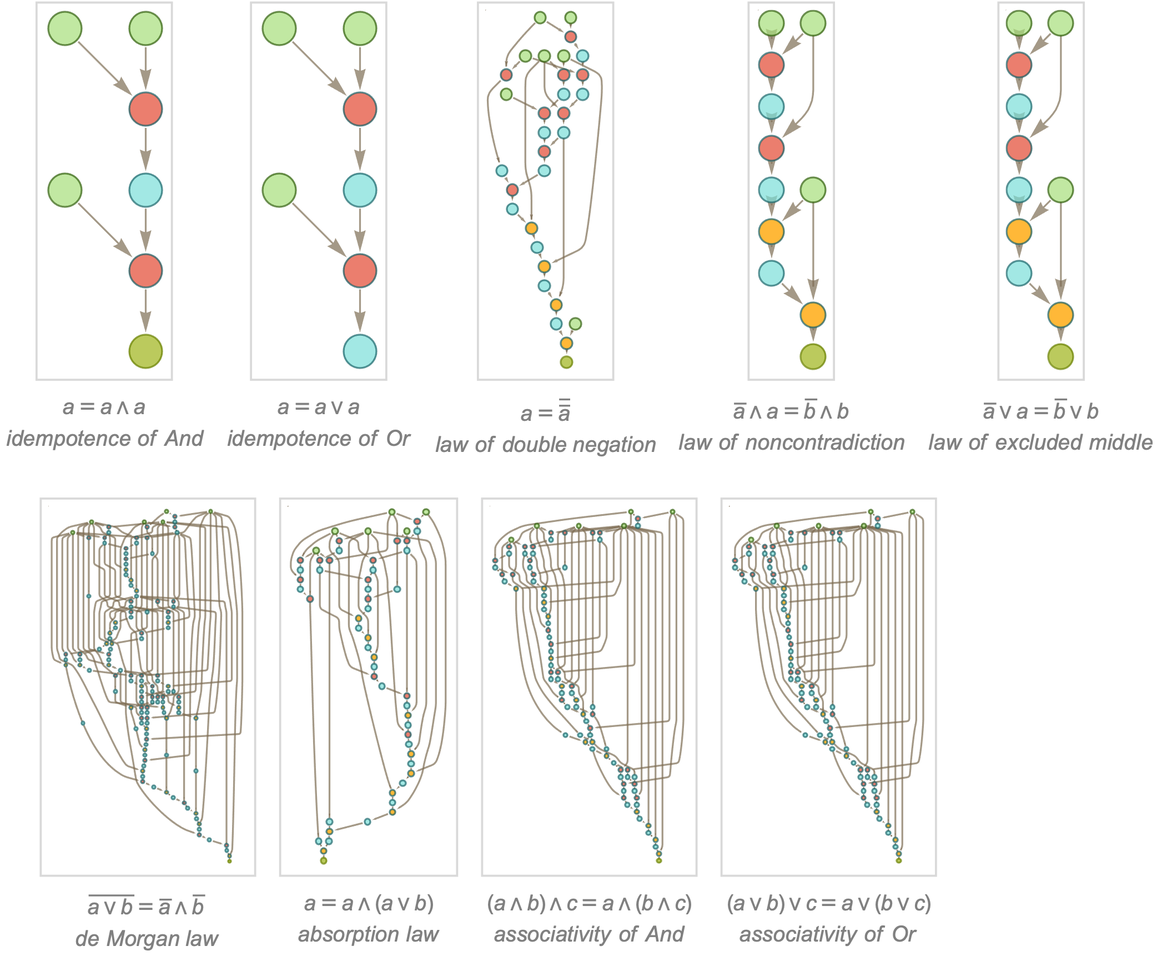

The multiway graphs in the previous section are in a sense fundamentally metamathematical. Their “raw material” is mathematical statements. But what they represent are the results of operations—like substitution—that are defined at a kind of meta level, that “talks about mathematics” but isn’t itself immediately “representable as mathematics”. But to help understand this relationship it’s useful to look at simple cases where it’s possible to make at least some kind of correspondence with familiar mathematical concepts.

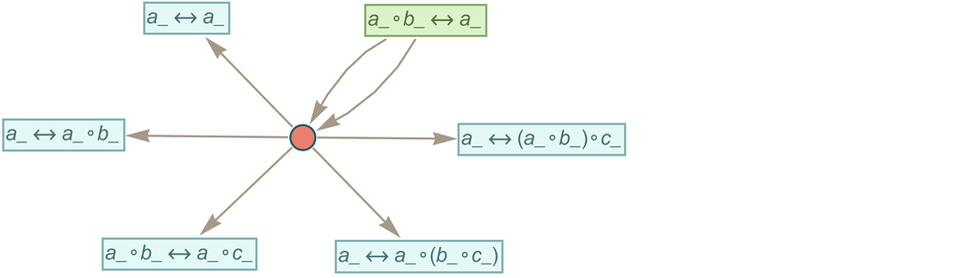

Consider for example the axiom

|

|

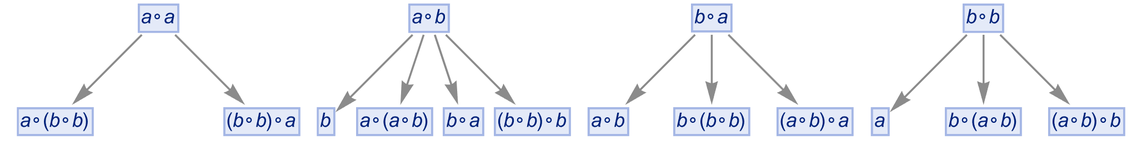

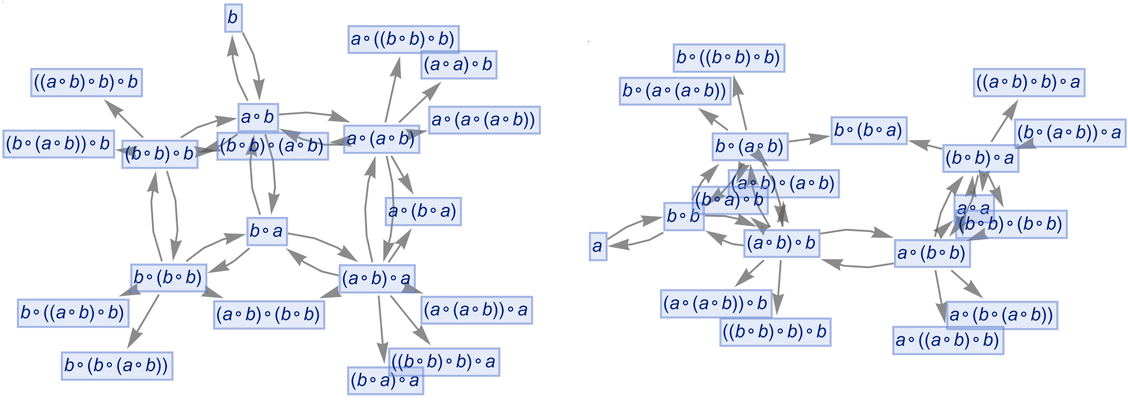

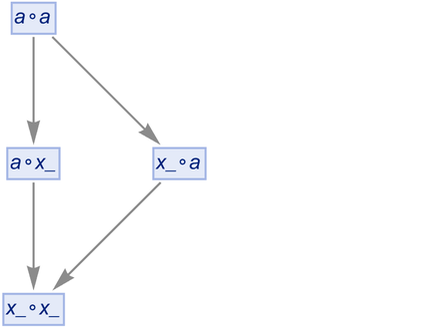

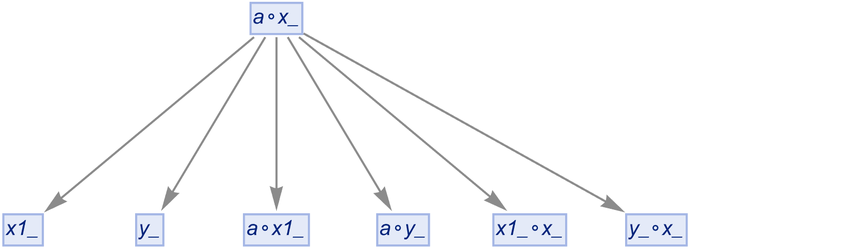

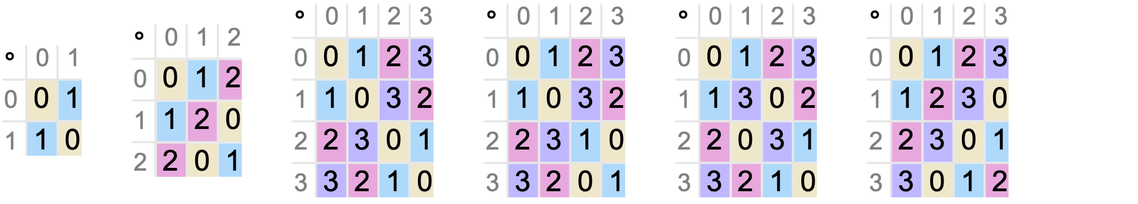

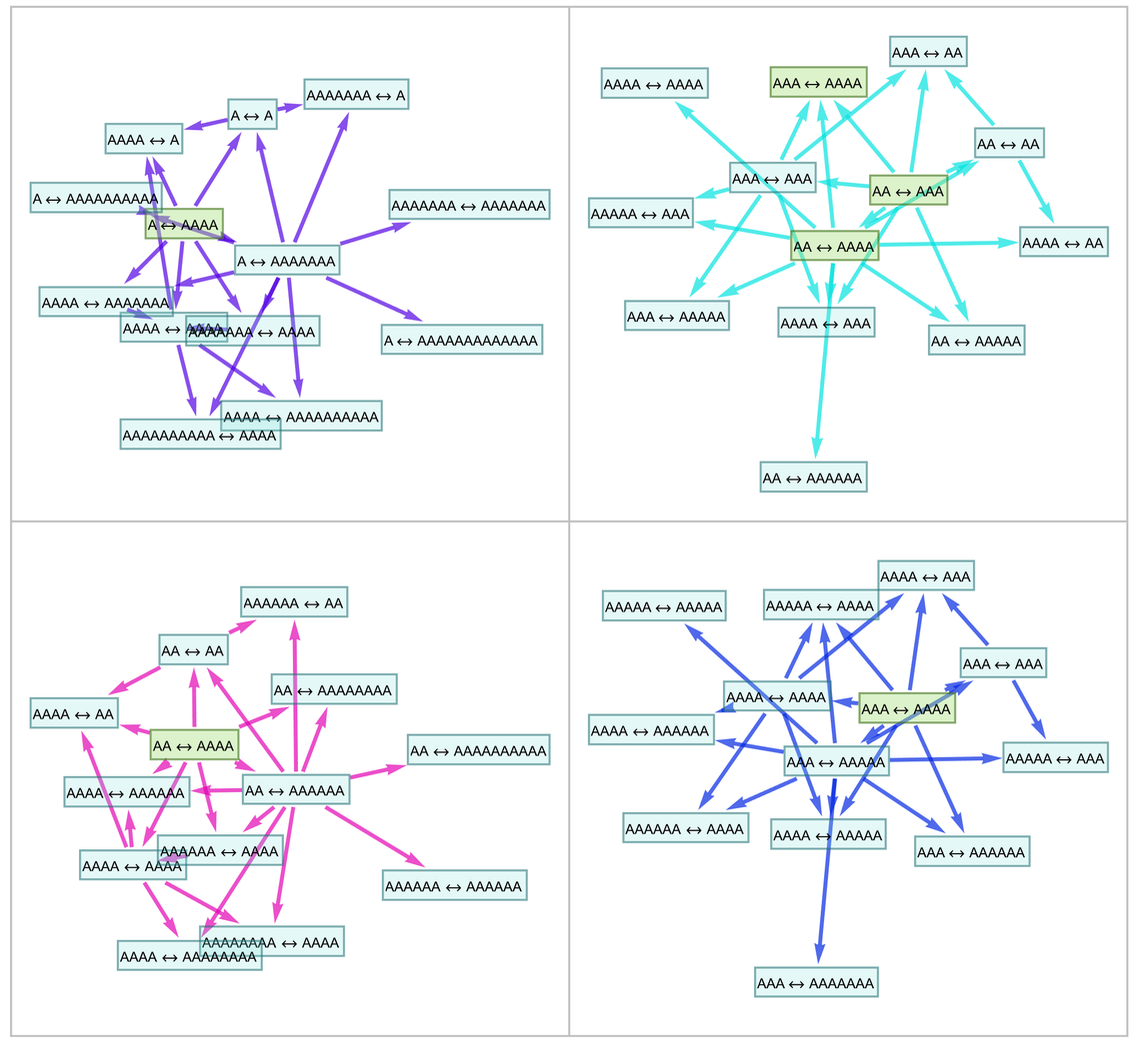

that we can think of as representing commutativity of the binary operator ∘. Now consider using substitution to “apply this axiom”, say starting from the expression ![](). The result is the (finite) multiway graph:

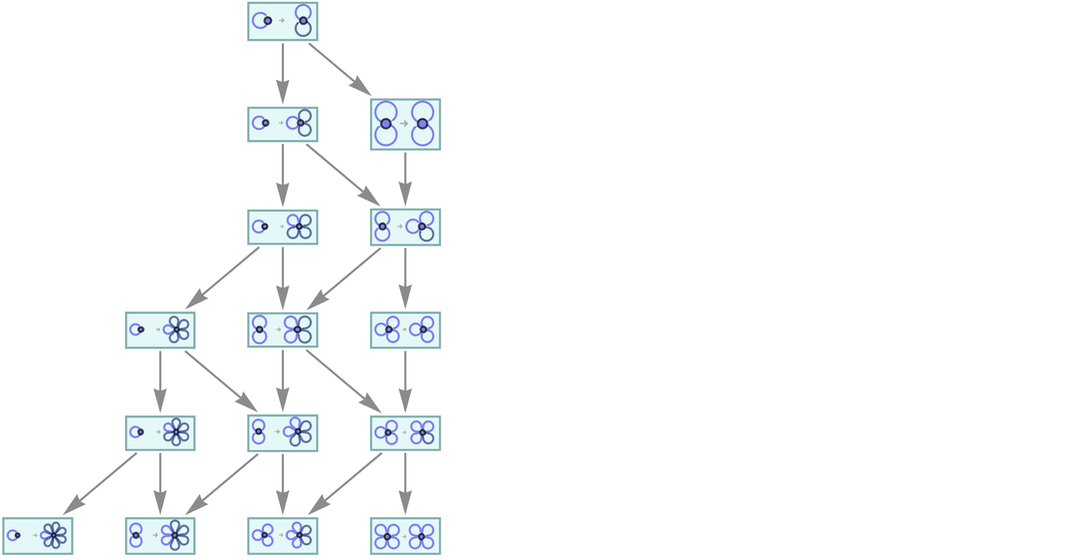

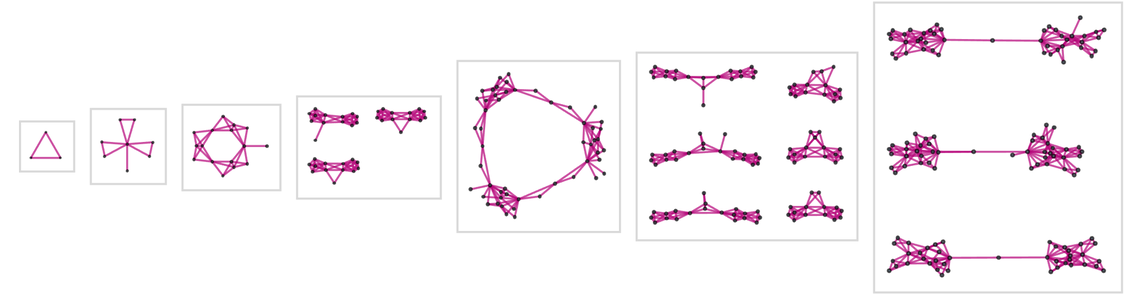

|

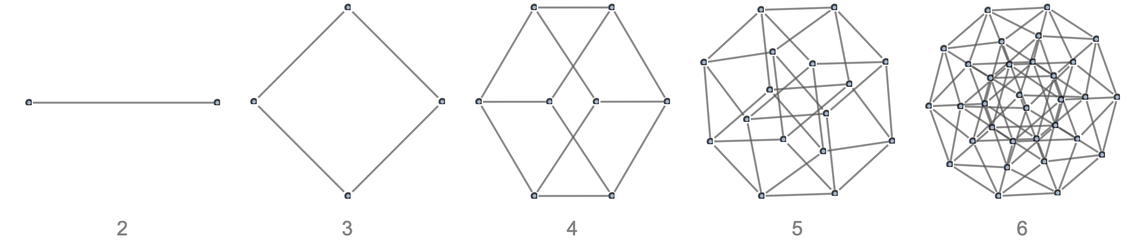

Conflating the pairs of edges going in opposite directions, the resulting graphs starting from any expression involving s ∘’s (and s + 1 distinct variables) are:

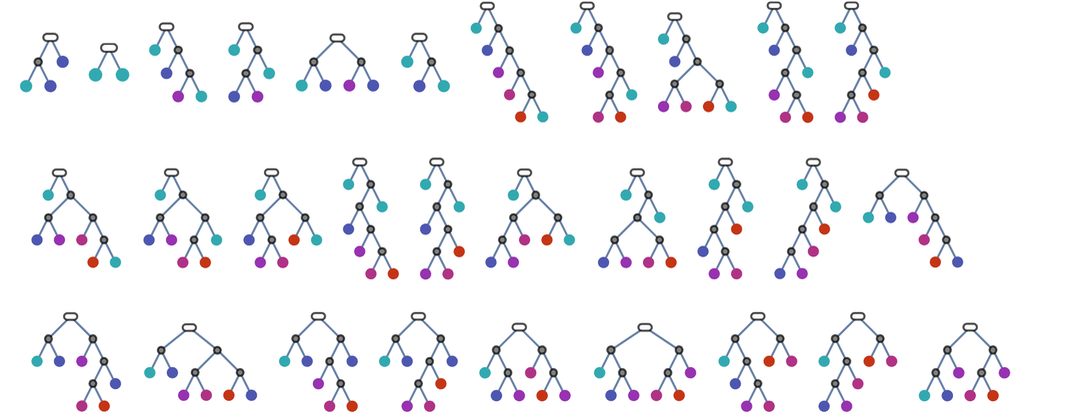

|

And these are just the Boolean hypercubes, each with 2^s nodes.
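The hypercube structure can be checked directly with a short Python sketch. The representation is an assumption made for illustration: an expression is a nested pair `(left, right)` standing for `left ∘ right`, and a variable is a plain string.

```python
def swaps(expr):
    """Yield every expression obtained by applying x∘y -> y∘x at one position."""
    if isinstance(expr, str):
        return  # a bare variable contains no ∘ to swap
    left, right = expr
    yield (right, left)                 # apply commutativity at the root
    for new_left in swaps(left):        # or somewhere inside the left subtree
        yield (new_left, right)
    for new_right in swaps(right):      # or somewhere inside the right subtree
        yield (left, new_right)

def multiway_graph(start):
    """Return (nodes, undirected edges) reachable from start."""
    nodes, edges, frontier = {start}, set(), [start]
    while frontier:
        expr = frontier.pop()
        for nxt in swaps(expr):
            edges.add(frozenset((expr, nxt)))
            if nxt not in nodes:
                nodes.add(nxt)
                frontier.append(nxt)
    return nodes, edges

# Three ∘'s and four distinct variables: (a∘b)∘(c∘d)
nodes, edges = multiway_graph((("a", "b"), ("c", "d")))
print(len(nodes), len(edges))
```

With s = 3 this gives 8 nodes and 12 edges, the node and edge counts of a 3-dimensional cube. Each of the s operator positions can be flipped independently, which is why the count is 2^s.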

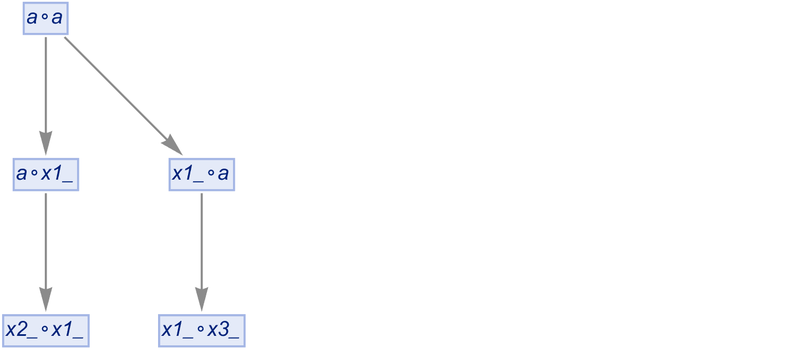

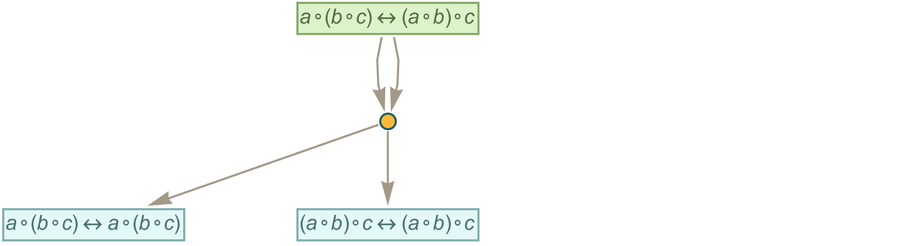

If instead of commutativity we consider the associativity axiom

|

|

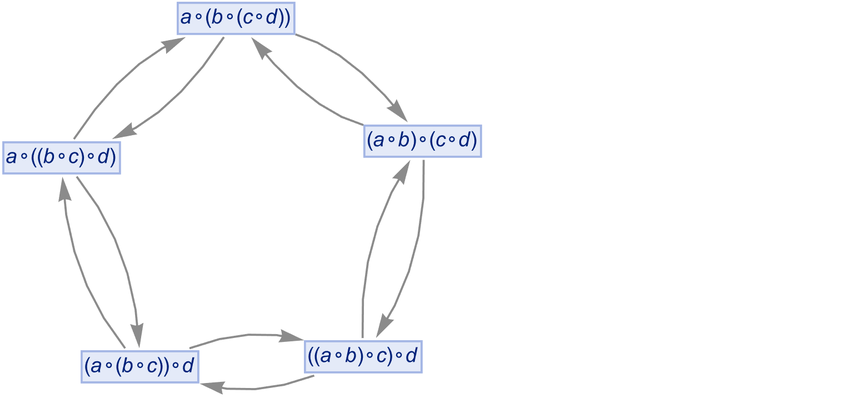

then we get a simple “ring” multiway graph:

|

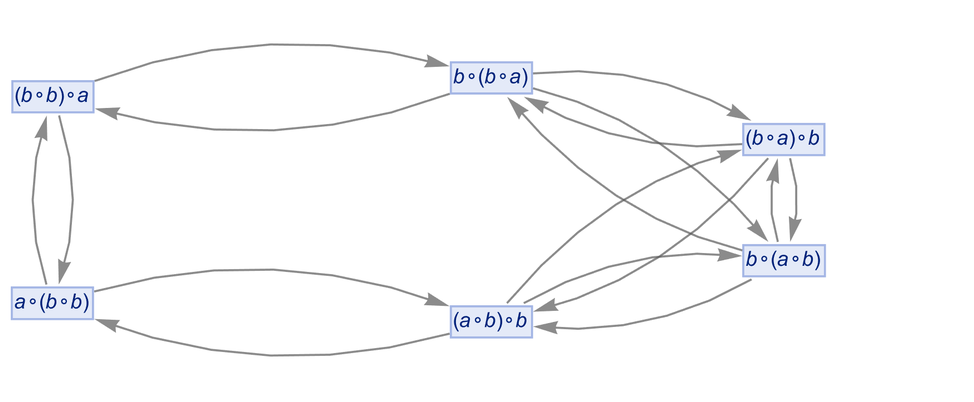

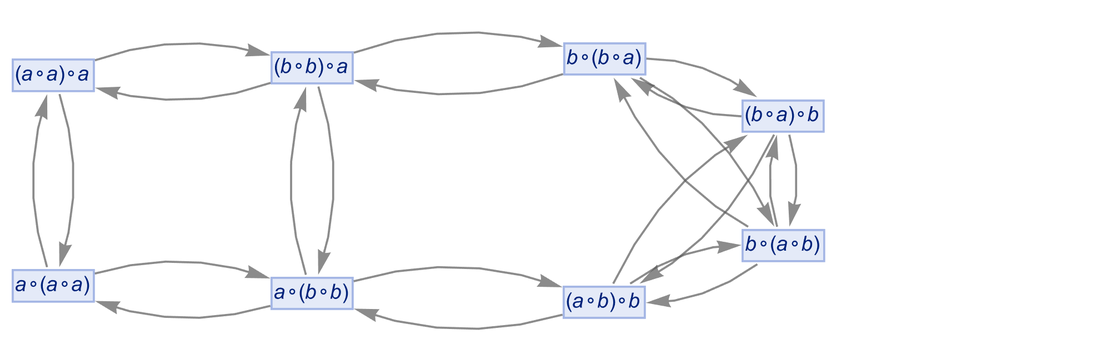

With both associativity and commutativity we get:

|

What is the mathematical significance of this object? We can think of our axioms as being the general axioms for a commutative semigroup. And if we build a multiway graph—say starting with ![]()—we’ll find out what expressions are equivalent to ![]() in any commutative semigroup—or, in other words, we’ll get a collection of theorems that are “true for any commutative semigroup”:

|

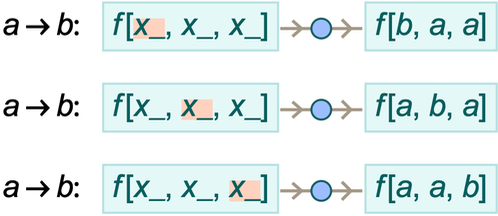

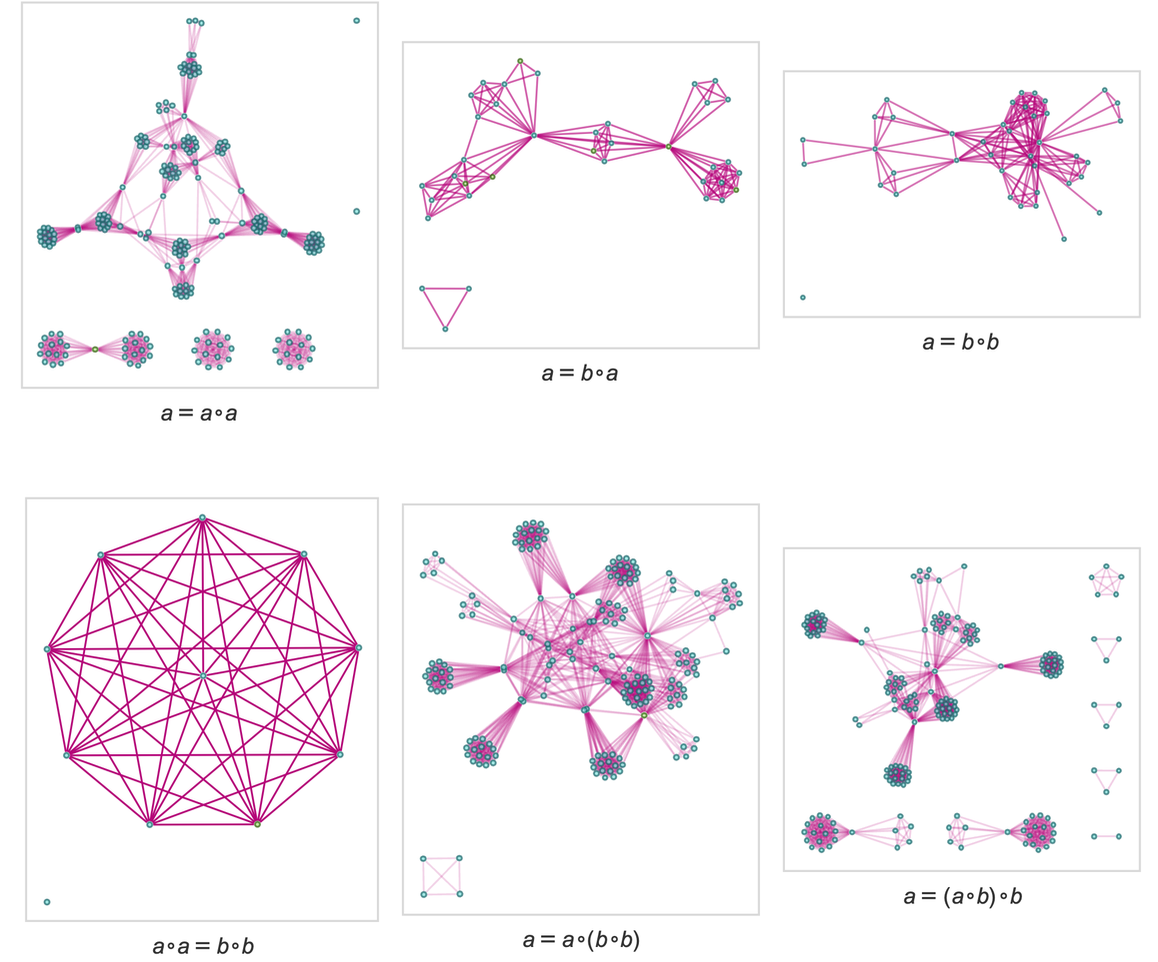

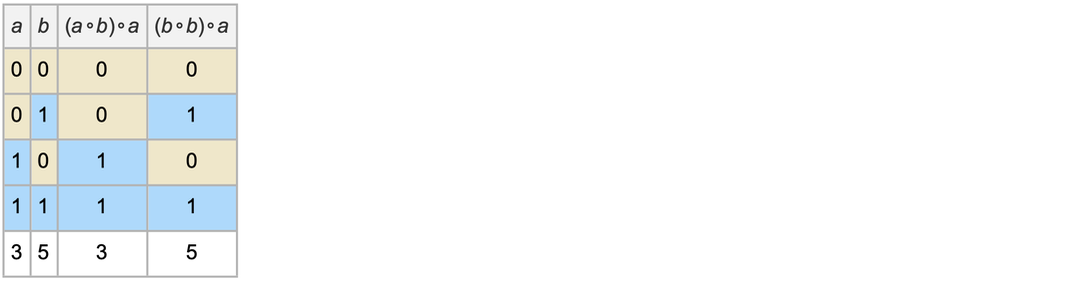

But what if we want to deal with a “specific semigroup” rather than a generic one? We can think of our symbols a and b as generators of the semigroup, and then we can add relations, as in:

|

|

And the result of this will be that we get more equivalences between expressions:

|

The multiway graph here is still finite, however, giving a finite number of equivalences. But let’s say instead that we add the relations:

|

|

Then if we start from a we get a multiway graph that begins like

|

but just keeps growing forever (here shown after 6 steps):

|

And what this then means is that there are an infinite number of equivalences between expressions. We can think of our basic symbols a and b as being generators of our semigroup. Then our expressions correspond to “words” in the semigroup formed from these generators. The fact that the multiway graph is infinite then tells us that there are an infinite number of equivalences between words.

But when we think about the semigroup mathematically we’re typically not so interested in specific words as in the overall “distinct elements” in the semigroup, or in other words, in those “clusters of words” that don’t have equivalences between them. And to find these we can imagine starting with all possible expressions, then building up multiway graphs from them. Many of the graphs grown from different expressions will join up. But what we want to know in the end is how many disconnected graph components are ultimately formed. And each of these will correspond to an element of the semigroup.

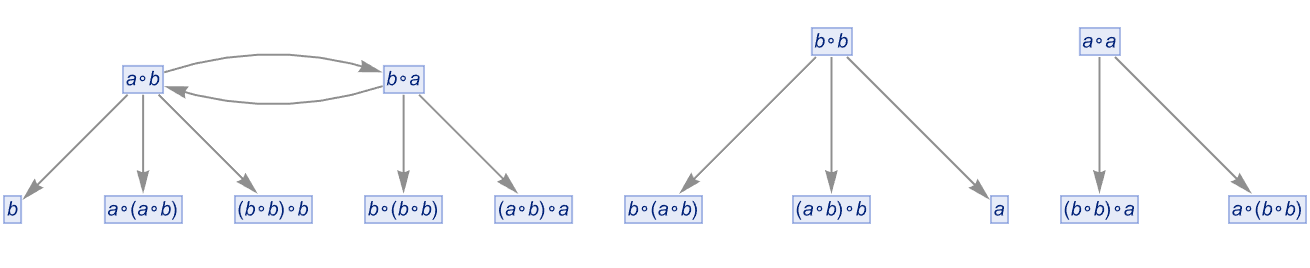

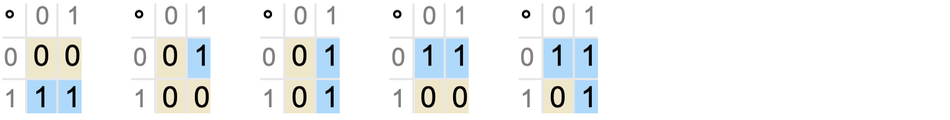

As a simple example, let’s start from all words of length 2:

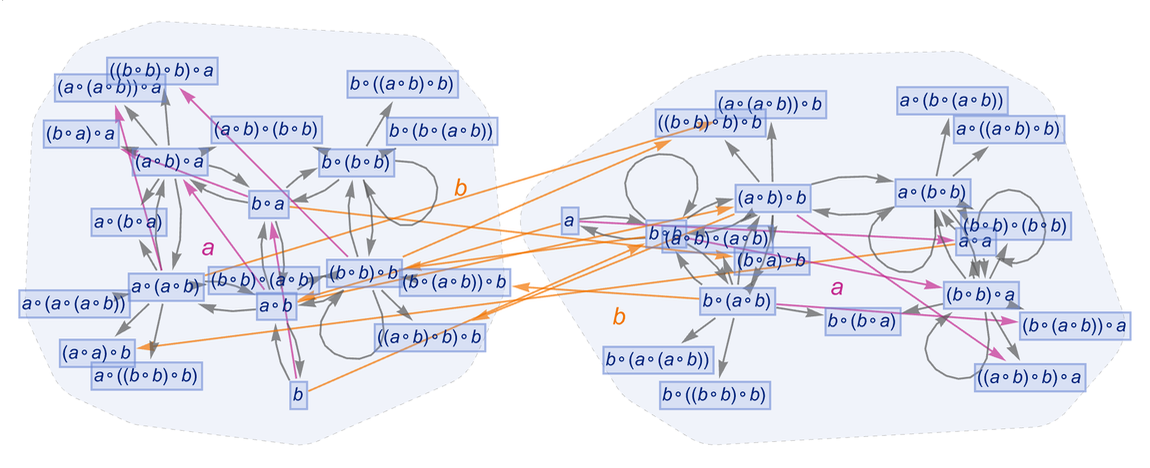

|

|

The multiway graphs formed from each of these after 1 step are:

|

But these graphs in effect “overlap”, leaving three disconnected components:

|

After 2 steps the corresponding result has two components:

|

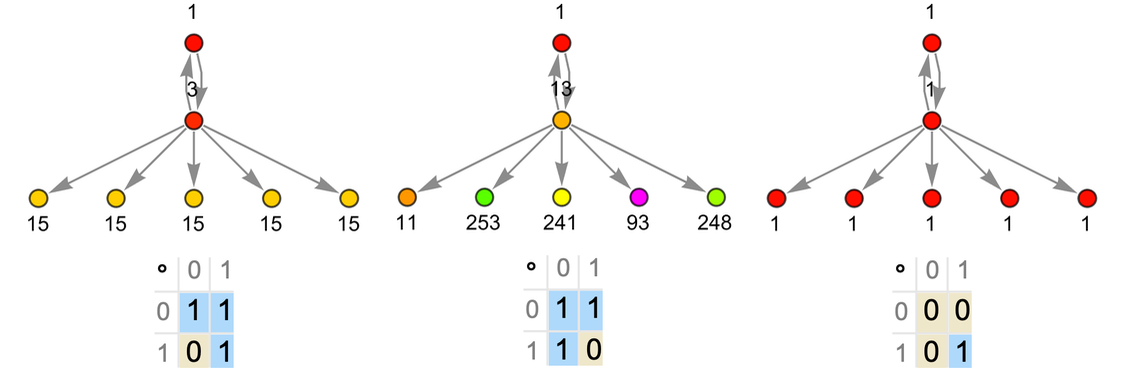

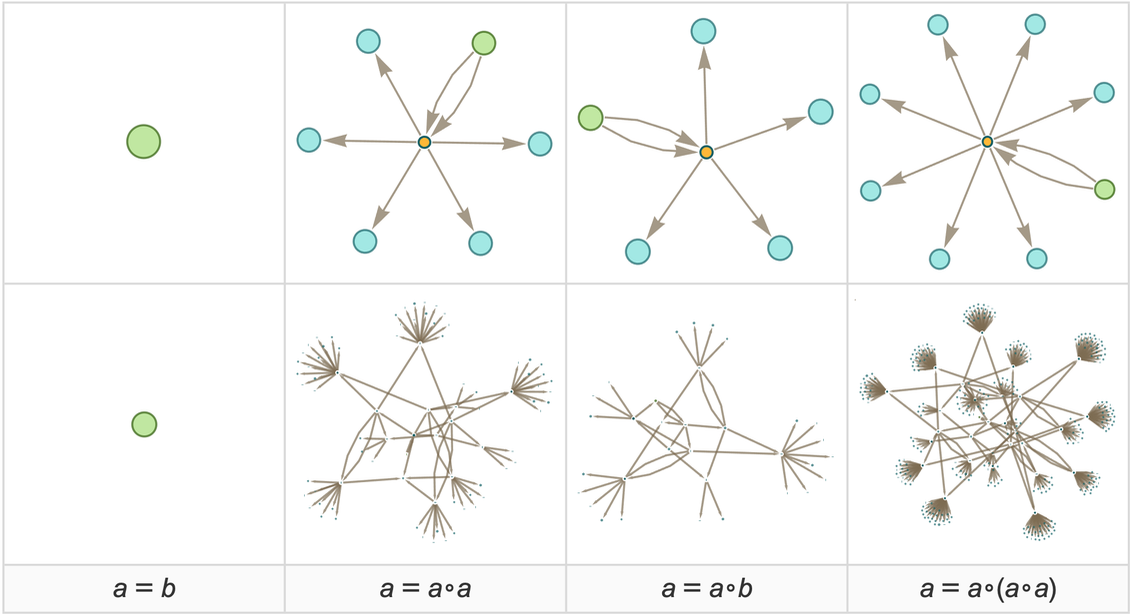

And if we start with longer (or shorter) words, and run for more steps, we’ll keep finding the same result: that there are just two disconnected “droplets” that “condense out” of the “gas” of all possible initial words:

|

And what this means is that our semigroup ultimately has just two distinct elements—each of which can be represented by any of the different (“equivalent”) words in each “droplet”. (In this particular case the droplets just contain respectively all words with an odd or even number of b’s.)
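To make the idea of "droplets" concrete, here is a small Python sketch that counts connected components among all words up to a given length. It makes two simplifying assumptions: words are plain strings (so associativity is implicit), and the relations are hypothetical ones chosen so that the answer can be checked by hand. They are not the relations shown in the figures above.

```python
from itertools import product

# Hypothetical relations: each left side may be replaced by its right side
# (and vice versa) anywhere in a word.
RELATIONS = [("aa", "a"), ("ab", "b"), ("ba", "b"), ("bb", "a")]

def droplets(max_length, relations=RELATIONS):
    """Group all words over {a, b} up to max_length into connected components."""
    words = ["".join(w) for n in range(1, max_length + 1)
             for w in product("ab", repeat=n)]
    parent = {w: w for w in words}

    def find(w):
        while parent[w] != w:
            parent[w] = parent[parent[w]]   # path halving
            w = parent[w]
        return w

    for word in words:
        for lhs, rhs in relations:
            i = word.find(lhs)
            while i != -1:
                # Every relation here shortens the word, so the result is
                # always another word in our finite collection.
                shorter = word[:i] + rhs + word[i + len(lhs):]
                parent[find(word)] = find(shorter)
                i = word.find(lhs, i + 1)

    groups = {}
    for w in words:
        groups.setdefault(find(w), set()).add(w)
    return list(groups.values())

for component in droplets(3):
    print(sorted(component, key=lambda w: (len(w), w)))
```

For these particular relations every rewrite preserves the parity of the number of b's, and every word can be shrunk down to a single letter, so exactly two components appear: one containing `a` and one containing `b`.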

In the mathematical analysis of semigroups (as well as groups), it’s common to ask what happens if one forms products of elements. In our setting what this means is in effect that one wants to “combine droplets using ∘”. The simplest words in our two droplets are respectively ![]() and ![](). And we can use these as “representatives of the droplets”. Then we can see how multiplication by ![]() and by ![]() transforms words from each droplet:

|

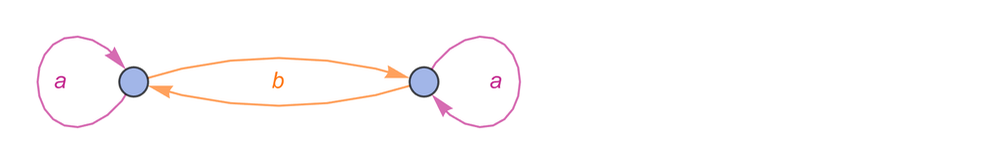

With only finite words the multiplications will sometimes not “have an immediate target” (so they are not indicated here). But in the limit of an infinite number of multiway steps, every multiplication will “have a target” and we’ll be able to summarize the effect of multiplication in our semigroup by the graph:

|

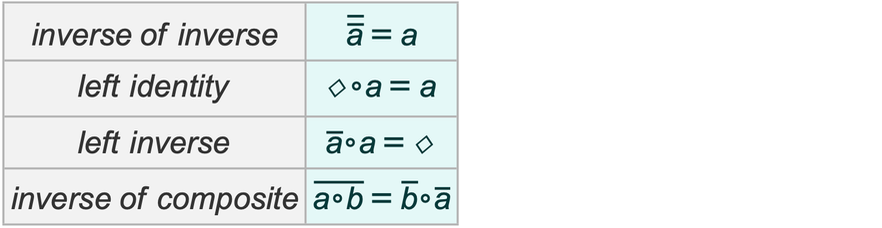

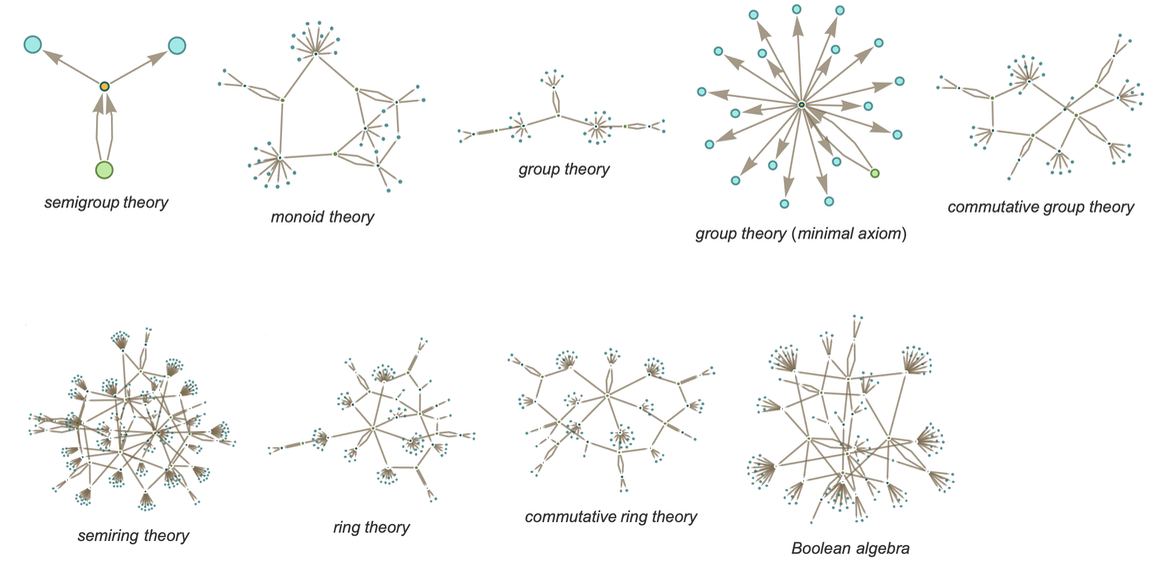

More familiar as mathematical objects than semigroups are groups. And while their axioms are slightly more complicated, the basic setup we’ve discussed for semigroups also applies to groups. And indeed the graph we’ve just generated for our semigroup is very much like a standard Cayley graph that we might generate for a group—in which the nodes are elements of the group and the edges define how one gets from one element to another by multiplying by a generator. (One technical detail is that in Cayley graphs identity-element self-loops are normally dropped.)

Consider the group ![]() (the “Klein four-group”). In our notation the axioms for this group can be written:

|

|

Given these axioms we do the same construction as for the semigroup above. And what we find is that now four “droplets” emerge, corresponding to the four elements of ![]()

|

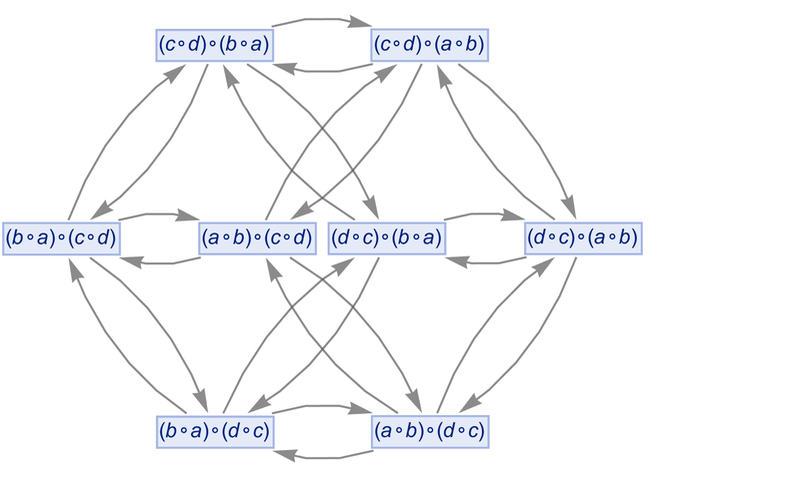

and the pattern of connections between them in the limit yields exactly the Cayley graph for ![]():

|
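The same four-element structure can be reproduced with a short Python sketch that works with normal forms instead of growing droplets. The presentation used here (a·a = e, b·b = e, b·a = a·b, with the empty string as the identity) is a standard one for the Klein four-group and is an assumption; it is not necessarily the form of the axioms written above.

```python
# Assumed presentation, written as reductions on strings ("" is the identity).
REDUCTIONS = [("aa", ""), ("bb", ""), ("ba", "ab")]

def normal_form(word):
    """Rewrite until no reduction applies."""
    changed = True
    while changed:
        changed = False
        for lhs, rhs in REDUCTIONS:
            if lhs in word:
                word = word.replace(lhs, rhs, 1)
                changed = True
    return word

def cayley_graph(generators="ab"):
    """Return {element: {generator: element·generator}}, closing up from the identity."""
    graph, frontier = {}, [""]
    while frontier:
        element = frontier.pop()
        if element in graph:
            continue
        graph[element] = {g: normal_form(element + g) for g in generators}
        frontier.extend(graph[element].values())
    return graph

for element, edges in sorted(cayley_graph().items()):
    print(repr(element), edges)
```

Each normal form plays the role of a droplet representative, and each dictionary entry is an edge of the Cayley graph labeled by the generator that was multiplied in.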

We can view what’s happening here as a first example of something we’ll return to at length later: the idea of “parsing out” recognizable mathematical concepts (here things like elements of groups) from lower-level “purely metamathematical” structures.

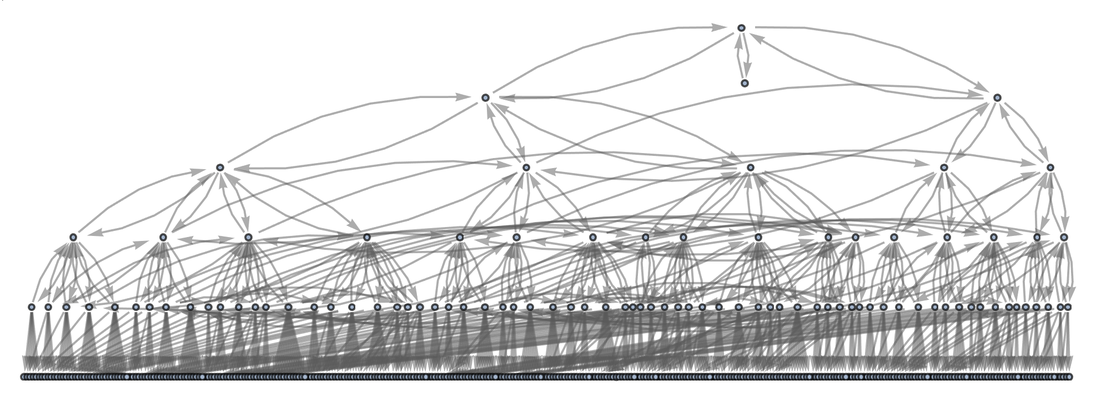

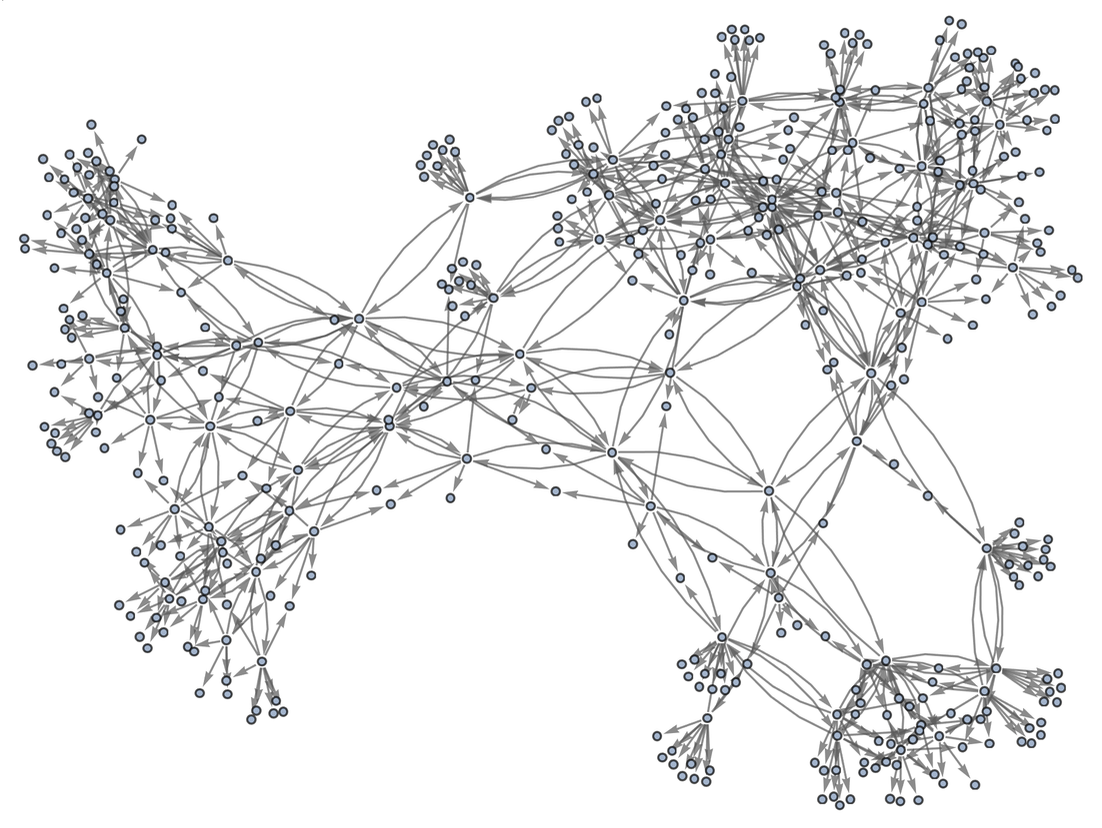

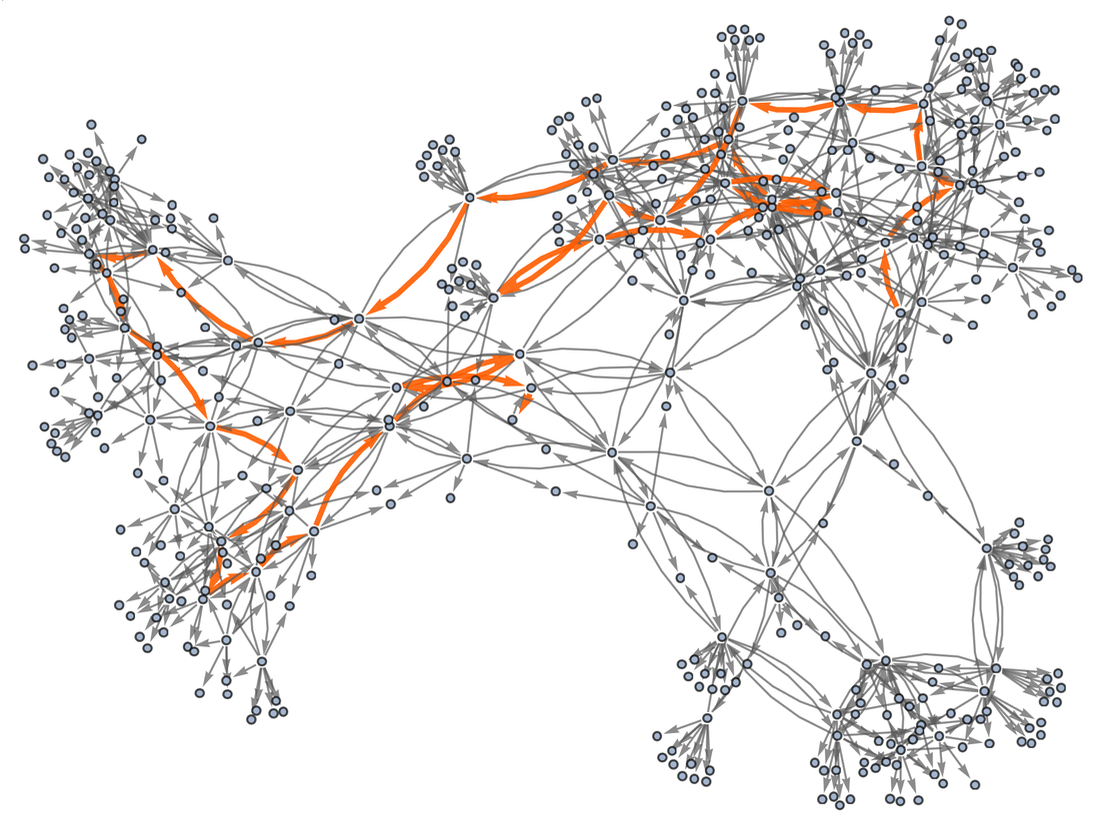

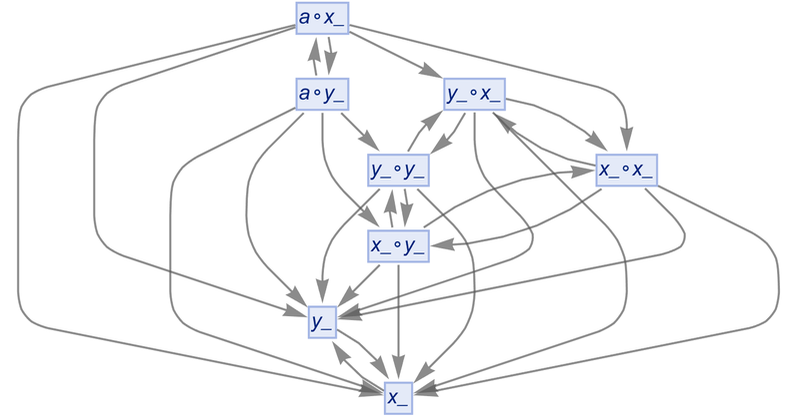

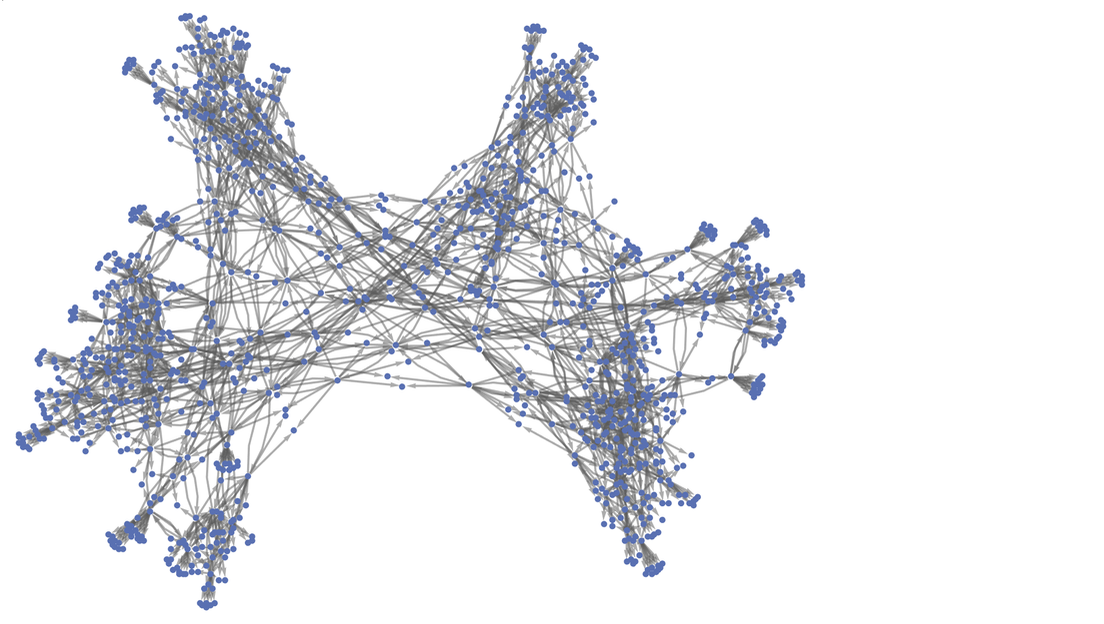

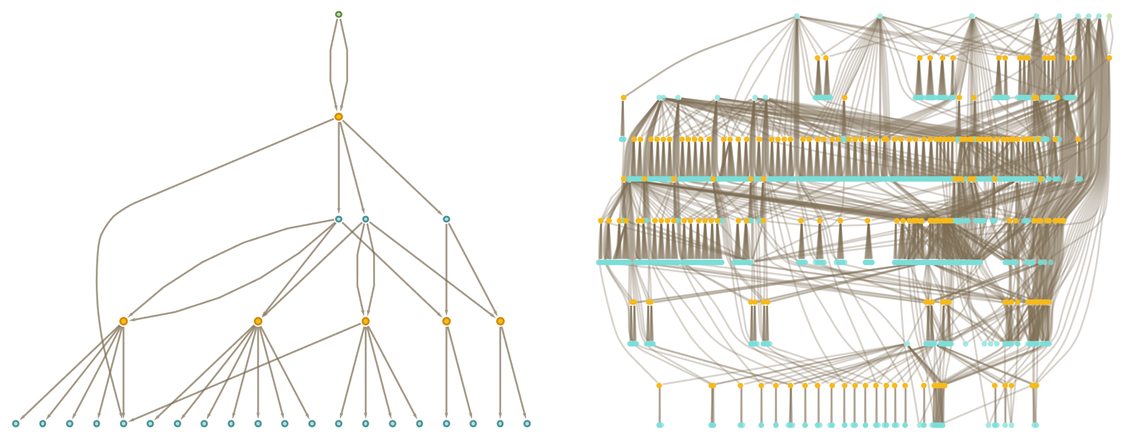

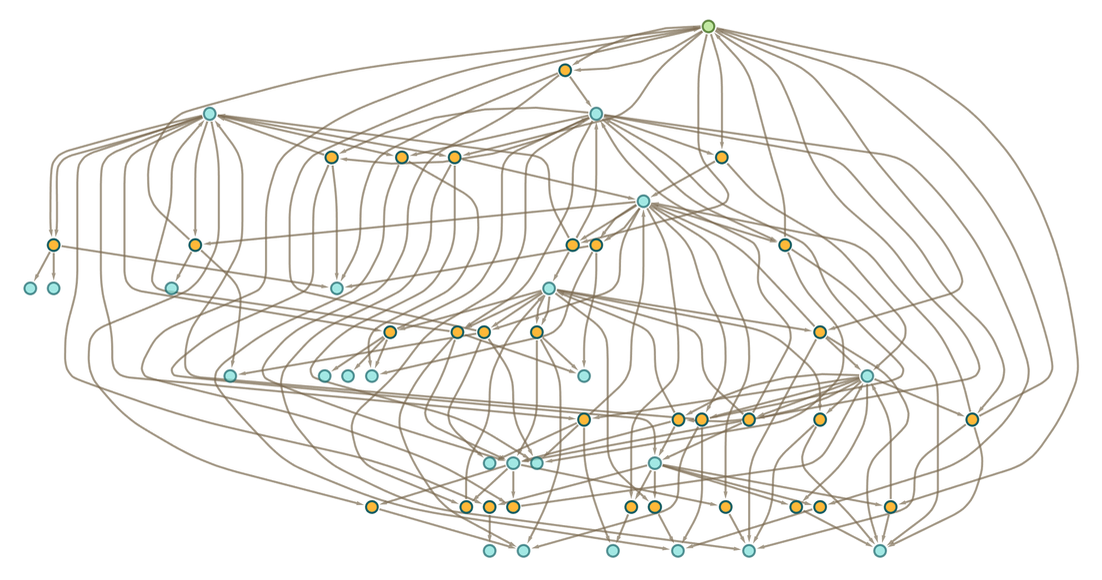

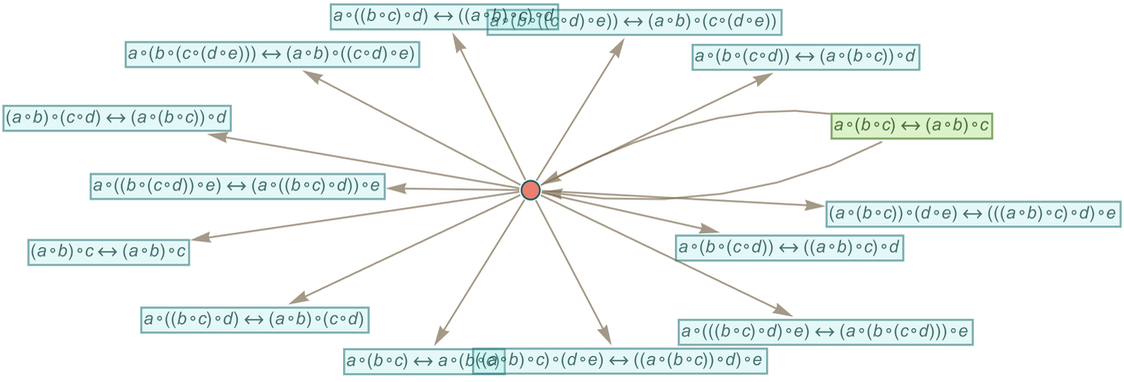

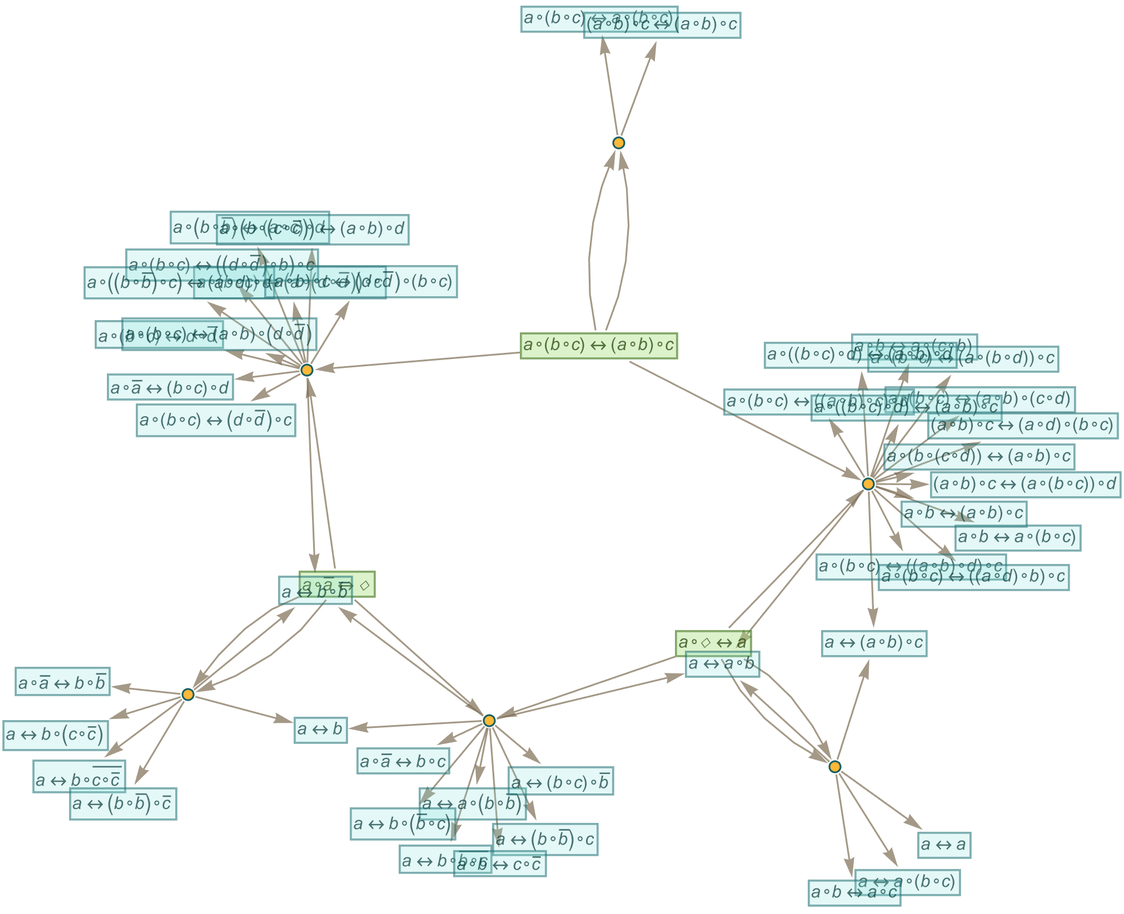

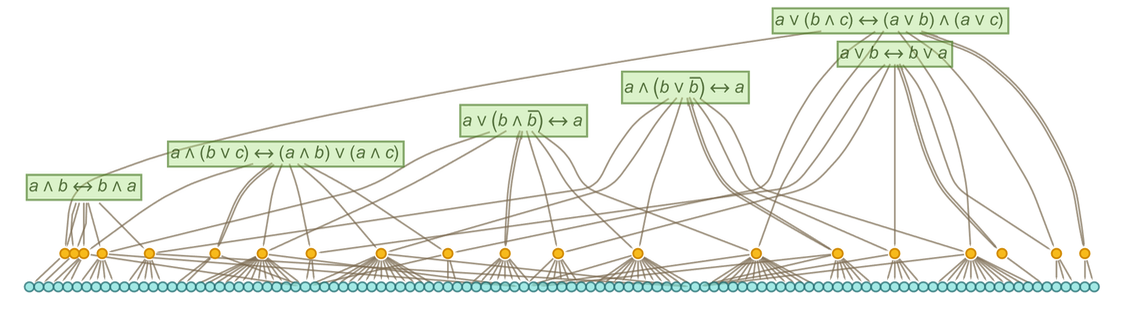

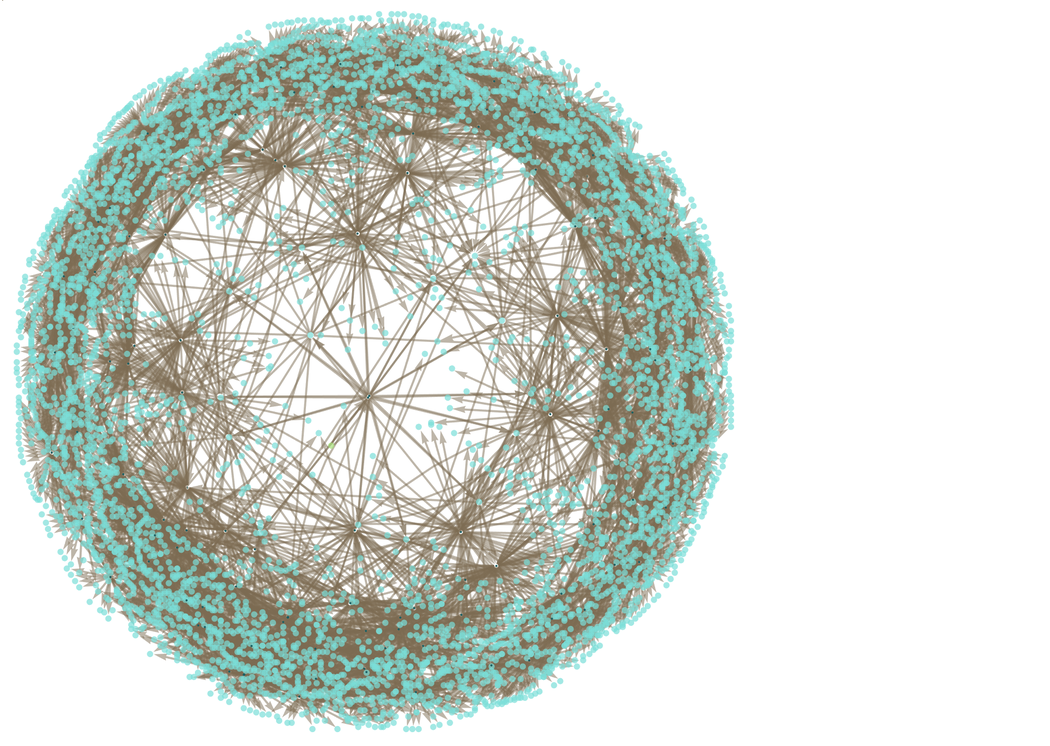

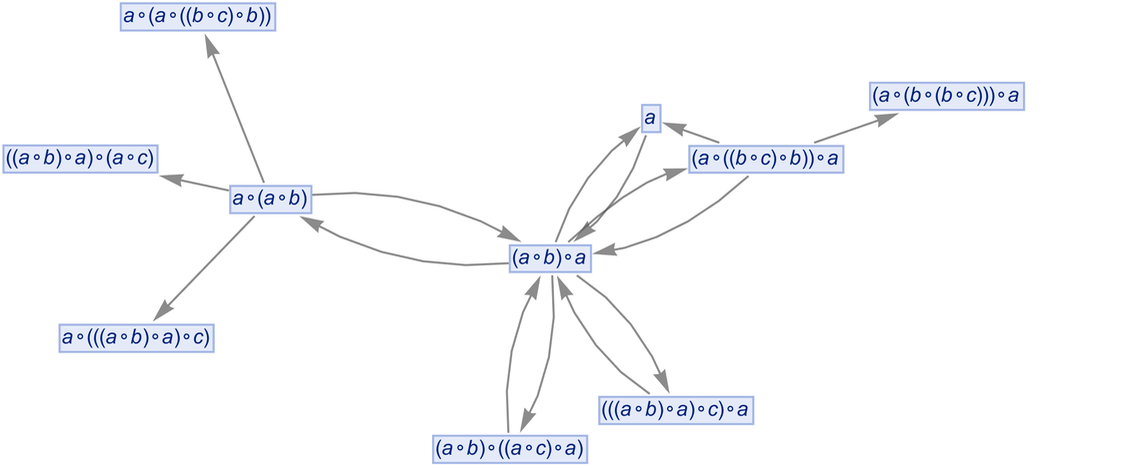

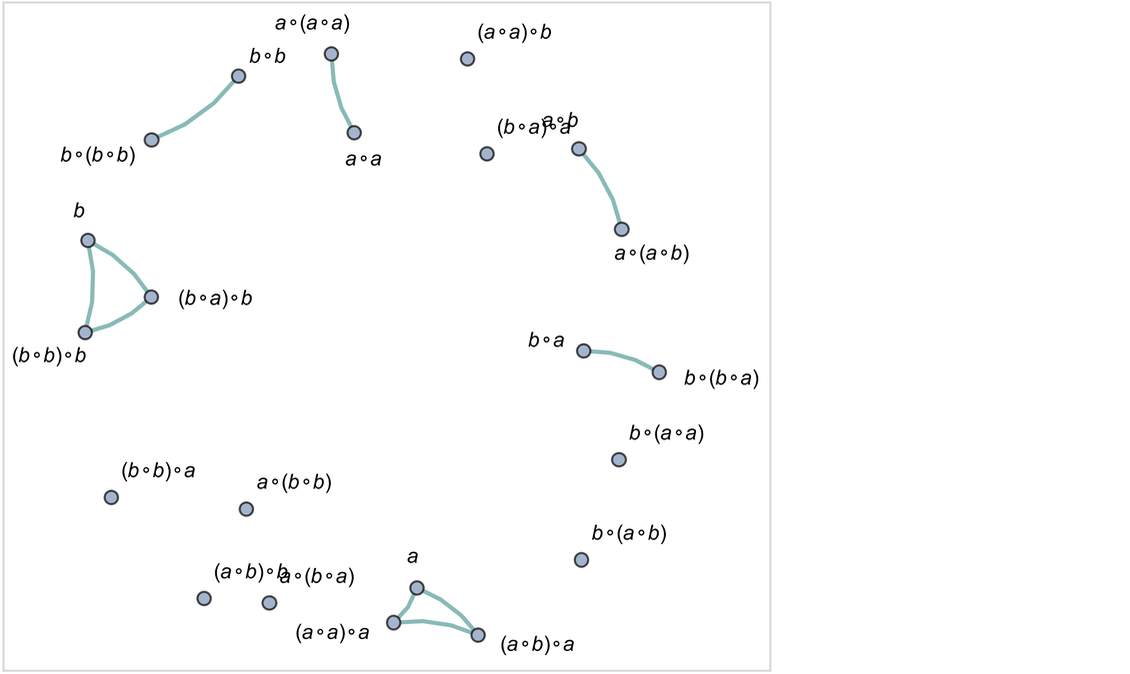

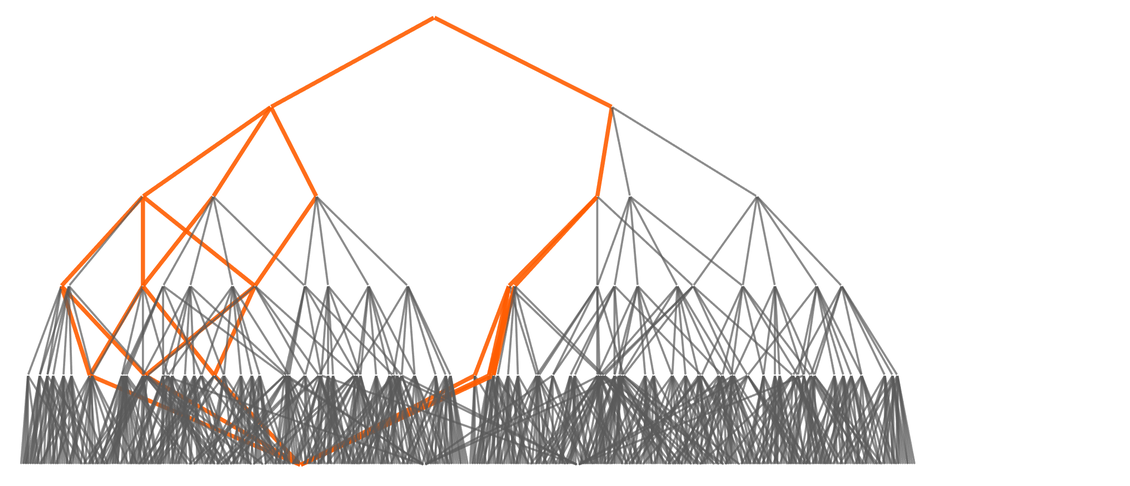

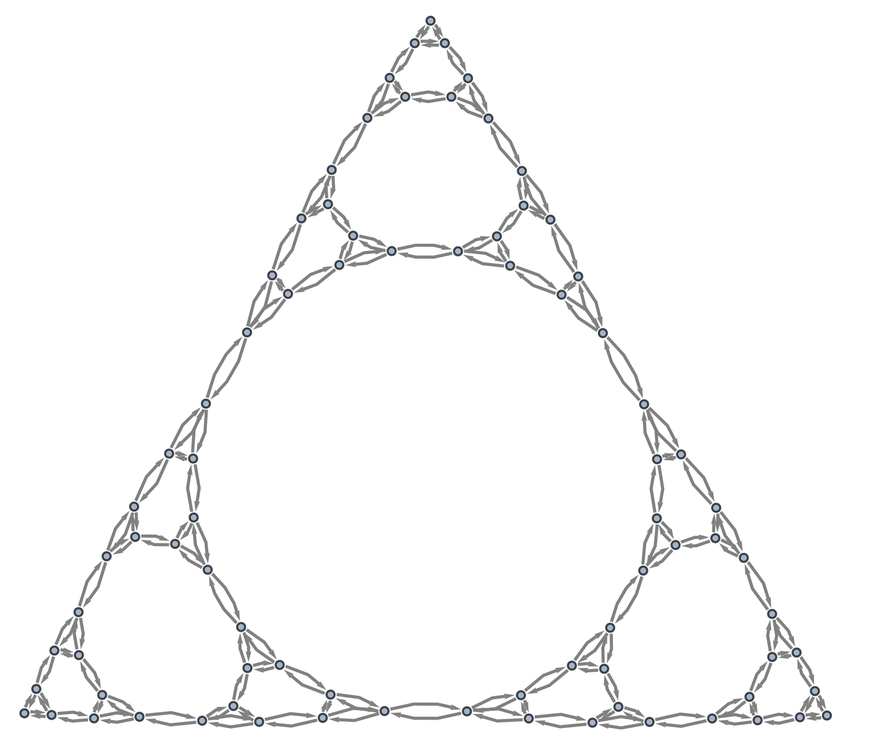

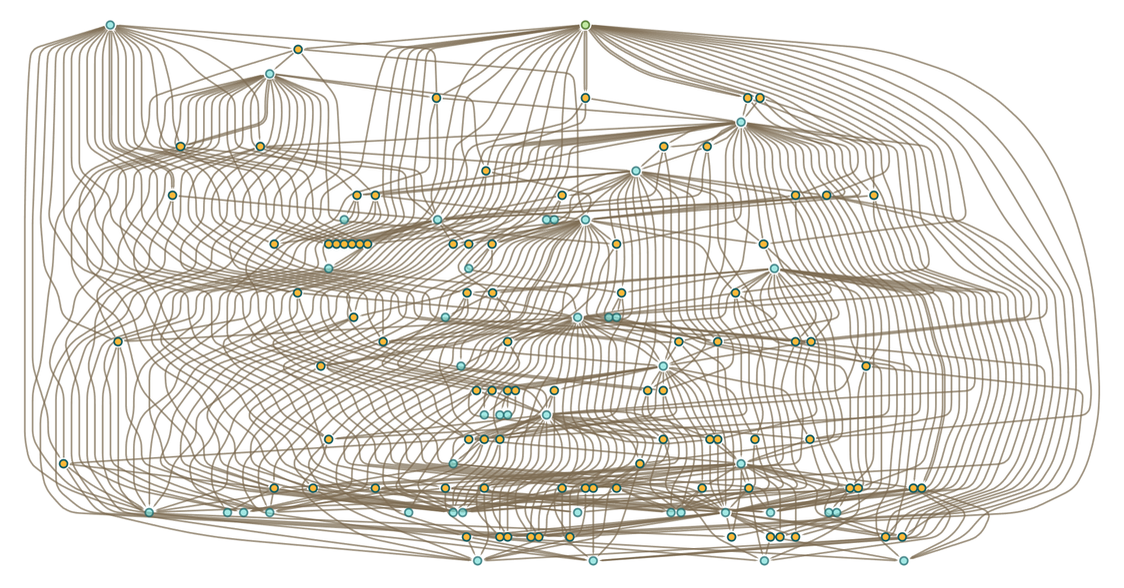

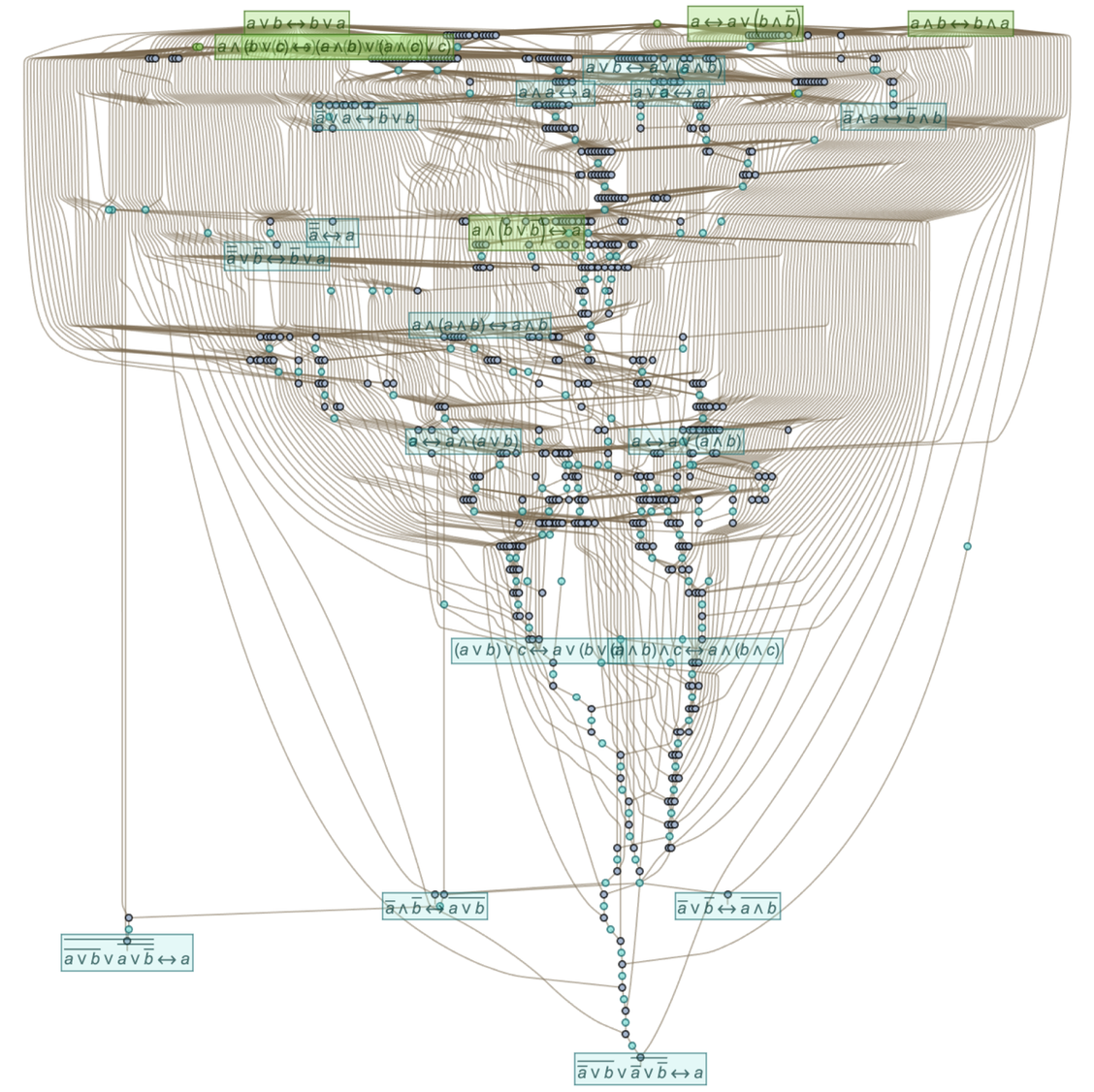

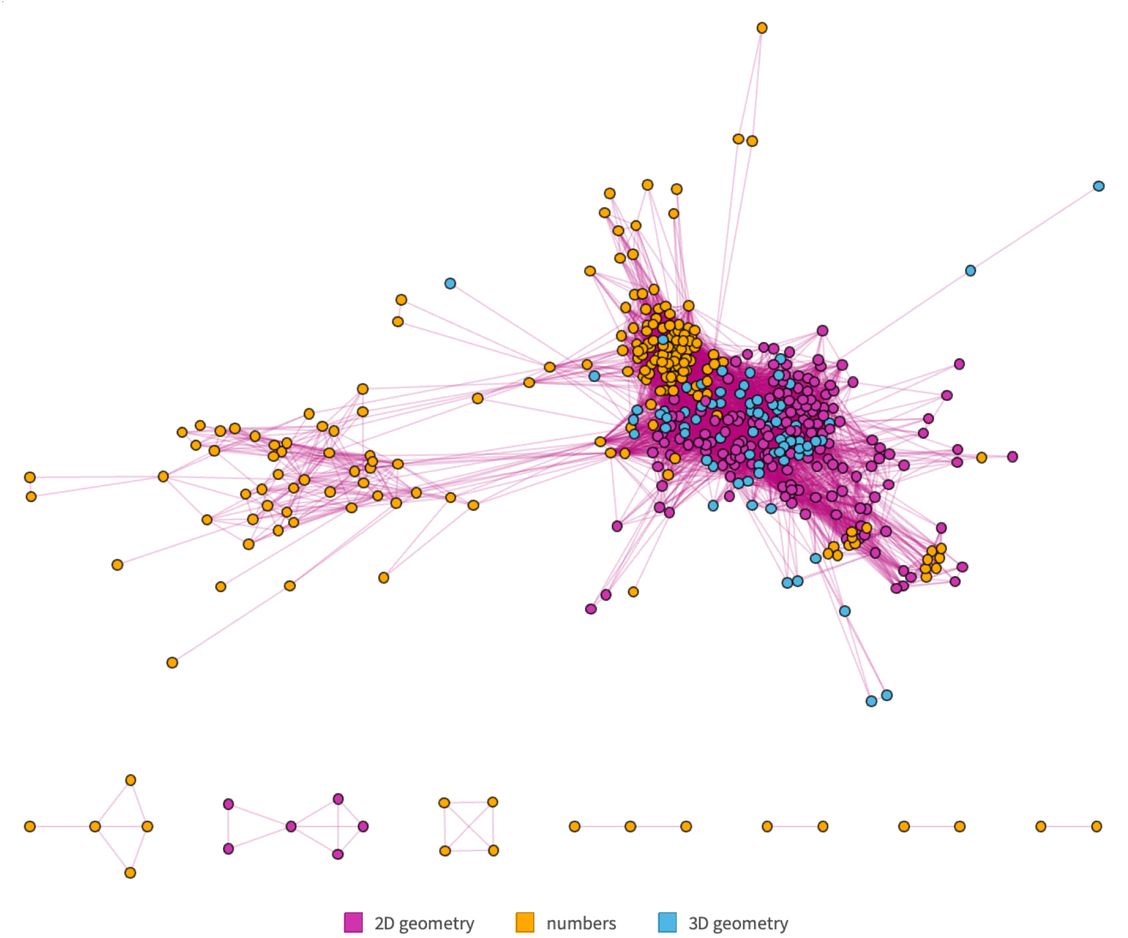

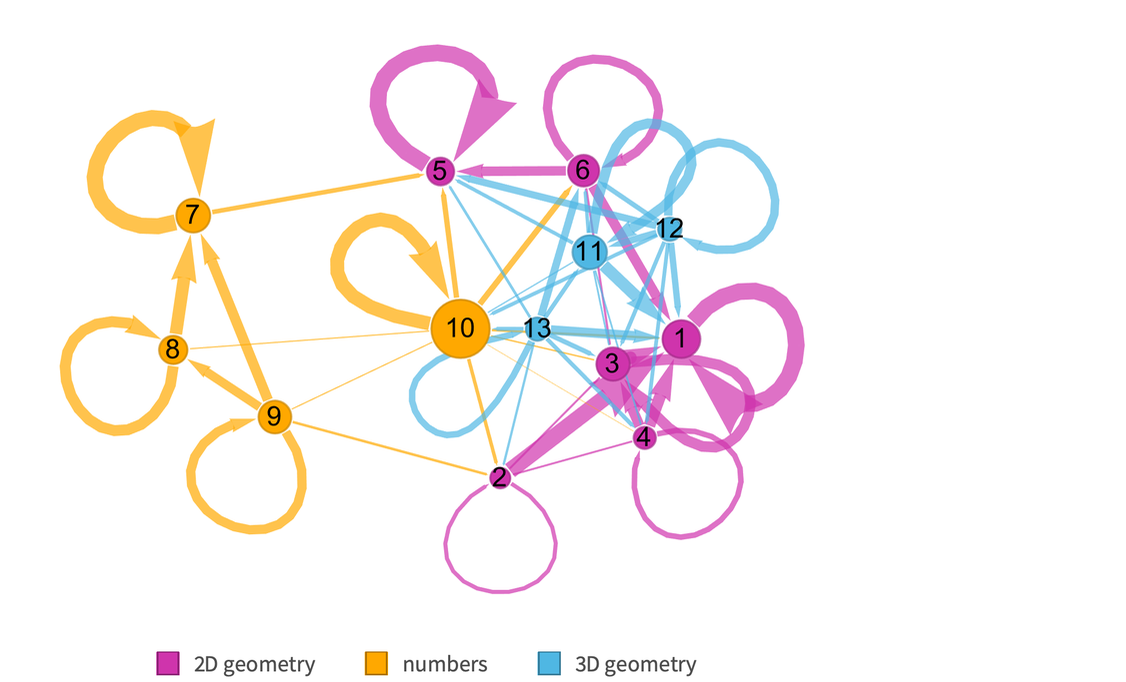

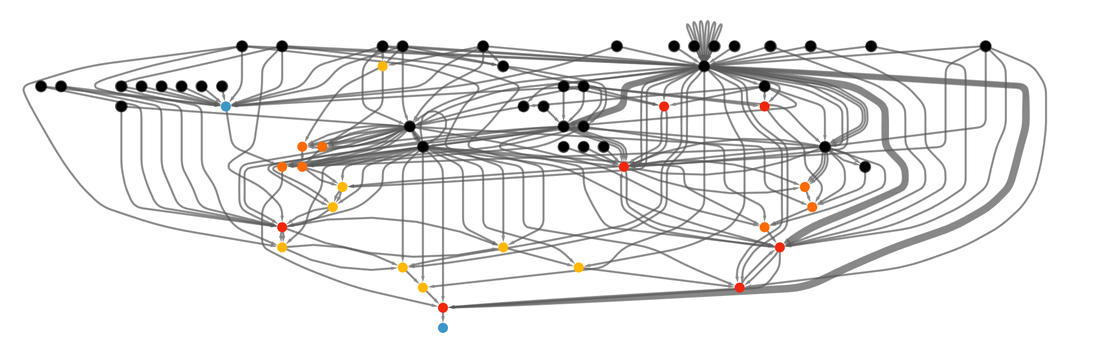

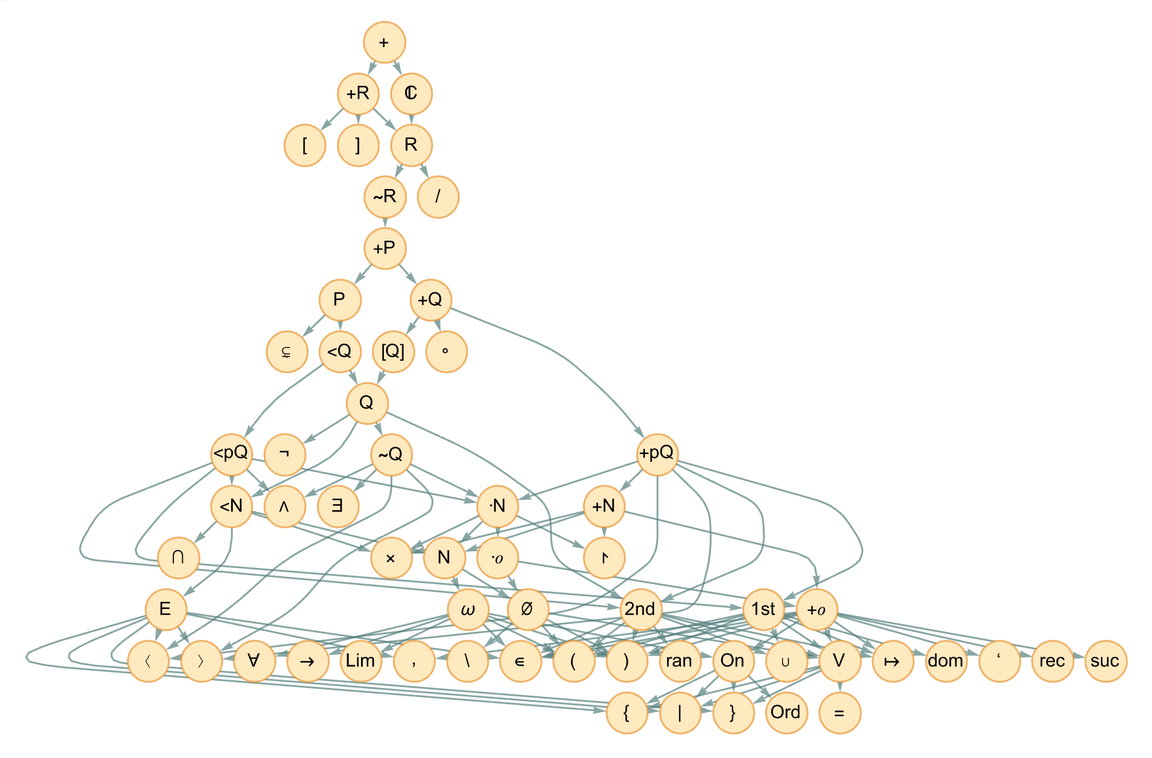

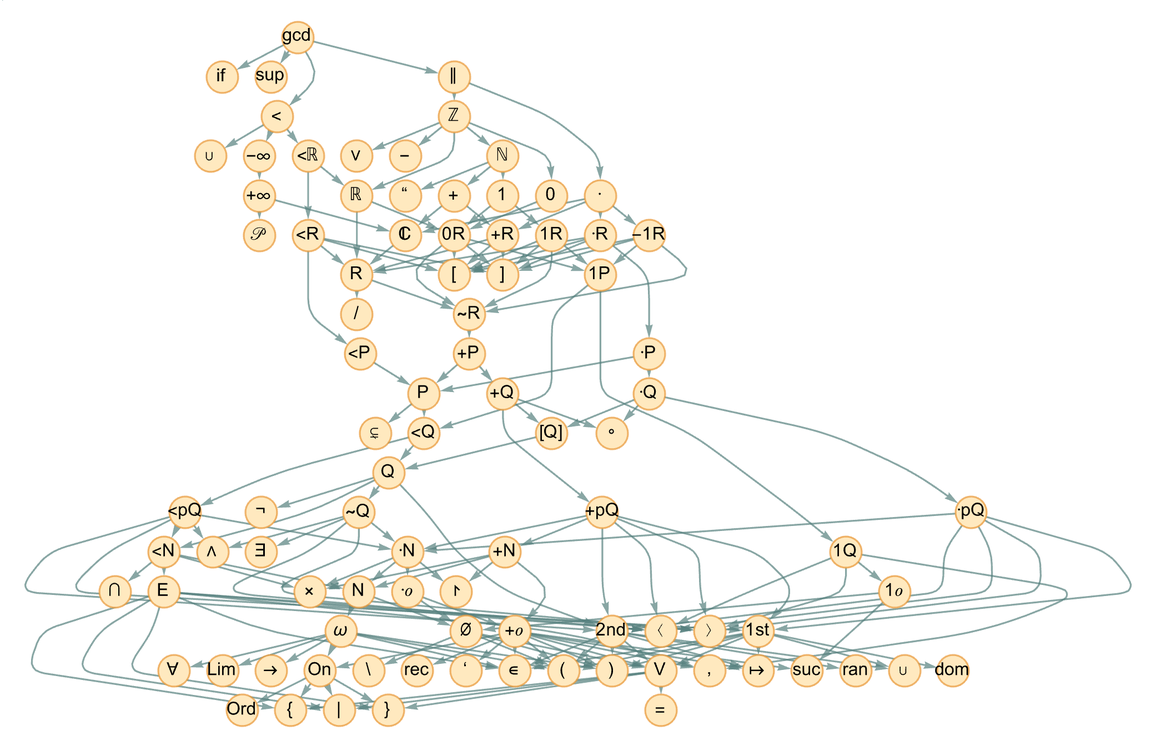

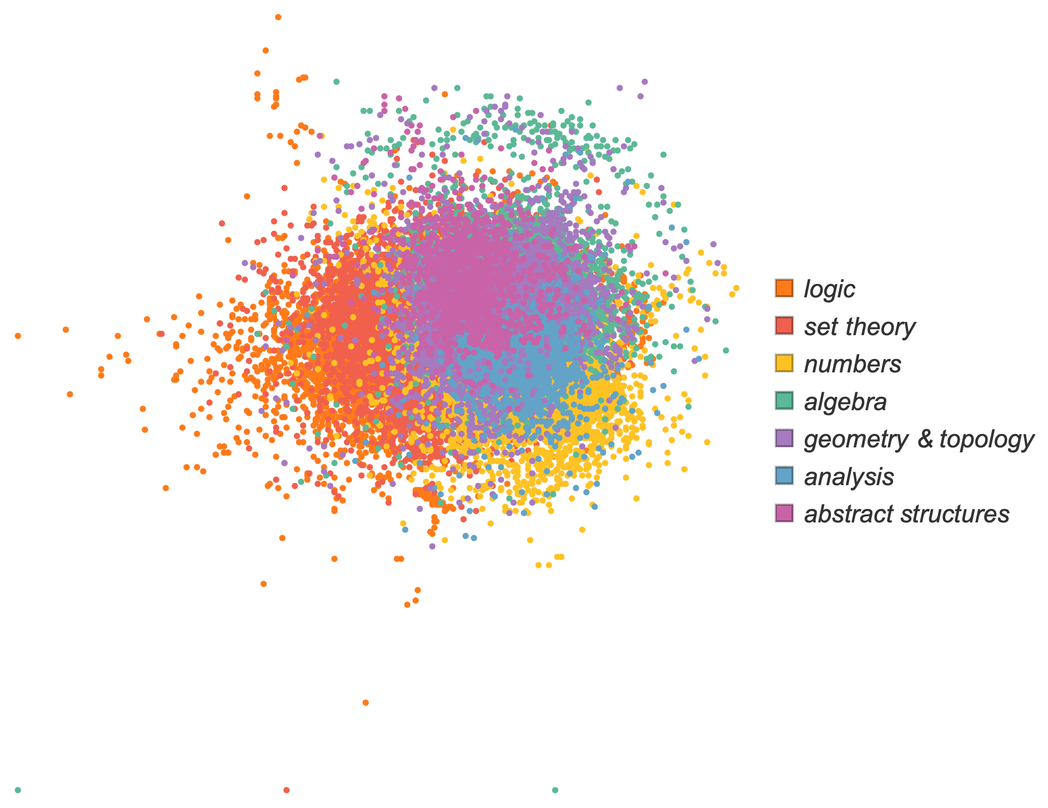

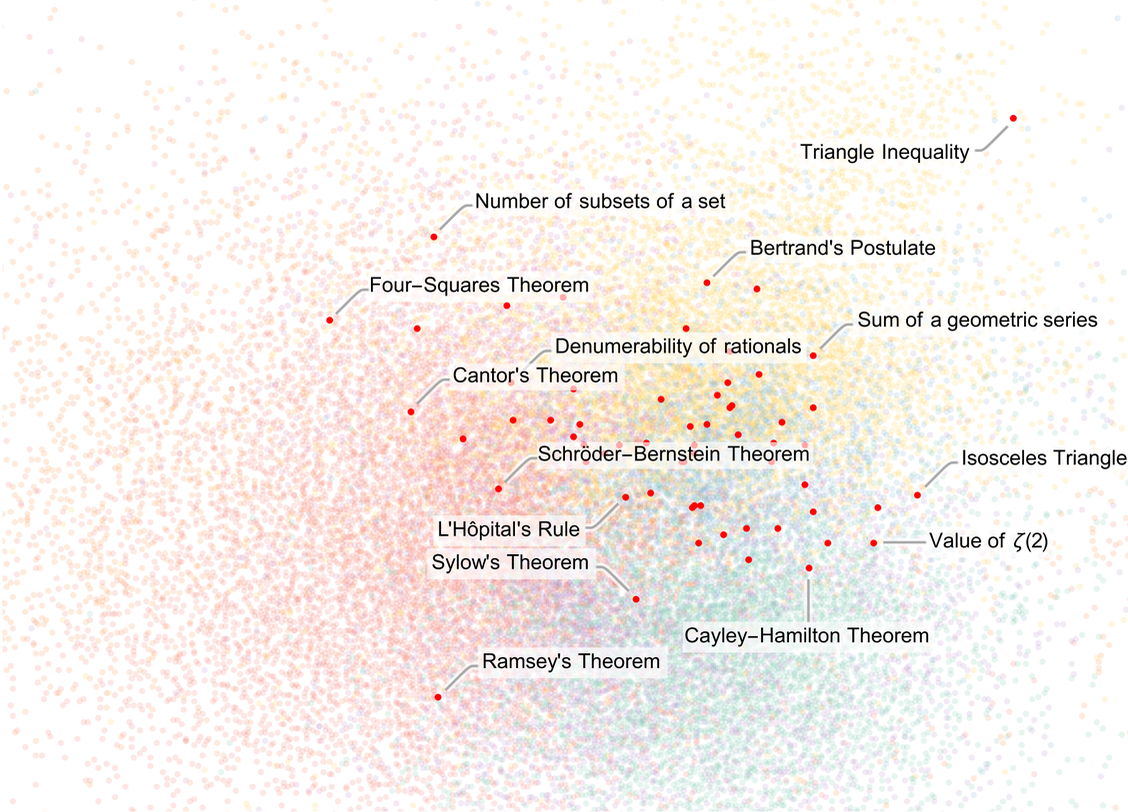

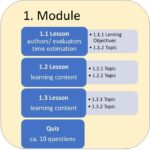

5 | Metamathematical Space

In multiway graphs like those we’ve shown in previous sections we routinely generate very large numbers of “mathematical” expressions. But how are these expressions related to each other? And in some appropriate limit can we think of them all being embedded in some kind of “metamathematical space”?

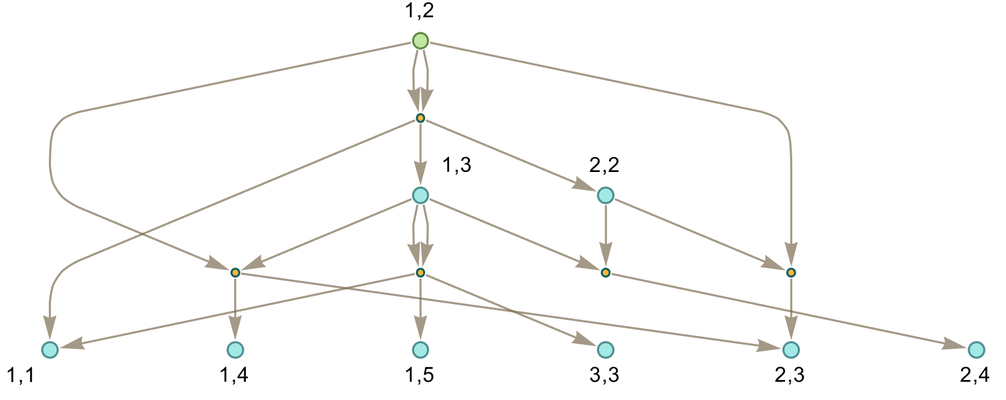

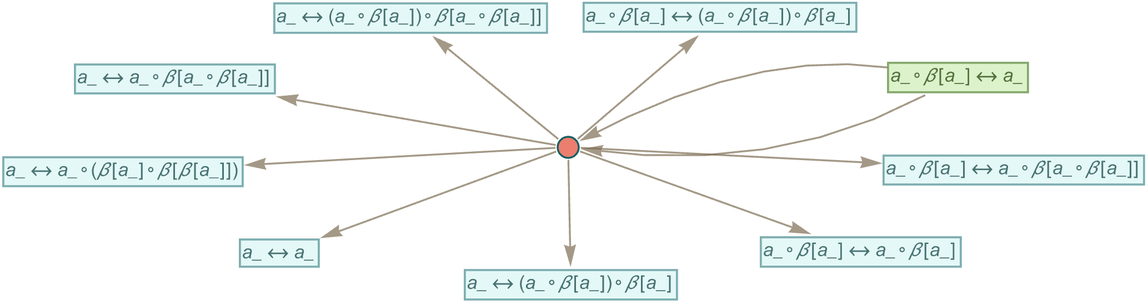

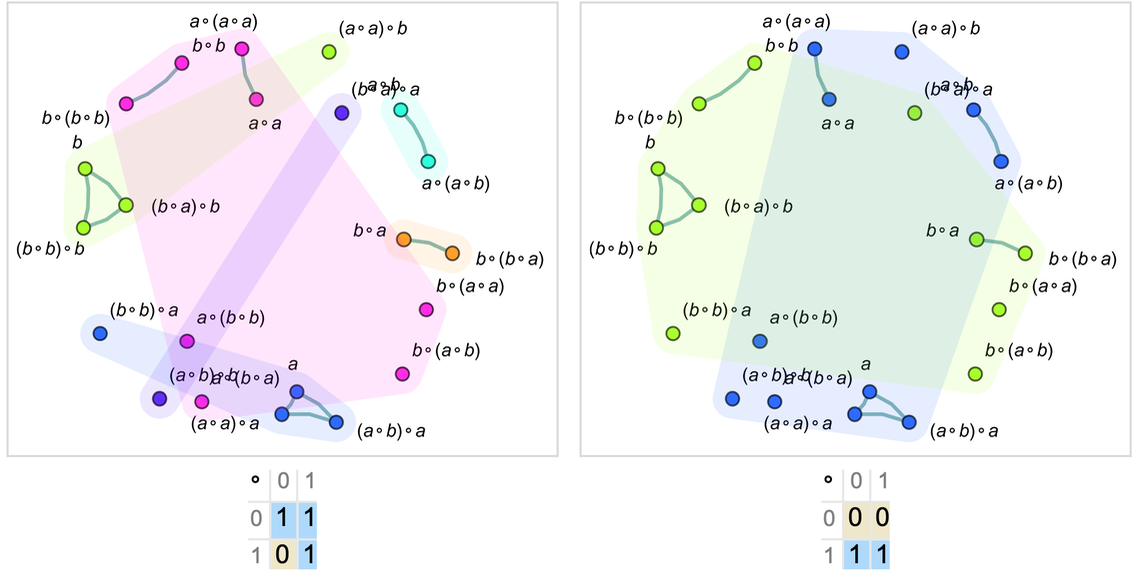

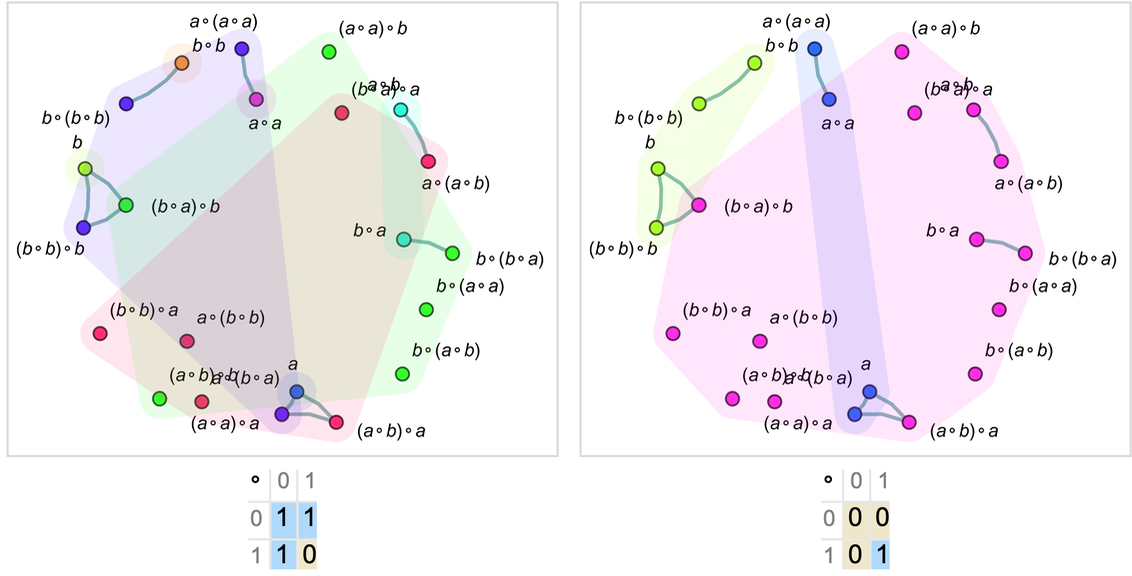

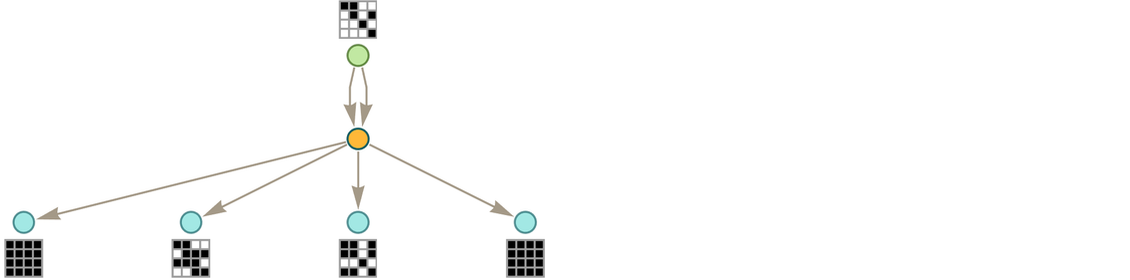

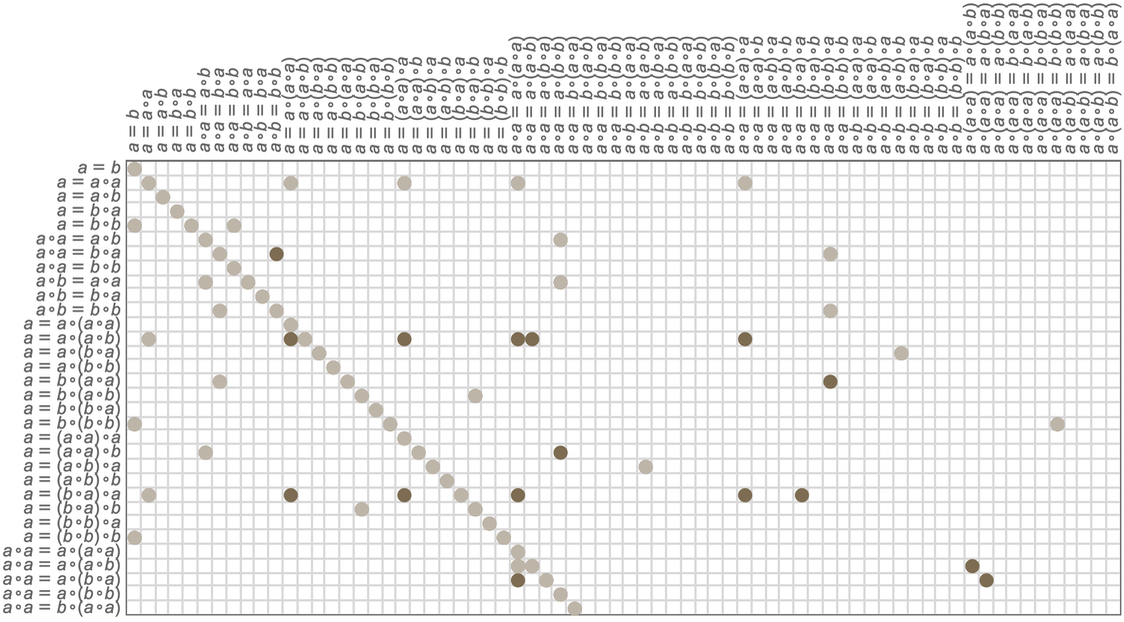

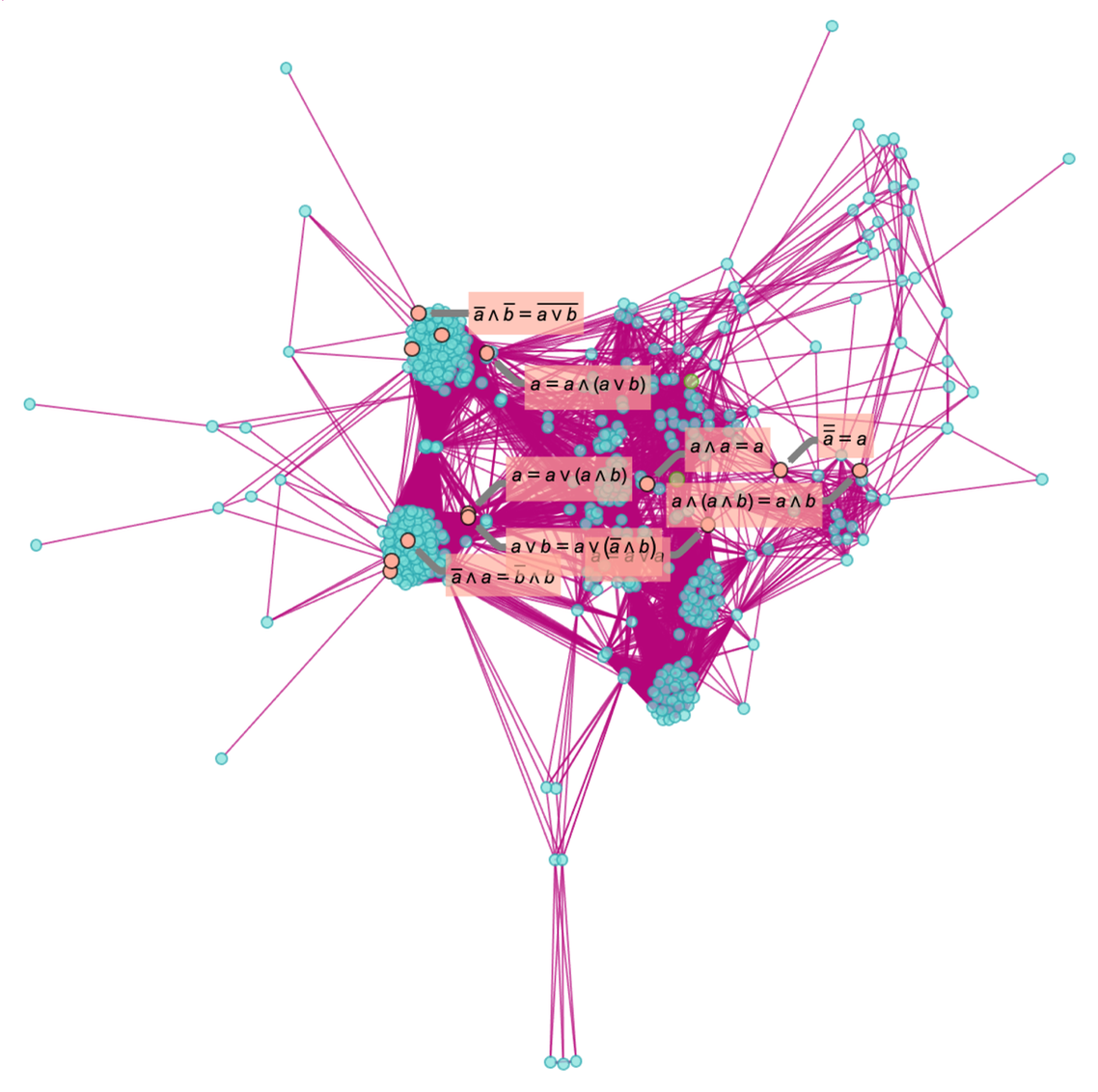

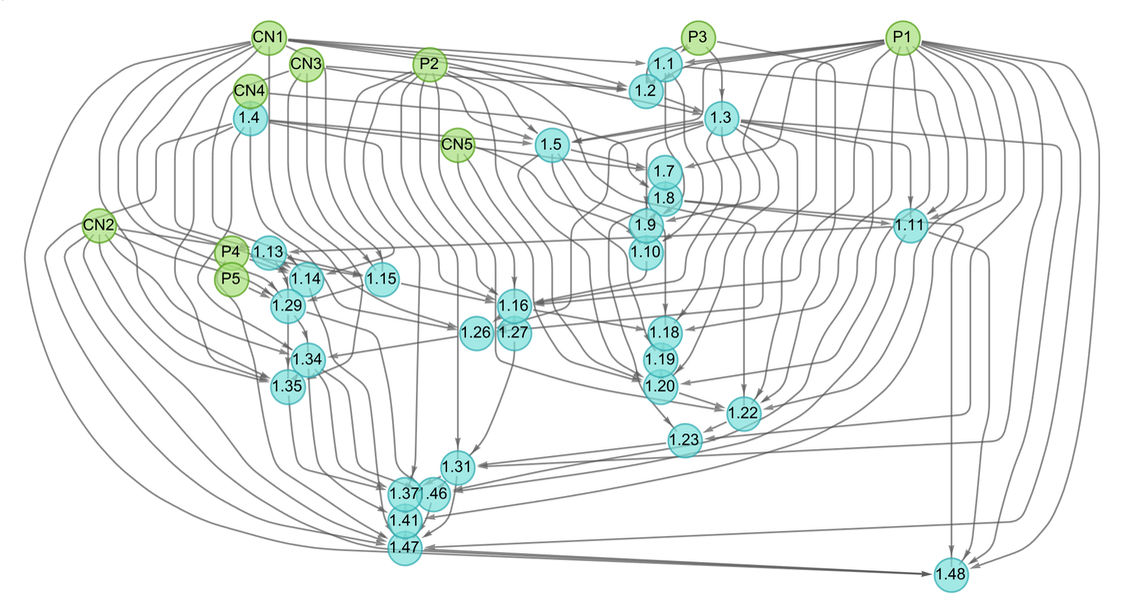

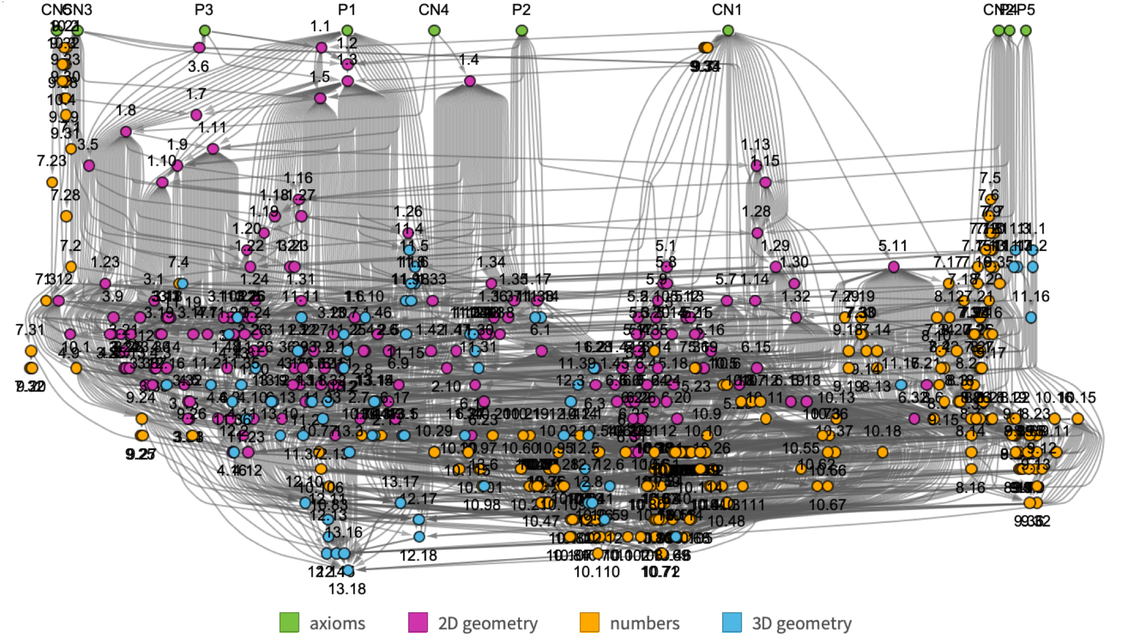

It turns out that this is the direct analog of what in our Physics Project we call branchial space, and what in that case defines a map of the entanglements between branches of quantum history. In the mathematical case, let’s say we have a multiway graph generated using the axiom:

|

|

After a few steps starting from ![]() we have:


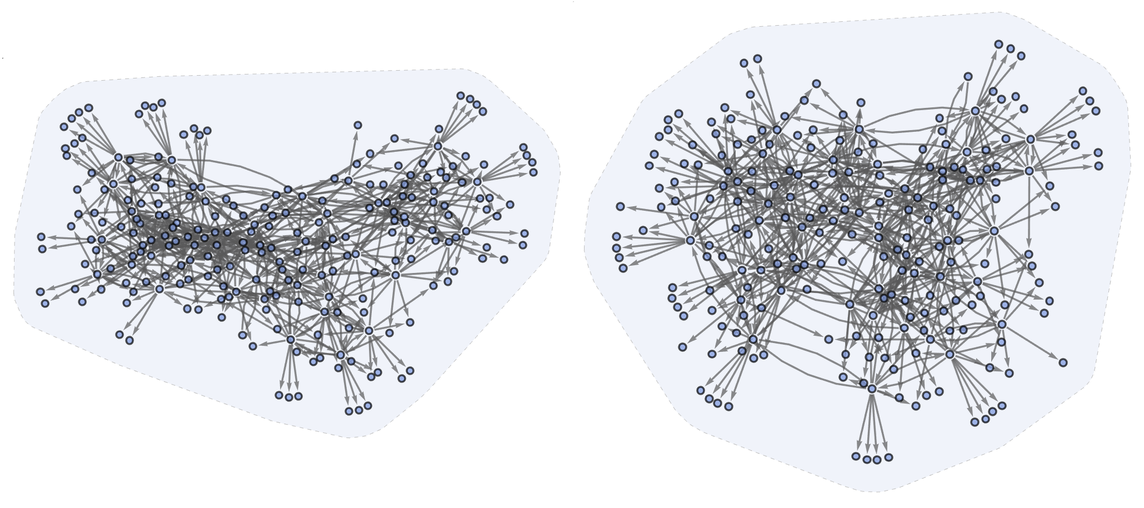

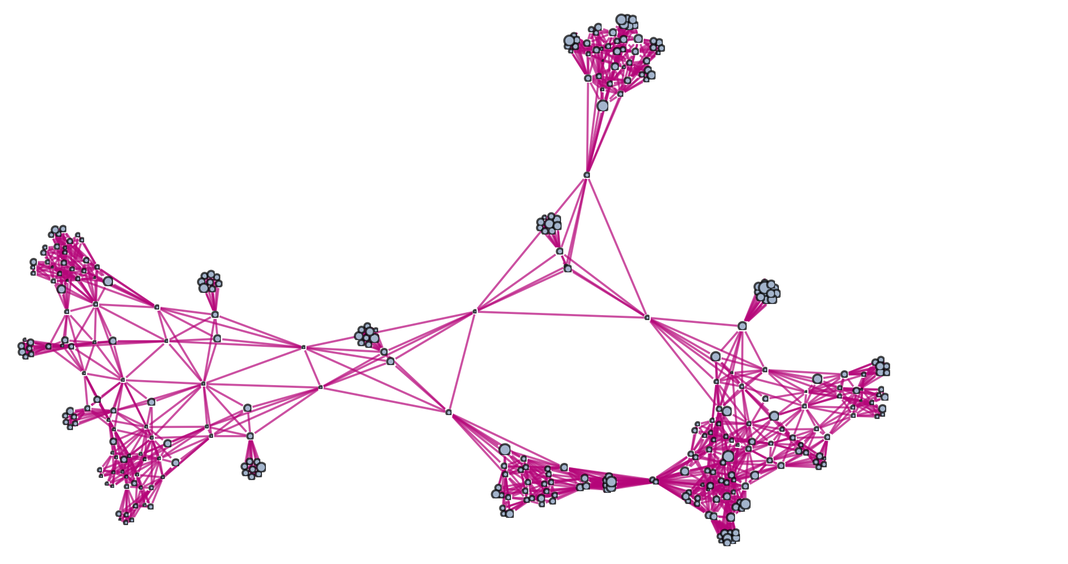

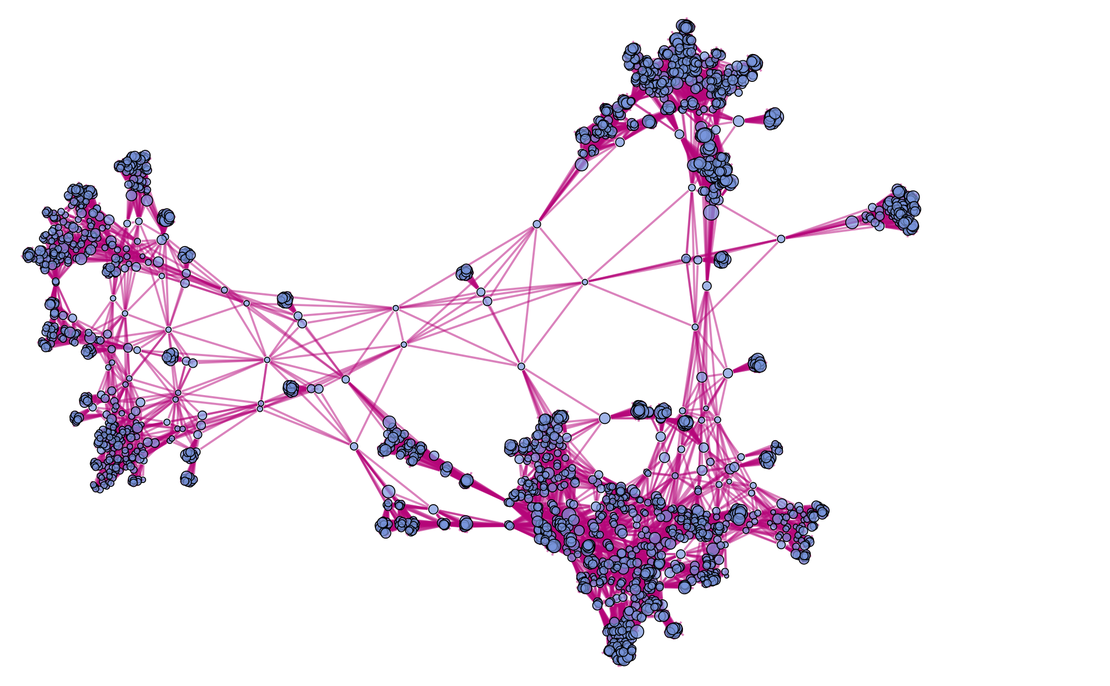

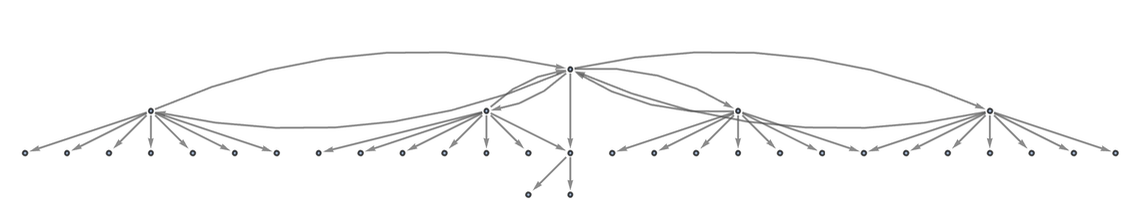

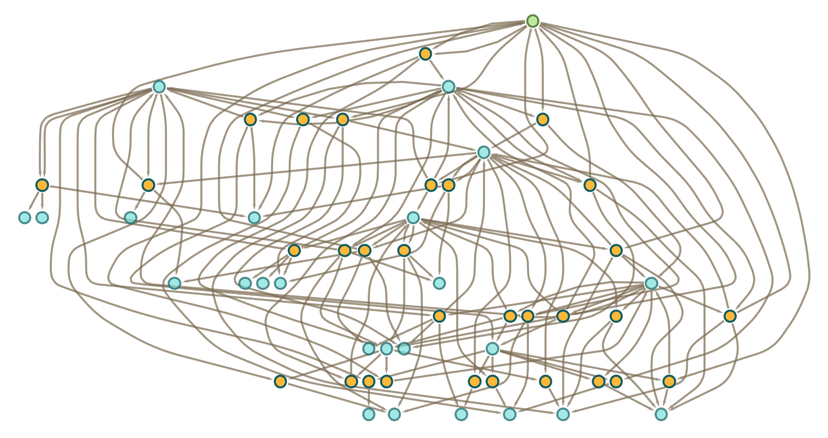

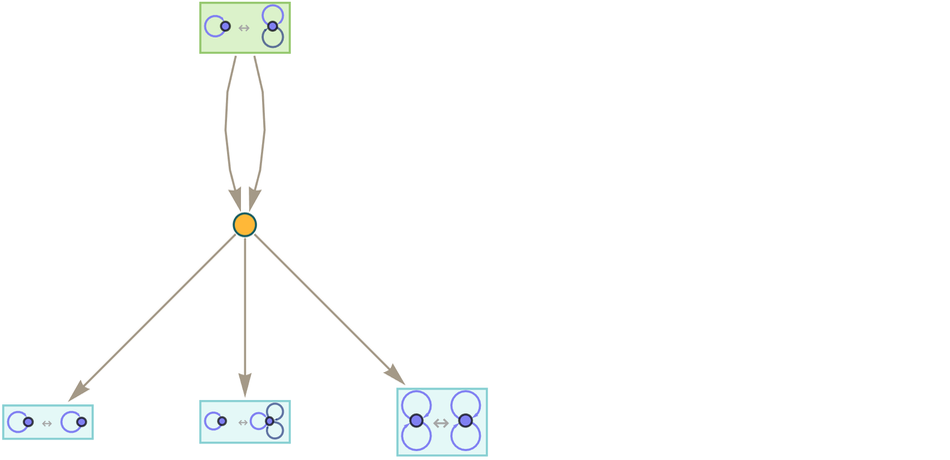

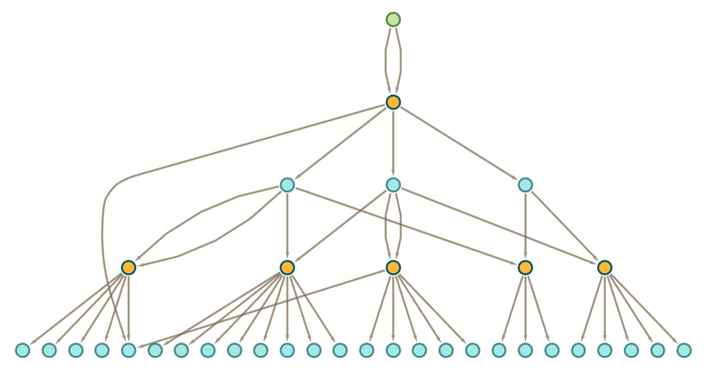

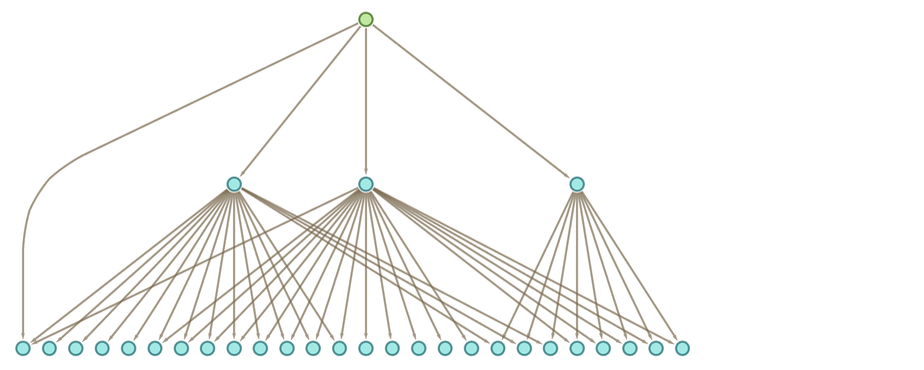

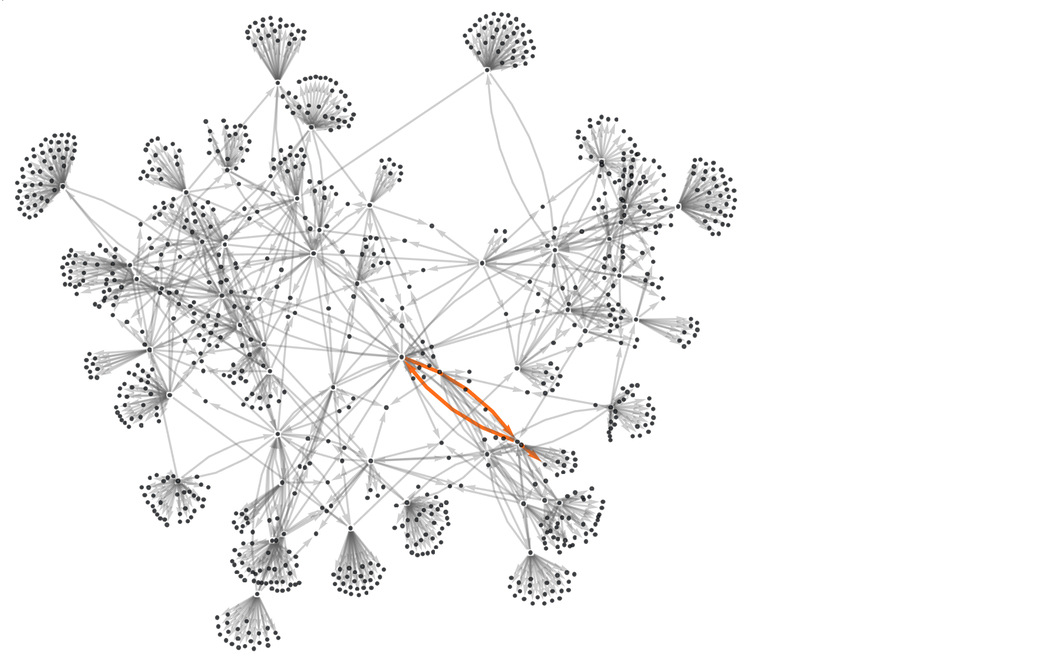

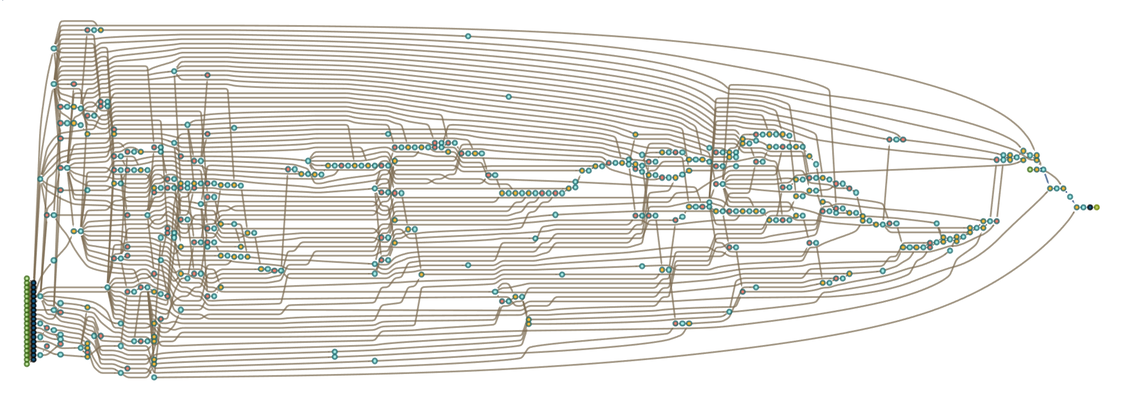

|

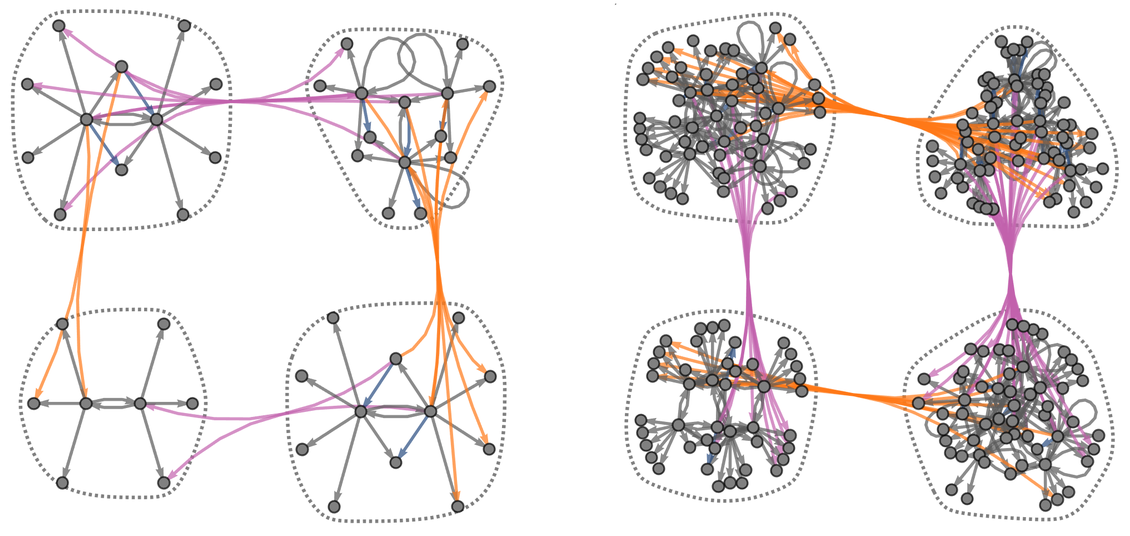

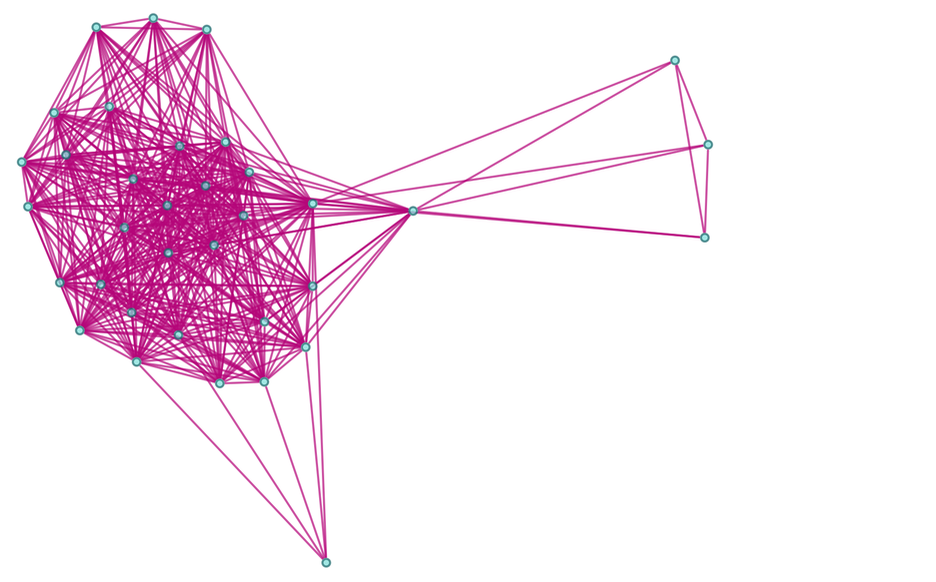

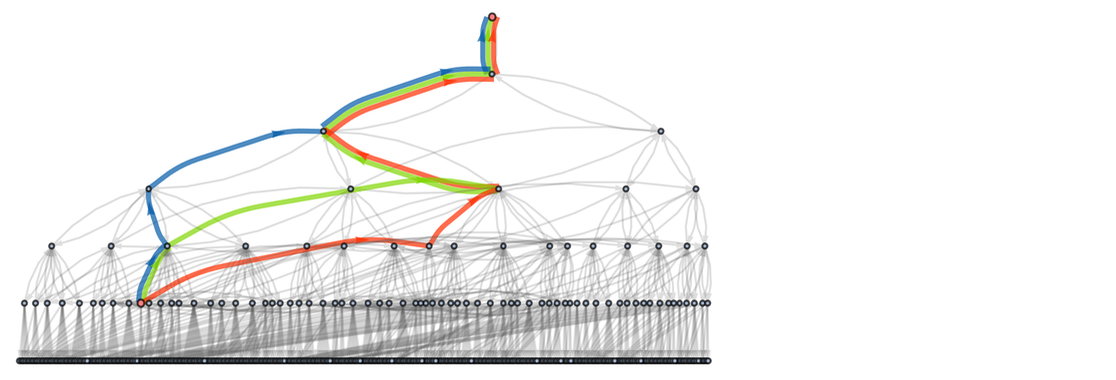

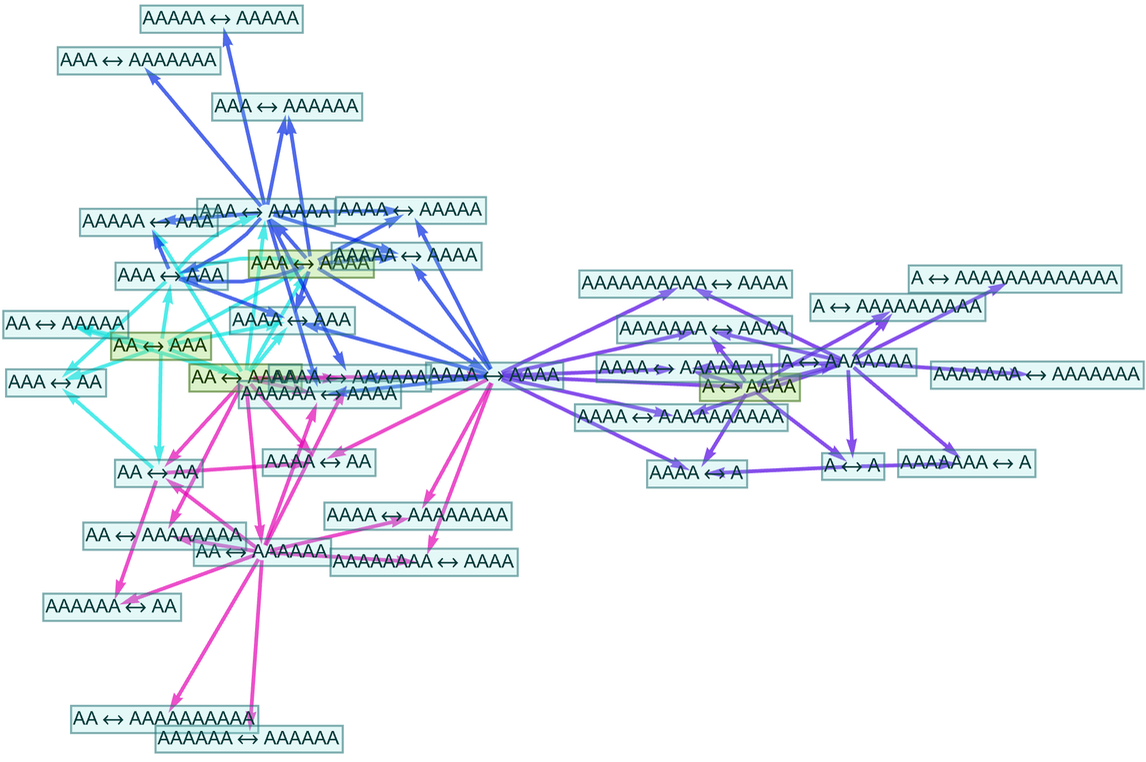

Now—just as in our Physics Project—let’s form a branchial graph by looking at the final expressions here and connecting them if they are “entangled” in the sense that they share an ancestor on the previous step:

|
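The branchial construction just described can be sketched in a few lines of Python. The string rules below are hypothetical and chosen only to keep the example small; the point is the step that connects two states whenever they share a parent on the previous step.

```python
from itertools import combinations

# Hypothetical one-way string rules, used only for illustration.
RULES = [("A", "AB"), ("B", "A")]

def successors(state, rules=RULES):
    """All states reachable from state by one rule application."""
    out = set()
    for lhs, rhs in rules:
        i = state.find(lhs)
        while i != -1:
            out.add(state[:i] + rhs + state[i + len(lhs):])
            i = state.find(lhs, i + 1)
    return out

def branchial_step(states):
    """Return (next states, branchial edges among them)."""
    next_states, edges = set(), set()
    for state in states:
        children = successors(state)
        next_states |= children
        # Two children of the same parent are "entangled": connect them.
        for pair in combinations(sorted(children), 2):
            edges.add(frozenset(pair))
    return next_states, edges

layer1, edges1 = branchial_step({"AA"})
layer2, edges2 = branchial_step(layer1)
print(sorted(layer2), len(edges2))
```

This sketch slices the multiway graph by step count and ignores the subtleties with loops mentioned in the text, so it should be read as a simplified model of the construction.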

There’s some trickiness here associated with loops in the multiway graph (which are the analog of closed timelike curves in physics) and what it means to define different “steps in evolution”. But just iterating once more the construction of the multiway graph, we get a branchial graph:

|

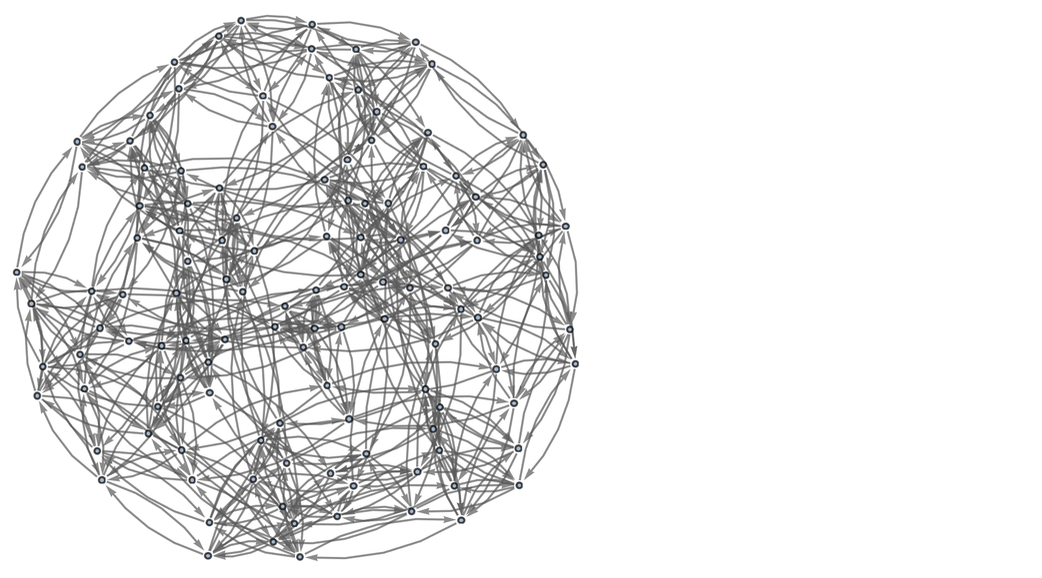

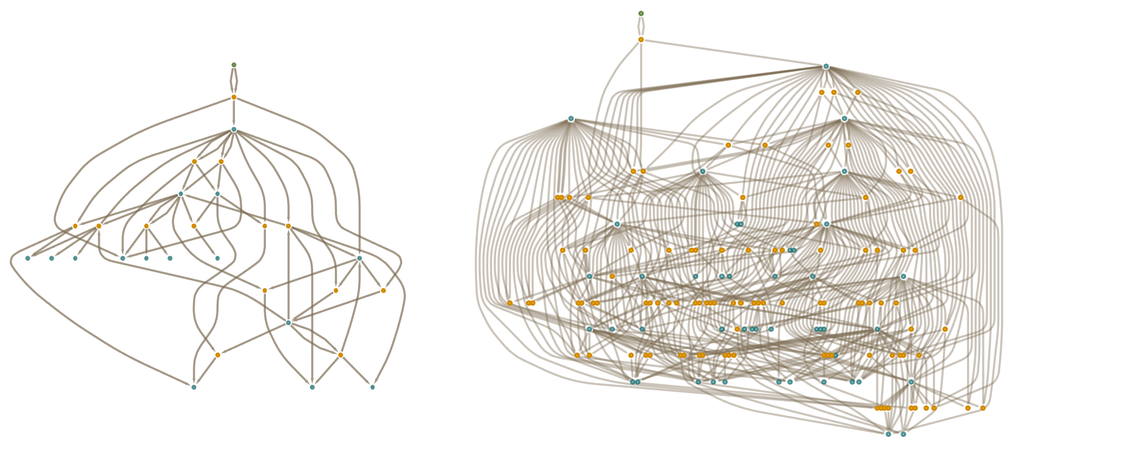

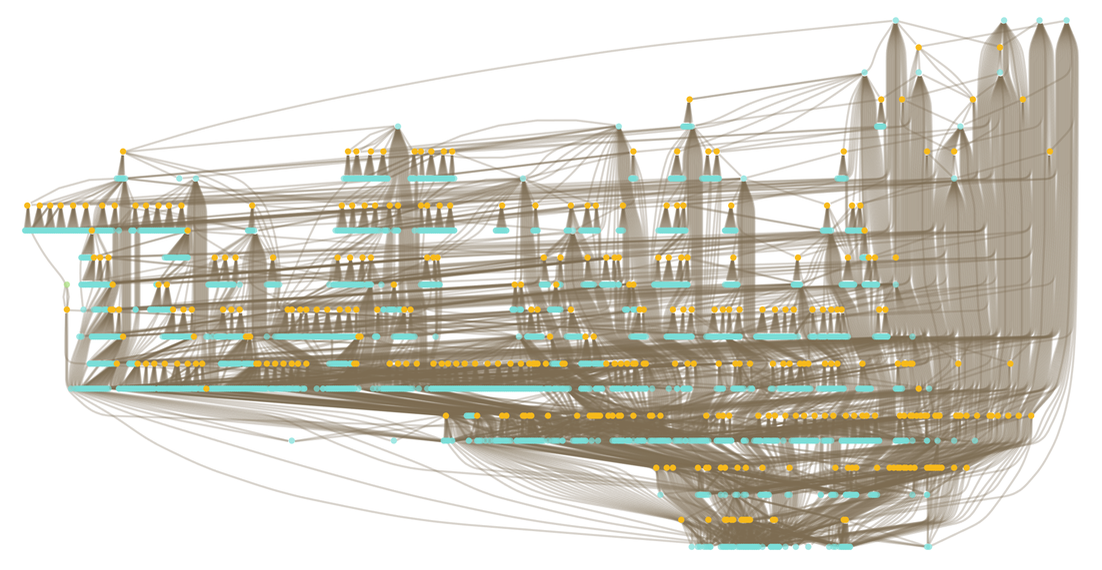

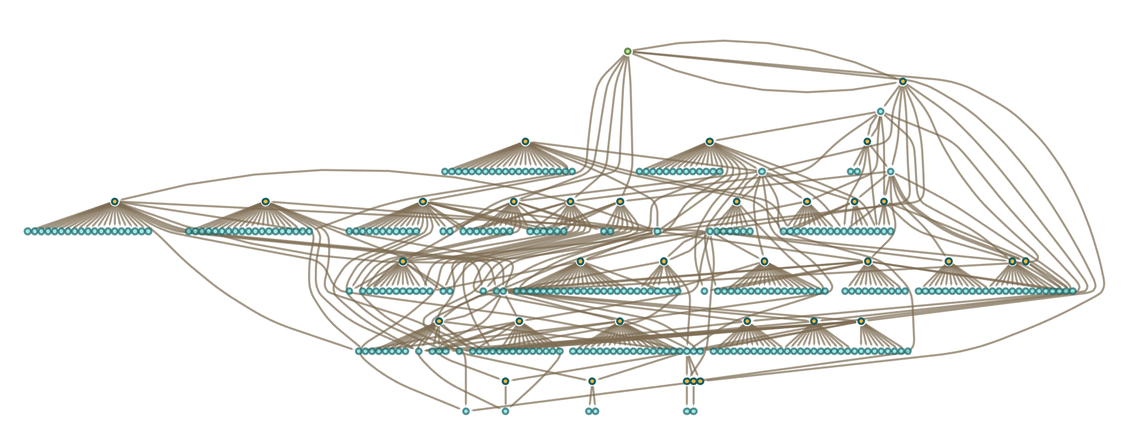

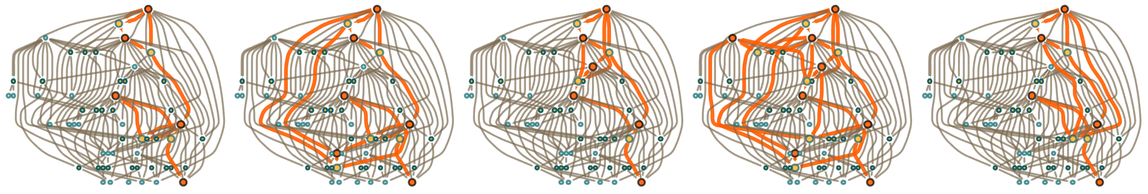

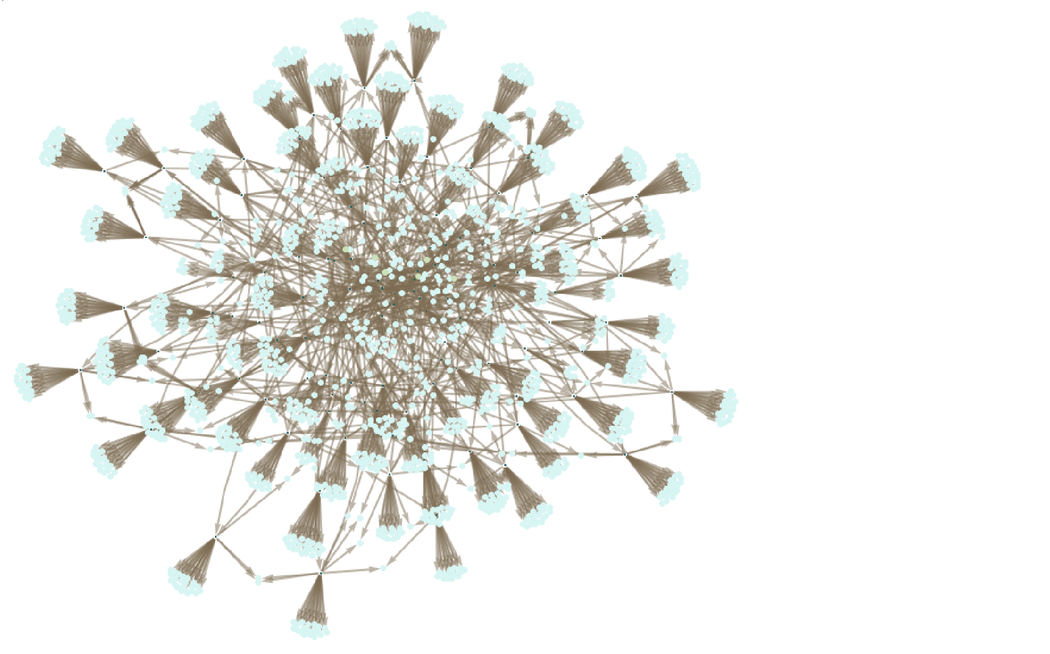

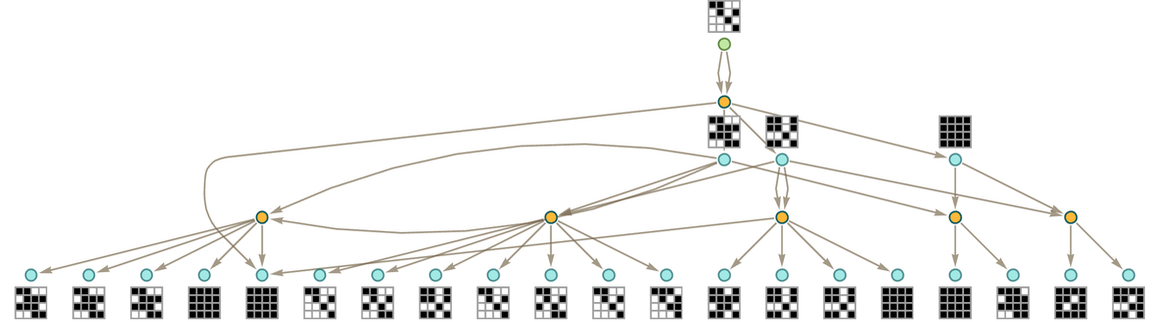

After a couple more iterations the structure of the branchial graph is (with each node sized according to the size of expression it represents):

|

Continuing another iteration, the structure becomes:

|

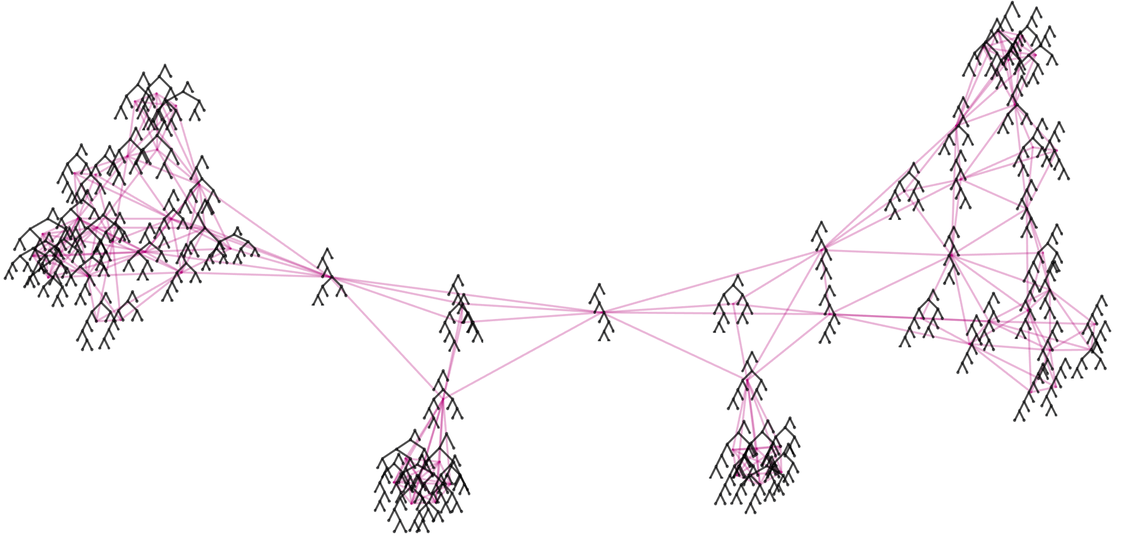

And in essence this structure can indeed be thought of as defining a kind of “metamathematical space” in which the different expressions are embedded. But what is the “geography” of this space? This shows how expressions (drawn as trees) are laid out on a particular branchial graph

|

and we see that there is at least a general clustering of similar trees on the graph—indicating that “similar expressions” tend to be “nearby” in the metamathematical space defined by this axiom system.

An important feature of branchial graphs is that effects are—essentially by construction—always local in the branchial graph. For example, if one changes an expression at a particular step in the evolution of a multiway system, it can only affect a region of the branchial graph that essentially expands by one edge per step.

One can think of the affected region—in analogy with a light cone in spacetime—as being the “entailment cone” of a particular expression. The edge of the entailment cone in effect expands at a certain “maximum metamathematical speed” in metamathematical (i.e. branchial) space—which one can think of as being measured in units of “expression change per multiway step”.

By analogy with physics one can start talking in general about motion in metamathematical space. A particular proof path in the multiway graph will progressively “move around” in the branchial graph that defines metamathematical space. (Yes, there are many subtle issues here, not least the fact that one has to imagine a certain kind of limit being taken so that the structure of the branchial graph is “stable enough” to “just be moving around” in something like a “fixed background space”.)

By the way, the shortest proof path in the multiway graph is the analog of a geodesic in spacetime. And later we’ll talk about how the “density of activity” in the branchial graph is the analog of energy in physics, and how it can be seen as “deflecting” the path of geodesics, just as gravity does in spacetime.

It’s worth mentioning just one further subtlety. Branchial graphs are in effect associated with “transverse slices” of the multiway graph—but there are many consistent ways to make these slices. In physics terms one can think of the foliations that define different choices of sequences of slices as being like “reference frames” in which one is specifying a sequence of “simultaneity surfaces” (here “branchtime hypersurfaces”). The particular branchial graphs we’ve shown here are ones associated with what in physics might be called the cosmological rest frame in which every node is the result of the same number of updates since the beginning.

6 | The Issue of Generated Variables

A rule like

|

|

defines transformations for any expressions ![]() and ![](). So, for example, if we use the rule from left to right on the expression ![]() the “pattern variable” ![]() will be taken to be a while ![]() will be taken to be b ∘ a, and the result of applying the rule will be ![]().
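The binding of pattern variables can be illustrated with a minimal Python matcher. Everything about the representation is an assumption: an expression is a nested pair `(left, right)` for `left ∘ right`, a name ending in `_` is a pattern variable, and the left-hand side `x_ ∘ y_`, the subject `a ∘ (b ∘ a)` and the right-hand side `(y_ ∘ x_) ∘ y_` are hypothetical choices consistent with the bindings described above.

```python
def match(pattern, expr, bindings=None):
    """Match pattern against the whole of expr; return bindings or None."""
    bindings = dict(bindings or {})
    if isinstance(pattern, str):
        if pattern.endswith("_"):                    # pattern variable
            if pattern in bindings and bindings[pattern] != expr:
                return None                          # same variable, different value
            bindings[pattern] = expr
            return bindings
        return bindings if pattern == expr else None  # literal symbol
    if isinstance(expr, str):
        return None                                  # compound pattern, atomic subject
    for sub_pattern, sub_expr in zip(pattern, expr):
        bindings = match(sub_pattern, sub_expr, bindings)
        if bindings is None:
            return None
    return bindings

def substitute(template, bindings):
    """Replace pattern variables in template by their bound values."""
    if isinstance(template, str):
        return bindings.get(template, template)
    return tuple(substitute(part, bindings) for part in template)

found = match(("x_", "y_"), ("a", ("b", "a")))
print(found)
print(substitute((("y_", "x_"), "y_"), found))
```

Note that a variable appearing twice within one pattern must bind to the same subexpression both times, which is the within-rule convention discussed in this section.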

But consider instead the case where our rule is:

|

|

Applying this rule (from left to right) to ![]() we’ll now get ![](). And applying the rule to ![]() we’ll get ![](). But what should we make of those ![]()’s? And in particular, are they “the same”, or not?

A pattern variable like z_ can stand for any expression. But do two different z_’s have to stand for the same expression? In a rule like ![]() ![]() … we’re assuming that, yes, the two z_’s always stand for the same expression. But if the z_’s appear in different rules it’s a different story. Because in that case we’re dealing with two separate and unconnected z_’s—that can stand for completely different expressions.

To begin seeing how this works, let’s start with a very simple example. Consider the (for now, one-way) rule

|

|

where ![]() is the literal symbol ![](), and x_ is a pattern variable. Applying this to ![]() we might think we could just write the result as:

|

Then if we apply the rule again both branches will give the same expression ![](), so there’ll be a merge in the multiway graph:

|

But is this really correct? Well, no. Because really those should be two different x_’s, that could stand for two different expressions. So how can we indicate this? One approach is just to give every “generated” x_ a new name:

|

But this result isn’t really correct either. Because if we look at the second step we see the two expressions ![]() and ![](). But what’s really the difference between these? The names ![]() are arbitrary; the only constraint is that within any given expression they have to be different. But between expressions there’s no such constraint. And in fact ![]() and ![]() both represent exactly the same class of expressions: any expression of the form ![]().

So in fact it’s not correct that there are two separate branches of the multiway system producing two separate expressions. Because those two branches produce equivalent expressions, which means they can be merged. And turning both equivalent expressions into the same canonical form we get:

|

It’s important to notice that this isn’t the same result as what we got when we assumed that every x_ was the same. Because then our final result was the expression ![]() which can match ![]() but not ![]()—whereas now the final result is ![]() which can match both ![]() and ![]().

This may seem like a subtle issue. But it’s critically important in practice. Not least because generated variables are in effect what make up all “truly new stuff” that can be produced. With a rule like ![]() one’s essentially just taking whatever one started with, and successively rearranging the pieces of it. But with a rule like ![]() there’s something “truly new” generated every time z_ appears.

By the way, the basic issue of “generated variables” isn’t something specific to the particular symbolic expression setup we’ve been using here. For example, there’s a direct analog of it in the hypergraph rewriting systems that appear in our Physics Project. But in that case there’s a particularly clear interpretation: the analog of “generated variables” are new “atoms of space” produced by the application of rules. And far from being some kind of footnote, these “generated atoms of space” are what make up everything we have in our universe today.

The issue of generated variables—and especially their naming—is the bane of all sorts of formalism for mathematical logic and programming languages. As we’ll see later, it’s perfectly possible to “go to a lower level” and set things up with no names at all, for example using combinators. But without names, things tend to seem quite alien to us humans—and certainly if we want to understand the correspondence with standard presentations of mathematics it’s pretty necessary to have names. So at least for now we’ll keep names, and handle the issue of generated variables by uniquifying their names, and canonicalizing every time we have a complete expression.
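One simple way to canonicalize names, sketched here in Python, is to rename the variables in each complete expression in order of first appearance. The representation (nested pairs for ∘, names ending in `_` for variables) and the canonical names `x1_`, `x2_`, … are assumptions made for this illustration, not the scheme used to produce the figures.

```python
def canonicalize(expr):
    """Rename pattern variables to x1_, x2_, ... in order of first appearance."""
    names = {}

    def rename(node):
        if isinstance(node, str):
            if node.endswith("_"):
                if node not in names:
                    names[node] = f"x{len(names) + 1}_"
                return names[node]
            return node                      # literal symbols are left alone
        return tuple(rename(child) for child in node)

    return rename(expr)

# Two branches that differ only in the arbitrary names of generated variables...
branch_1 = ("a", ("u_", "v_"))
branch_2 = ("a", ("p_", "q_"))
# ...collapse to a single canonical expression and can be merged.
print({canonicalize(branch_1), canonicalize(branch_2)})
```

Under this scheme an expression in which the same variable appears twice, such as `("a", ("z_", "z_"))`, keeps that constraint after renaming, so it stays distinct from one with two independent variables.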

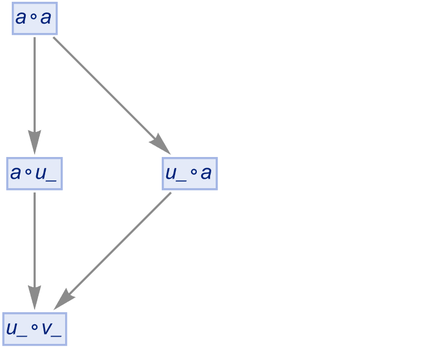

Let’s look at another example to see the importance of how we handle generated variables. Consider the rule:

|

|

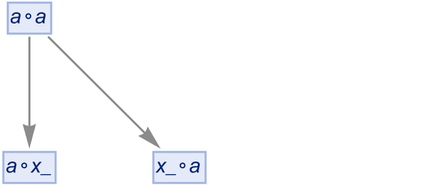

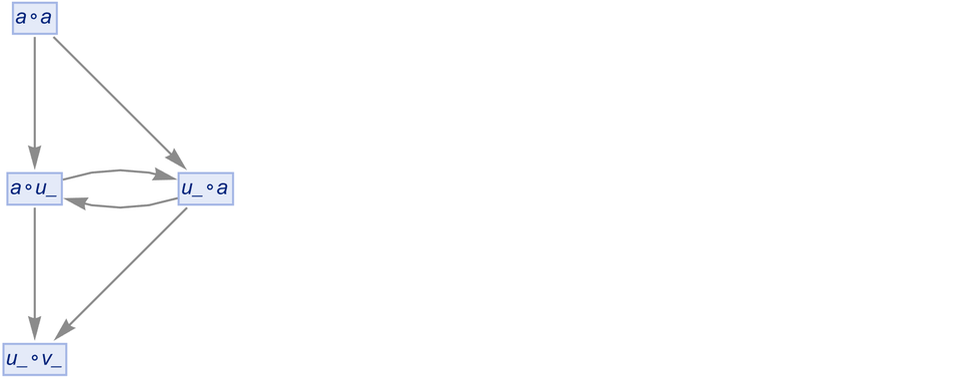

If we start with a ∘ a and do no uniquification, we’ll get:

|

With uniquification, but not canonicalization, we’ll get a pure tree:

|

But with canonicalization this is reduced to:

|

A confusing feature of this particular example is that this same result would have been obtained just by canonicalizing the original “assume-all-x_’s-are-the-same” case.

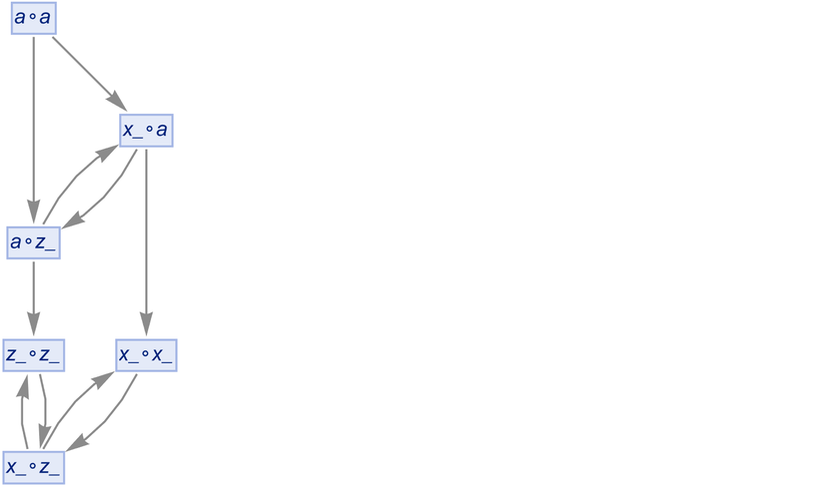

But things don’t always work this way. Consider the rather trivial rule

|

|

starting from ![]() . If we don’t do uniquification, and don’t do canonicalization, we get:

. If we don’t do uniquification, and don’t do canonicalization, we get:

|

If we do uniquification (but not canonicalization), we get a pure tree:

|

But if we now canonicalize this, we get:

|

And this is now not the same as what we would get by canonicalizing, without uniquifying:

|

7 | Rules Applied to Rules

In what we’ve done so far, we’ve always talked about applying rules (like ![]()) to expressions (like ![]()). But if everything is a symbolic expression there shouldn’t really need to be a distinction between “rules” and “ordinary expressions”. They’re all just expressions. And so we should as well be able to apply rules to rules as to ordinary expressions.

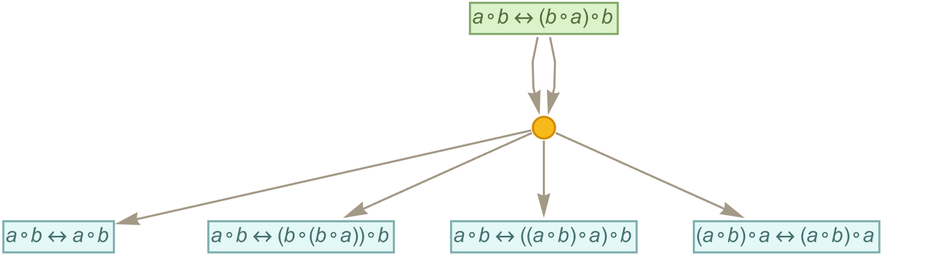

And indeed the concept of “applying rules to rules” is something that has a familiar analog in standard mathematics. The “two-way rules” we’ve been using effectively define equivalences—which are very common kinds of statements in mathematics, though in mathematics they’re usually written with ![]() rather than with ![](). And indeed, many axioms and many theorems are specified as equivalences—and in equational logic one takes everything to be defined using equivalences. And when one’s dealing with theorems (or axioms) specified as equivalences, the basic way one derives new theorems is by applying one theorem to another—or in effect by applying rules to rules.

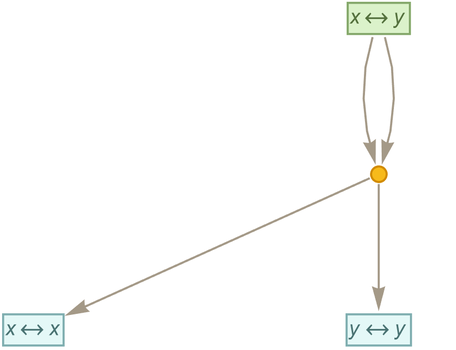

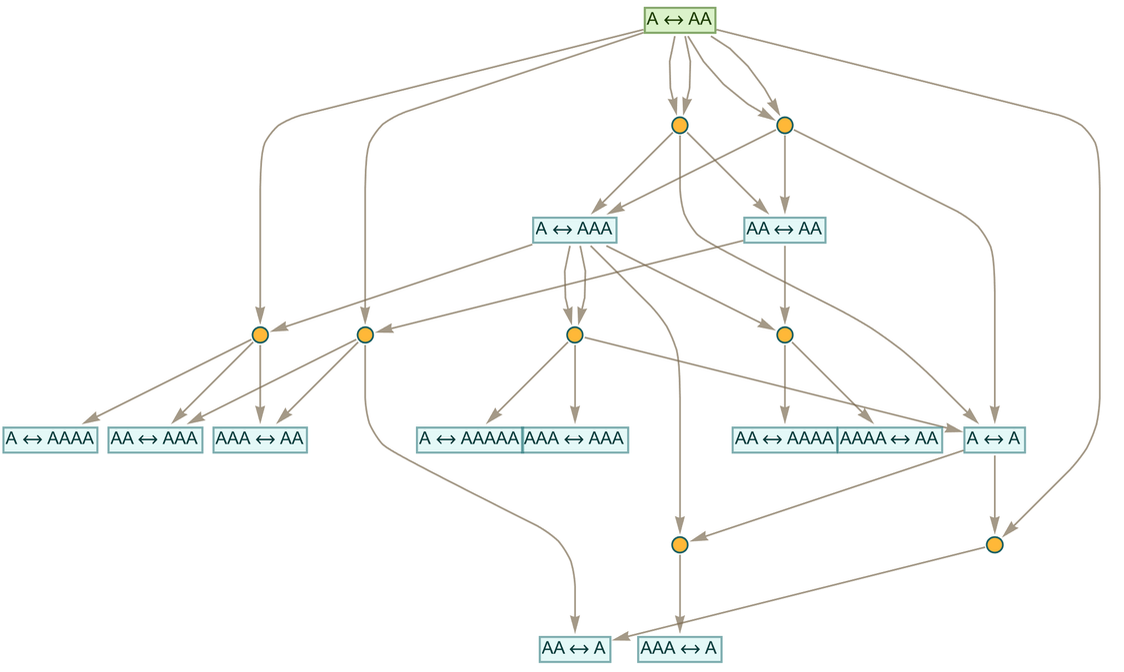

As a specific example, let’s say we have the “axiom”:

|

|

We can now apply this to the rule

|

|

to get (where since ![]() is equivalent to ![]() we’re sorting each two-way rule that arises)

|

or after a few more steps:

|

In this example all that’s happening is that the substitutions specified by the axiom are getting separately applied to the left- and right-hand sides of each rule that is generated. But if we really take seriously the idea that everything is a symbolic expression, things can get a bit more complicated.

Consider for example the rule:

|

|

If we apply this to

|

|

then if x_ “matches any expression” it can match the whole expression ![]() giving the result:


|

|

Standard mathematics doesn’t have an obvious meaning for something like this—although as soon as one “goes metamathematical” it’s fine. But in an effort to maintain contact with standard mathematics we’ll for now have the “meta rule” that x_ can’t match an expression whose top-level operator is ![](). (As we’ll discuss later, including such matches would allow us to do exotic things like encode set theory within arithmetic, which is again something usually considered to be “syntactically prevented” in mathematical logic.)

Another—still more obscure—meta rule we have is that x_ can’t “match inside a variable”. In Wolfram Language, for example, a_ has the full form Pattern[a,Blank[]], and one could imagine that x_ could match “internal pieces” of this. But for now, we’re going to treat all variables as atomic—even though later on, when we “descend below the level of variables”, the story will be different.

When we apply a rule like ![]() to ![]() we’re taking a rule with pattern variables, and doing substitutions with it on a “literal expression” without pattern variables. But it’s also perfectly possible to apply pattern rules to pattern rules—and indeed that’s what we’ll mostly do below. But in this case there’s another subtle issue that can arise. Because if our rule generates variables, we can end up with two different kinds of variables with “arbitrary names”: generated variables, and pattern variables from the rule we’re operating on. And when we canonicalize the names of these variables, we can end up with identical expressions that we need to merge.

Here’s what happens if we apply the rule ![]() to the literal rule ![]():

|

If we apply it to the pattern rule ![]() but don’t do canonicalization, we’ll just get the same basic result:


|

But if we canonicalize we get instead:

|

The effect is more dramatic if we go to two steps. When operating on the literal rule we get:

|

Operating on the pattern rule, but without canonicalization, we get

|

while if we include canonicalization many rules merge and we get:

|

8 | Accumulative Evolution

We can think of “ordinary expressions” like ![]() as being like “data”, and rules as being like “code”. But when everything is a symbolic expression, it’s perfectly possible—as we saw above—to “treat code like data”, and in particular to generate rules as output. But this now raises a new possibility. When we “get a rule as output”, why not start “using it like code” and applying it to things?


In mathematics we might apply some theorem to prove a lemma, and then we might subsequently use that lemma to prove another theorem—eventually building up a whole “accumulative structure” of lemmas (or theorems) being used to prove other lemmas. In any given proof we can in principle always just keep using the axioms over and over again—but it’ll be much more efficient to progressively build a library of more and more lemmas, and use these. And in general we’ll build up a richer structure by “accumulating lemmas” than always just going back to the axioms.

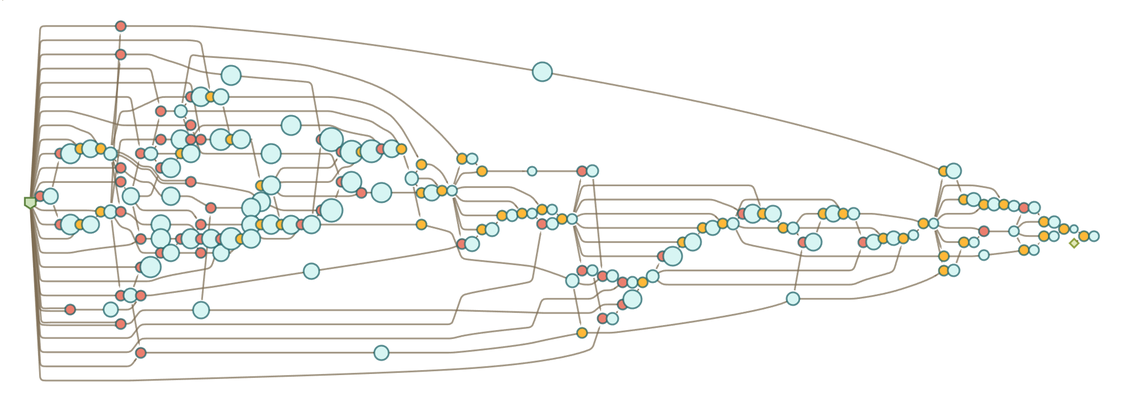

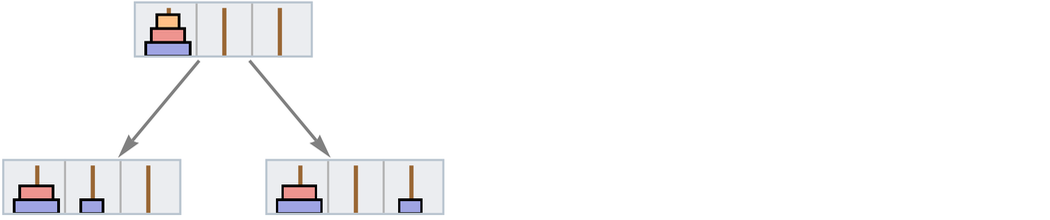

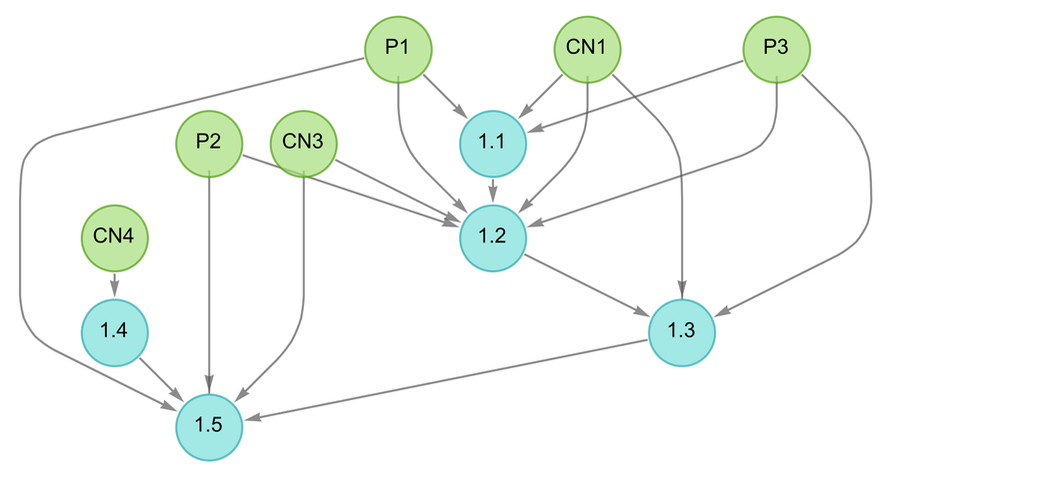

In the multiway graphs we’ve drawn so far, each edge represents the application of a rule, but that rule is always a fixed axiom. To represent accumulative evolution we need a slightly more elaborate structure—and it’ll be convenient to use token-event graphs rather than pure multiway graphs.

Every time we apply a rule we can think of this as an event. And with the setup we’re describing, that event can be thought of as taking two tokens as input: one the “code rule” and the other the “data rule”. The output from the event is then some collection of rules, which can then serve as input (either “code” or “data”) to other events.

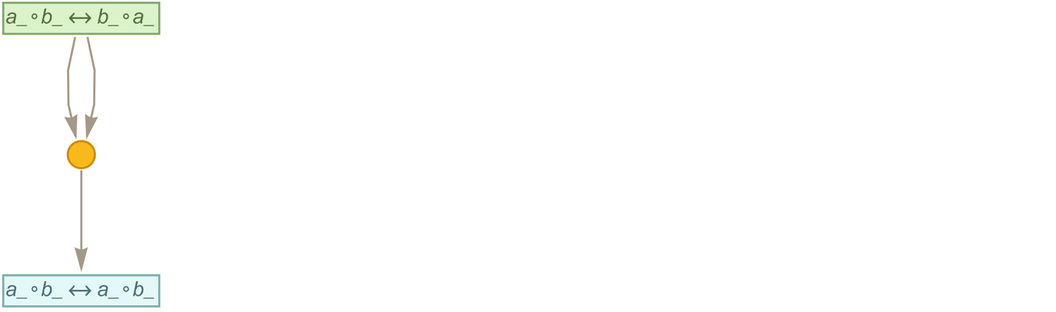

Let’s start with the very simple example of the rule

|

|

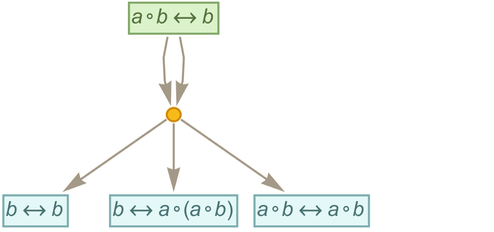

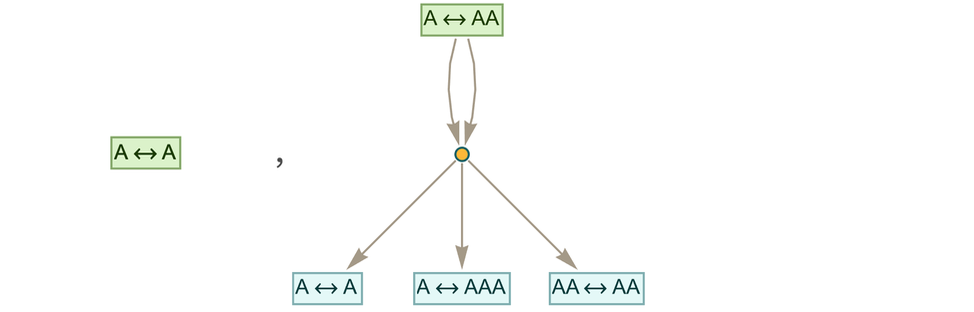

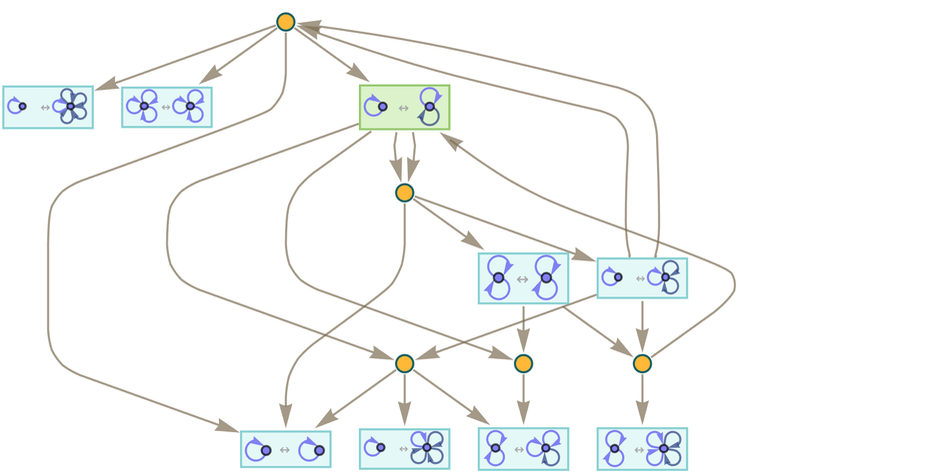

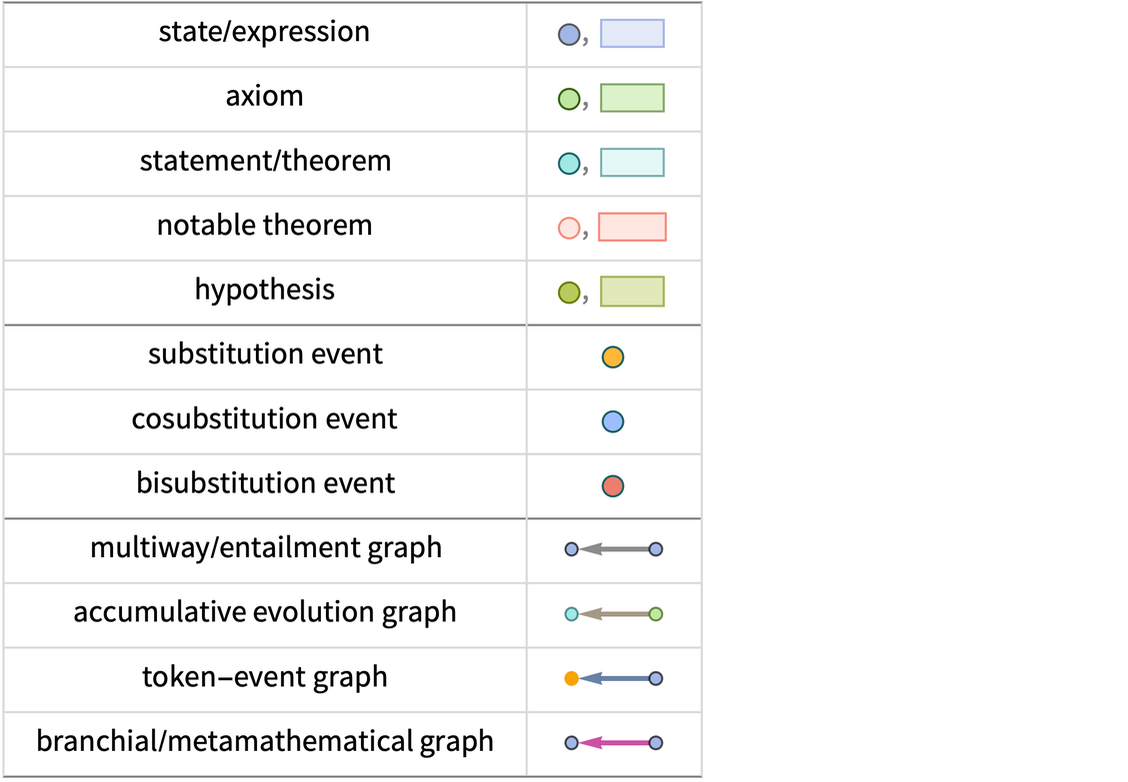

where for now there are no patterns being used. Starting from this rule, we get the token-event graph (where now we’re indicating the initial “axiom” statement using a slightly different color):

|

One subtlety here is that the ![]() is applied to itself—so there are two edges going into the event from the node representing the rule. Another subtlety is that there are two different ways the rule can be applied, with the result that there are two output rules generated.


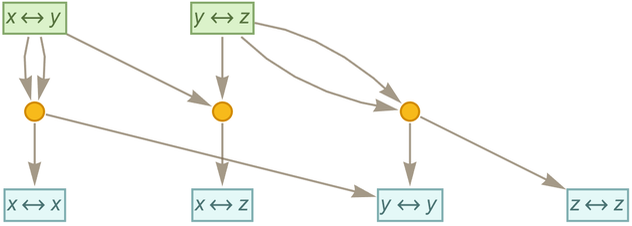

Here’s another example, based on the two rules:

|

|

|

Continuing for another step we get:

|

Typically we will want to consider ![]() as “defining an equivalence”, so that ![]() means the same as ![](), and can be conflated with it—yielding in this case:

|

Now let’s consider the rule:

|

|

After one step we get:

|

After 2 steps we get:

|

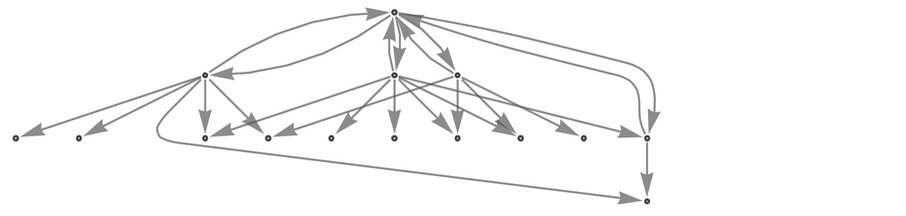

The token-event graphs after 3 and 4 steps in this case are (where now we’ve deduplicated events):

|

Let’s now consider a rule with the same structure, but with pattern variables instead of literal symbols:

|

|

Here’s what happens after one step (note that there’s canonicalization going on, so a_’s in different rules aren’t “the same”)

|

and we see that there are different theorems from the ones we got without patterns. After 2 steps with the pattern rule we get

|

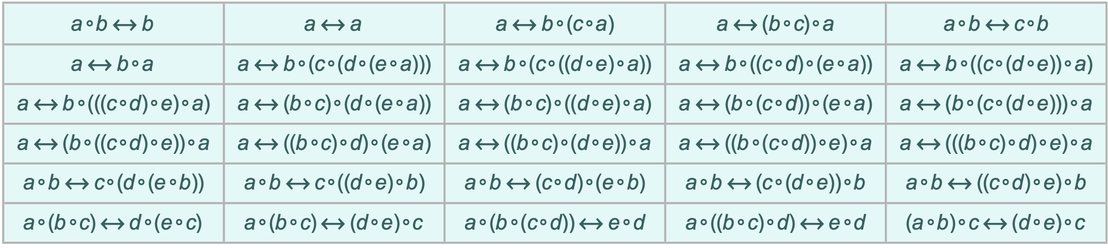

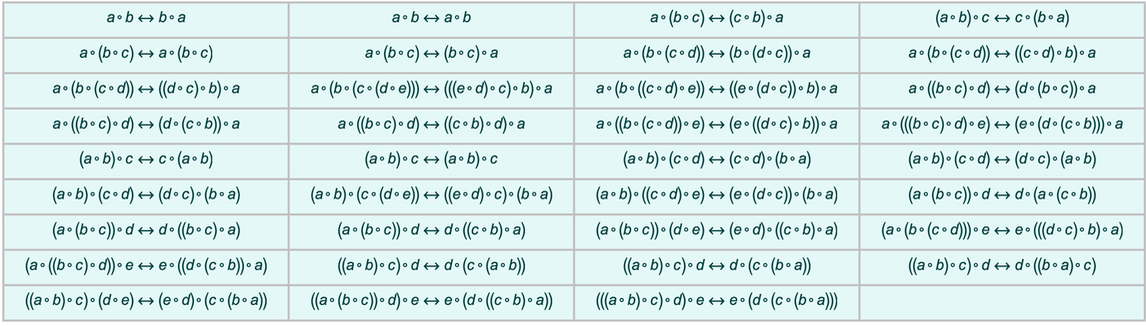

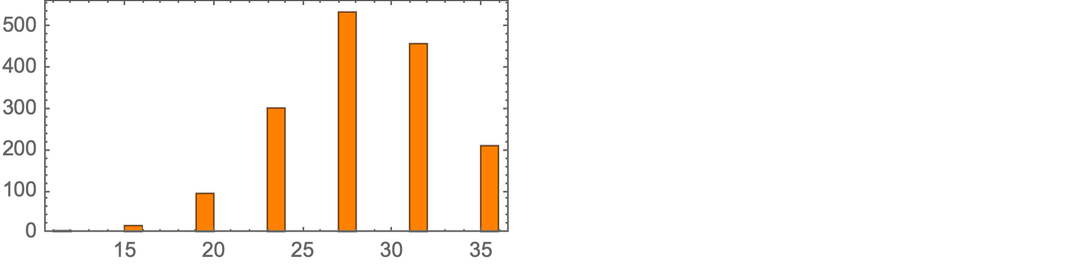

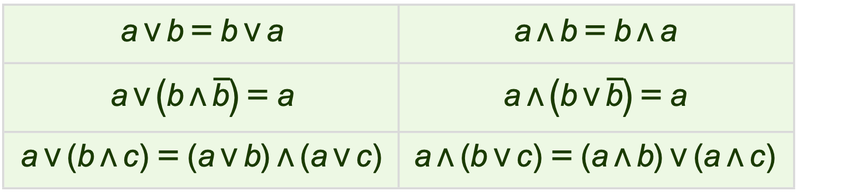

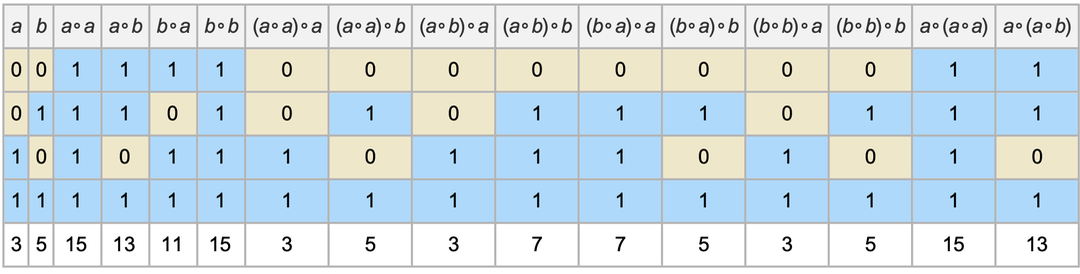

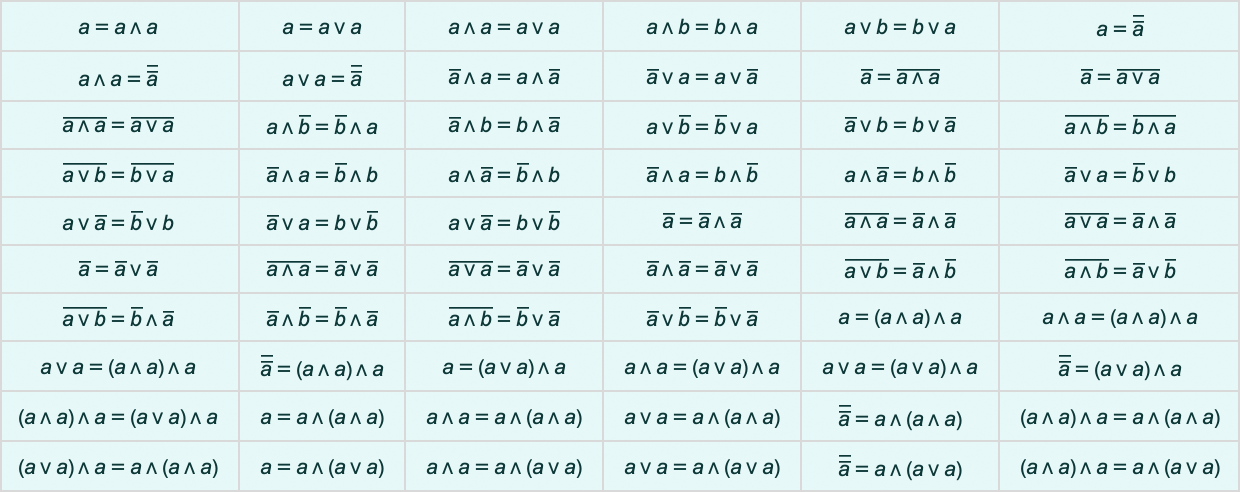

where now the complete set of “theorems that have been derived” is (dropping the _’s for readability)

|

or as trees:

|

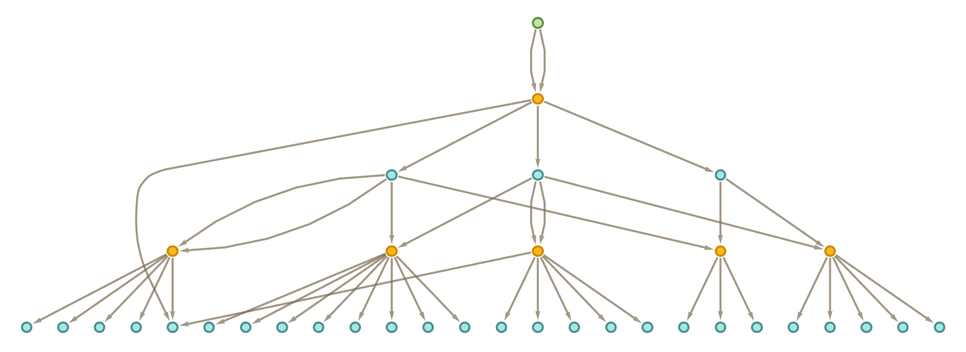

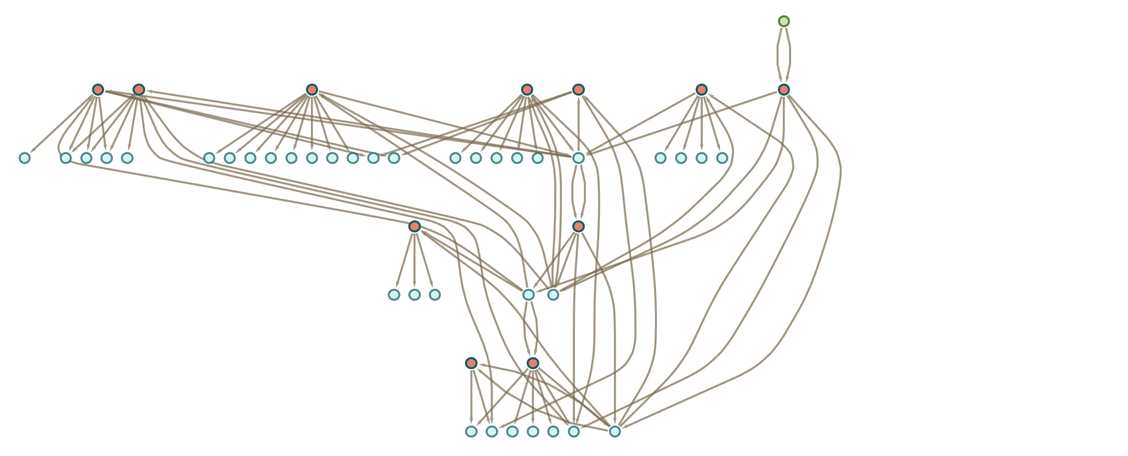

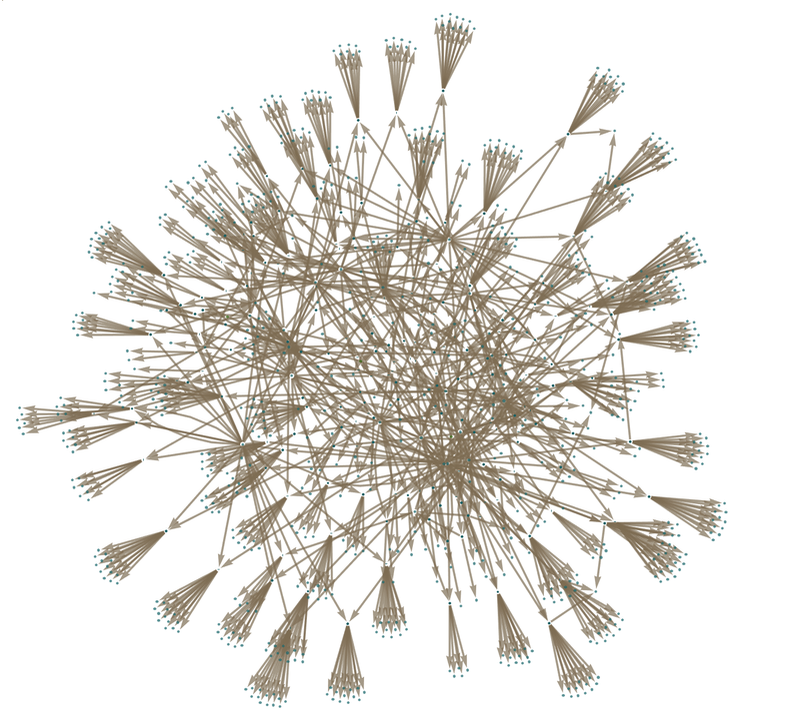

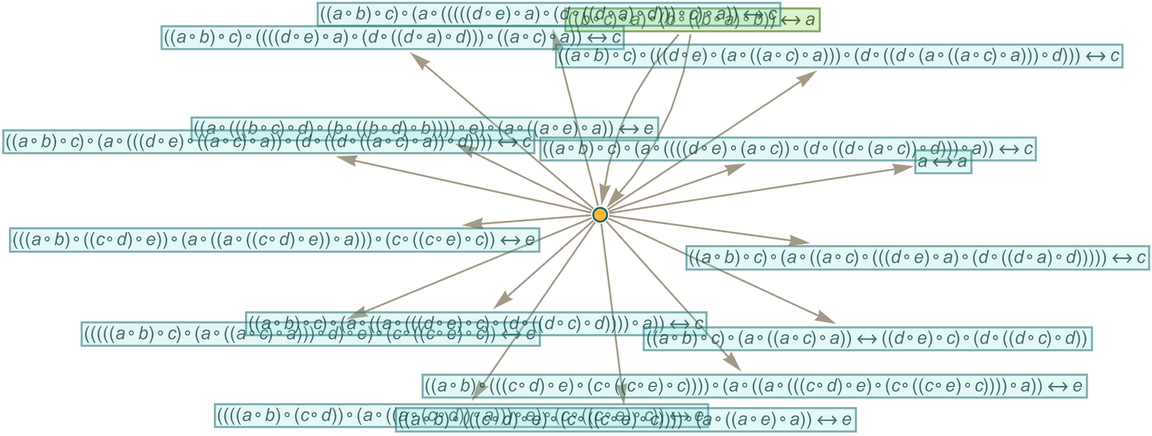

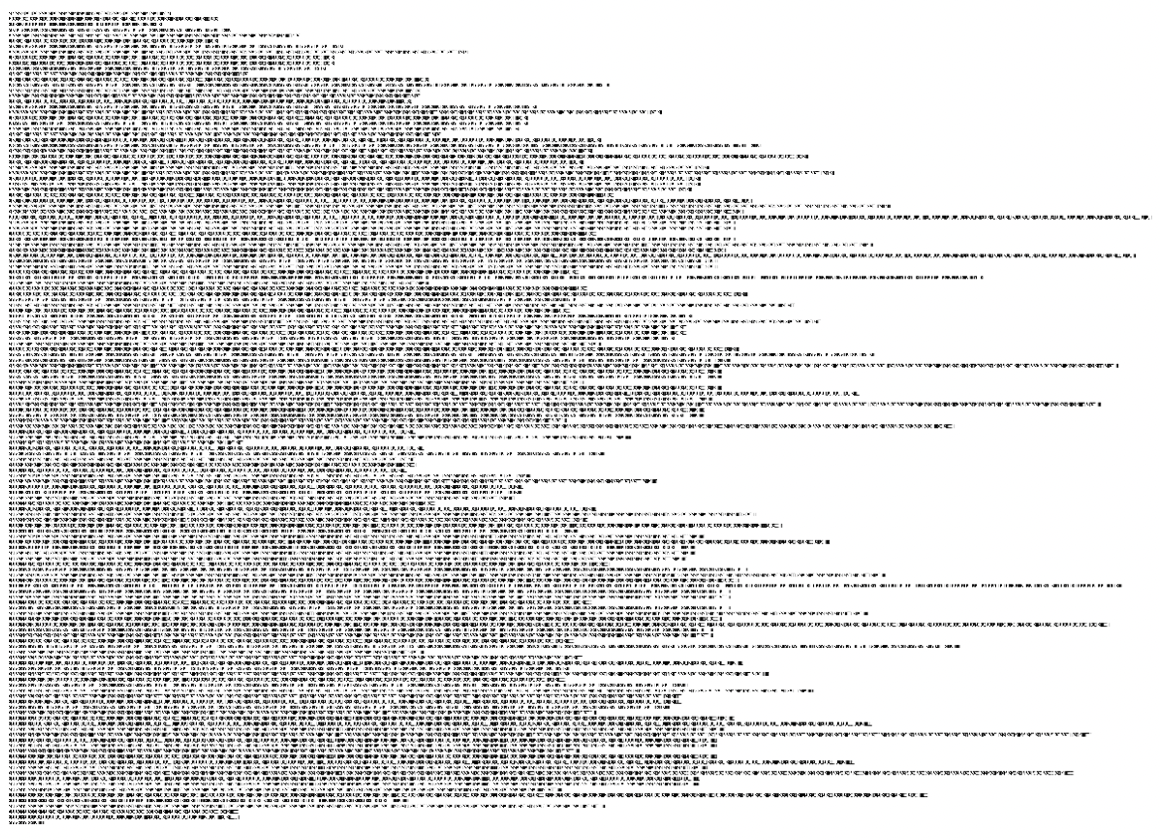

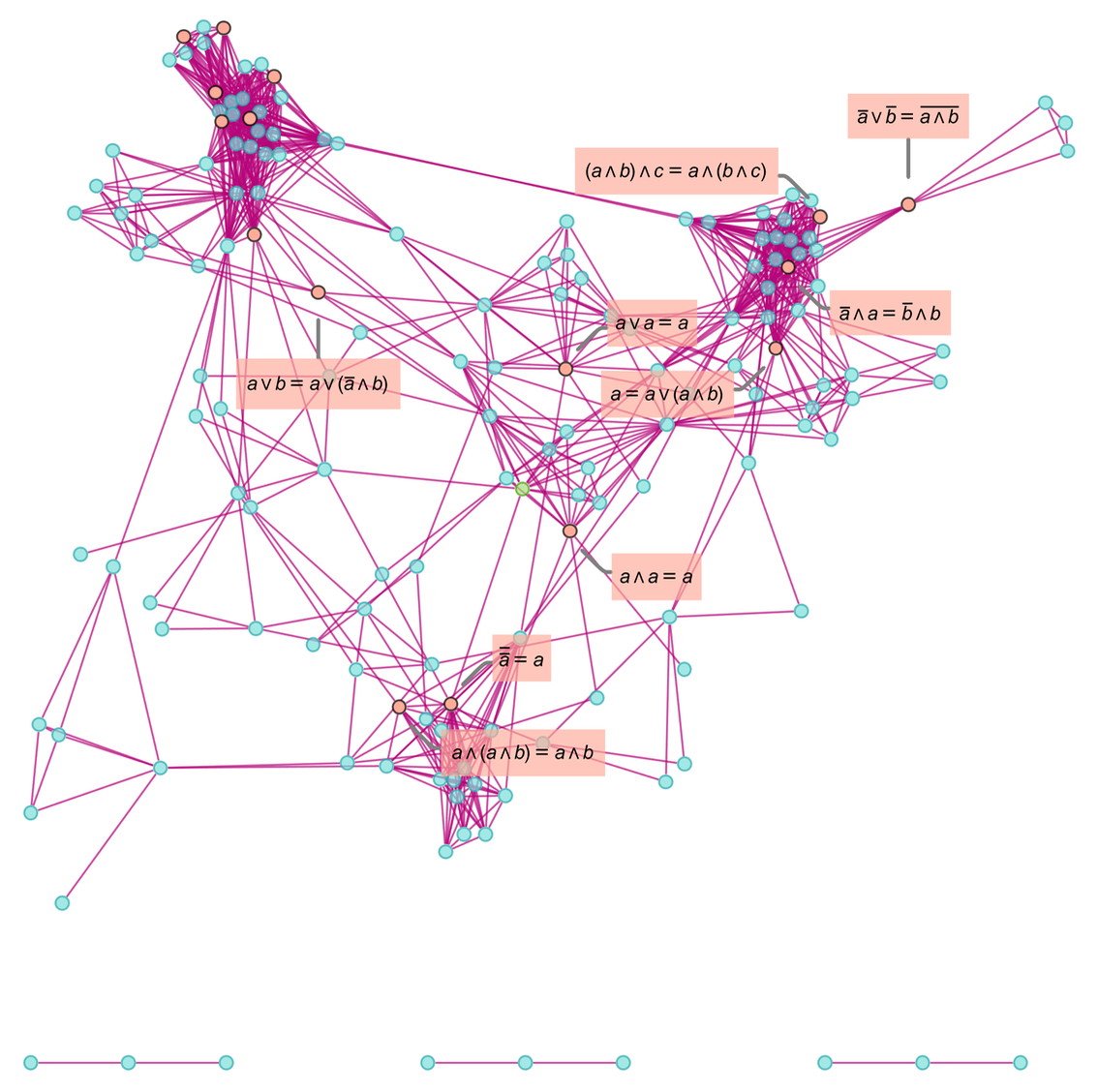

After another step one gets

|

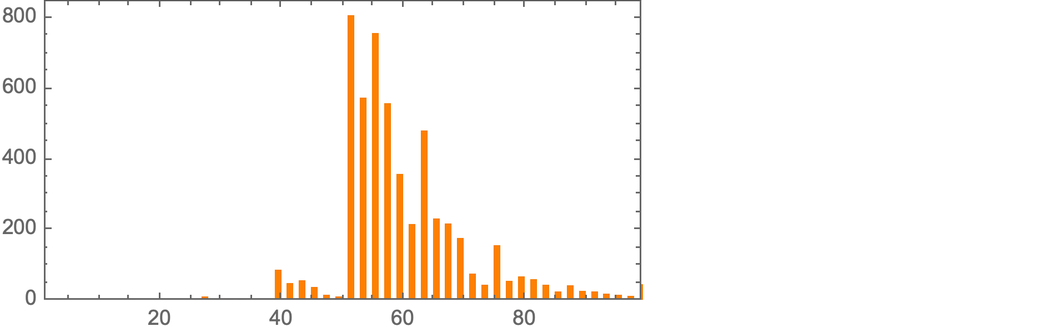

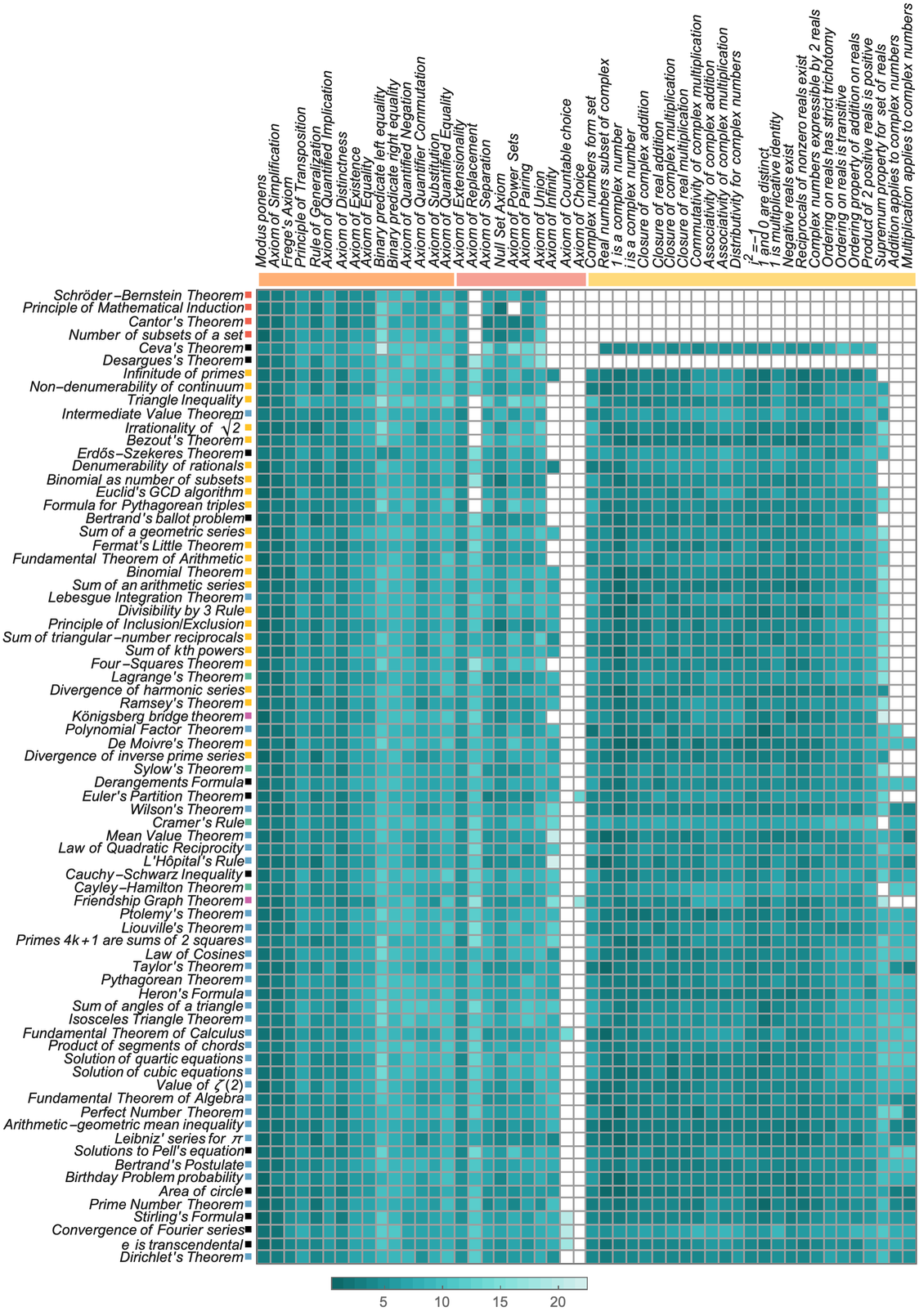

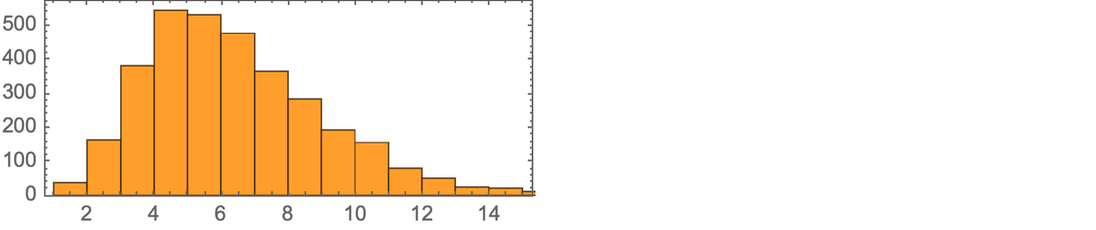

where now there are 2860 “theorems”, roughly exponentially distributed across sizes according to

|

and with a typical “size-19” theorem being:

|

|

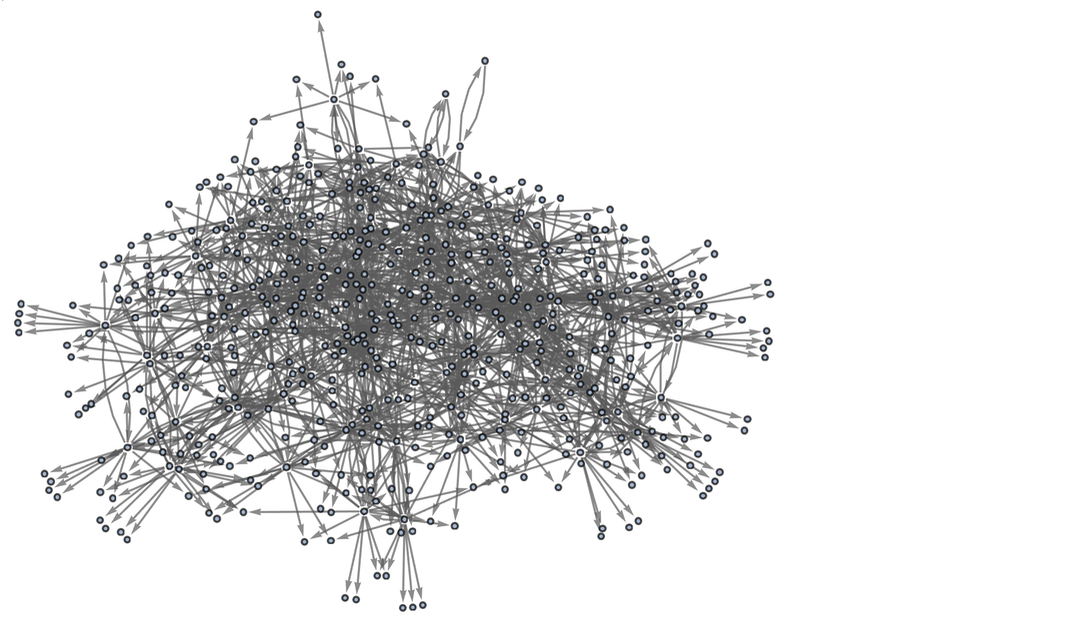

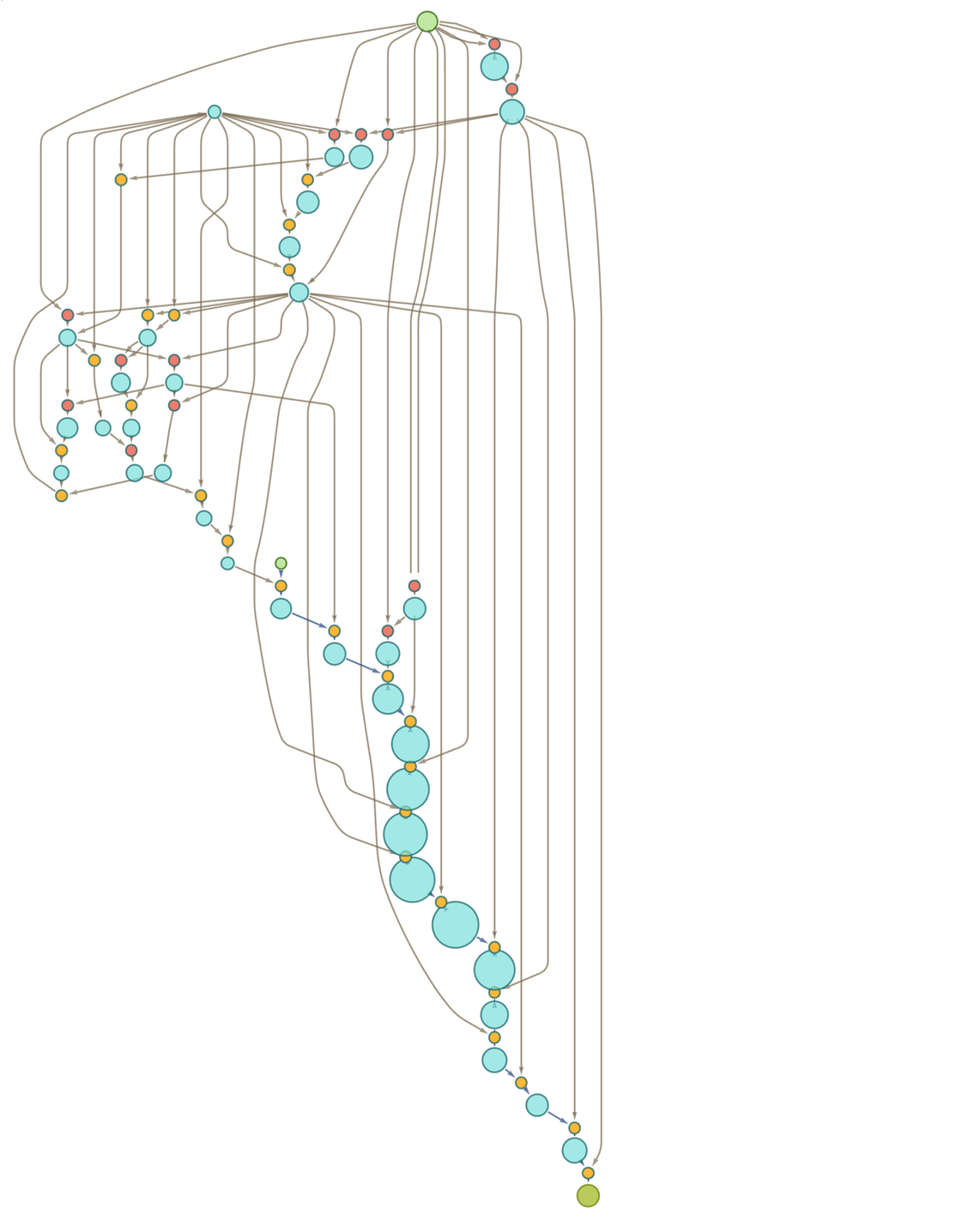

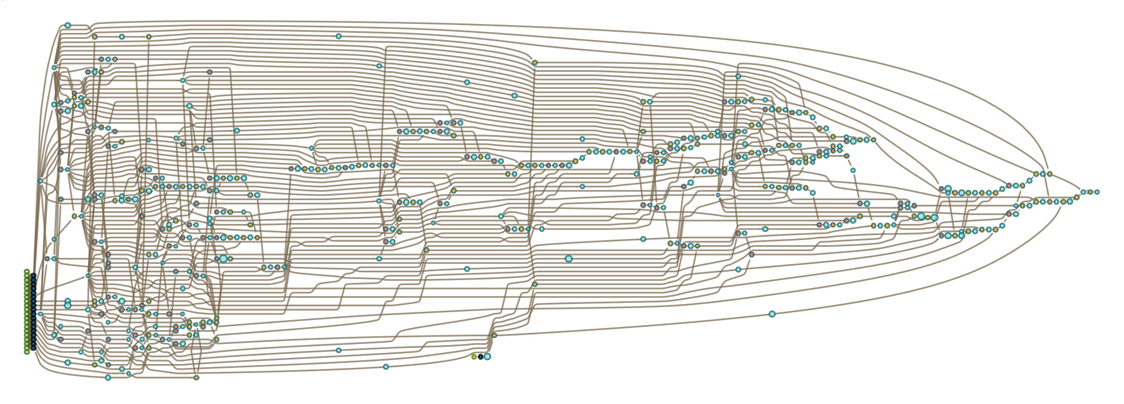

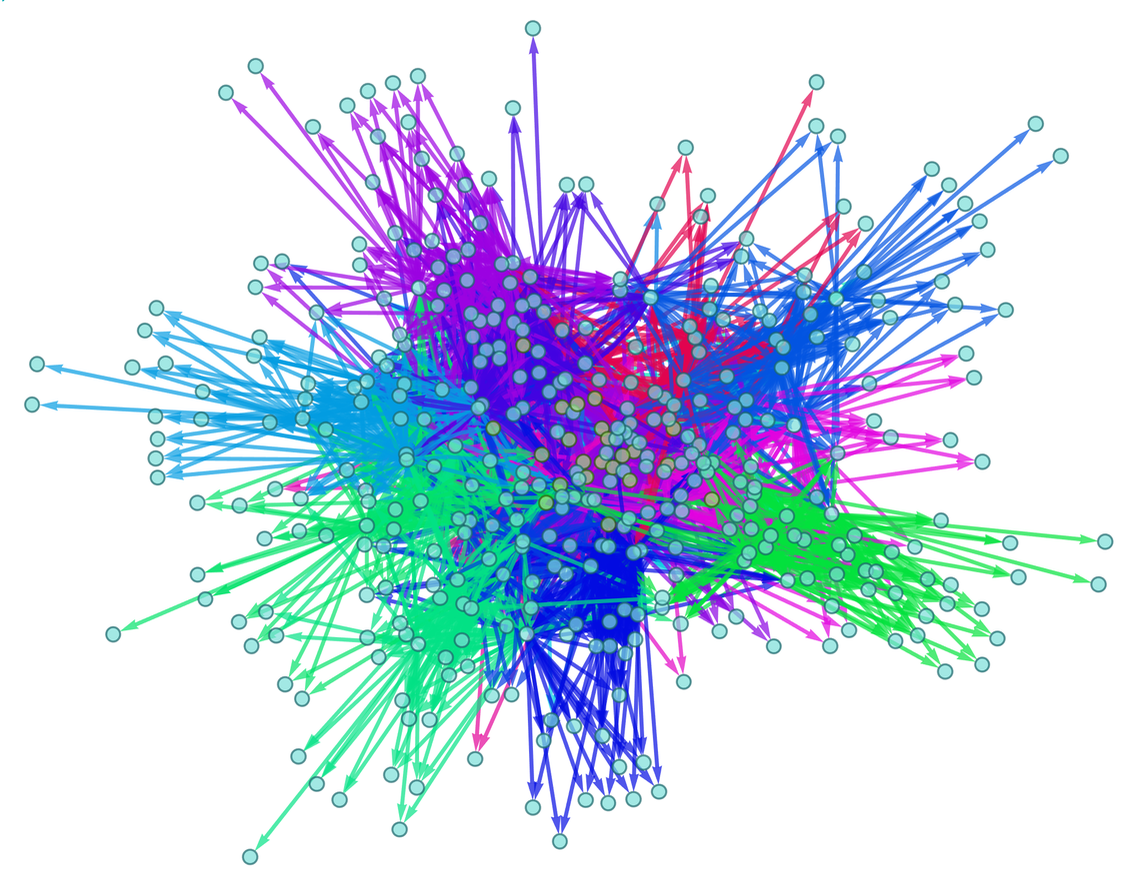

In effect we can think of our original rule (or “axiom”) as having initiated some kind of “mathematical Big Bang” from which an increasing number of theorems are generated. Early on we described having a “gas” of mathematical theorems that—a little like molecules—can interact and create new theorems. So now we can view our accumulative evolution process as a concrete example of this.

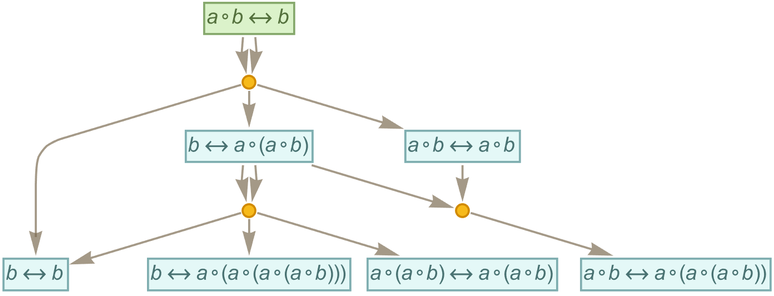

Let’s consider the rule from previous sections:

|

|

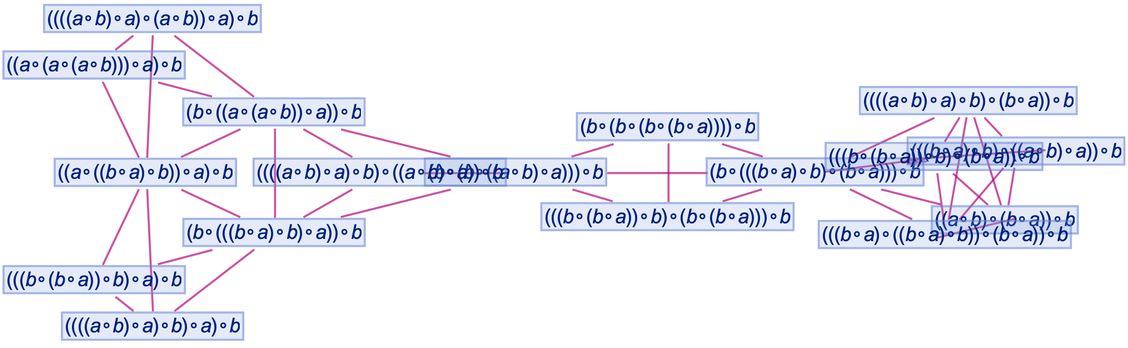

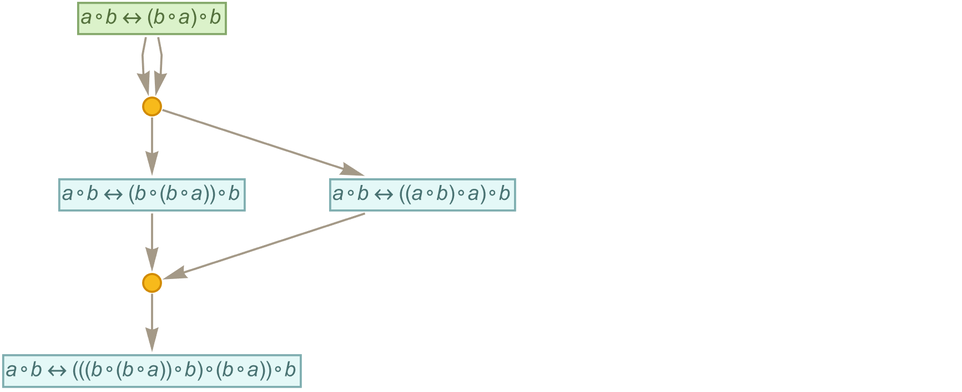

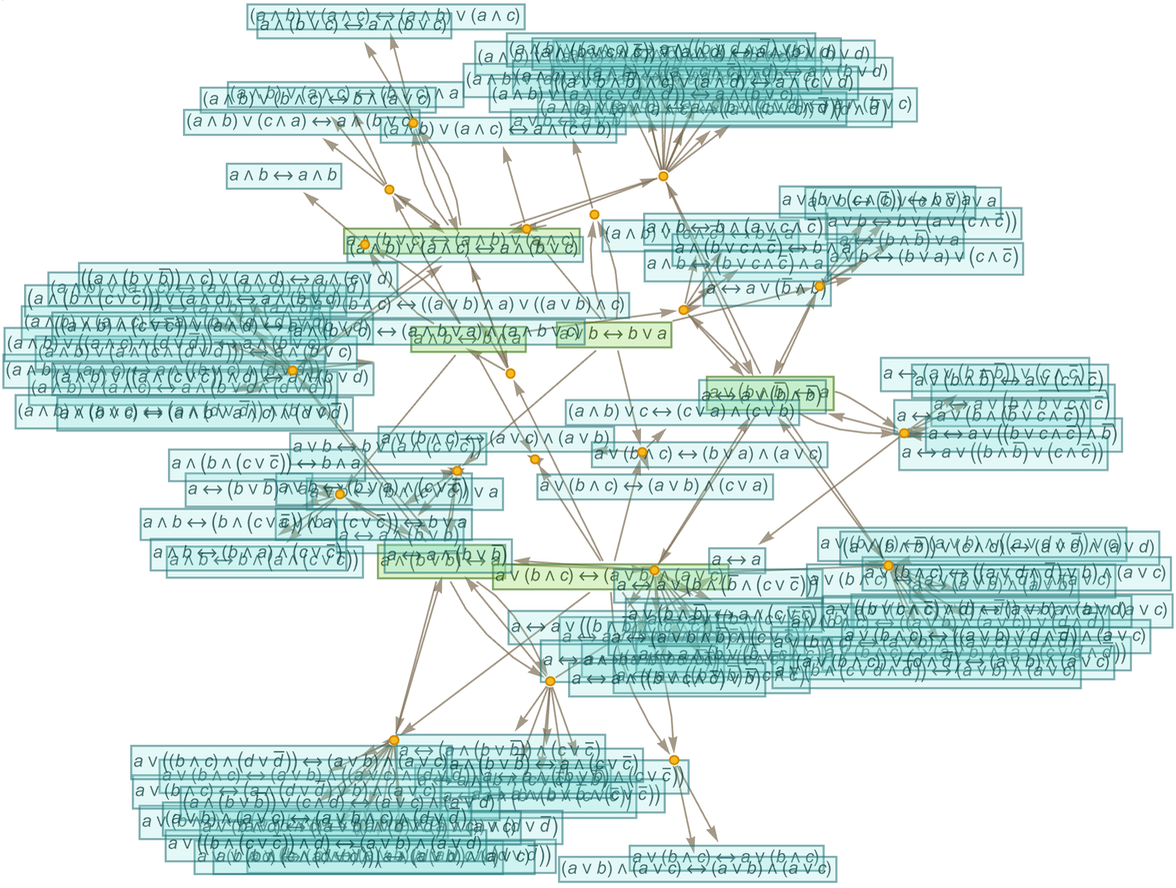

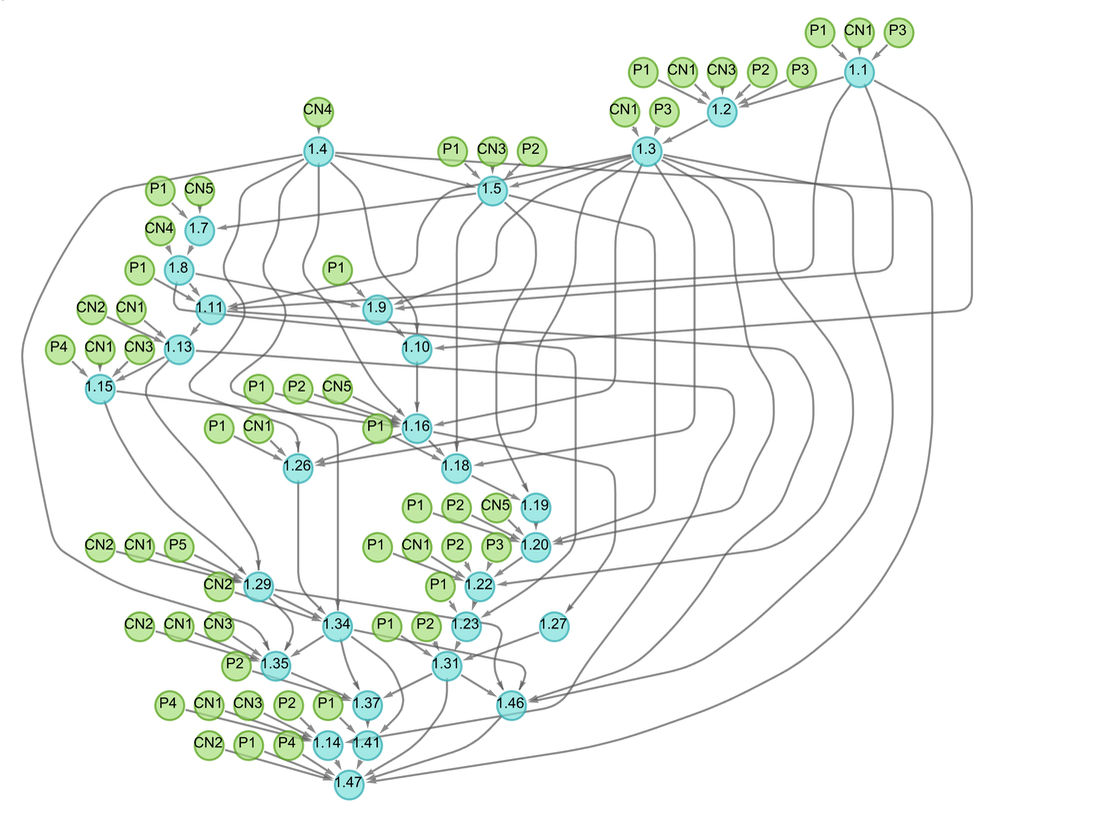

After one step of accumulative evolution according to this rule we get:

|

After 2 and 3 steps the results are:

|

What is the significance of all this complexity? At a basic level, it’s just an example of the ubiquitous phenomenon in the computational universe (captured in the Principle of Computational Equivalence) that even systems with very simple rules can generate behavior as complex as anything. But the question is whether—on top of all this complexity—there are simple “coarse-grained” features that we can identify as “higher-level mathematics”; features that we can think of as capturing the “bulk” behavior of the accumulative evolution of axiomatic mathematics.

9 | Accumulative String Systems

As we’ve just seen, the accumulative evolution of even very simple transformation rules for expressions can quickly lead to considerable complexity. And in an effort to understand the essence of what’s going on, it’s useful to look at the slightly simpler case not of rules for “tree-structured expressions” but instead at rules for strings of characters.

Consider the seemingly trivial case of the rule:

|

|

After one step this gives

|

while after 2 steps we get

|

though treating ![]() as the same as ![]() this just becomes:

|

Here’s what happens with the rule:

|

|

|

After 2 steps we get

|

and after 3 steps

|

where now there are a total of 25 “theorems”, including (unsurprisingly) things like:

|

|

It’s worth noting that despite the “lexical similarity” of the string rule ![]() we’re now using to the expression rule ![]() from the previous section, these rules actually work in very different ways. The string rule can apply to characters anywhere within a string, but what it inserts is always of fixed size. The expression rule deals with trees, and only applies to “whole subtrees”, but what it inserts can be a tree of any size. (One can align these setups by thinking of strings as expressions in which characters are “bound together” by an associative operator, as in A·B·A·A. But if one explicitly gives associativity axioms these will lead to additional pieces in the token-event graph.)

A rule like ![]() also has the feature of involving patterns. In principle we could include patterns in strings too—both for single characters (as with _) and for sequences of characters (as with __)—but we won’t do this here. (We can also consider one-way rules, using → instead of ![]().)

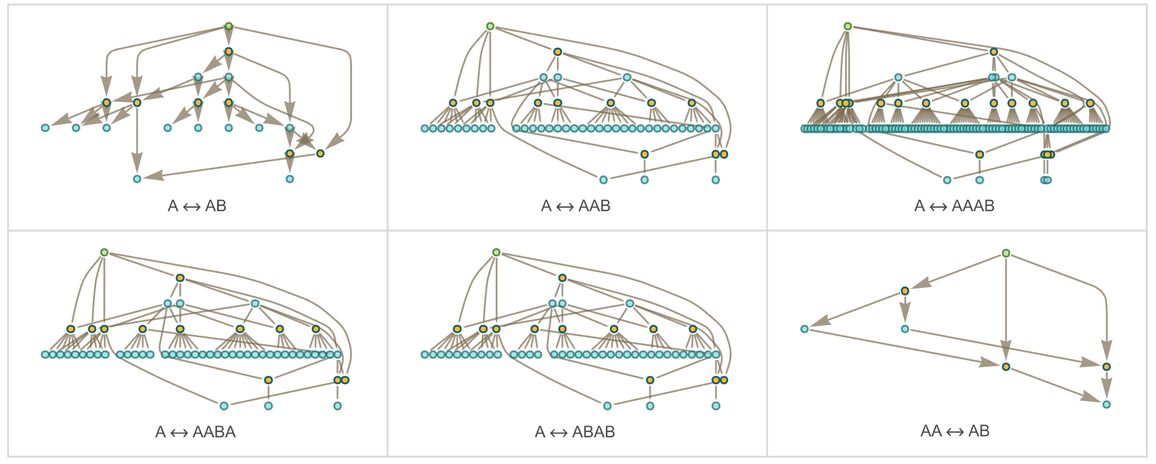

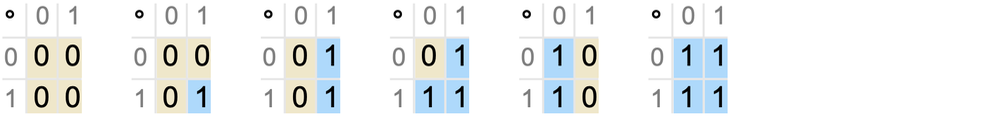

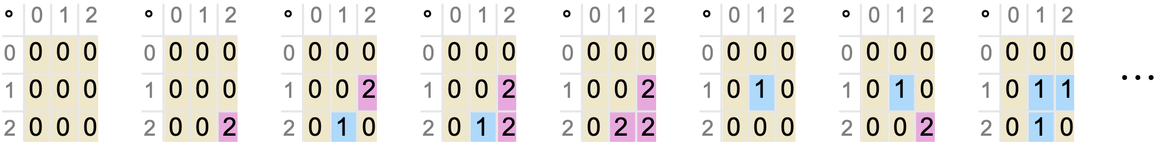

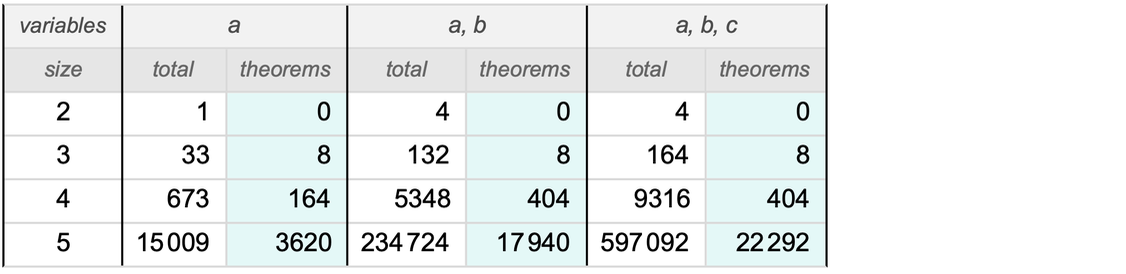

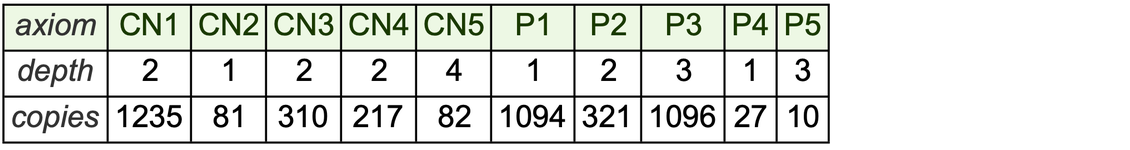

To get a general sense of the kinds of things that happen in accumulative (string) systems, we can consider enumerating all possible distinct two-way string transformation rules. With only a single character A, there are only two distinct cases

|

because ![]() systematically generates all possible ![]() rules

|

and at t steps gives a total number of rules equal to:

|

|

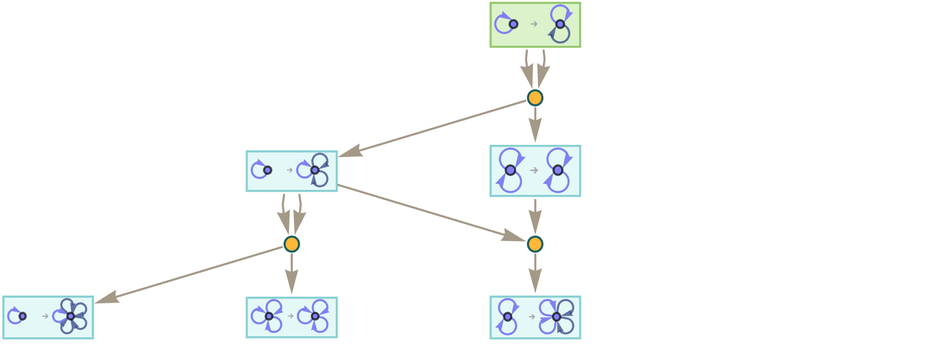

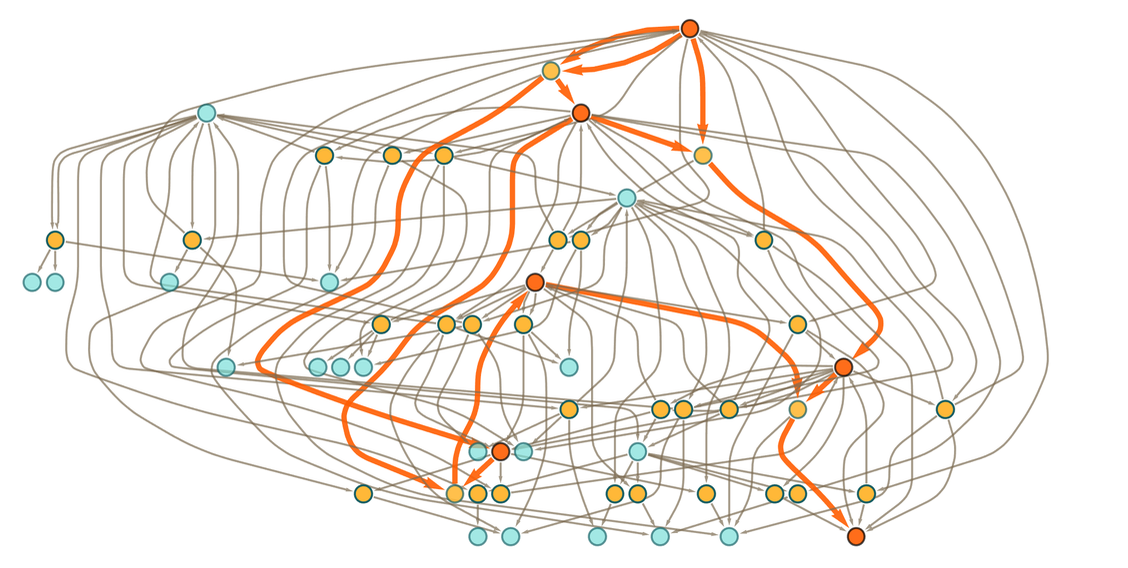

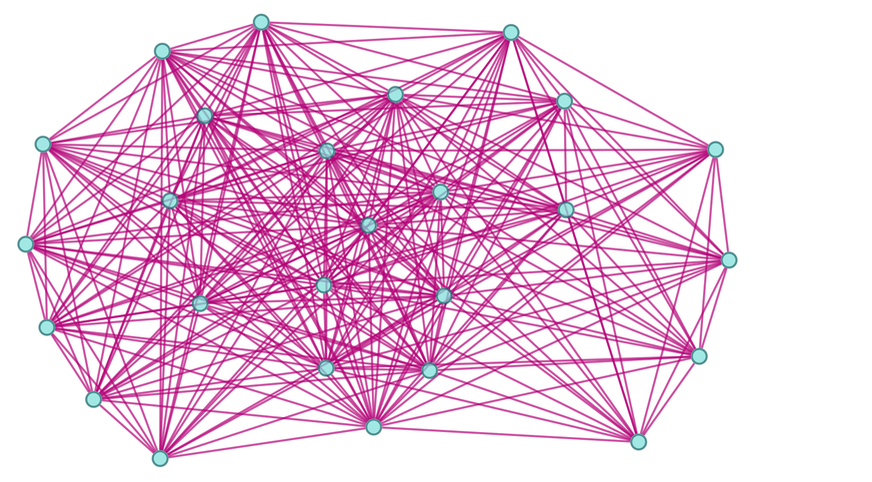

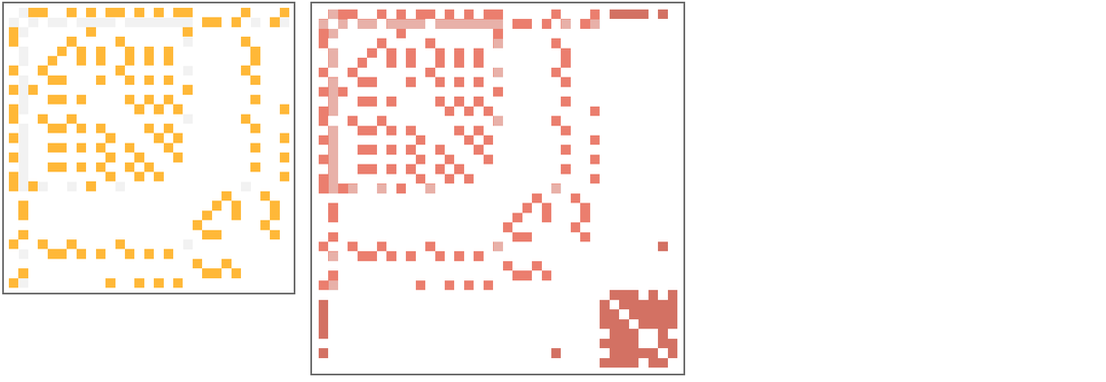

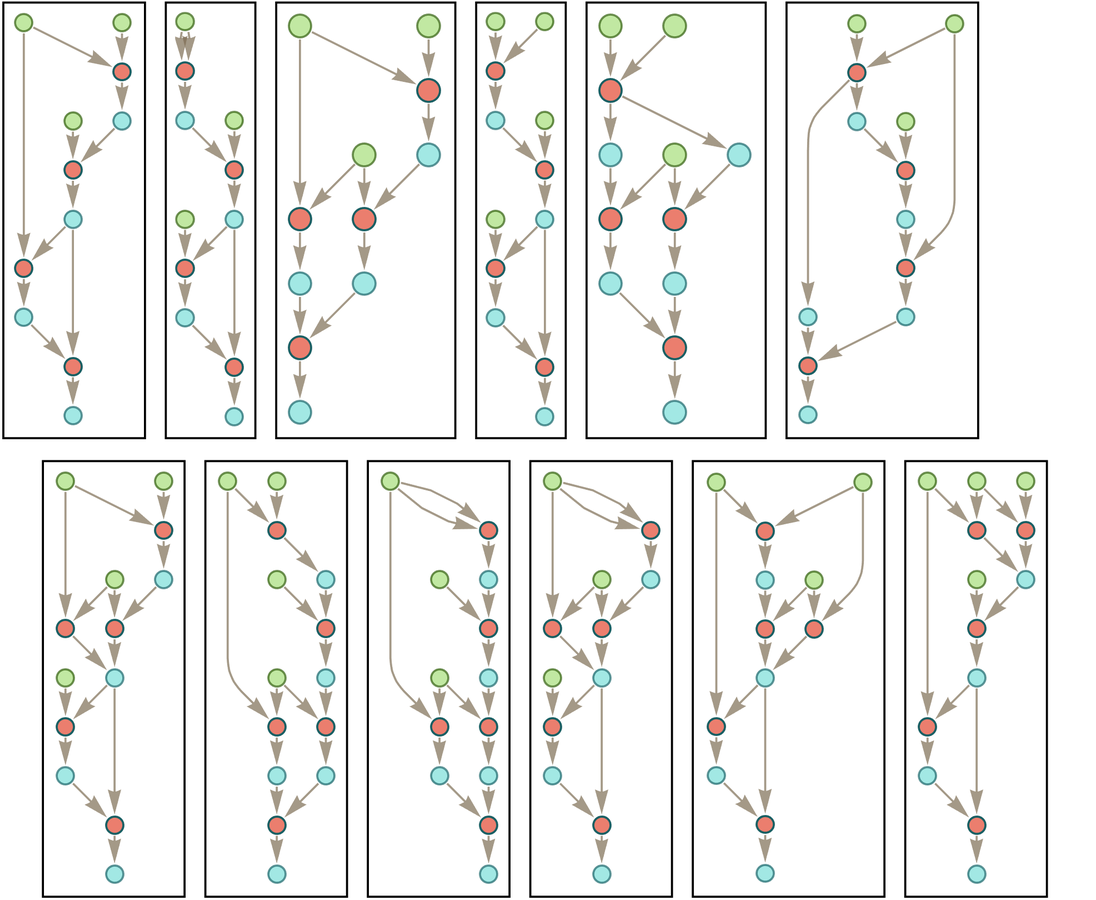

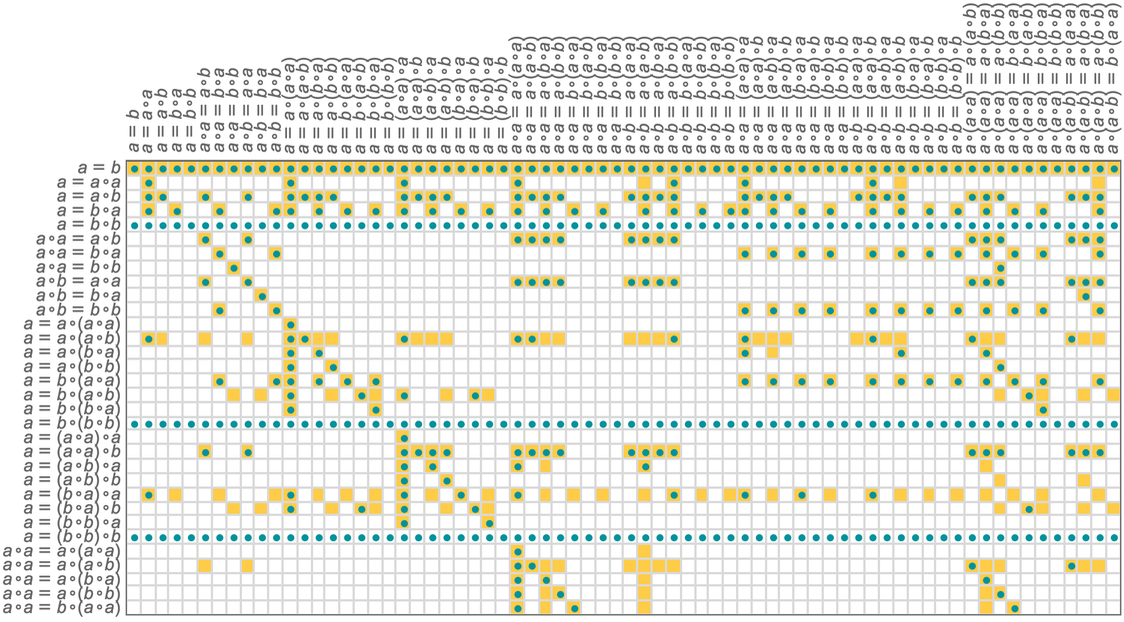

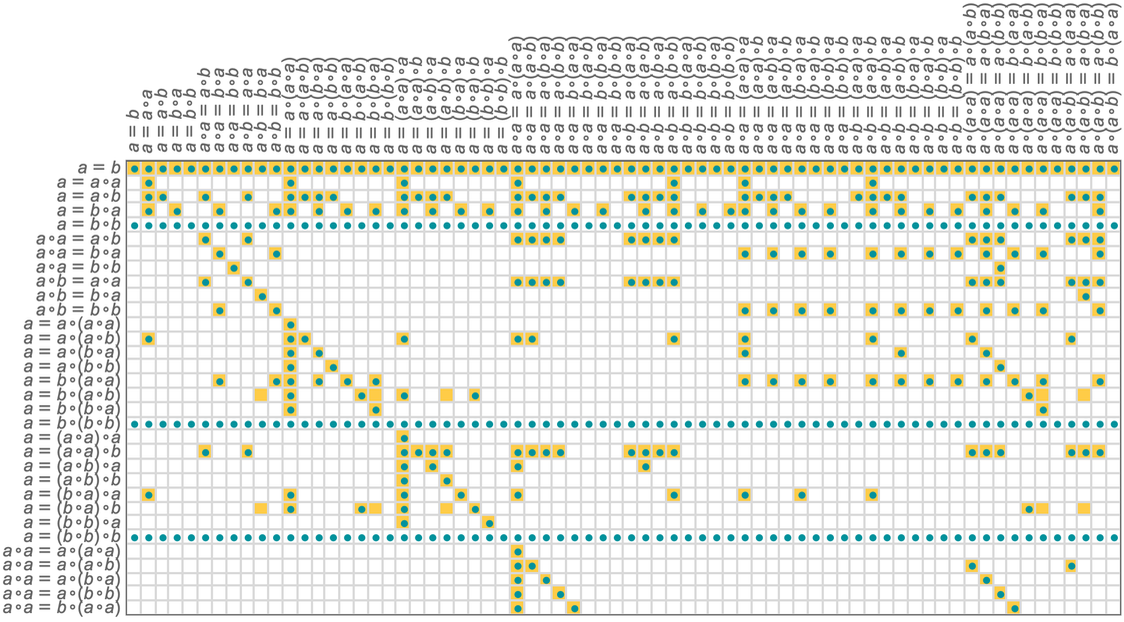

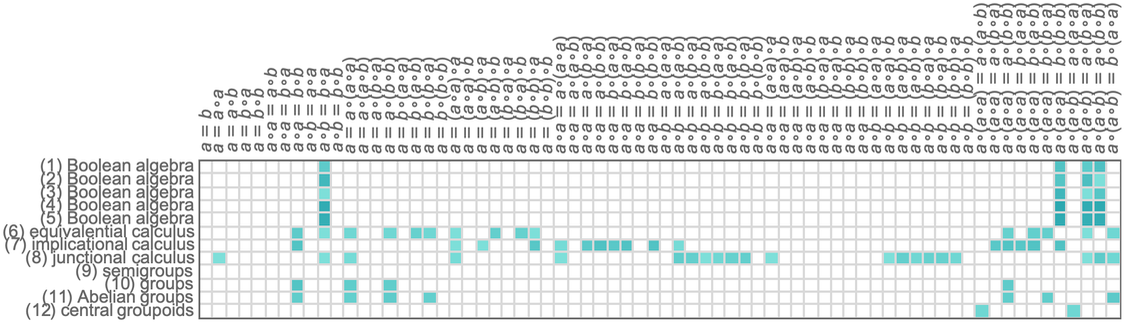

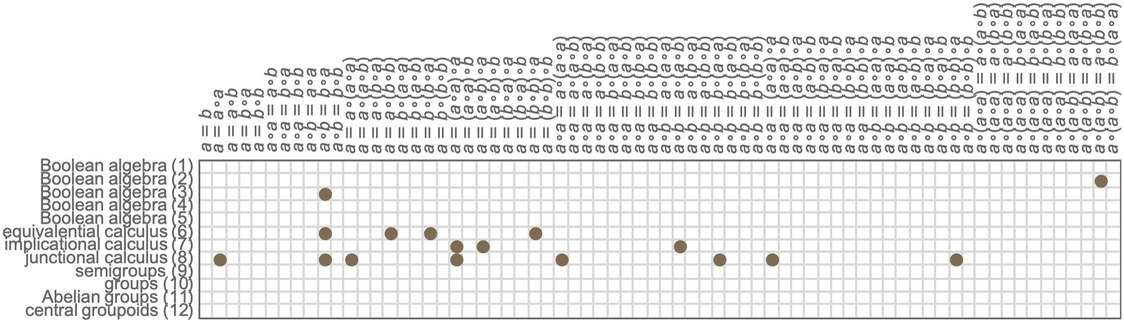

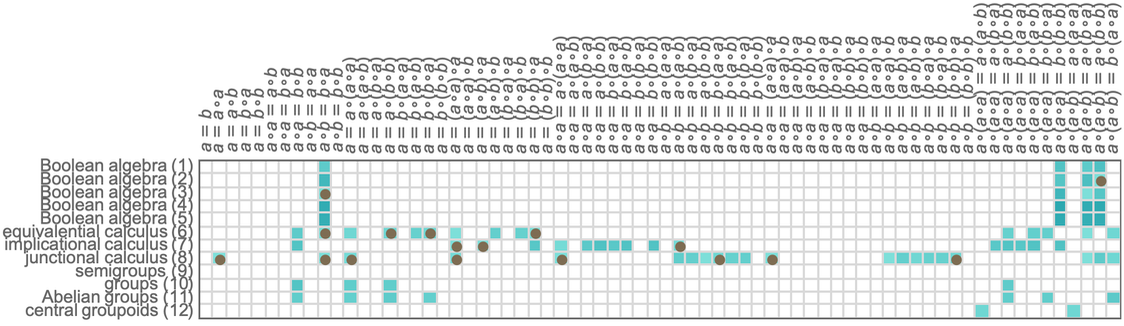

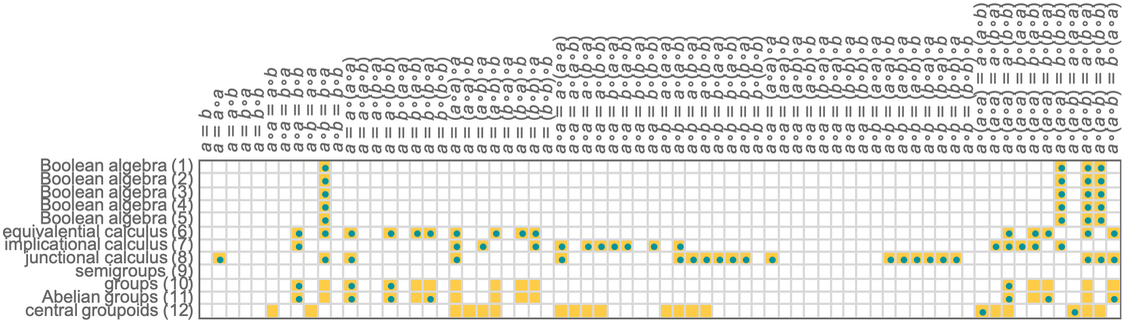

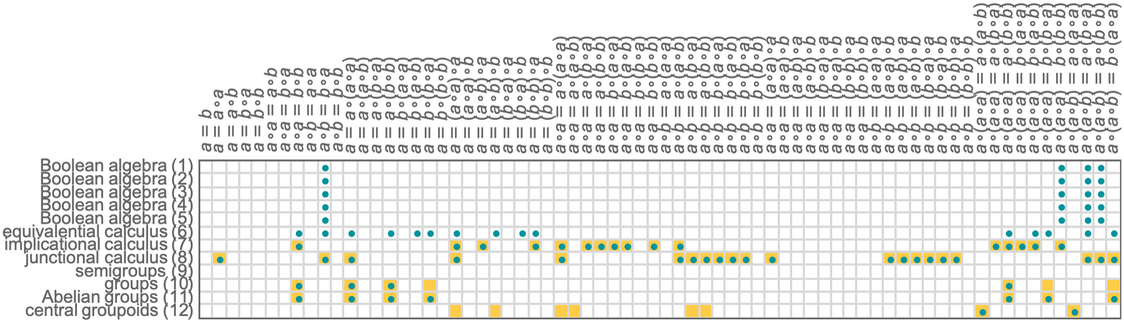

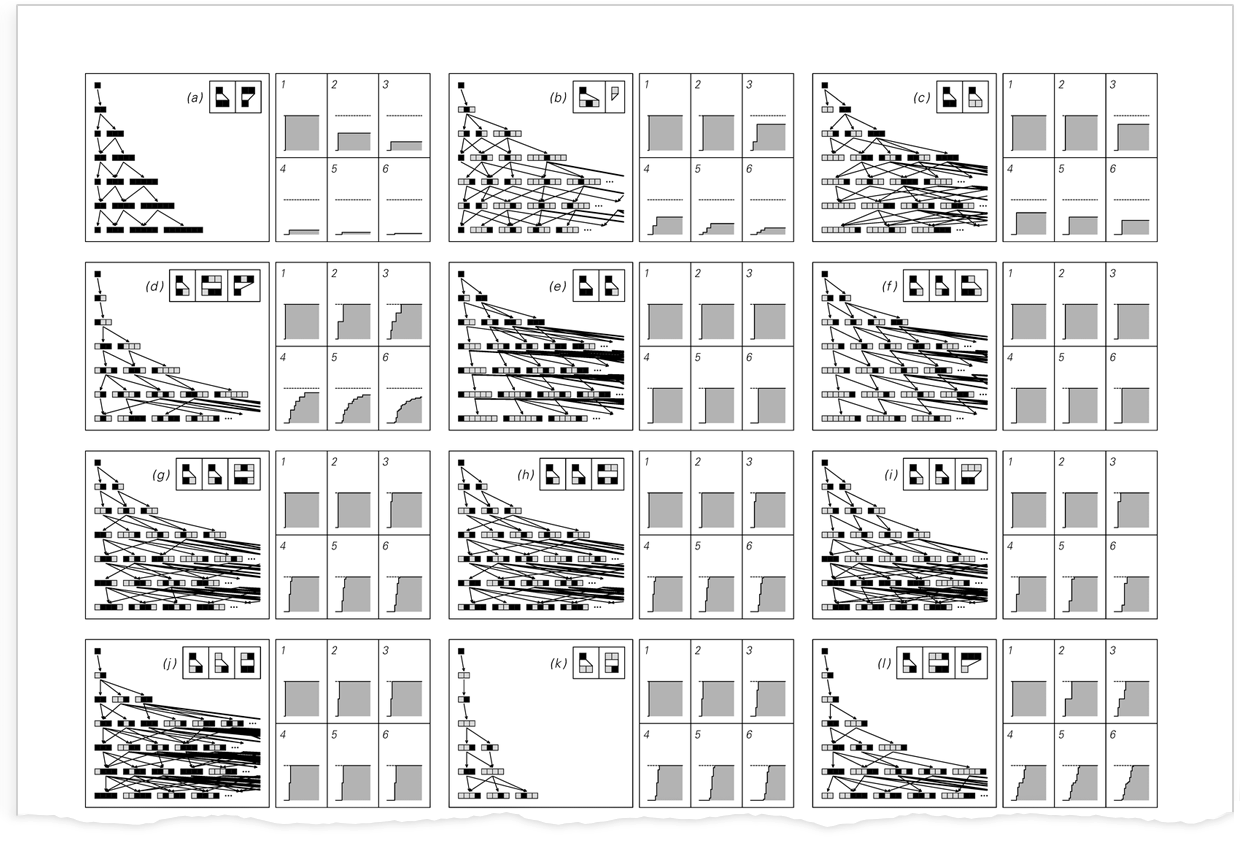

With characters A and B the distinct token-event graphs generated starting from rules with a total of at most 5 characters are:

|

Note that when the strings in the initial rule are the same length, only a rather trivial finite token-event graph is ever generated, as in the case of ![]():

|

But when the strings are of different lengths, there is always unbounded growth.
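To make the mechanics of accumulative string evolution concrete, here is a minimal Python sketch. It assumes a particular reading of the setup: each two-way rule is stored as an unordered pair of strings, and at each step every rule is applied (in both directions, at every position) to both sides of every rule. The function names and the example rules A ↔ B and A ↔ AB are illustrative assumptions, and the counts this sketch produces need not match those quoted above.

```python
from itertools import product

def norm(l, r):
    """Store a two-way rule as an unordered pair, so A<->B equals B<->A."""
    return tuple(sorted((l, r)))

def rewrites(s, lhs, rhs):
    """All strings obtained by replacing one occurrence of lhs in s by rhs."""
    out, i = [], s.find(lhs)
    while i != -1:
        out.append(s[:i] + rhs + s[i + len(lhs):])
        i = s.find(lhs, i + 1)
    return out

def step(rules):
    """One accumulative step: apply every rule to both sides of every rule."""
    new = set(rules)
    for (a, b), (l, r) in product(rules, repeat=2):
        for lhs, rhs in ((a, b), (b, a)):      # two-way: use both directions
            new.update(norm(x, r) for x in rewrites(l, lhs, rhs))
            new.update(norm(l, x) for x in rewrites(r, lhs, rhs))
    return new

def evolve(rule, steps):
    """Accumulate rules for a given number of steps from a single initial rule."""
    rules = {norm(*rule)}
    for _ in range(steps):
        rules = step(rules)
    return rules

same_length = evolve(("A", "B"), 5)    # equal-length sides
growing = evolve(("A", "AB"), 2)       # different-length sides
```

In this sketch the equal-length rule closes up after a single step into a small finite set of rules, while the rule with sides of different lengths keeps producing longer strings, consistent with the distinction drawn above.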

10 | The Case of Hypergraphs

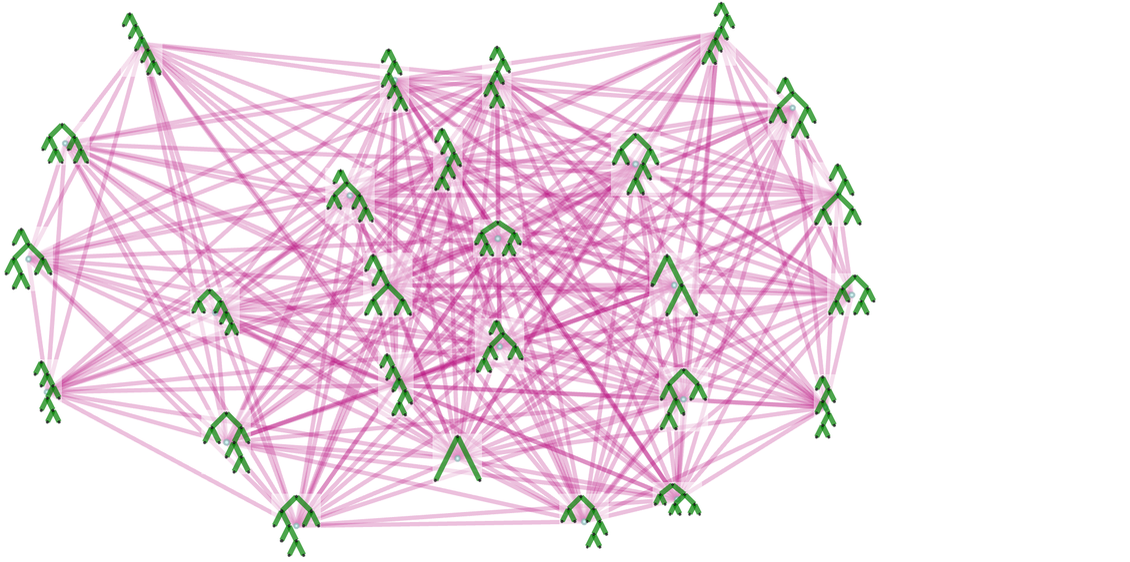

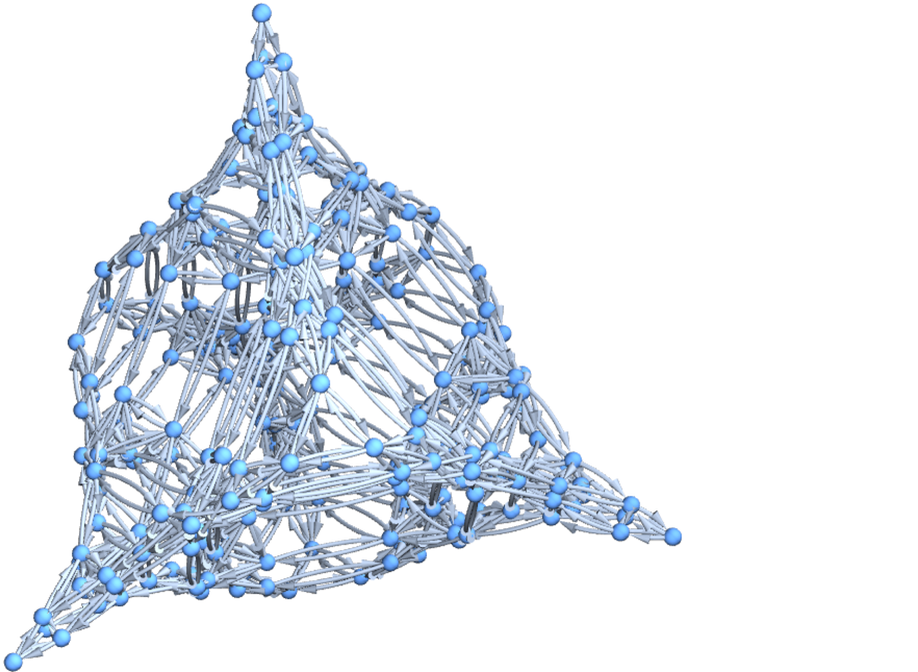

We’ve looked at accumulative versions of expression and string rewriting systems. So what about accumulative versions of hypergraph rewriting systems of the kind that appear in our Physics Project?

Consider the very simple hypergraph rule

|

|

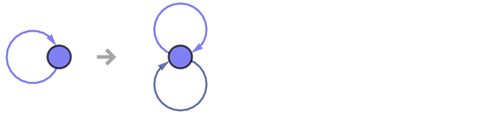

or pictorially:

|

(Note that the nodes that are named 1 here are really like pattern variables, that could be named for example x_.)

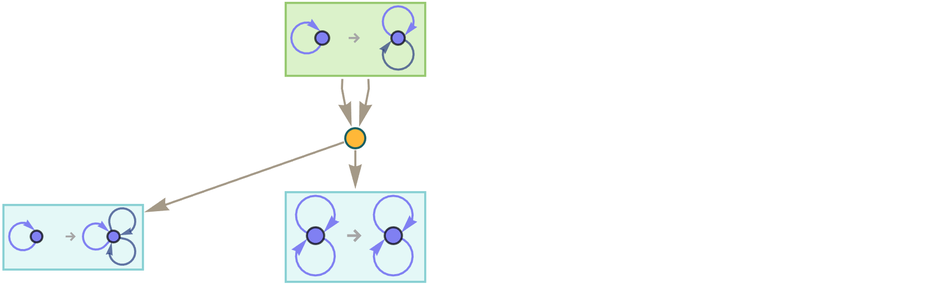

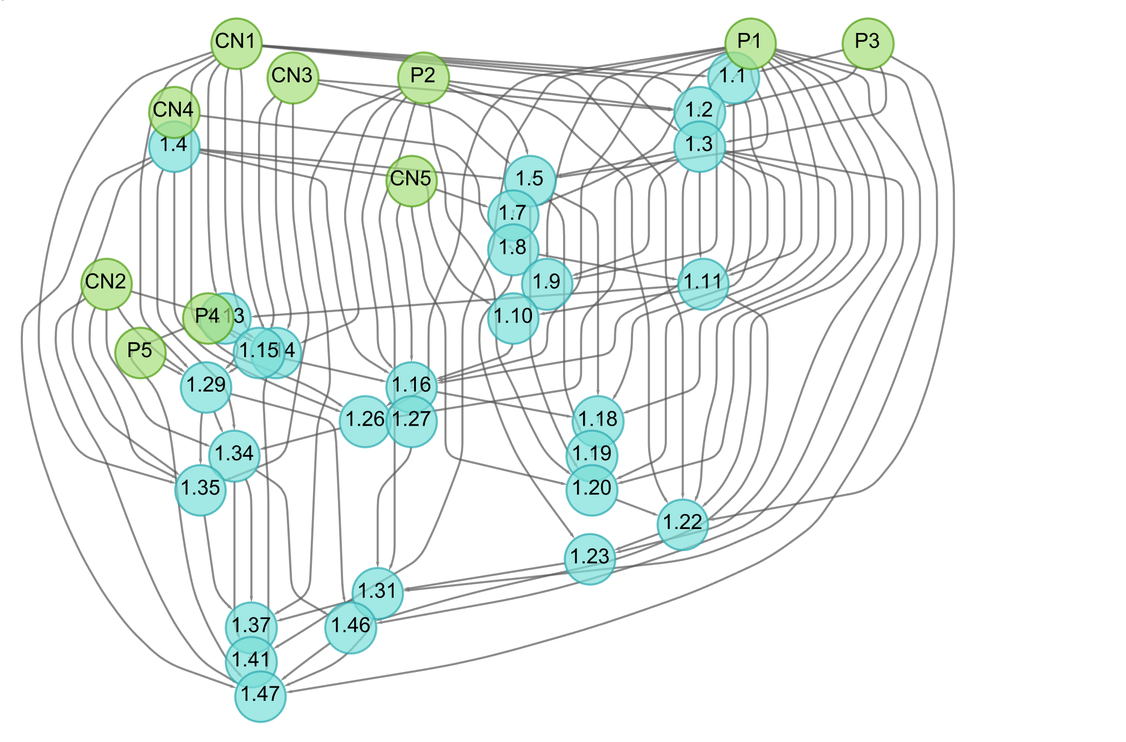

We can now do accumulative evolution with this rule, at each step combining results that involve equivalent (i.e. isomorphic) hypergraphs:

|
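Combining isomorphic hypergraphs requires some way to decide when two hypergraphs differ only in the names of their nodes. One simple (if inefficient) possibility is to compute a canonical form by trying every renaming, as in the following Python sketch. It assumes hypergraphs are given as lists of ordered hyperedges with integer node labels, and it handles a single hypergraph rather than a full rule; the function names are hypothetical, and the brute-force search is only practical for very small cases.

```python
from itertools import permutations

def canonical(hypergraph):
    """Smallest sorted edge list over all renamings of the nodes (brute force)."""
    nodes = sorted({n for edge in hypergraph for n in edge})
    best = None
    for perm in permutations(range(1, len(nodes) + 1)):
        rename = dict(zip(nodes, perm))
        relabelled = tuple(sorted(tuple(rename[n] for n in edge)
                                  for edge in hypergraph))
        if best is None or relabelled < best:
            best = relabelled
    return best

def merge_isomorphic(hypergraphs):
    """Keep one representative per isomorphism class."""
    return sorted({canonical(h) for h in hypergraphs})

path_a = [(1, 2), (2, 3)]
path_b = [(7, 9), (5, 7)]     # the same path with different node names
star = [(1, 2), (1, 3)]       # a different structure
classes = merge_isomorphic([path_a, path_b, star])
```

Here the two paths collapse to one canonical form while the star stays distinct, so `classes` has two entries.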

After two steps this gives:

|

And after 3 steps:

|

How does all this compare to “ordinary” evolution by hypergraph rewriting? Here’s a multiway graph based on applying the same underlying rule repeatedly, starting from an initial condition formed from the rule:

|

What we see is that the accumulative evolution in effect “shortcuts” the ordinary multiway evolution, essentially by “caching” the result of every piece of every transformation between states (which in this case are rules), and delivering a given state in fewer steps.

In our typical investigation of hypergraph rewriting for our Physics Project we consider one-way transformation rules. Inevitably, though, the ruliad contains rules that go both ways. And here, in an effort to understand the correspondence with our metamodel of mathematics, we can consider two-way hypergraph rewriting rules. An example is the two-way version of the rule above:

|

|

|

Now the token-event graph becomes

|

or after 2 steps (where now the transformations from “later states” to “earlier states” have started to fill in):

|

Just like in ordinary hypergraph evolution, the only way to get hypergraphs with additional hyperedges is to start with a rule that involves the addition of new hyperedges—and the same is true for the addition of new elements. Consider the rule:

|

|

|

|

After 1 step this gives

|

while after 2 steps it gives:

|

The general appearance of this token-event graph is not much different from what we saw with string rewrite or expression rewrite systems. So what this suggests is that it doesn’t matter much whether we’re starting from our metamodel of axiomatic mathematics or from any other reasonably rich rewriting system: we’ll always get the same kind of “large-scale” token-event graph structure. And this is an example of what we’ll use to argue for general laws of metamathematics.

11 | Proofs in Accumulative Systems

In an earlier section, we discussed how paths in a multiway graph can represent proofs of “equivalence” between expressions (or the “entailment” of one expression by another). For example, with the rule (or “axiom”)

|

|

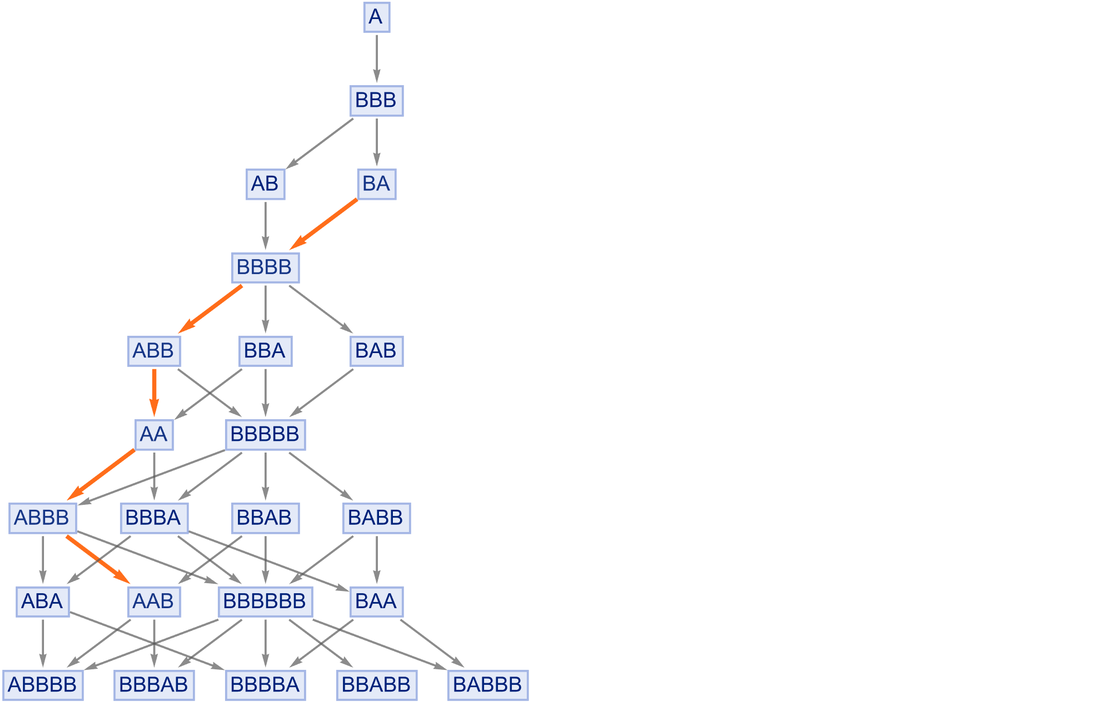

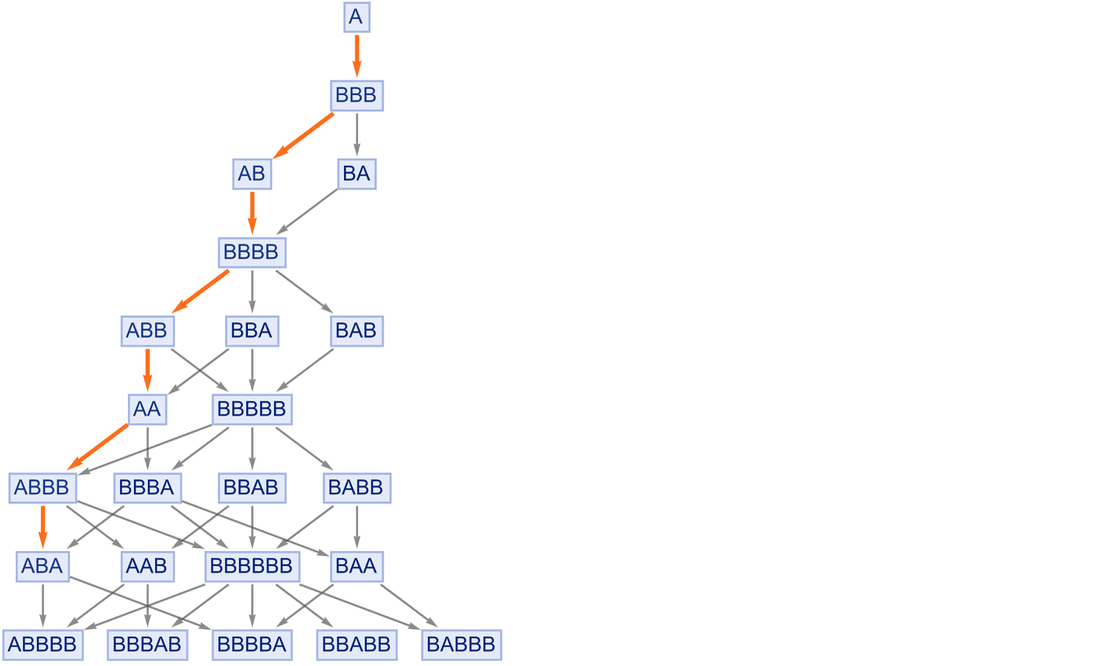

this shows a path that “proves” that “BA entails AAB”:

|

But once we know this, we can imagine adding this result (as what we can think of as a “lemma”) to our original rule:

|

|

And now (the “theorem”) “BA entails AAB” takes just one step to prove—and all sorts of other proofs are also shortened:

|
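The effect of adding a lemma can be sketched with a breadth-first search for the shortest path in a multiway string system. The rule set used here, {A → BBB, BB → A}, is an assumption chosen for illustration (the rule shown above is not reproduced in this text), as are the function names and the length cap that keeps the search finite.

```python
from collections import deque

def successors(s, rules):
    """All strings reachable from s by one application of one rule."""
    for lhs, rhs in rules:
        i = s.find(lhs)
        while i != -1:
            yield s[:i] + rhs + s[i + len(lhs):]
            i = s.find(lhs, i + 1)

def shortest_proof(start, goal, rules, max_len=12):
    """Breadth-first search for a shortest path from start to goal."""
    parent = {start: None}
    queue = deque([start])
    while queue:
        s = queue.popleft()
        if s == goal:
            path = []
            while s is not None:       # walk back to the start
                path.append(s)
                s = parent[s]
            return path[::-1]
        for t in successors(s, rules):
            if t not in parent and len(t) <= max_len:
                parent[t] = s
                queue.append(t)
    return None

RULES = [("A", "BBB"), ("BB", "A")]    # assumed rule set, for illustration only
proof = shortest_proof("BA", "AAB", RULES)
with_lemma = shortest_proof("BA", "AAB", RULES + [("BA", "AAB")])
```

With these assumed rules the search finds a five-step path from BA to AAB, and once the lemma BA → AAB is included the same statement is proved in a single step.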

It’s perfectly possible to imagine evolving a multiway system with a kind of “caching-based” speed-up mechanism where every new entailment discovered is added to the list of underlying rules. And, by the way, it’s also possible to use two-way rules throughout the multiway system:

|

But accumulative systems provide a much more principled way to progressively “add what’s discovered”. So what do proofs look like in such systems?

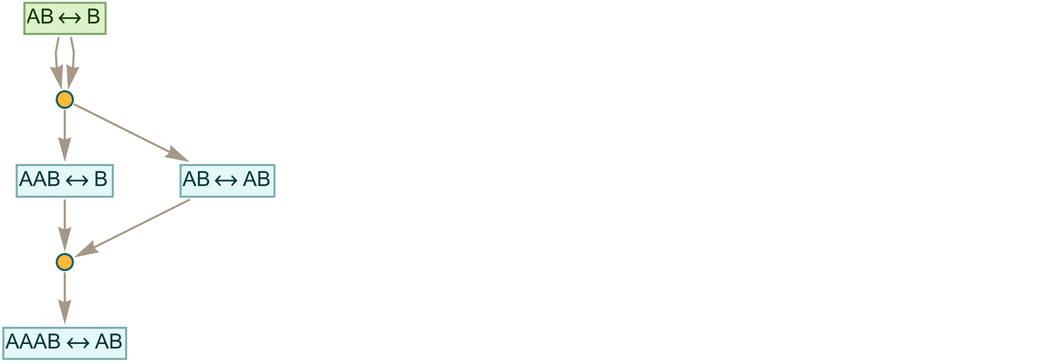

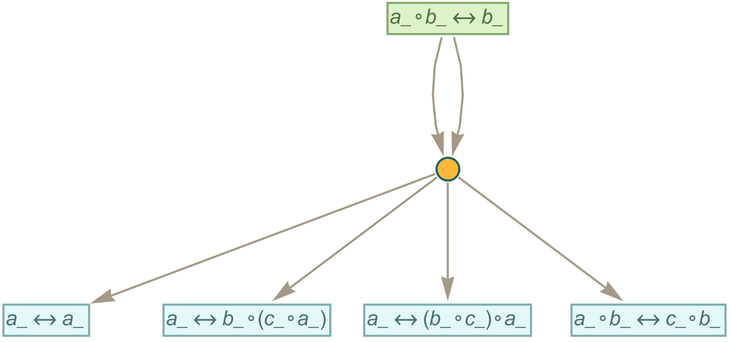

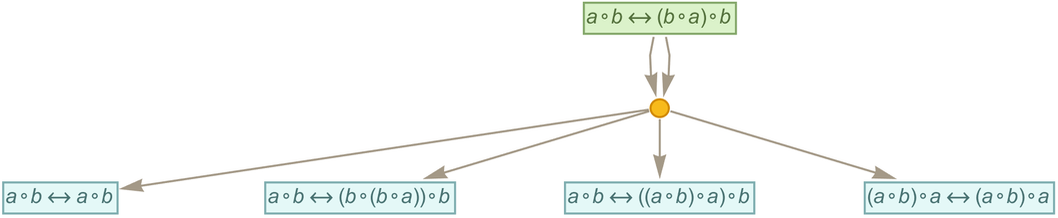

Consider the rule:

|

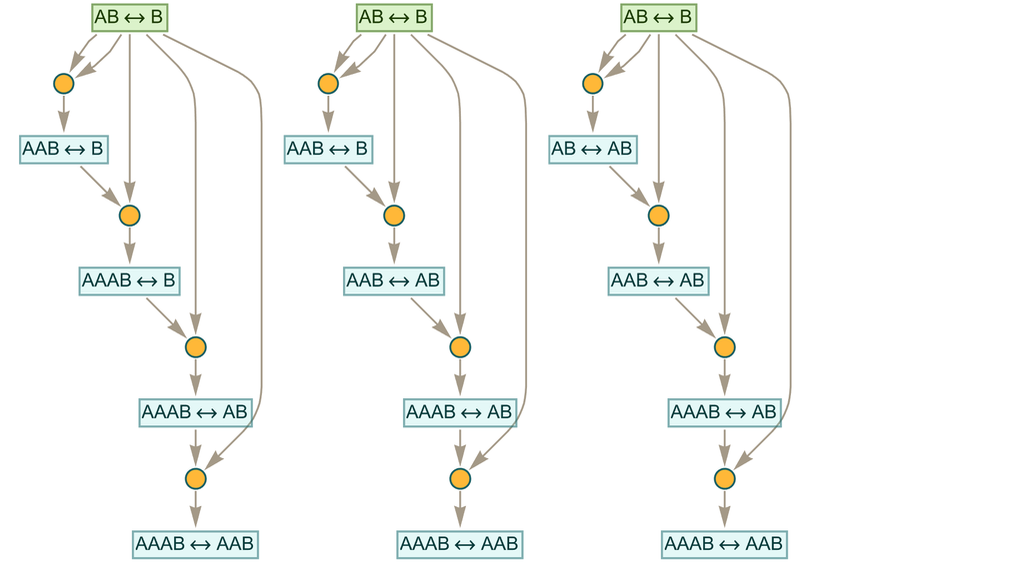

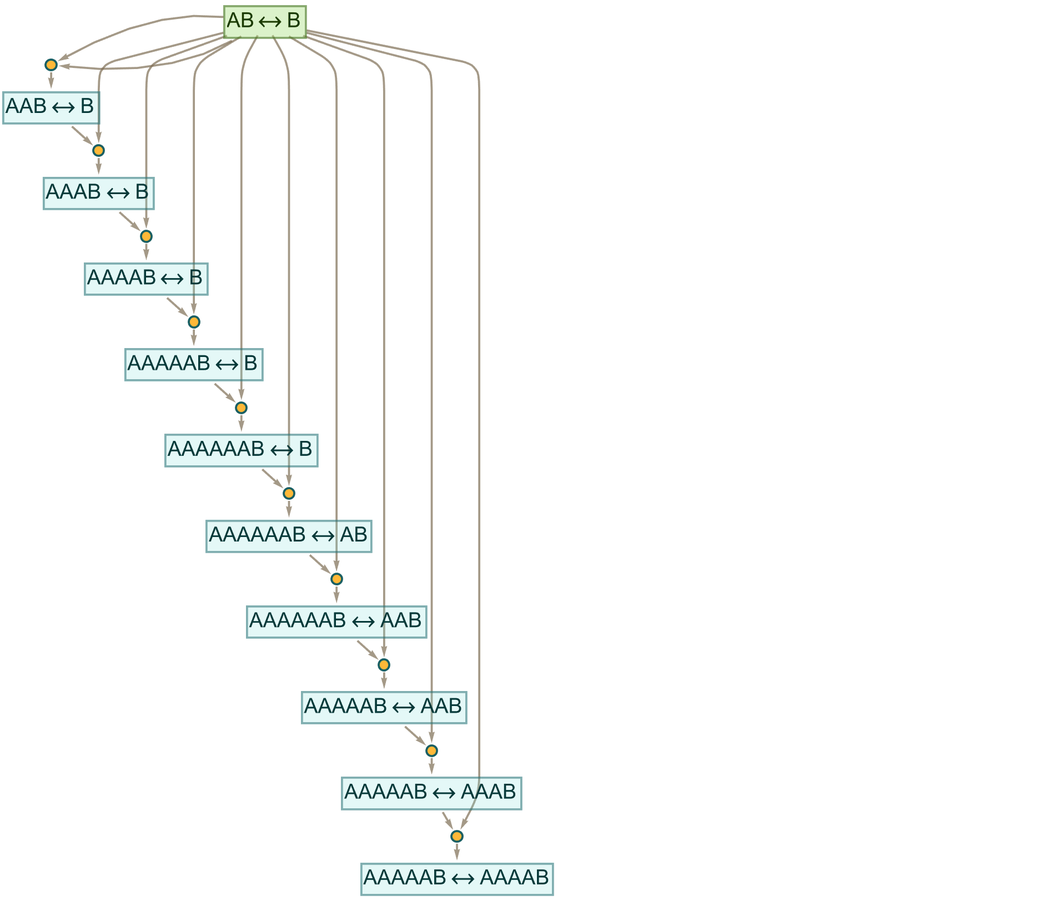

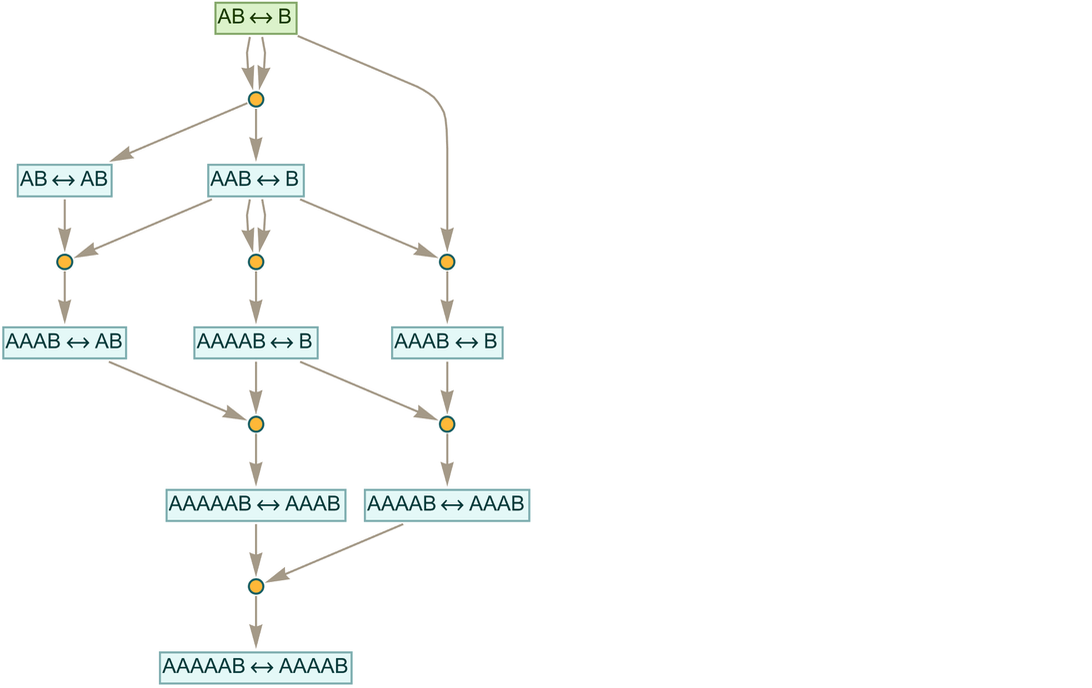

|

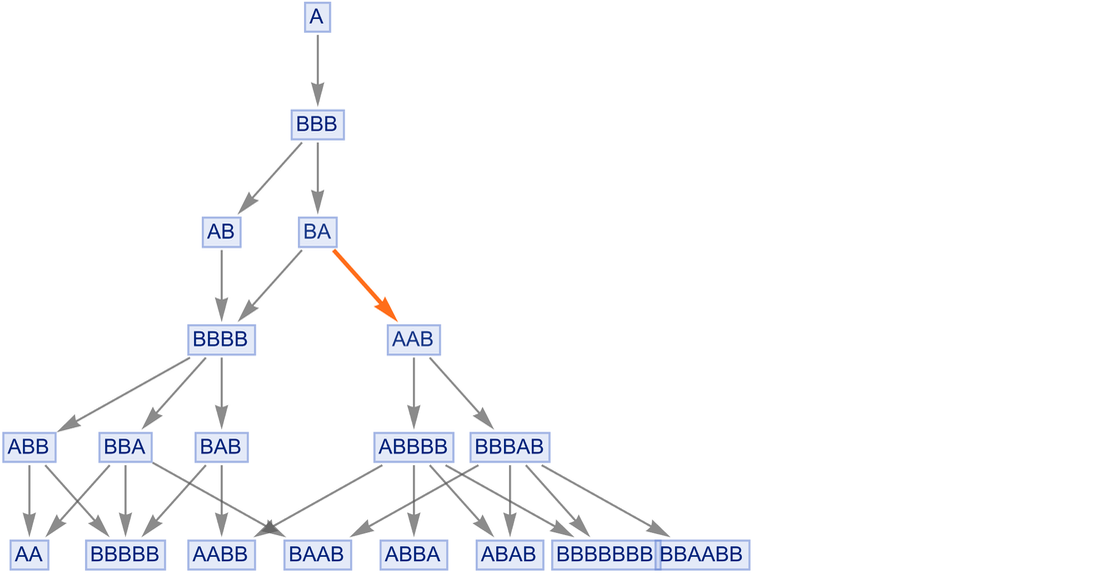

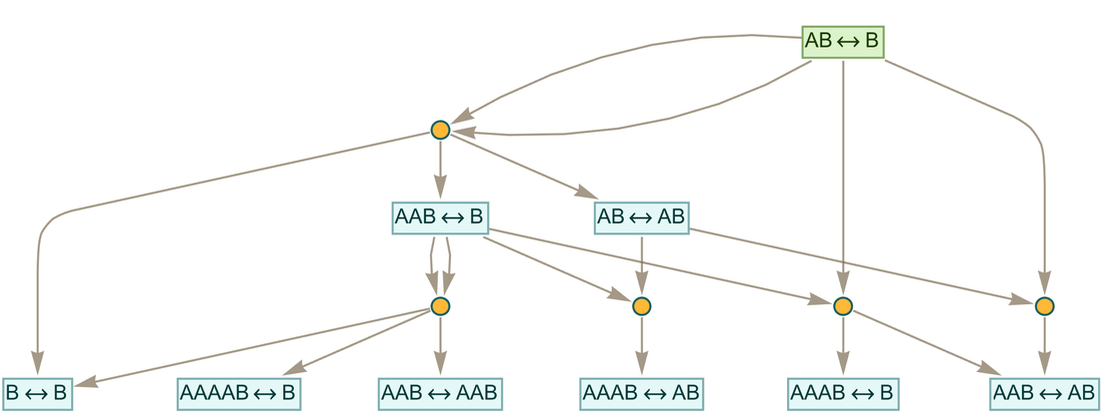

Running it for 2 steps we get the token-event graph:

|

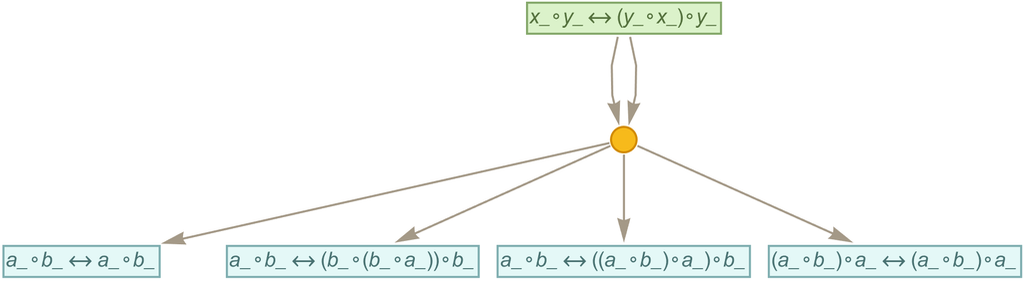

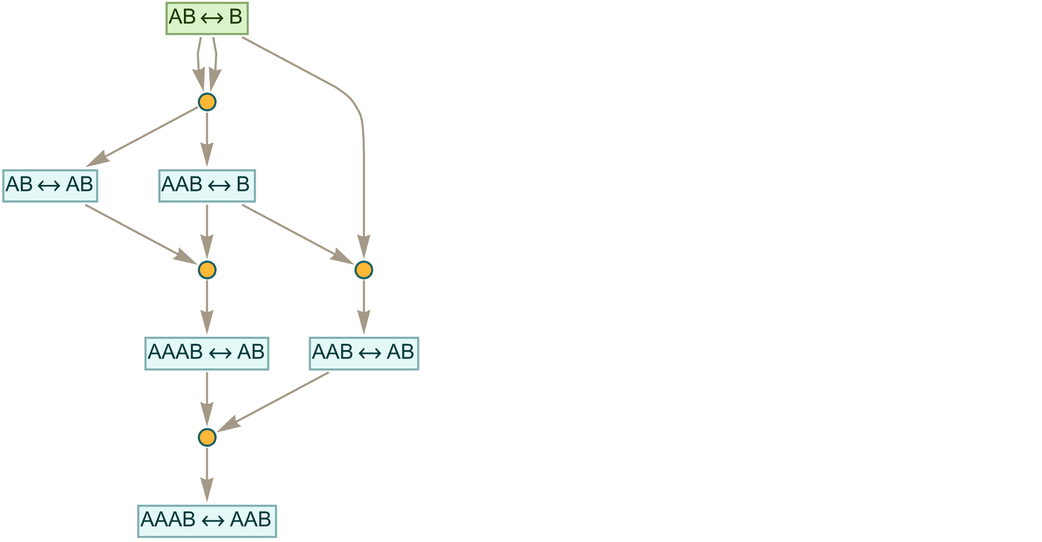

Now let’s say we want to prove that the original “axiom” ![]() implies (or “entails”) the “theorem” ![](). Here’s the subgraph that demonstrates the result:

|

And here it is as a separate “proof graph”

|

where each event takes two inputs—the “rule to be applied” and the “rule to apply to”—and the output is the derived (i.e. entailed or implied) new rule or rules.
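One way to picture such a proof graph is to record, for each derived rule, the event that first produced it, and then to walk back from the theorem to the axiom. The Python sketch below does this for two-way string rules. The axiom A ↔ AB, the theorem A ↔ ABBB and all function names are assumptions made for illustration, and the proof returned is simply the first one found, not necessarily one with the fewest events.

```python
def rewrites(s, lhs, rhs):
    """All strings obtained by replacing one occurrence of lhs in s by rhs."""
    out, i = [], s.find(lhs)
    while i != -1:
        out.append(s[:i] + rhs + s[i + len(lhs):])
        i = s.find(lhs, i + 1)
    return out

def derive(applied, target):
    """Rules produced by applying one two-way rule to either side of another."""
    (a, b), (l, r) = applied, target
    for lhs, rhs in ((a, b), (b, a)):
        for x in rewrites(l, lhs, rhs):
            yield tuple(sorted((x, r)))
        for x in rewrites(r, lhs, rhs):
            yield tuple(sorted((l, x)))

def find_proof(axiom, theorem, max_steps=3):
    """Return a list of events (rule applied, rule applied to, derived rule)."""
    origin = {axiom: None}             # rule -> event inputs that first gave it
    for _ in range(max_steps):
        if theorem in origin:
            break
        current = list(origin)         # only rules present at the start of the step
        for applied in current:
            for target in current:
                for new in derive(applied, target):
                    origin.setdefault(new, (applied, target))
    if theorem not in origin:
        return None
    events, seen = [], set()
    def collect(rule):
        if rule in seen or origin[rule] is None:
            return
        seen.add(rule)
        applied, target = origin[rule]
        collect(applied)
        collect(target)
        events.append((applied, target, rule))
    collect(theorem)
    return events

proof = find_proof(("A", "AB"), ("A", "ABBB"))
```

In this example the proof consists of two events: the axiom applied to itself yields the intermediate lemma A ↔ ABB, and the axiom applied to that lemma yields the theorem.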

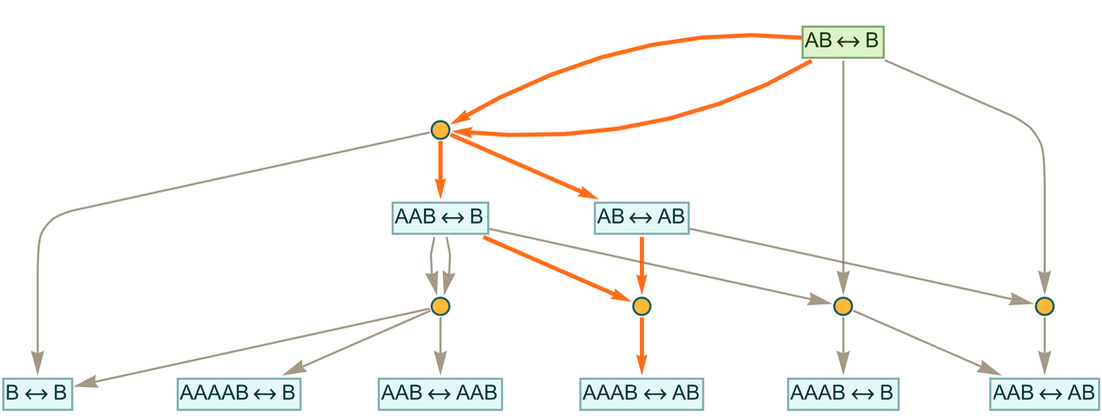

If we run the accumulative system for another step, we get:

|

Now there are additional “theorems” that have been generated. An example is:

|

|

And now we can find a proof of this theorem:

|

This proof exists as a subgraph of the token-event graph:

|

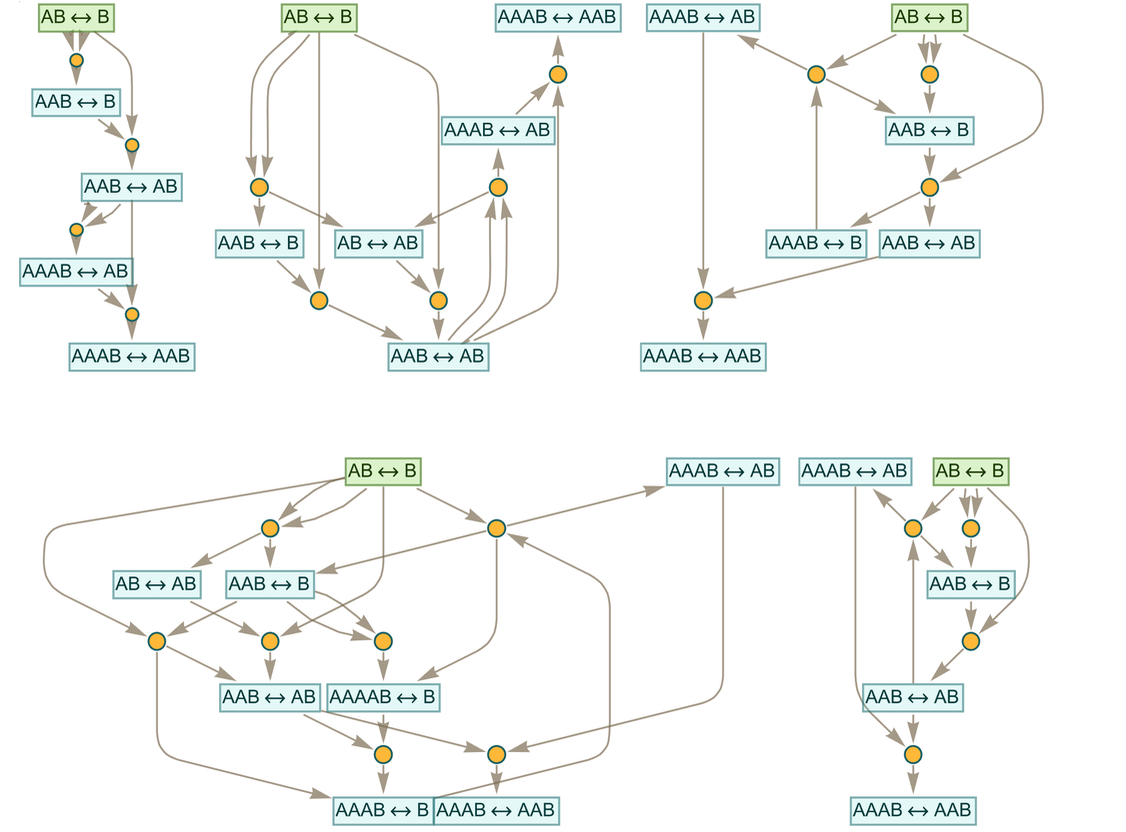

The proof just given has the fewest events—or “proof steps”—that can be used. But altogether there are 50 possible proofs, other examples being:

|

These correspond to the subgraphs:

|

How much has the accumulative character of these token-event graphs contributed to the structure of these proofs? It’s perfectly possible to find proofs that never use “intermediate lemmas” but always “go back to the original axiom” at every step. In this case examples are

|

which all in effect require at least one more “sequential event” than our shortest proof using intermediate lemmas.

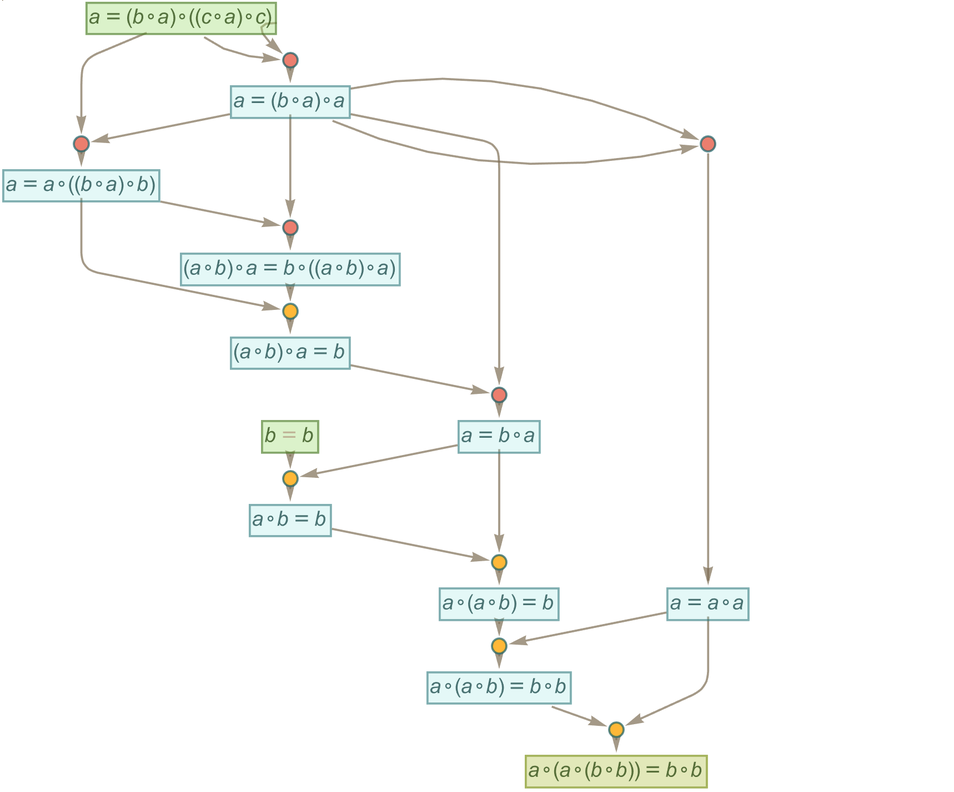

A slightly more dramatic example occurs for the theorem

|

|

where now without intermediate lemmas the shortest proof is

|

but with intermediate lemmas it becomes:

|

What we’ve done so far here is to generate a complete token-event graph for a certain number of steps, and then to see if we can find a proof in it for some particular statement. The proof is a subgraph of the “relevant part” of the full token-event graph. Often—in analogy to the simpler case of finding proofs of equivalences between expressions in a multiway graph—we’ll call this subgraph a “proof path”.

But in addition to just “finding a proof” in a fully constructed token-event graph, we can ask whether, given a statement, we can directly construct a proof for it. As discussed in the context of proofs in ordinary multiway graphs, computational irreducibility implies that in general there’s no “shortcut” way to find a proof. In addition, for any statement, there may be no upper bound on the length of proof that will be required (or on the size or number of intermediate “lemmas” that will have to be used). And this, again, is the shadow of undecidability in our systems: that there can be statements whose provability may be arbitrarily difficult to determine.

12 | Beyond Substitution: Cosubstitution and Bisubstitution

In making our “metamodel” of mathematics we’ve been discussing the rewriting of expressions according to rules. But there’s a subtle issue that we’ve so far avoided, that has to do with the fact that the expressions we’re rewriting are often themselves patterns that stand for whole classes of expressions. And this turns out to allow for additional kinds of transformations that we’ll call cosubstitution and bisubstitution.

Let’s talk first about cosubstitution. Imagine we have the expression f[a]. The rule ![]() would do a substitution for a to give f[b]. But if we have the expression f[c] the rule ![]() will do nothing.

Now imagine that we have the expression f[x_]. This stands for a whole class of expressions, including f[a], f[c], etc. For most of this class of expressions, the rule ![]() will do nothing. But in the specific case of f[a], it applies, and gives the result f[b].

If our rule is f[x_] → s then this will apply as an ordinary substitution to f[a], giving the result s. But if the rule is f[b] → s this will not apply as an ordinary substitution to f[a]. However, it can apply as a cosubstitution to f[x_] by picking out the specific case where x_ stands for b, then using the rule to give s.

In general, the point is that ordinary substitution specializes patterns that appear in rules—while what one can think of as the “dual operation” of cosubstitution specializes patterns that appear in the expressions to which the rules are being applied. If one thinks of the rule that’s being applied as like an operator, and the expression to which the rule is being applied as an operand, then in effect substitution is about making the operator fit the operand, and cosubstitution is about making the operand fit the operator.

It’s important to realize that as soon as one’s operating on expressions involving patterns, cosubstitution is not something “optional”: it’s something that one has to include if one is really going to interpret patterns—wherever they occur—as standing for classes of expressions.

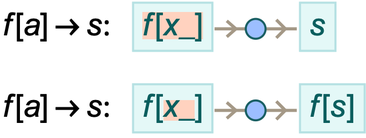

When one’s operating on a literal expression (without patterns) only substitution is ever possible, as in

|

|

corresponding to this fragment of a token-event graph:

|

Let’s say we have the rule f[a] → s (where f[a] is a literal expression). Operating on f[b] this rule will do nothing. But what if we apply the rule to f[x_]? Ordinary substitution still does nothing. But cosubstitution can do something. In fact, there are two different cosubstitutions that can be done in this case:

|

What’s going on here? In the first case, f[x_] has the “special case” f[a], to which the rule applies (“by cosubstitution”)—giving the result s. In the second case, however, it’s ![]() on its own which has the special case f[a], that gets transformed by the rule to s, giving the final cosubstitution result f[s].

There’s an additional wrinkle when the same pattern (such as ![]()) appears multiple times:

|

In all cases, x_ is matched to a. But which of the x_’s is actually replaced is different in each case.

Here’s a slightly more complicated example:

|

In ordinary substitution, replacements for patterns are in effect always made “locally”, with each specific pattern separately being replaced by some expression. But in cosubstitution, a “special case” found for a pattern will get used throughout when the replacement is done.
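The difference between the two operations can be sketched in a few lines of Python. The sketch assumes expressions are nested tuples such as `("f", "a")` for f[a], that pattern variables are strings ending in `_`, and that the rule's left-hand side is treated as a literal when doing cosubstitution. All function names are hypothetical, and this is an illustration of the idea, not the implementation used to produce the results shown here.

```python
def is_var(t):
    return isinstance(t, str) and t.endswith("_")

def match(pattern, target, env=None):
    """Bind variables in pattern so that it equals target; None if impossible."""
    env = dict(env or {})
    if is_var(pattern):
        if pattern in env:
            return env if env[pattern] == target else None
        env[pattern] = target
        return env
    if isinstance(pattern, str) or isinstance(target, str):
        return env if pattern == target else None
    if len(pattern) != len(target):
        return None
    for p, t in zip(pattern, target):
        env = match(p, t, env)
        if env is None:
            return None
    return env

def subst(t, env):
    """Replace bound variables everywhere in t."""
    if isinstance(t, str):
        return env.get(t, t)
    return tuple(subst(x, env) for x in t)

def positions(t, path=()):
    """Every subexpression of t with its position (heads are skipped)."""
    yield path, t
    if not isinstance(t, str):
        for i in range(1, len(t)):
            yield from positions(t[i], path + (i,))

def replace_at(t, path, new):
    if not path:
        return new
    i = path[0]
    return t[:i] + (replace_at(t[i], path[1:], new),) + t[i + 1:]

def substitution(rule, expr):
    """Specialize the rule's pattern to fit a subexpression of expr."""
    lhs, rhs = rule
    out = []
    for path, sub in positions(expr):
        env = match(lhs, sub)
        if env is not None:
            out.append(replace_at(expr, path, subst(rhs, env)))
    return out

def cosubstitution(rule, expr):
    """Specialize patterns in expr (throughout) so a subexpression fits the rule."""
    lhs, rhs = rule
    out = []
    for path, sub in positions(expr):
        env = match(sub, lhs)
        if env:                        # only cases that really specialize expr
            out.append(replace_at(subst(expr, env), path, rhs))
    return out

rule = (("f", "a"), "s")                          # f[a] -> s
by_subst = substitution(rule, ("f", "x_"))        # nothing applies
by_cosubst = cosubstitution(rule, ("f", "x_"))    # two results: s and f[s]
repeated = cosubstitution(("a", "b"), ("g", "x_", "x_"))
```

For f[a] → s applied to f[x_], the sketch gives no substitutions and the two cosubstitution results s and f[s]. For a → b applied to the hypothetical expression g[x_, x_] it gives g[b, a] and g[a, b]: x_ is specialized to a throughout, but a different occurrence is rewritten in each case.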

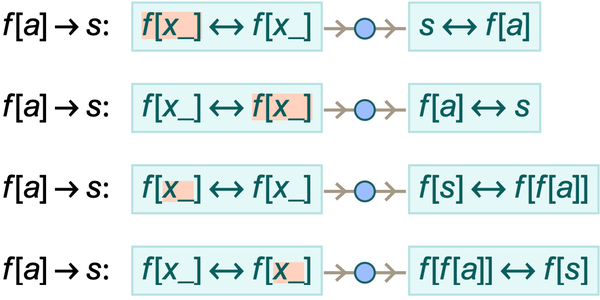

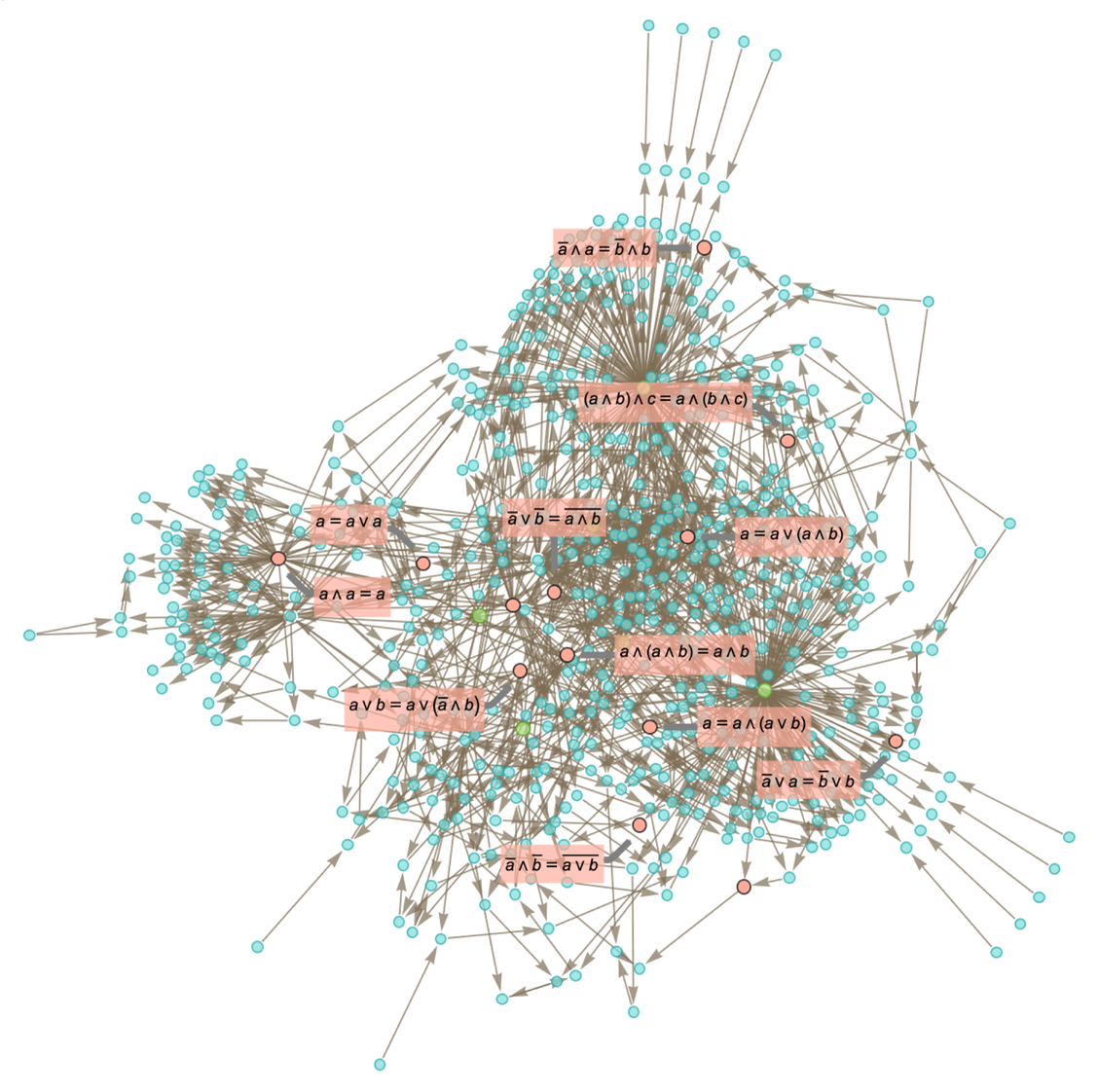

Let’s see how this all works in an accumulative axiomatic system. Consider the very simple rule:

|

|

One step of substitution gives the token-event graph (where we’ve canonicalized the names of pattern variables to a_ and b_):

|

But one step of cosubstitution gives instead:

|

Here are the individual transformations that were made (with the rule at least nominally being applied only in one direction):

|

The token-event graph above is then obtained by canonicalizing variables, and combining identical expressions (though for clarity we don’t merge rules of the form ![]() and ![]()).

If we go another step with this particular rule using only substitution, there are additional events (i.e. transformations) but no new theorems produced:

|

Cosubstitution, however, produces another 27 theorems

|

or altogether

|

or as trees:

|

We’ve now seen examples of both substitution and cosubstitution in action. But in our metamodel for mathematics we’re ultimately dealing not with each of these individually, but rather with the “symmetric” concept of bisubstitution, in which both substitution and cosubstitution can be mixed together, and applied even to parts of the same expression.

In the particular case of ![](), bisubstitution adds nothing beyond cosubstitution. But often it does. Consider the rule:

|

|

Here’s the result of applying this to three different expressions using substitution, cosubstitution and bisubstitution (where we consider only matches for “whole ∘ expressions”, not subparts):

|

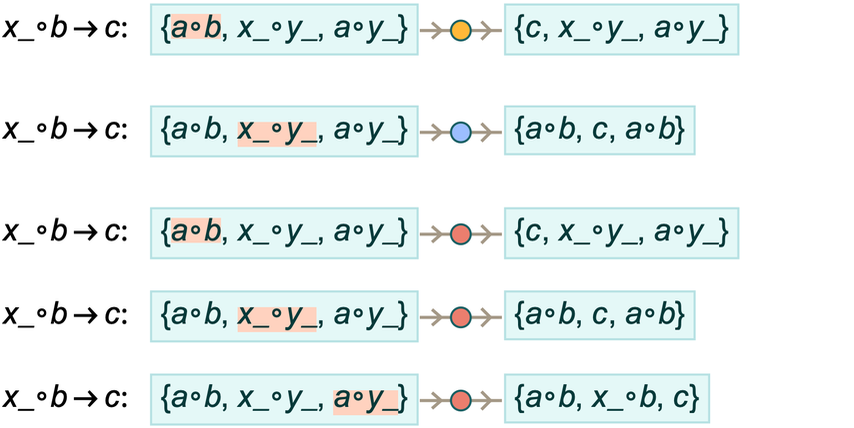

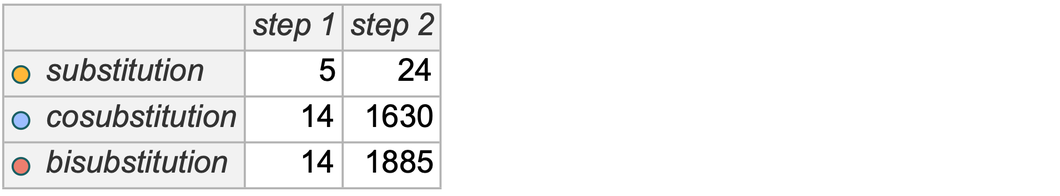

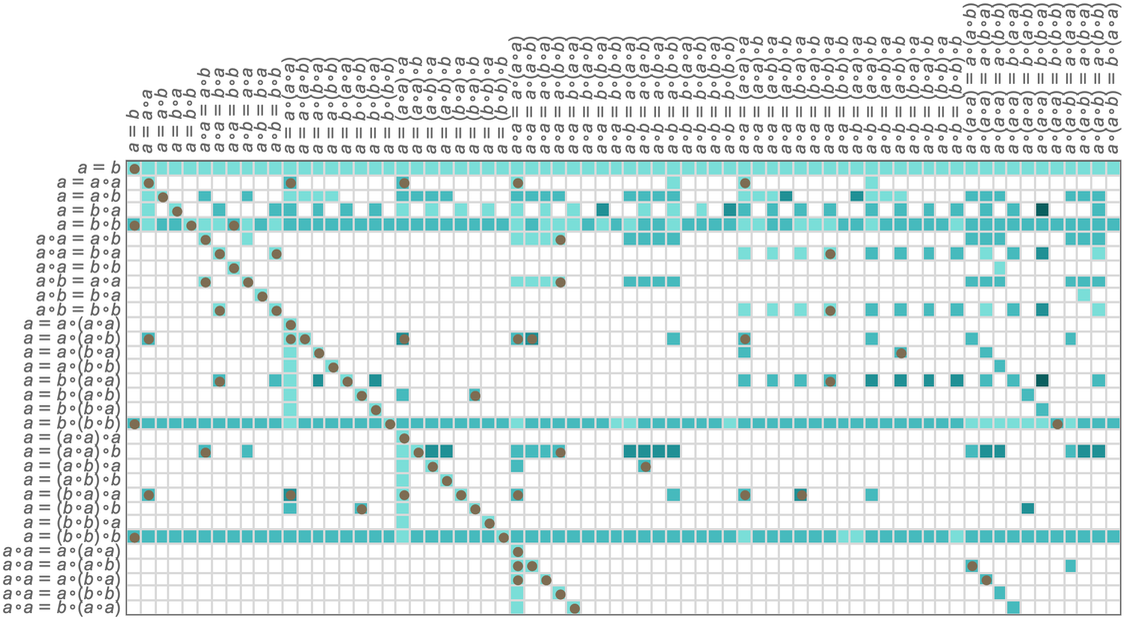

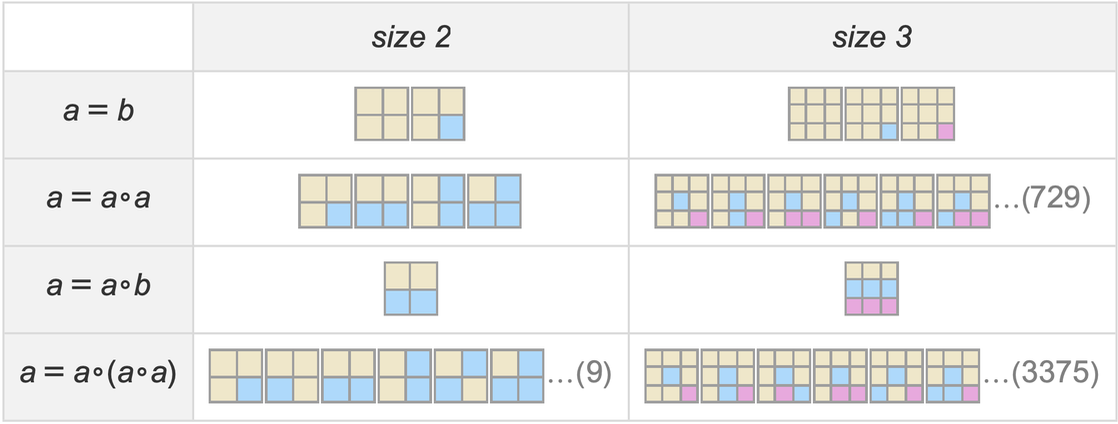

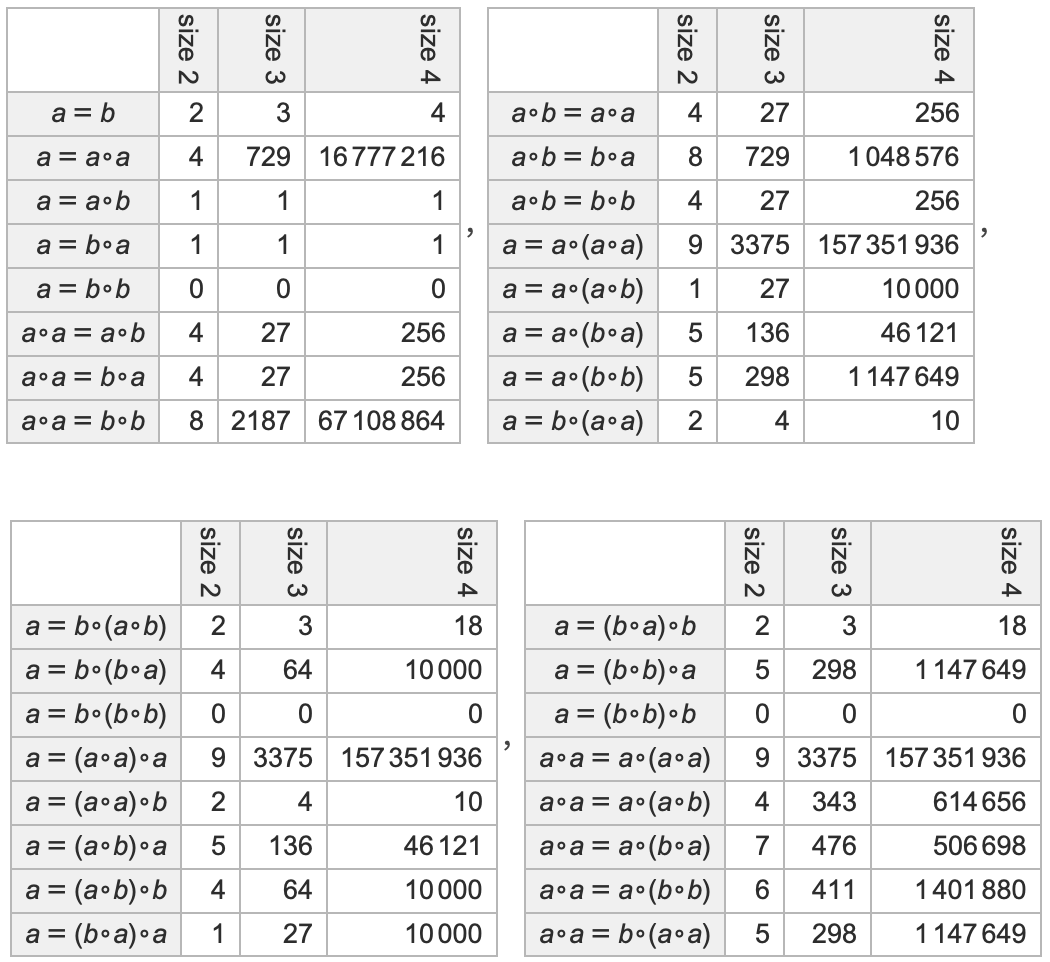

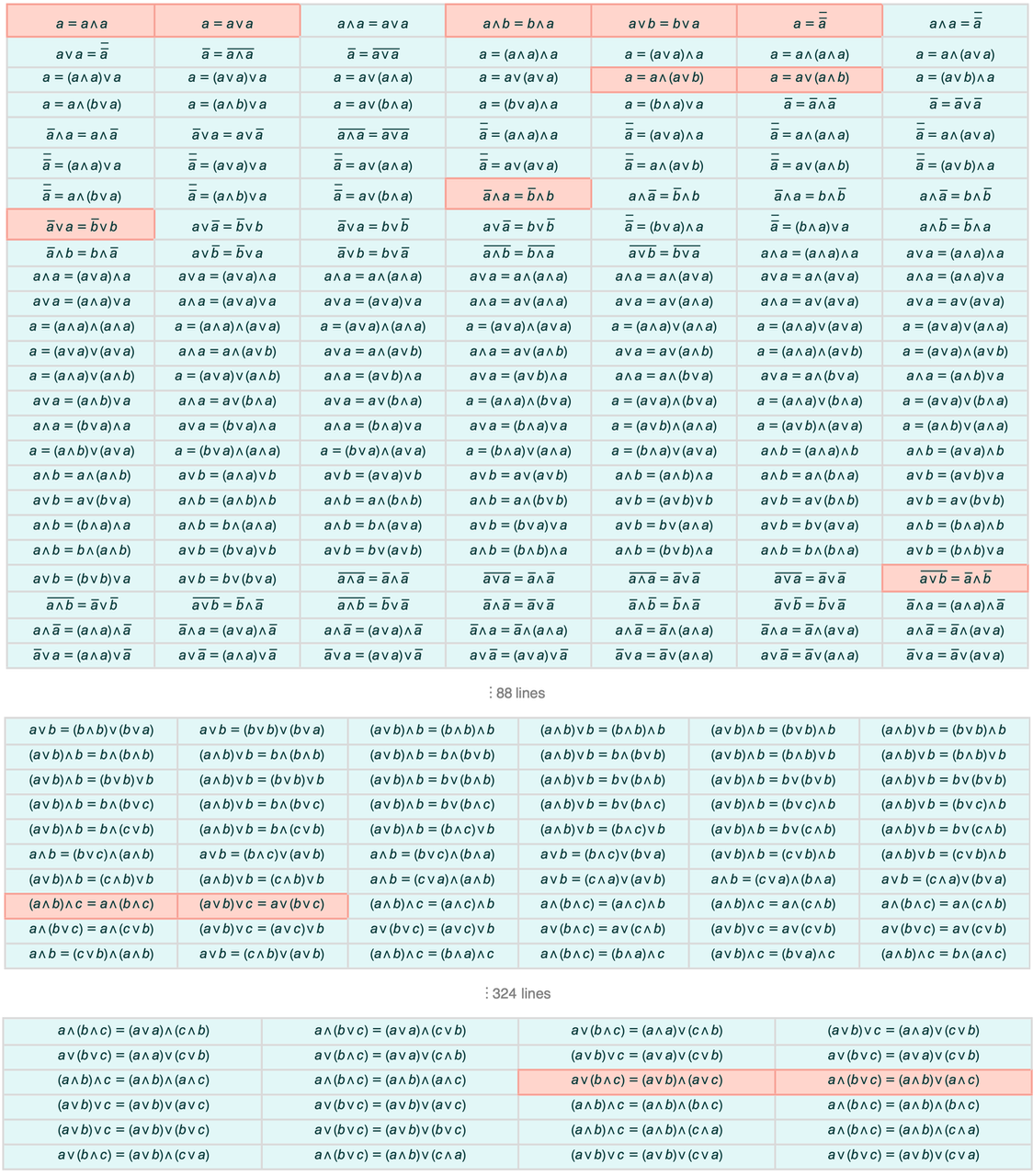

Cosubstitution very often yields substantially more transformations than substitution—bisubstitution then yielding modestly more than cosubstitution. For example, for the axiom system

|

|

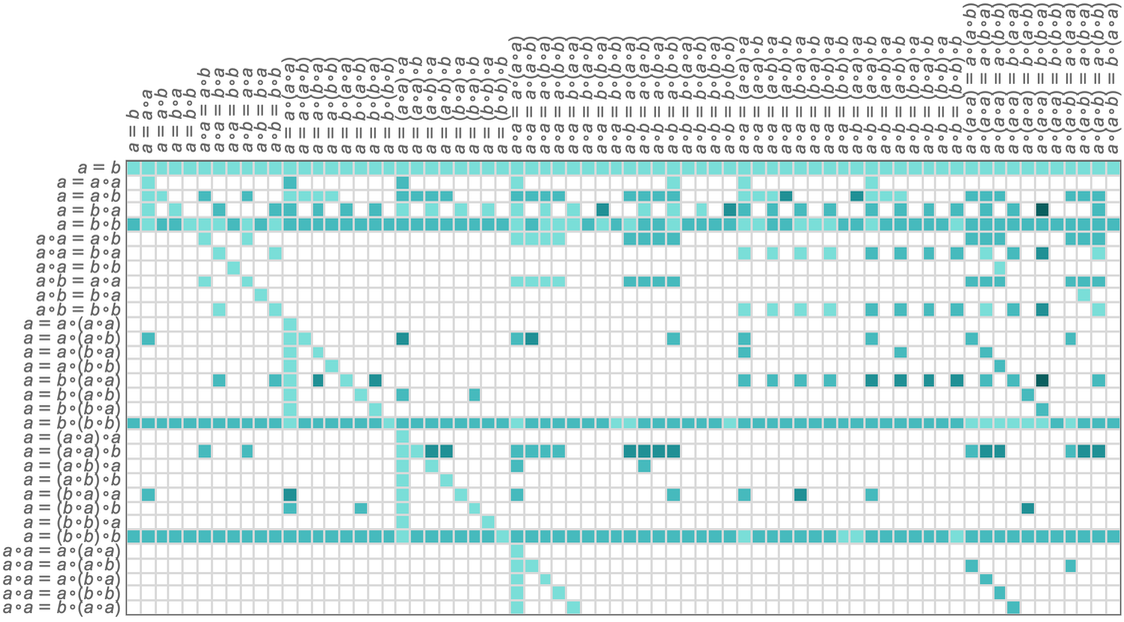

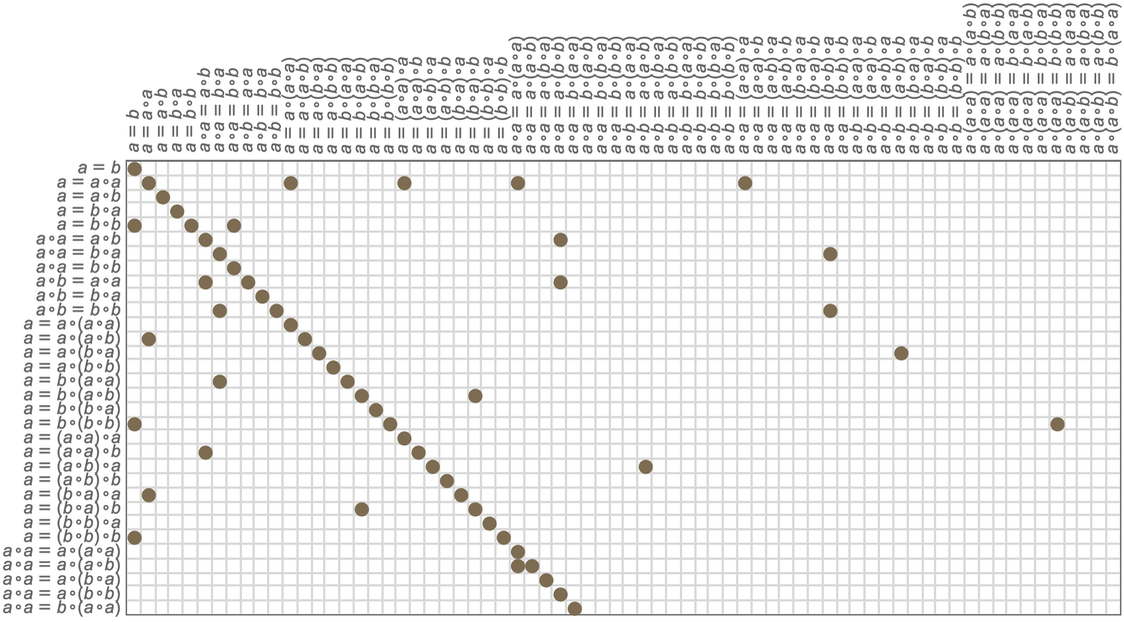

the number of theorems derived after 1 and 2 steps is given by:

|

In some cases there are theorems that can be produced by full bisubstitution, but not—even after any number of steps—by substitution or cosubstitution alone. However, it is also common to find that theorems can in principle be produced by substitution alone, but that this just takes more steps (and sometimes vastly more) than when full bisubstitution is used. (It’s worth noting, however, that the notion of “how many steps” it takes to “reach” a given theorem depends on the foliation one chooses to use in the token-event graph.)

The various forms of substitution that we’ve discussed here represent different ways in which one theorem can entail others. But our overall metamodel of mathematics—based as it is purely on the structure of symbolic expressions and patterns—implies that bisubstitution covers all entailments that are possible.

In the history of metamathematics and mathematical logic, a whole variety of “laws of inference” or “methods of entailment” have been considered. But with the modern view of symbolic expressions and patterns (as used, for example, in the Wolfram Language), bisubstitution emerges as the fundamental form of entailment, with other forms of entailment corresponding to the use of particular types of expressions or the addition of further elements to the pure substitutions we’ve used here.

It should be noted, however, that when it comes to the ruliad different kinds of entailments correspond merely to different foliations—with the form of entailment that we’re using representing just a particularly straightforward case.

The concept of bisubstitution has arisen in the theory of term rewriting, as well as in automated theorem proving (where it is often viewed as a particular “strategy”, and called “paramodulation”). In term rewriting, bisubstitution is closely related to the concept of unification—which essentially asks what assignment of values to pattern variables is needed in order to make different subterms of an expression be identical.
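As a rough illustration of unification, here is a minimal Python sketch of the standard syntactic algorithm with an occurs check. It assumes expressions are nested tuples like `("f", "x_", "b")` for f[x_, b], with strings ending in `_` as pattern variables. These conventions and the function names are assumptions of the sketch, not something fixed by the discussion above.

```python
def is_var(t):
    return isinstance(t, str) and t.endswith("_")

def walk(t, env):
    """Follow variable bindings until reaching an unbound variable or a term."""
    while is_var(t) and t in env:
        t = env[t]
    return t

def occurs(v, t, env):
    """True if variable v appears inside t (which would make a binding circular)."""
    t = walk(t, env)
    if t == v:
        return True
    if isinstance(t, tuple):
        return any(occurs(v, x, env) for x in t[1:])
    return False

def unify(a, b, env=None):
    """Return bindings that make a and b identical, or None if there are none."""
    env = {} if env is None else env
    a, b = walk(a, env), walk(b, env)
    if a == b:
        return env
    if is_var(a):
        return None if occurs(a, b, env) else {**env, a: b}
    if is_var(b):
        return None if occurs(b, a, env) else {**env, b: a}
    if (isinstance(a, tuple) and isinstance(b, tuple)
            and len(a) == len(b) and a[0] == b[0]):
        for x, y in zip(a[1:], b[1:]):
            env = unify(x, y, env)
            if env is None:
                return None
        return env
    return None

def resolve(t, env):
    """Apply the bindings in env throughout t."""
    t = walk(t, env)
    if isinstance(t, tuple):
        return (t[0],) + tuple(resolve(x, env) for x in t[1:])
    return t

env = unify(("f", "x_", "b"), ("f", "a", "y_"))
common = resolve(("f", "x_", "y_"), env)
```

Unlike one-way matching, unification is free to bind variables on both sides, which is what allows substitution and cosubstitution to be mixed within a single step. In the example, f[x_, b] and f[a, y_] unify with x_ bound to a and y_ bound to b.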