Henry Frankenstein: Look! It’s moving. It’s alive. It’s alive… It’s alive, it’s moving, it’s alive, it’s alive, it’s alive, it’s alive, IT’S ALIVE!

Victor Moritz: Henry, in the name of God!

Henry Frankenstein: Oh, in the name of God! Now I know what it feels like to be God!

Frankenstein (1931)

Introduction

In 1915, after several failed attempts, Albert Einstein promulgated the General Theory of Relativity, a mathematical theory of gravitation that reconciled gravity with his Special Theory of Relativity and explained the gravitational force as the warping of space and time by matter and energy. Among other things, the theory predicted a slightly different deflection of light by bodies such as the Sun than the prevailing Newtonian theory of gravitation. In 1919 Einstein became a world celebrity when the English astronomer Arthur Eddington announced results from the measurement of the deflection of starlight by the Sun during a solar eclipse confirming Einstein’s theory. But the story of Einstein’s triumph is more complicated than that. In 1917 Einstein patched his theory to agree with the observations and the prejudices of his time. He later discarded the patch when new observations seemed to confirm his original theory of 1915. Recently, in an astonishing about face, physicists and astronomers have resurrected Einstein’s until then embarrassing patch to force agreement between new observations and the reigning Big Bang theory/General Theory of Relativity. This is a recent example of the construction of Frankenstein Functions, in which scientists and mathematicians construct arbitrary functions out of many mathematical pieces to match observational data or simply preconceived notions.

Einstein Field Equations (General Relativity)

The General Theory of Relativity is a set of equations relating the Einstein tensor [tex] G_{\mu\nu} [/tex], which is built from the so-called metric of space-time and is loosely a measure of the curvature of space-time, to the density of mass and energy. The equations are variously expressed in modern mathematical notation as:

Short version (using Einstein tensor [tex] G_{\mu\nu} [/tex])

[tex] G_{\mu\nu} = \frac{8\pi G}{c^4}\,T_{\mu\nu} [/tex]

[tex] G_{\mu\nu} [/tex] is the Einstein tensor, constructed from the so-called metric of space and time [tex] g_{\mu\nu} [/tex]. [tex] G [/tex] is Newton’s Gravitational Constant. [tex] c [/tex] is the speed of light. [tex] T_{\mu\nu} [/tex] is the so-called stress-energy tensor, which loosely represents the density of mass and energy in space and time. The indices [tex] \mu [/tex] and [tex] \nu [/tex] usually run from 0 to 3 (0,1,2,3) or 1 to 4 (1,2,3,4) or over the symbols [tex] x [/tex], [tex] y [/tex], [tex] z [/tex], and [tex] t [/tex] referring to the three spatial dimensions and the single time “dimension.” The author uses scare quotes for the time “dimension” because it differs radically from the three spatial dimensions in common experience, although it can be represented in a very similar symbolic mathematical way as the numerical calendar time indicated by a clock.

Using standard cosmological units with [tex] G = c = 1 [/tex], the equations are written:

[tex] G_{\mu\nu} = 8\pi\,T_{\mu\nu} [/tex]

The long version (using the Ricci curvature tensor [tex] R_{\mu\nu} [/tex] and the scalar curvature [tex] R = Tr(R_{\mu\nu}) [/tex]) is:

[tex] R_{\mu\nu} - \frac{1}{2}\,R\,g_{\mu\nu} = 8\pi\,T_{\mu\nu} [/tex]

or

[tex] T_{\mu\nu} - \frac{1}{2}\,T\,g_{\mu\nu} = \frac{1}{8\pi}\,R_{\mu\nu} [/tex]

There is also the “symmetric” decomposition, into the scalar part:

[tex] R = -\,8\pi\,T [/tex]

and the traceless symmetric tensor part:

[tex] R_{\mu\nu} - \frac{1}{4}\,R\,g_{\mu\nu} = 8\pi\left(T_{\mu\nu} - \frac{1}{4}\,T\,g_{\mu\nu}\right) [/tex]

The Greatest Blunder

There was, however, a problem. When astronomers, physicists, and mathematicians worked out the implications of the equations of General Relativity, they found that the universe as a whole must be either expanding or contracting. The universe was not stable in the equations of General Relativity. At the time, both the evidence of observational astronomy and the philosophical bias of most scientists indicated that the universe was neither expanding nor contracting. The universe was static, perhaps of infinite age. Faced with evidence apparently clearly falsifying his theory, Einstein did what scientists, philosophers, scholars, lawyers, and political activists have done since time immemorial. He patched his theory to fit the observations and prejudices of his time. Einstein added a mysterious extra term called the “cosmological constant” that counterbalanced the predicted expansion or contraction of the universe:

[tex] R_{\mu\nu} - \frac{1}{2}\,R\,g_{\mu\nu} - \lambda g_{\mu\nu} = 8\pi\,T_{\mu\nu} [/tex]

where the extra term is [tex] -\lambda g_{\mu\nu} [/tex] and the constant [tex] \lambda [/tex] is known as the cosmological constant. Physically, the cosmological constant may correspond to a mysterious energy field filling the entire universe. With a proper choice of the cosmological constant [tex] \lambda [/tex], the universe was static, neither expanding nor contracting.

Subsequently, the redshifts of extragalactic nebulae were reinterpreted as due to the motion of the nebulae. That is, the nebulae, recognized as galaxies outside our own galaxy, the Milky Way, were flying away from us, causing a redshift of their light. Over time, the astronomer Edwin Hubble was able to show that the dimmer the galaxy, and therefore presumably the farther away (at least on average), the larger the redshift and thus the faster the galaxy appeared to be running away from the Earth. This rather peculiar observation could be easily explained if the universe was expanding as predicted by the original General Theory of Relativity without the cosmological term (the cosmological constant was either zero or nearly zero).

As might be imagined, Einstein did what scientists, philosophers, scholars, lawyers, and political activists have done since time immemorial when confronted by evidence apparently clearly falsifying their current theory but confirming the original un-patched theory. He dropped the cosmological term like a hot potato. In his autobiography, the physicist George Gamow recounted an alleged conversation with Einstein in which Einstein described the cosmological constant as the “biggest blunder” of his life. Whether true or not, this quotation was widely repeated in popular physics articles, textbooks, informal conversations by physicists, and so forth until the late 1990’s, when observations by the Hubble Space Telescope were made that were apparently inconsistent with the prevailing Big Bang theory of cosmology. However, one could save the Big Bang theory by reintroducing the cosmological term with a non-zero cosmological constant, not strong enough to prevent the expansion of the universe but sufficient to cause an acceleration that would resolve the otherwise falsifying observations. The non-zero cosmological constant was attributed to a mysterious “dark energy” filling the universe, perhaps due to an as yet undiscovered subatomic particle/field predicted by a unified field theory or theory of everything (TOE).

The predicted expansion of the universe in the General Theory of Relativity became a theory of the origin and evolution of the universe called the Big Bang theory. In general, the different forms of the Big Bang theory envision the universe beginning as a point, a “singularity” in the General Theory of Relativity, and expanding, exploding into the universe that we see today. The Big Bang is thought to have occurred 14-25 billion years ago. The exact time has varied over the last century with different observations and theoretical calculations.

Up until the Hubble Space Telescope observations, the story of Einstein’s “biggest blunder” was widely recounted as a morality tale of the superiority of rational scientific thought over fluffy philosophy or blind prejudice. If only Einstein had stuck to his original “rational” theory rather than being influenced by fluffy (not to mention wimpy) unscientific philosophical or sociological concerns (agreeing with everybody else), all would have been well. Thus, the “biggest blunder” became a tale about the primacy of science over other “ways of knowing,” to use an appropriately fluffy New Age cliche.

The Big Bang theory and modern cosmology have a curious history. There have been repeated observations that appear to falsify the theory or major components of the theory such as the theory of gravitation, whether Einstein’s or Newton’s. This has led to the introduction of several new concepts and components expressed in symbolic mathematics, like the cosmological term. These include “inflation” to account for the puzzling uniformity of the universe in certain measurements (for example, the cosmic microwave background radiation is extremely smooth, which is difficult to explain in many versions of the Big Bang theory) and several kinds of as yet undetected “dark matter” to account for gross anomalies in the measured angular momentum distribution of galaxies, clusters of galaxies, and so forth. There is an entire industry seeking to detect the mysterious particles that comprise the as yet hypothetical “dark matter” and “dark energy.”

The saga of the cosmological term and the other patches to the General Theory of Relativity and the Big Bang theory illustrates a deep mathematical problem that has bedeviled science and human society at least since the ancient Greeks (and probably the Babylonians or Sumerians as well) constructed detailed mathematical models of the motion of the planets. It is a problem that appears both in symbolic mathematics, in the effort to construct predictive symbolic mathematical theories of the world, and in conceptual, verbal reasoning and discourse. It seems likely that the human mind, unlike present-day symbolic mathematics or computer programs, has a limited, imperfect ability to solve this problem.

What is the problem? Given a set of observations (the positions and motions of the planets, the waveform of speech, the time and intensity of earthquakes, the values of stock prices, blood pressure, any quantitative measurement), it is possible to choose many different sets of building block functions that can be combined (added, multiplied, etc.) to match (or “fit”) the observations as accurately as desired (simply add more building block functions). One can construct not one, but many, in fact an infinite number, of “Frankenstein Functions” that match the data. While these sets of building block functions can be selected to match the observational data, the “training” set in artificial intelligence terminology, they often will fail to predict new observations. It is often necessary to add new building block functions to save or patch the theory as new observations are made. However, if the building block functions share some characteristics in common with the unknown “true” theory or mathematics, then the theory may give somewhat correct predictions but still be wrong or incomplete.

At a conceptual, verbal level, it usually proves possible to devise a plausible, technically sophisticated concept to explain away the apparently falsifying observations and to justify the “patch” expressed in purely symbolic mathematical terms. For example, unified field theories or theories of everything (TOE) usually predict new particles that are otherwise unknown. These new, unknown particles might in turn provide the dark matter or dark energy needed to explain the other observations. Certain commonalities among known particles and forces suggest an underlying unity.

The ancient Greeks constructed (or inherited from the even older Babylonian civilization) a mathematical theory of the universe, basically our modern solar system, in which the planets orbited the Earth, a sphere about eight thousand miles in diameter. However, from the very beginning, this theory had a significant problem. Certain planets, notably Mars, actually backed up during their journey through the Zodiac. This was grossly inconsistent with a simple orbit. Hence, the Greeks (and possibly the Babylonians before them) introduced the now infamous epicycles. The planets, envisioned as the Gods themselves, executed a complex dance in which they performed a circular motion around the simple circular orbit around the Earth. This could produce a period when the outer planets (Mars, Jupiter, and Saturn) would appear to back up in the Zodiac, exactly as observed. Yet the theory never quite worked. Over the centuries, ultimately almost two millennia, astronomers and astrologers (basically the same thing) added more and more epicycles to create the “Ptolemaic” theory that existed at the time of Copernicus, Galileo, and Kepler.

The Ptolemaic theory had hundreds of epicycles, epicycles on top of epicycles on top of epicycles. It was very complex and required extensive time and effort to make predictions using the pen, paper, and printed mathematical tables of the time. There were no computers. It could predict the motion of Mars to around one percent accuracy. This was actually much better than the original heliocentric theory proposed by Copernicus. In fact, Copernicus also used epicycles. A hard headed comparison of the geocentric and heliocentric theories based solely on quantitative goodness of fit measures would have selected the traditional geocentric Ptolemaic theory. It was not until at least 1609, when Kepler published his discovery of the elliptical orbits, and possibly even later (Kepler made mistakes), that the heliocentric theories clearly outperformed the Ptolemaic theory.

The orbits of the planets around the Sun are almost periodic. The motion of the planets as seen from Earth is quasi-periodic. Thus, if one uses periodic functions such as the uniform circular motion of the Ptolemaic epicycles, one can reproduce much of the observed motion of the planets. The Ptolemaic models had some predictive power.

The lesson of Copernicus, Galileo, and Kepler, as well as subsequent successes in science, seemed to be to prefer “simple” theories with few building block functions, few terms in the mathematical expressions, and so forth. This led Einstein to select his original General Theory of Relativity as the simplest or one of the simplest sets of differential equations consistent with the Special Theory of Relativity as well as known observations (the theory had to largely reproduce Newton’s theory of gravitation). For many years, popular science and popular physics accounts such as the “biggest blunder” stories embraced this preference for “simplicity” under the banner of Occam’s Razor as the obviously scientific, rational way to do things. It is actually difficult to justify this preference. Today, however, the popular science orthodoxy has changed as otherwise falsifying observations have accumulated. For example,

But Einstein did not exclude terms with higher derivatives for this or for any other practical reason, but for an aesthetic reason: They were not needed, so why include them? And it was just this aesthetic judgment that led him to regret that he had ever introduced the cosmological constant.

Since Einstein’s time, we have learned to distrust this sort of aesthetic criterion. Our experience in elementary particle physics has taught us that any term in the field equations of physics that is allowed by fundamental principles is likely to be there in the equations.

(…several paragraphs of explication in a similar vein…)

Regarding his introduction of the cosmological constant in 1917, Einstein’s real mistake was that he thought it was a mistake.

Steven Weinberg (Nobel Prize in Physics, 1979)

“Einstein’s Mistakes”

Physics Today, November 2005, pp. 31-35

Incidentally, Professor Weinberg’s article has the ironic subtitle “Science sets itself apart from other paths to truth by recognizing that even its greatest practitioners sometimes err.” This is probably a veiled jab at traditional religion. It is probably doubly ironic in that some forms of traditional religion clearly recognize the fallibility of their prophets. For example, in his letters to his fellow feuding Christians of the first century, the Apostle Paul makes a clear distinction between his personal opinions, which he considers fallible, and divine revelation.

With respect to Einstein’s aesthetic judgment, essentially any continuous function can be approximated to arbitrary accuracy on a bounded interval by a polynomial of sufficiently high degree, or indeed by any of an infinite number of compositions of arbitrarily chosen building block functions. A polynomial is a weighted sum of powers of [tex] x [/tex]. For example,

[tex] p_2(x) = a + b x + c x^2 [/tex]

or

[tex] p_3(x) = a + b x + c x^2 + d x^3 [/tex]

In general,

[tex] p_n(x) = a_0 + a_1 x + a_2 x^2 + \cdots + a_n x^n [/tex]
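The approximation claim above has a precise form, usually credited to Weierstrass. As a hedged restatement (the symbols [tex] f [/tex], [tex] a [/tex], [tex] b [/tex], and [tex] \epsilon [/tex] are notation introduced here for illustration, not taken from the article): for any function [tex] f [/tex] that is continuous on a bounded interval [tex] [a, b] [/tex] and any tolerance [tex] \epsilon > 0 [/tex], there is some degree [tex] n [/tex] and some choice of coefficients [tex] a_0, \ldots, a_n [/tex] such that

[tex] \max_{a \le x \le b} \left| f(x) - p_n(x) \right| < \epsilon [/tex]

The guarantee covers only the interval [tex] [a, b] [/tex]. It says nothing about how [tex] p_n(x) [/tex] behaves outside that interval.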

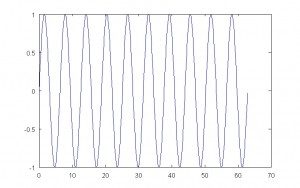

What does this mean? Let us consider, for example, an arbitrary function such as the trigonometric sine function [tex] \sin(x) [/tex]. Here is the sine function plotted by Octave, a free Matlab compatible numerical programming environment:

    x = (0.0 : 0.1 : 20*pi)';
    y = sin(x);
    plot(x, y, '-');

Sine Function

Octave, like many similar tools such as Mathematica, has a built-in function, polyfit in this case, to fit a polynomial to data:

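    function [] = plot_sinfit(x, y, n, m)
    % plot_sinfit(x, y, n, m) fits a polynomial of degree n to the data (x, y)
    % in the range 0.0 to 2*pi and plots the fit over m cycles (default 3).
    %
    if nargin > 3
      span = m;
    else
      span = 3;
    end

    % Training set: the first 63 samples (x = 0.0 to 6.2 for a step of 0.1).
    myx = x(1:63);
    myy = y(1:63);

    % Fit to the training subset only, not to the full data set.
    p = polyfit(myx, myy, n)

    % Evaluate the fitted polynomial over the wider display range.
    x = (0 : 0.1 : span*2*pi)';
    y = sin(x);
    f = polyval(p, x);

    plot(x, y, 'o', x, f, '-')
    axis([0 span*2*pi -1 1])
    end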

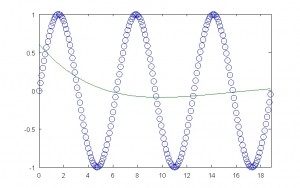

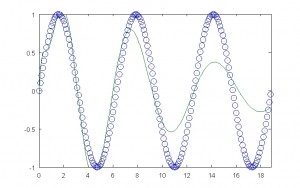

A sixth degree polynomial is fitted to the data in the range 0.0 to 6.28 ([tex] 2\pi [/tex]), the “training” set, but the fitted function is displayed in the range 0.0 to 18.85 ([tex] 3 \cdot 2\pi [/tex]). This gives:

Polynomial Fit with 6 Terms

One can see the agreement with six terms is poor. However, one can always add more terms:

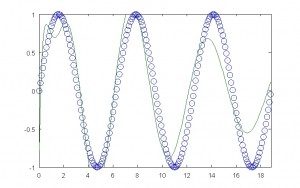

Polynomial Fit with 12 Terms

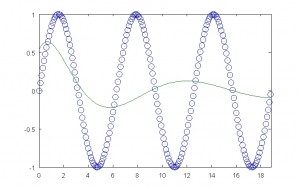

The agreement is somewhat better but still poor. One can still add more terms:

Polynomial Fit with 24 Terms

Now the fit is getting better, but there is still room for improvement. Both examination by eye and a rigorous goodness of fit test would show that the mathematical model and the observational data disagree. One can still add more terms:

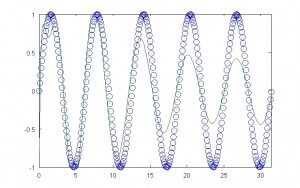

Polynomial Fit with 48 Terms

The agreement is even better, although not perfect. One can see the disagreement by viewing a larger range of data (recall that the model is fitted to the range 0.0 to 6.28 only):

Polynomial Fit with 48 Terms (5 Cycles)

As one moves farther away from the region used for the fit (0.0 to 6.28), the training set in the language of artificial intelligence, the agreement will generally worsen. However, over any fixed, finite range one can make the agreement as good as one wants by adding more and more terms, more and more powers of [tex] x [/tex]. It is important to realize that a series of powers of [tex] x [/tex] can never really work. It will never predict the future behavior of the data. The data in this illustrative example is periodic. In contrast, powers of [tex] x [/tex] grow without bound. Eventually, as [tex] x [/tex] grows without bound, the largest power of [tex] x [/tex] will dominate the mathematical model of the data and the model will blow up, growing without bound and failing at some point to agree with the observations, the data.
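The contrast between agreement inside the fitted range and divergence outside it can be checked numerically. The following is a minimal Octave sketch, not the code used to produce the plots above. The degrees 3, 6, and 9 and the variable names are assumptions chosen for illustration. It fits each polynomial to [tex] \sin(x) [/tex] on the range 0.0 to 6.28 and reports the largest error both inside that range and on the next two cycles.

```octave
% Fit polynomials of increasing degree to sin(x) on [0, 2*pi] and compare
% the largest error inside the fitted range with the error outside it.
x_train = (0.0 : 0.1 : 2*pi)';       % "training" range used for the fit
y_train = sin(x_train);
x_test = (2*pi : 0.1 : 3*2*pi)';     % new data beyond the fitted range
y_test = sin(x_test);

degrees = [3 6 9];                   % hypothetical choices for illustration
err_train = zeros(size(degrees));
err_test = zeros(size(degrees));
for k = 1:numel(degrees)
  p = polyfit(x_train, y_train, degrees(k));
  err_train(k) = max(abs(polyval(p, x_train) - y_train));
  err_test(k) = max(abs(polyval(p, x_test) - y_test));
  fprintf('degree %d: max error in range %.3g, outside range %.3g\n', ...
          degrees(k), err_train(k), err_test(k));
end
```

For these low degrees the error inside the fitted range should shrink as terms are added, while the error on the new data should remain far larger.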

If one used a mathematical model built from periodic functions other than the sine, one could patch together a “Frankenstein Function” that would have some predictive power and share some of the gross characteristics of the actual data. This is what happened with the Ptolemaic epicycles centuries ago. In fact, one can construct a Frankenstein Function out of randomly chosen functions, Gaussians, polynomials, trig functions, pieces of other functions, and so forth that will agree with observational data to any desired level of agreement.
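As a rough illustration of a periodic “Frankenstein Function,” the sketch below fits [tex] \sin(x) [/tex] with a handful of triangle waves instead of powers of [tex] x [/tex]. The triangle-wave building block `tri`, the choice of two harmonics with two phase shifts each, and all variable names are assumptions made for this example, not anything taken from the Ptolemaic models. The weights are found by ordinary least squares using Octave’s left-division operator.

```octave
% Fit sin(x) on [0, 2*pi] with triangle waves instead of polynomials.
% Assumption: the triangle-wave building blocks and the harmonics chosen
% here are hypothetical choices for illustration.
tri = @(x) 2 * abs(2 * (x / (2*pi) - floor(x / (2*pi) + 0.5))) - 1;
basis = @(x) [tri(x), tri(x + pi/2), tri(3*x), tri(3*x + pi/2)];

x_train = (0.0 : 0.1 : 2*pi)';       % fitted ("training") range
x_test = (2*pi : 0.1 : 5*2*pi)';     % four further cycles of new data

c = basis(x_train) \ sin(x_train);   % least-squares weights
err_train = max(abs(basis(x_train) * c - sin(x_train)));
err_test = max(abs(basis(x_test) * c - sin(x_test)));
fprintf('max error in range %.3g, outside range %.3g\n', err_train, err_test);
```

Because every building block repeats with the same period as the data, the fitted model cannot blow up outside the fitted range. It remains an imperfect match to the sine, but its error on new cycles should stay comparable to its error on the training range.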

Many methods in pattern recognition and artificial intelligence, such as the Hidden Markov Model (HMM) speech recognition engines and artificial neural networks, are attempts to construct extremely complex mathematical models composed of, in some cases, hundreds of thousands of building block functions, to replicate the ability of human beings to classify sounds or images or other kinds of data. In these attempts, many of the same problems that have occurred in mathematical models such as the Ptolemaic model of the solar system have recurred. In particular, it has been found that neural networks and similar models can often exactly agree with a training set. In fact, this seeming agreement is often bad. The training or fitting process is often intentionally stopped before completion because, while the mathematical model of classification will agree with the training set, it will often fail to classify new data such as a so-called “validation” data set. Even extremely complex models such as those used in speech recognition today continue to fail to reach the human level of performance, possibly for many of the same reasons the epicycles of the Ptolemaic theory failed.
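The idea of stopping before the model agrees perfectly with the training set can be sketched with the same polynomial fits. This is a simplified stand-in for what is done with neural networks, not a description of any particular system. The deterministic pseudo-noise term, the split into alternating samples, and the maximum degree of 15 are all assumptions chosen for illustration.

```octave
% Choose a polynomial degree using a held-out "validation" set.
% Assumption: deterministic pseudo-noise stands in for measurement error.
x = (0.0 : 0.1 : 2*pi)';
y = sin(x) + 0.1 * cos(37 * x);      % hypothetical noisy observations
u = x / pi - 1;                      % rescale to about [-1, 1] for stability

train = 1:2:numel(x);                % odd-numbered samples: training set
valid = 2:2:numel(x);                % even-numbered samples: validation set

max_degree = 15;
rms_train = zeros(1, max_degree);
rms_valid = zeros(1, max_degree);
for n = 1:max_degree
  p = polyfit(u(train), y(train), n);
  rms_train(n) = sqrt(mean((polyval(p, u(train)) - y(train)).^2));
  rms_valid(n) = sqrt(mean((polyval(p, u(valid)) - y(valid)).^2));
end

[~, best_degree] = min(rms_valid);   % stop adding terms at this degree
fprintf('degree with lowest validation error: %d\n', best_degree);
```

The training error can only go down as terms are added, so it cannot say when to stop. The validation error is the quantity to watch: once it stops improving, further terms are most likely fitting the noise rather than the underlying signal.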

Falsifiability and Occam’s Razor

These difficulties with mathematical modeling lead one directly to two pillars of popular and sometimes scholarly science: the doctrine of falsifiability, usually attributed to the philosopher of science Karl Popper, and Occam’s Razor. The doctrine of falsifiability holds that science proceeds by the falsification of theories by new evidence. In mathematical terms, one can compare the mathematical theory’s predictions with experimental data, apply a goodness of fit test, and conclusively rule out the theory. This is supposed to differentiate science decisively from fluffy philosophical, religious, mystical, and political “knowledge.” Science may not be able to tell us what is true, but it can tell us conclusively what is false. The doctrine of falsifiability is often touted in discussions of so-called pseudoscience, especially in the context of debates about the theory of evolution and “creation science,” or, more recently, so-called “intelligent design.” Usually, the argument is that falsifiability allows us to distinguish between true science, which should be taught in schools and generally accepted, and dubious pseudoscientific “knowledge.”

The problem with this, as the saga of the cosmological constant now clearly shows, is that theories can be patched and are frequently patched, sometimes obviously and sometimes more subtly. Adding more and more terms to a polynomial approximation is pretty obvious. The epicycles in the Ptolemaic theories were pretty obvious. Actually, add-ons such as the cosmological constant are pretty obvious. But quantum field theory and the various unified field theories/theories of everything such as superstrings are so complex and have such a long learning curve for most people that it is often difficult to evaluate what might be a patch (e.g. the concept of renormalization) and what might not be a “patch.”

At this point, Occam’s Razor is usually invoked. William of Occam was an English Franciscan friar and scholastic philosopher who lived from about 1288 to about 1348. He was involved in a range of theological and political conflicts during which he formulated his so-called Razor, quite possibly for political and theological reasons quite alien to the modern use (or misuse) of Occam’s Razor. In its modern form, Occam’s Razor is usually expressed as a need not to make ad hoc assumptions or to keep a theory as simple as possible while still agreeing with observations. Of course, it is hard to define an ad hoc assumption or simplicity in practice. In disputes about evolution and creation, Occam’s Razor is often used to attack creationist explanations of the radioactive (and other) dating of the Earth and fossils to millions or billions of years of age. This evidence of the great age of the Earth is by far the most difficult observational evidence for creationists to explain. In his criticism of the teaching of evolution, William Jennings Bryan, who was not a fundamentalist (biblical literalist) as many believe, simply accepted the great age of the Earth, as did many religious leaders of his time.

Here is Steven Weinberg again on the new revised Occam’s Razor:

Indeed, as far as we have been able to do the calculations, quantum fluctuations by themselves would produce an infinite effective cosmological constant, so that to cancel the infinity there would have to be an infinite “bare” cosmological constant of the opposite sign in the field equations themselves. Occam’s razor is a fine tool, but it should be applied to principles, not equations.

Of course, in what might be called the strong AI theory of symbolic mathematics, the symbols in the equations must correspond either directly or in some indirect but precise, rigorous way to “principles.” We just don’t understand the correspondence yet. Unless, that is, the strong AI theory of symbolic math is wrong and some concepts cannot be expressed in symbolic mathematical form.

Where does this leave us? We know rigorously that it is possible to construct many arbitrarily complex functions or differential equations that can be essentially forced to fit current observational data. How do we choose which ones are likely to be true? Human beings seem ultimately to apply some sort of judgment or intuition. Often they are wrong, but still they are right much more often than random chance would suggest. Historically, it seems that simplicity at both the level of verbal concepts and the level of precise symbolic mathematics has been a good criterion. We can’t really justify this experience “scientifically,” at least as yet.

Frankenstein Functions in the Computer Age

With modern computers, mathematical tools such as Mathematica, Matlab, Octave, and so forth, and modern mathematics with its myriad special functions, differential equations, and other exotica, it is now possible to construct Frankenstein Functions on a scale that dwarfs the Ptolemaic epicycles. Artificial intelligence methods such as Hidden Markov Model based speech recognition, genetic programming, artificial neural networks, and other methods in fact explicitly or implicitly incorporate mathematical models with, in some cases, hundreds of thousands of tunable parameters. These models can match training sets of data exactly and yet they fail significantly, sometimes completely, when confronted with new data.

In fundamental physics, the theories have grown increasingly complex. Even the full Lagrangian for the reigning standard model of particle physics (for which Steven Weinberg shared the Nobel Prize in 1979) is quite complex and features such ad hoc entities as the Higgs particle, still unobserved as of 2010. Attempts at grand unified theories or theories of everything are generally more complex and elaborate. The reigning Big Bang theory has grown increasingly baroque with the introduction of inflation, numerous kinds of dark matter, and now dark energy, the rebirth of the cosmological constant. Computers, mathematical software, advanced modern mathematics, and legions of graduate students and post doctoral research associates all combine to make it possible to construct extremely elaborate models far beyond the capacity of the Renaissance astronomers. The very complexity and long learning curve of the present day models may become a status symbol and protect the theories from meaningful criticism.

Conclusion

Aesop’s Fables include the humorous tale of The Astronomer, who spends all his time gazing up at the heavens in deep contemplation. He is so mesmerized by his star gazing that he falls into a well at his feet. The moral of the story is “My good man, while you are trying to pry into the mysteries of heaven, you overlook the common objects that are at your feet.” This may have a double meaning in the current age of Frankenstein Functions. On the one hand, scientists and engineers may well have become enamored of extremely complex models and forgotten the lesson of past experience that extreme complexity is often a warning sign of deep problems, the lesson that Einstein originally heeded. On the other hand, it raises the question of whether ordinary people, business leaders, policy makers, and others in the “real world” need be concerned about these complex mathematical models, usually implemented in computer software, and the difficulties associated with them.

Extremely complex mathematical models, some apparently successful, some probably less so, are increasingly a part of life. Complex models incorporating General Relativity are used by the Global Positioning System to provide precise navigation information, guiding everything from hikers to ships to deadly missiles. Widely quoted economic figures such as the unemployment rate and inflation rate are actually the product of increasingly complex mathematical models that bear a less than clear relationship to common sense definitions of unemployment and inflation. Is a “discouraged worker” really not unemployed? Are the models that extrapolate from the household surveys to the nationally reported “unemployment rate” really correct? What should one make of hedonic corrections to the inflation rate in which alleged improvements in quality are used to adjust the price of an item downward? Should the price of houses used in the consumer price index (CPI) be the actual price of purchase or the mysterious “owner equivalent rent”? What is the average person to make of the complex computer models said to demonstrate global warming beyond a reasonable doubt? Should we limit the production and consumption of coal, oil, or natural gas based on these models? How do oil companies and governments like Saudi Arabia calculate the “proven reserves” of oil that they report each year? Are we experiencing “Peak Oil” as some claim or is there more oil than commonly reported?

In the lead up to the present financial crisis and recession, the handful of economists and financial practitioners (Dean Baker, Nouriel Roubini, Robert Shiller, Paul Krugman, Peter Schiff, and some others) who clearly recognized and anticipated the housing bubble and associated problems used very simple, back of the envelope calculations and arguments to detect the bubble. Notably, housing prices in areas with significant zoning restrictions on home construction rose far ahead of inflation, something rarely seen in the past and then usually during previous housing bubbles. Home prices in areas with significant zoning restrictions became much higher than would be expected based on apartment rental rates in the same areas. In other words, it was much cheaper to rent than to own a home of the same size, something with little historical precedent. In contrast, the large financial firms peddling mortgage backed securities used extremely complex mathematical models, not infrequently cooked up by former physicists and other scientists, that proved grossly inaccurate.

It is likely that simplicity and Occam’s Razor as commonly understood have some truth in them, although we do not really understand why this is the case. They are not perfect. Sometimes the complex theory or the ad hoc assumption wins. Nonetheless, Frankenstein Functions and extreme complexity, both in concepts (verbal concepts) and in precise symbolic mathematics, should be viewed as a warning sign of trouble. By this criterion, the Big Bang theory, General Relativity, and quantum field theory may all be in need of significant revision.

Suggested Reading/References

“Einstein’s Mistakes” (PDF), Steven Weinberg, Physics Today, November 2005, pp. 31-35

Aesop’s Fables, Selected and Adapted by Jack Zipes, Penguin Books, New York, 1992

George Gamow, My World Line: An Informal Autobiography, Viking Press, New York, 1970

Copyright © 2010 John F. McGowan, Ph.D.

About the Author

John F. McGowan, Ph.D. is a software developer, research scientist, and consultant. He works primarily in the area of complex algorithms that embody advanced mathematical and logical concepts, including speech recognition and video compression technologies. He has extensive experience developing software in C, C++, Visual Basic, Mathematica, MATLAB, and many other programming languages. He is probably best known for his AVI Overview, an Internet FAQ (Frequently Asked Questions) on the Microsoft AVI (Audio Video Interleave) file format. He has worked as a contractor at NASA Ames Research Center involved in the research and development of image and video processing algorithms and technology. He has published articles on the origin and evolution of life, the exploration of Mars (anticipating the discovery of methane on Mars), and cheap access to space. He has a Ph.D. in physics from the University of Illinois at Urbana-Champaign and a B.S. in physics from the California Institute of Technology (Caltech). He can be reached at [email protected].
